Mohamed Elobaid

On the Stabilization of Rigid Formations on Regular Curves

Dec 11, 2025

Abstract:This work deals with the problem of stabilizing a multi-agent rigid formation on a general class of planar curves. Namely, we seek to stabilize an equilateral polygonal formation on closed planar differentiable curves after a path sweep. The task of finding an inscribed regular polygon centered at the point of interest is solved via a randomized multi-start Newton-like algorithm for which one is able to ascertain the existence of a minimizer. Then we design a continuous feedback law that guarantees convergence to, and sufficient sweeping of, the curve, followed by convergence to the desired formation vertices while ensuring inter-agent avoidance. The proposed approach is validated through numerical simulations for different classes of curves and different rigid formations. Code: https://github.com/mebbaid/paper-elobaid-ifacwc-2026

Online Non-linear Centroidal MPC with Stability Guarantees for Robust Locomotion of Legged Robots

Sep 02, 2024

Abstract:Nonlinear model predictive locomotion controllers based on the reduced centroidal dynamics are nowadays ubiquitous in legged robots. These schemes, even if they assume an inherent simplification of the robot's dynamics, were shown to endow robots with a step-adjustment capability in reaction to small pushes and, moreover, in the case of uncertain parameters such as unknown payloads, they were shown to provide some practical, albeit limited, robustness. In this work, we provide rigorous certificates of their closed-loop stability via a reformulation of the centroidal MPC controller. This is achieved thanks to a systematic procedure inspired by the machinery of adaptive control, together with ideas coming from Control Lyapunov functions. Our reformulation, in addition, provides robustness for a class of unmeasured constant disturbances. To demonstrate the generality of our approach, we validated our formulation on a new generation of humanoid robots, the 56.7 kg ergoCub, as well as on a commercially available 21 kg quadruped robot, Aliengo.

Remote telepresence over large distances via robot avatars: case studies

Sep 02, 2024

Abstract:This paper discusses the necessary considerations and adjustments that allow a recently proposed avatar system architecture to be used with different robotic avatar morphologies (both wheeled and legged robots with various types of hands and kinematic structures) for the purpose of enabling remote (intercontinental) telepresence under communication bandwidth restrictions. The case studies reported involve robots using both position and torque control modes, independently of their software middleware.

XBG: End-to-end Imitation Learning for Autonomous Behaviour in Human-Robot Interaction and Collaboration

Jun 22, 2024

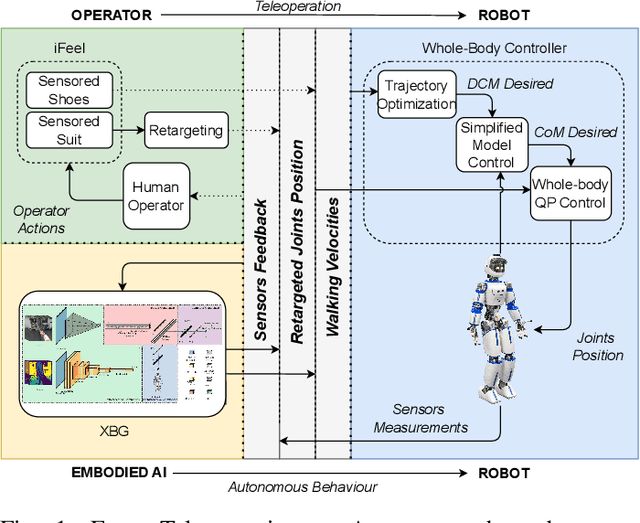

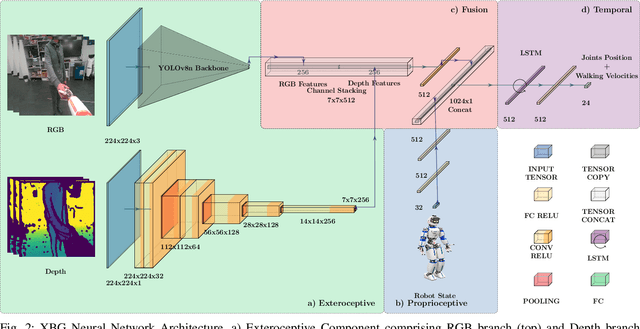

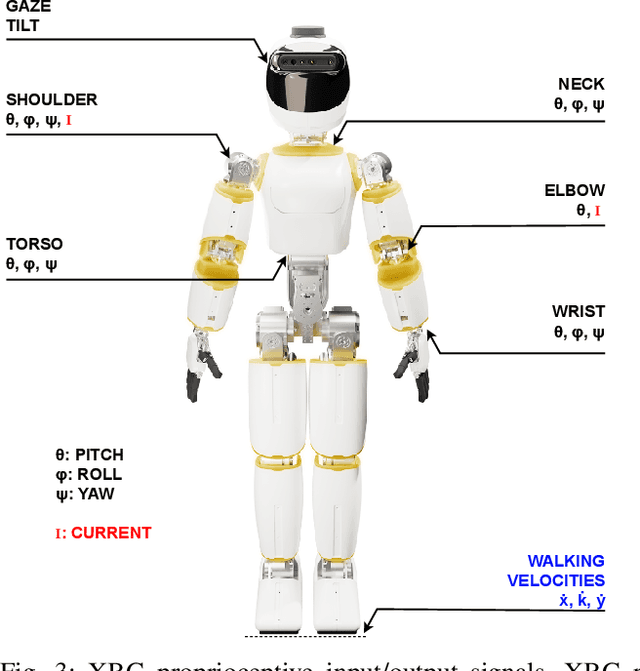

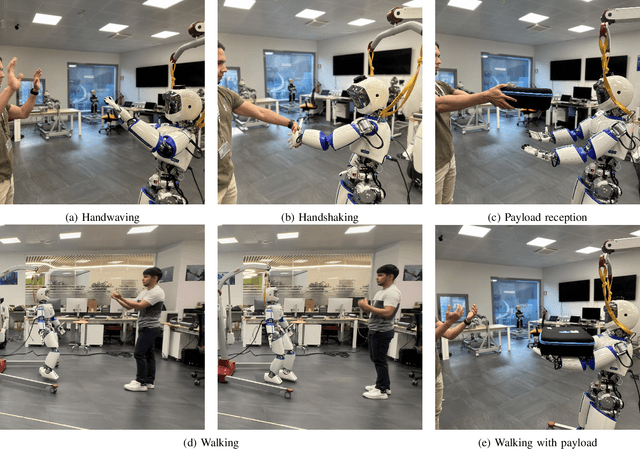

Abstract:This paper presents XBG (eXteroceptive Behaviour Generation), a multimodal end-to-end Imitation Learning (IL) system for a whole-body autonomous humanoid robot used in real-world Human-Robot Interaction (HRI) scenarios. The main contribution of this paper is an architecture for learning HRI behaviours using a data-driven approach. Through teleoperation, a diverse dataset is collected, comprising demonstrations across multiple HRI scenarios, including handshaking, handwaving, payload reception, walking, and walking with a payload. After synchronizing, filtering, and transforming the data, different Deep Neural Network (DNN) models are trained. The final system integrates different modalities comprising exteroceptive and proprioceptive sources of information to provide the robot with an understanding of its environment and its own actions. The robot takes a sequence of images (RGB and depth) and joint state information during the interactions and then reacts accordingly, demonstrating learned behaviours. By fusing multimodal signals in time, we encode new autonomous capabilities into the robotic platform, allowing the understanding of context changes over time. The models are deployed on ergoCub, a real-world humanoid robot, and their performance is measured by calculating the success rate of the robot's behaviour under the mentioned scenarios.

Online Non-linear Centroidal MPC for Humanoid Robots Payload Carrying with Contact-Stable Force Parametrization

May 18, 2023

Abstract:In this paper we consider the problem of allowing a humanoid robot that is subject to a persistent disturbance, in the form of a payload-carrying task, to follow given planned footsteps. To solve this problem, we combine an online nonlinear centroidal Model Predictive Controller (MPC) with a contact-stable force parametrization. The cost function of the MPC is augmented with terms handling the disturbance and regularizing the parameter. The performance of the resulting controller is validated both in simulations and on the humanoid robot iCub. Finally, the effect of using the parametrization on the computational time of the controller is briefly studied.

A Control Approach for Human-Robot Ergonomic Payload Lifting

May 15, 2023

Abstract:Collaborative robots can relieve human operators from excessive effort during payload lifting activities. Modelling the human partner allows the design of safe and efficient collaborative strategies. In this paper, we present a control approach for human-robot collaboration based on human monitoring through whole-body wearable sensors, and interaction modelling through coupled rigid-body dynamics. Moreover, a trajectory advancement strategy is proposed, allowing for online adaptation of the robot trajectory depending on the human motion. The resulting framework allows us to perform payload lifting tasks, taking into account the ergonomic requirements of the agents. Validation has been performed in an experimental scenario using the iCub3 humanoid robot and a human subject sensorized with the iFeel wearable system.

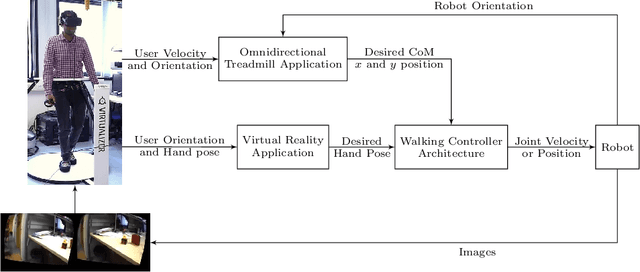

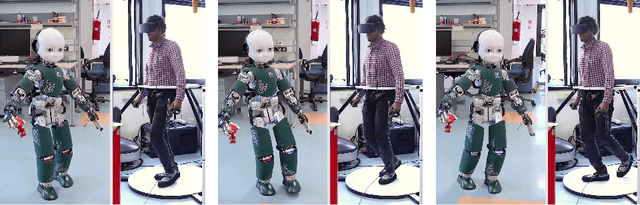

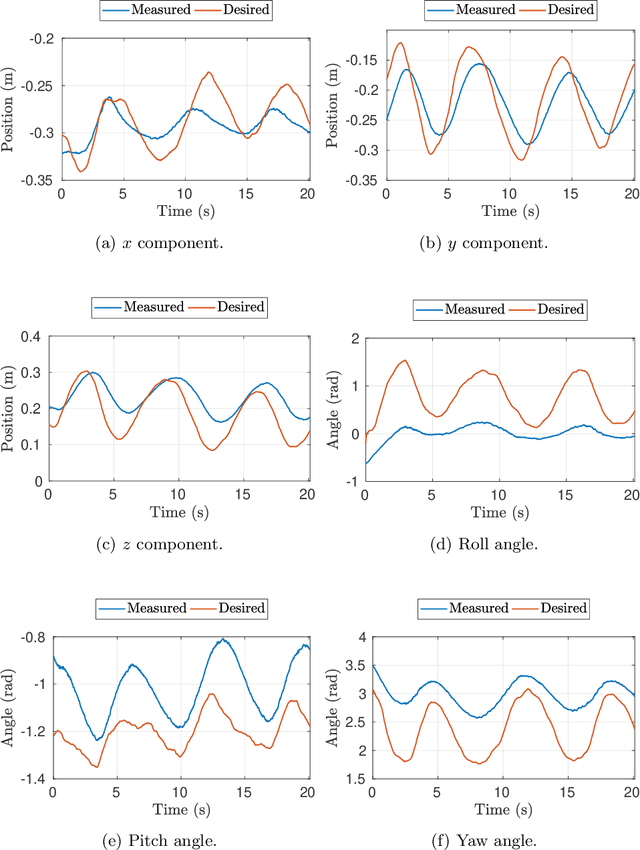

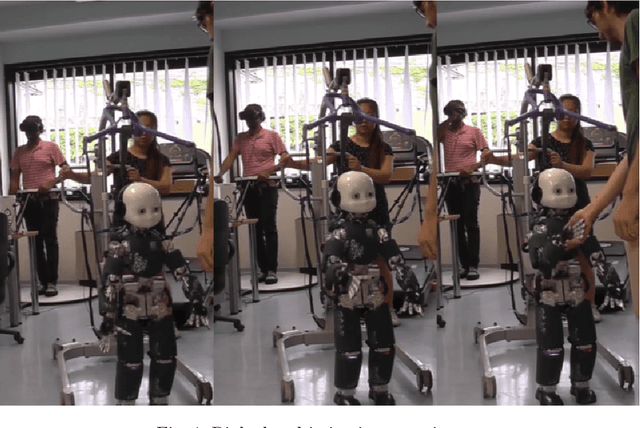

Telexistence and Teleoperation for Walking Humanoid Robots

Apr 04, 2019

Abstract:This paper proposes an architecture for achieving telexistence and teleoperation of humanoid robots. The architecture combines several technological set-ups, methodologies, locomotion and manipulation algorithms in a novel manner, thus building upon and extending works available in the literature. The approach allows a human operator to command and telexist with the robot. Therefore, in this work we treat aspects pertaining not only to the proposed architecture structure and implementation, but also to the human operator experience in terms of ability to adapt to the robot and to the architecture. The proprioception aspects and embodiment of the robot are also studied through specific experimental results, which are treated in a somewhat formal, albeit high-level, manner. Application of the proposed architecture and experiments incorporating user training and experience are addressed using an illustrative bipedal humanoid robot, namely the iCub robot.
