Zero-Shot Uncertainty-Aware Deployment of Simulation Trained Policies on Real-World Robots

Paper and Code

Dec 10, 2021

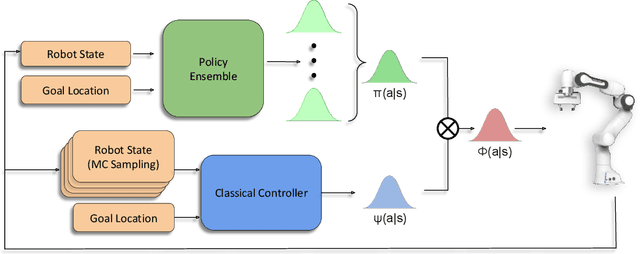

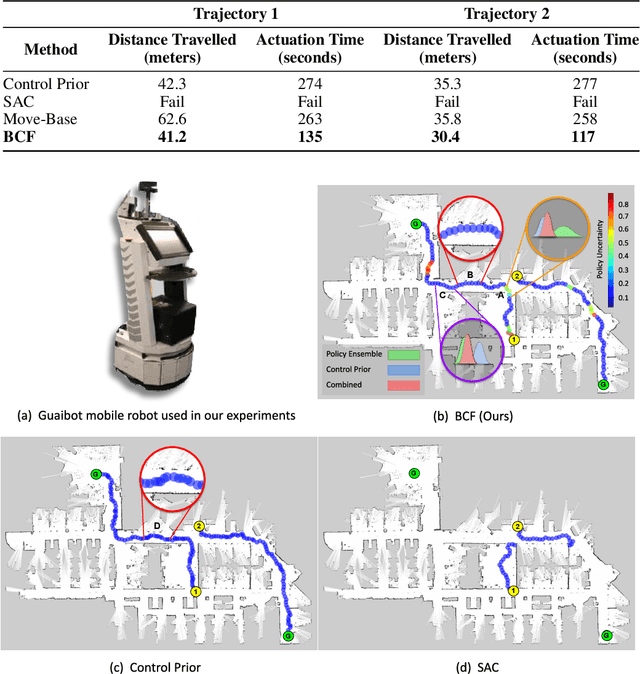

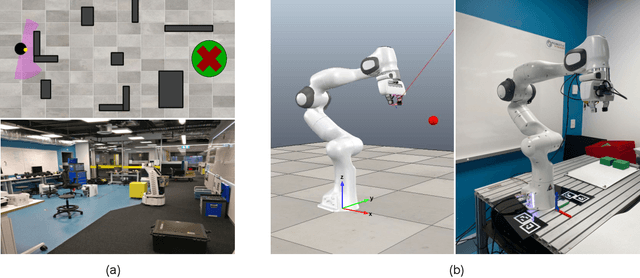

While deep reinforcement learning (RL) agents have demonstrated incredible potential in attaining dexterous behaviours for robotics, they tend to make errors when deployed in the real world due to mismatches between the training and execution environments. In contrast, the classical robotics community have developed a range of controllers that can safely operate across most states in the real world given their explicit derivation. These controllers however lack the dexterity required for complex tasks given limitations in analytical modelling and approximations. In this paper, we propose Bayesian Controller Fusion (BCF), a novel uncertainty-aware deployment strategy that combines the strengths of deep RL policies and traditional handcrafted controllers. In this framework, we can perform zero-shot sim-to-real transfer, where our uncertainty based formulation allows the robot to reliably act within out-of-distribution states by leveraging the handcrafted controller while gaining the dexterity of the learned system otherwise. We show promising results on two real-world continuous control tasks, where BCF outperforms both the standalone policy and controller, surpassing what either can achieve independently. A supplementary video demonstrating our system is provided at https://bit.ly/bcf_deploy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge