Wesley Chan

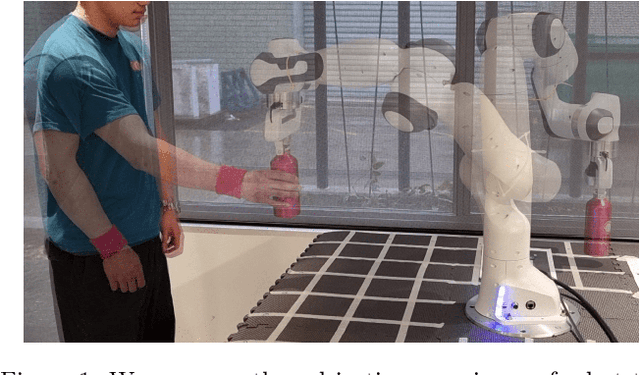

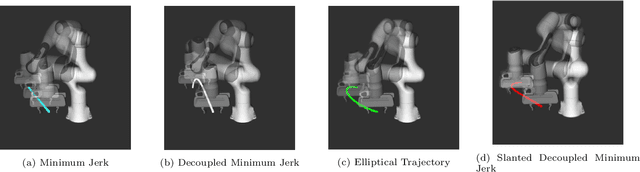

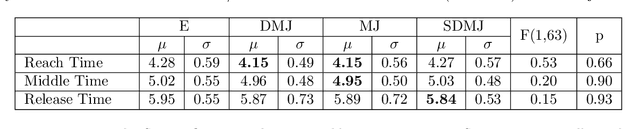

Comparing Subjective Perceptions of Robot-to-Human Handover Trajectories

Nov 16, 2022

Abstract: Robots must move legibly around people for safety reasons, especially for tasks where physical contact is possible. One such task is the handover, which requires implicit communication about where and when physical contact (object transfer) occurs. In this work, we study whether the trajectory model used by a robot during the reaching phase affects the subjective perceptions of receivers in robot-to-human handovers. We conducted a user study in which 32 participants were handed three objects using four trajectory models: three were versions of a minimum jerk trajectory, and one was an ellipse-fitting-based trajectory. The start position of the handover was fixed for all trajectories, and the end position was allowed to vary randomly around a fixed position by $\pm$3 cm along each axis. The user study found no significant differences among the handover trajectories in survey questions relating to safety, predictability, naturalness, and other subjective metrics. While these results seemingly reject the hypothesis that the trajectory affects human perceptions of a handover, they prompt future research to investigate the effect of other variables, such as robot speed, object transfer position, object orientation at the transfer point, and explicit communication signals such as gaze and speech.
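For readers unfamiliar with the term, the sketch below shows the textbook point-to-point minimum jerk profile, with a goal perturbed by up to 3 cm per axis. It is an illustration only: the abstract does not say which three minimum jerk variants were used, so the zero boundary velocity and acceleration, the uniform sampling of the offset, and the function names `min_jerk_scale`, `minimum_jerk`, and `sample_goal` are all assumptions rather than the authors' method.

```python
import random


def min_jerk_scale(tau):
    """Textbook minimum jerk time scaling for normalized time tau in [0, 1].

    Assumes zero velocity and acceleration at both ends of the motion.
    """
    return 10 * tau**3 - 15 * tau**4 + 6 * tau**5


def minimum_jerk(start, goal, n_samples=51):
    """Return n_samples Cartesian waypoints from start to goal (metres)."""
    path = []
    for i in range(n_samples):
        s = min_jerk_scale(i / (n_samples - 1))
        path.append([a + s * (b - a) for a, b in zip(start, goal)])
    return path


def sample_goal(nominal, half_range=0.03, rng=random):
    """Perturb each axis of the nominal goal by up to +/- half_range metres.

    Uniform sampling is an assumption; the abstract only says the end
    position varied randomly by +/- 3 cm.
    """
    return [c + rng.uniform(-half_range, half_range) for c in nominal]


if __name__ == "__main__":
    start = [0.0, 0.0, 0.0]            # hypothetical start position
    nominal_goal = [0.5, 0.2, 0.3]     # hypothetical nominal handover point
    goal = sample_goal(nominal_goal)
    for point in minimum_jerk(start, goal, n_samples=5):
        print([round(c, 3) for c in point])
```

The quintic scaling starts and ends at rest, which gives the bell-shaped speed profile usually associated with human reaching motions.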

On-The-Go Robot-to-Human Handovers with a Mobile Manipulator

Mar 16, 2022

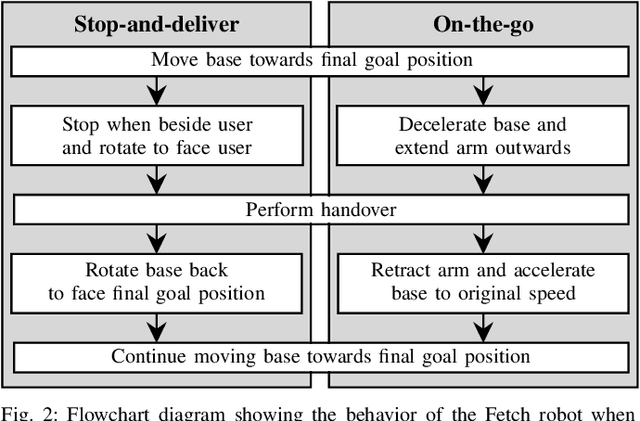

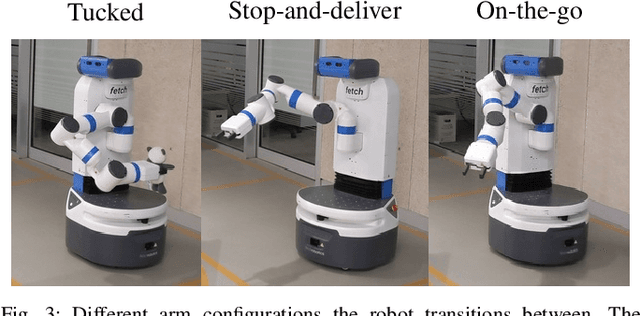

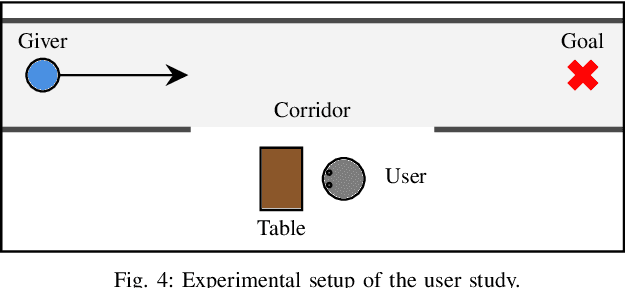

Abstract: Existing approaches to direct robot-to-human handovers are typically implemented on fixed-base robot arms, or on mobile manipulators that come to a full stop before performing the handover. We propose "on-the-go" handovers, which permit a moving mobile manipulator to hand over an object to a human without stopping. The on-the-go handover motion is generated with a reactive controller that allows simultaneous control of the base and the arm. In a user study, human receivers subjectively assessed on-the-go handovers to be more efficient, predictable, natural, better timed, and safer than handovers that implemented a "stop-and-deliver" behavior.

Object Handovers: a Review for Robotics

Jul 25, 2020

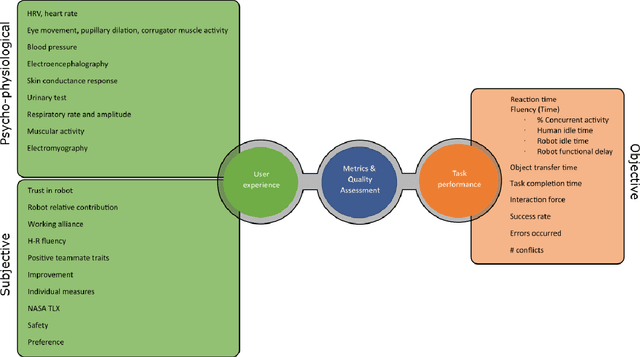

Abstract: This article surveys the literature on human-robot object handovers. A handover is a collaborative joint action where an agent, the giver, gives an object to another agent, the receiver. The physical exchange starts when the receiver first contacts the object held by the giver and ends when the giver fully releases the object to the receiver. However, important cognitive and physical processes begin before the physical exchange, including initiating implicit agreement with respect to the location and timing of the exchange. From this perspective, we structure our review into the two main phases delimited by the aforementioned events: 1) a pre-handover phase, and 2) the physical exchange. We focus our analysis on the two actors (giver and receiver) and report the state of the art of robotic givers (robot-to-human handovers) and robotic receivers (human-to-robot handovers). We report a comprehensive list of qualitative and quantitative metrics commonly used to assess the interaction. While focusing our review on the cognitive level (e.g., prediction, perception, motion planning, learning) and the physical level (e.g., motion, grasping, grip release) of the handover, we also briefly discuss the concepts of safety, social context, and ergonomics. We compare the behaviours displayed during human-to-human handovers to the state of the art of robotic assistants, and identify the major areas of improvement for robotic assistants to reach performance comparable to human interactions. Finally, we propose a minimal set of metrics that should be used in order to enable a fair comparison among the approaches.

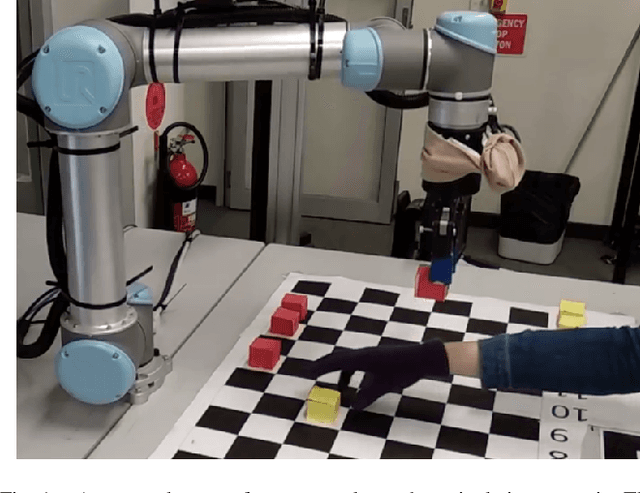

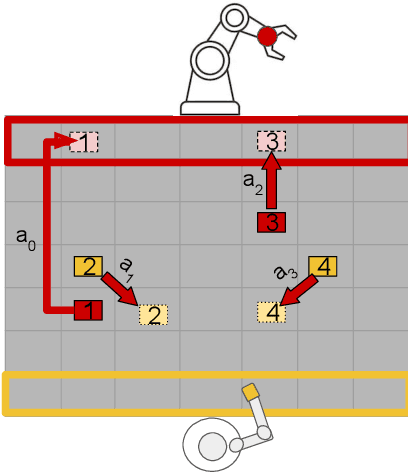

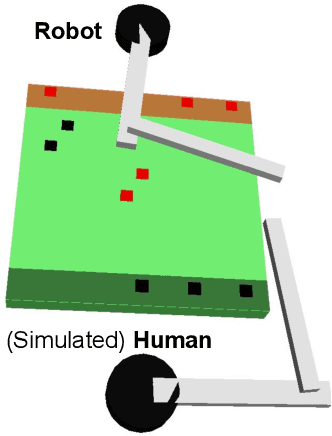

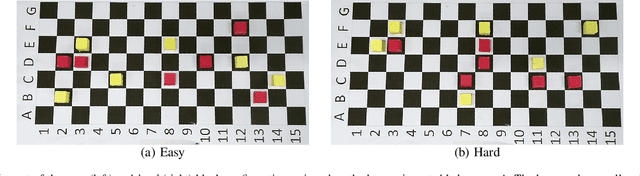

Supportive Actions for Manipulation in Human-Robot Coworker Teams

May 02, 2020

Abstract: The increasing presence of robots alongside humans, such as in human-robot teams in manufacturing, gives rise to research questions about the kind of behaviors people prefer in their robot counterparts. We term actions that support interaction by reducing future interference with others as supportive robot actions and investigate their utility in a co-located manipulation scenario. We compare two robot modes in a shared table pick-and-place task: (1) Task-oriented: the robot only takes actions to further its own task objective and (2) Supportive: the robot sometimes prefers supportive actions to task-oriented ones when they reduce future goal conflicts. Our experiments in simulation, using a simplified human model, reveal that supportive actions reduce the interference between agents, especially in more difficult tasks, but also cause the robot to take longer to complete the task. We implemented these modes on a physical robot in a user study where a human and a robot perform object placement on a shared table. Our results show that a supportive robot was perceived as a more favorable coworker by the human and also reduced interference with the human in the more difficult of two scenarios. However, it also took longer to complete the task, highlighting an interesting trade-off between task efficiency and human preference that needs to be considered before designing robot behavior for close-proximity manipulation scenarios.
