Aimee Allen

Monash University - Australia

Sound Judgment: Properties of Consequential Sounds Affecting Human-Perception of Robots

Feb 04, 2025

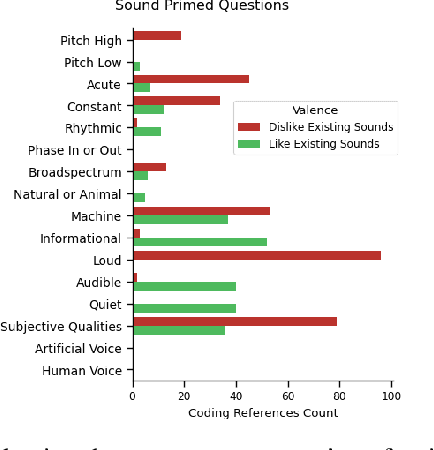

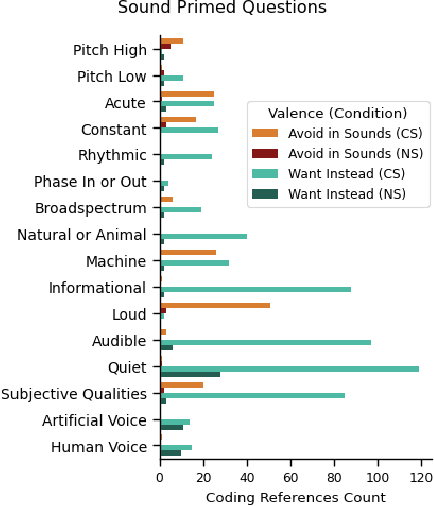

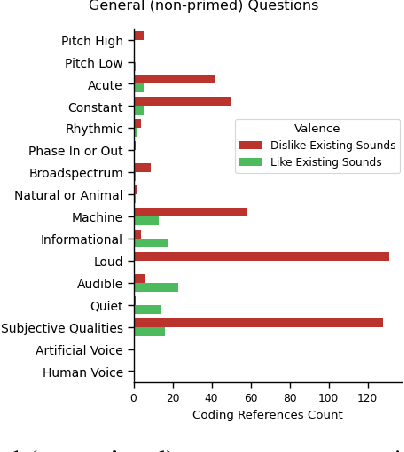

Abstract: Positive human-perception of robots is critical to achieving sustained use of robots in shared environments. One key factor affecting human-perception of robots is their sounds, especially the consequential sounds which robots (as machines) must produce as they operate. This paper explores qualitative responses from 182 participants to gain insight into human-perception of robot consequential sounds. Participants viewed videos of different robots performing their typical movements, and responded to an online survey regarding their perceptions of robots and the sounds they produce. Topic analysis was used to identify common properties of robot consequential sounds that participants expressed liking, disliking, wanting or wanting to avoid being produced by robots. Alongside expected reports of disliking high-pitched and loud sounds, many participants preferred informative and audible sounds (over no sound) to provide predictability of purpose and trajectory of the robot. Rhythmic sounds were preferred over acute or continuous sounds, and many participants wanted more natural sounds (such as wind or cat purrs) in place of machine-like noise. The results presented in this paper support future research on methods to improve consequential sounds produced by robots by highlighting features of sounds that cause negative perceptions, and providing insights into sound profile changes for improvement of human-perception of robots, thus enhancing human-robot interaction.

Robots Have Been Seen and Not Heard: Effects of Consequential Sounds on Human-Perception of Robots

Jun 05, 2024

Abstract: Many people expect robots to move fairly quietly, or make pleasant "beep boop" sounds or jingles similar to what they have observed in videos of robots. Unfortunately, this expectation of quietness does not match reality, as robots make machine sounds, known as 'consequential sounds', as they move and operate. As robots become more prevalent within society, understanding the sounds produced by robots and how these sounds are perceived by people is becoming increasingly important for positive human-robot interactions (HRI). This paper investigates how people respond to the consequential sounds of robots, specifically how robots make a participant feel, how much they like the robot, how distracted they would be by the robot, and how much they would want to colocate with robots. Participants were shown 5 videos of different robots and asked their opinions on the robots and the sounds they made. This was compared with a control condition of completely silent videos. The results in this paper demonstrate with data from 182 participants (858 trials) that consequential sounds produced by robots have a significant negative effect on human perceptions of robots. Firstly, there were increased negative 'associated affects' of the participants, such as making them feel more uncomfortable or agitated around the robot. Secondly, the presence of consequential sounds correlated with participants feeling more distracted and less able to focus. Thirdly, participants reported being less likely to want to colocate in a shared environment with robots.

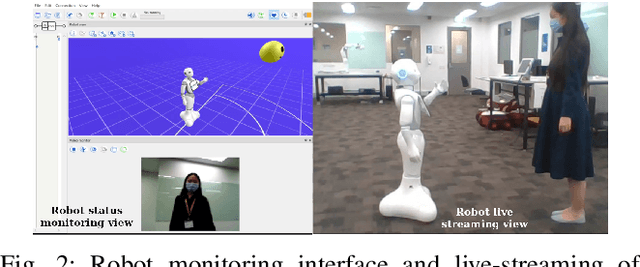

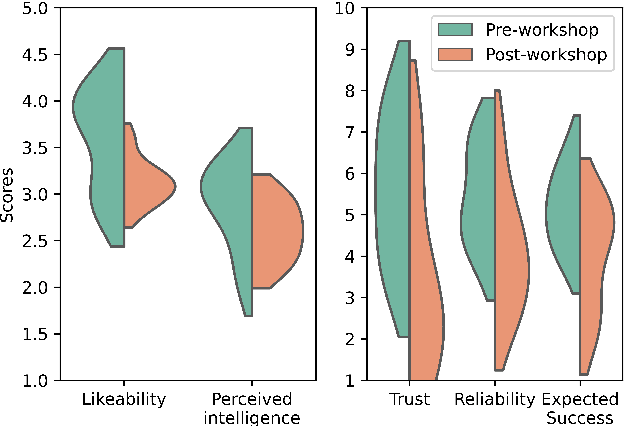

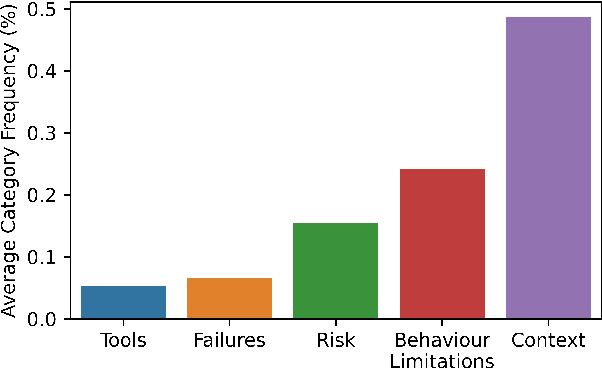

Aligning Robot's Behaviours and Users' Perceptions Through Participatory Prototyping

Jan 11, 2021

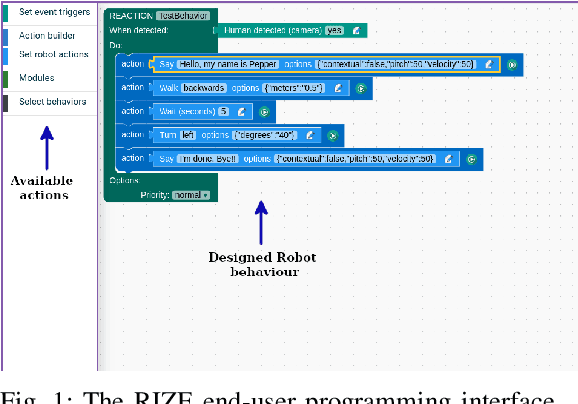

Abstract: Robots are increasingly being deployed in public spaces. However, the general population rarely has the opportunity to nominate what they would prefer or expect a robot to do in these contexts. Since most people have little or no experience interacting with a robot, it is not surprising that robots deployed in the real world may fail to gain acceptance or engage their intended users. To address this issue, we examine users' understanding of robots in public spaces and their expectations of appropriate uses of robots in these spaces. Furthermore, we investigate how these perceptions and expectations change as users engage and interact with a robot. To support this goal, we conducted a participatory design workshop in which participants were actively involved in the prototyping and testing of a robot's behaviours in simulation and on the physical robot. Our work highlights how social and interaction contexts influence users' perception of robots in public spaces and how users' design and understanding of appropriate robot behaviours shift as they observe the enactment of their designs.

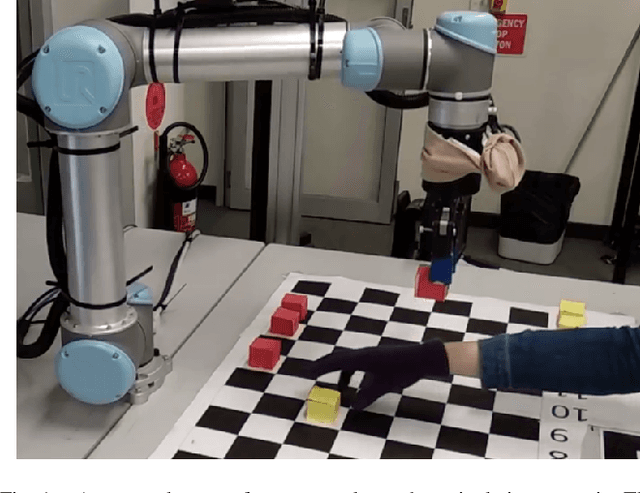

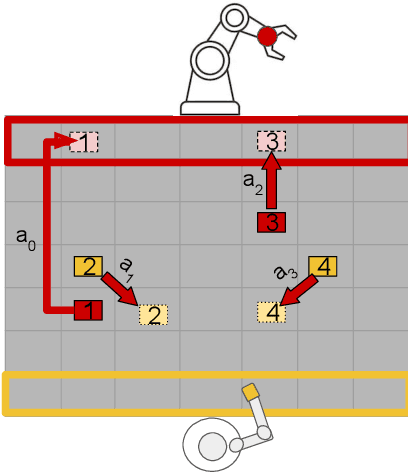

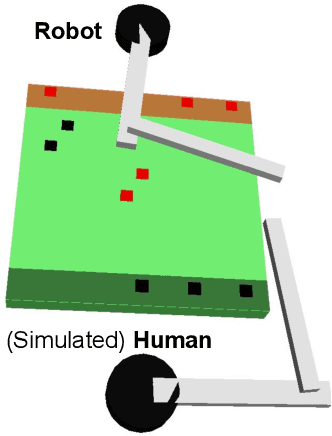

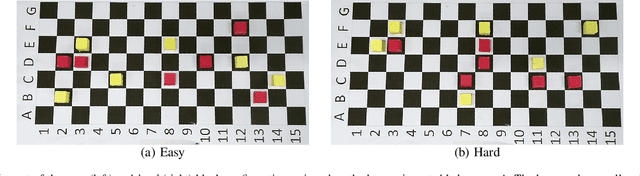

Supportive Actions for Manipulation in Human-Robot Coworker Teams

May 02, 2020

Abstract: The increasing presence of robots alongside humans, such as in human-robot teams in manufacturing, gives rise to research questions about the kind of behaviors people prefer in their robot counterparts. We term actions that support interaction by reducing future interference with others as supportive robot actions and investigate their utility in a co-located manipulation scenario. We compare two robot modes in a shared table pick-and-place task: (1) Task-oriented: the robot only takes actions to further its own task objective and (2) Supportive: the robot sometimes prefers supportive actions to task-oriented ones when they reduce future goal-conflicts. Our experiments in simulation, using a simplified human model, reveal that supportive actions reduce the interference between agents, especially in more difficult tasks, but also cause the robot to take longer to complete the task. We implemented these modes on a physical robot in a user study where a human and a robot perform object placement on a shared table. Our results show that a supportive robot was perceived as a more favorable coworker by the human and also reduced interference with the human in the more difficult of two scenarios. However, it also took longer to complete the task, highlighting an interesting trade-off between task-efficiency and human-preference that needs to be considered before designing robot behavior for close-proximity manipulation scenarios.
