Gentiane Venture

Adaptive Wiping: Adaptive contact-rich manipulation through few-shot imitation learning with Force-Torque feedback and pre-trained object representations

May 09, 2025

Abstract: Imitation learning offers a pathway for robots to perform repetitive tasks, allowing humans to focus on more engaging and meaningful activities. However, challenges arise from the need for extensive demonstrations and the disparity between training and real-world environments. This paper focuses on contact-rich tasks like wiping with soft and deformable objects, requiring adaptive force control to handle variations in wiping surface height and the sponge's physical properties. To address these challenges, we propose a novel method that integrates real-time force-torque (FT) feedback with pre-trained object representations. This approach allows robots to dynamically adjust to previously unseen changes in surface heights and sponges' physical properties. In real-world experiments, our method achieved 96% accuracy in applying reference forces, significantly outperforming the previous method that lacked an FT feedback loop, which achieved only 4% accuracy. To evaluate the adaptability of our approach, we conducted experiments under conditions different from the training setup, involving 40 scenarios using 10 sponges with varying physical properties and 4 types of wiping surface heights. Analysis of the force trajectories demonstrates significant improvements in the robot's adaptability. The video of our work is available at: https://sites.google.com/view/adaptive-wiping
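
To make the role of the FT feedback loop in the abstract above concrete, here is a minimal Python sketch. It is not the paper's imitation-learning method: it assumes a toy linear-spring sponge model and a simple admittance-style height update, and every name and number in it (`closed_loop_forces`, `open_loop_force`, the 3 cm sponge thickness, the 5 N reference force, the gain) is hypothetical. It only illustrates why closing the loop on measured force can cope with unseen surface heights and sponge stiffnesses, while replaying a fixed height cannot.

```python
def closed_loop_forces(stiffness, surface_height, f_ref=5.0,
                       gain=0.001, steps=200):
    """Simulate tool-height adjustment driven by force feedback.

    Toy model (assumption): the sponge is a linear spring, so the measured
    normal force is stiffness * compression, and zero before contact.
    The update is stable as long as gain * stiffness < 2.
    """
    sponge_thickness = 0.03   # metres, hypothetical
    tool_z = 0.10             # fixed start height, independent of the surface
    forces = []
    for _ in range(steps):
        compression = max(0.0, surface_height + sponge_thickness - tool_z)
        force = stiffness * compression      # simulated FT reading
        forces.append(force)
        # Admittance-style update: descend while the force is too low,
        # back off when it is too high. gain is in metres per newton.
        tool_z -= gain * (f_ref - force)
    return forces


def open_loop_force(stiffness, surface_height, tool_z):
    """Force obtained when replaying a fixed tool height without feedback."""
    sponge_thickness = 0.03   # metres, same hypothetical sponge as above
    return stiffness * max(0.0, surface_height + sponge_thickness - tool_z)


if __name__ == "__main__":
    # A height tuned for one sponge and surface (500 N/m, 0.00 m) ...
    tuned_z = 0.02
    print("open loop, training setup:", open_loop_force(500.0, 0.00, tuned_z))
    # ... gives a very different force on a stiffer sponge and higher surface.
    print("open loop, new setup:     ", open_loop_force(1000.0, 0.02, tuned_z))
    # The feedback loop settles near the reference force in both cases.
    print("closed loop, new setup:   ", closed_loop_forces(1000.0, 0.02)[-1])
```

In this toy setting the closed loop needs no knowledge of the surface height or stiffness; it only needs a gain small enough for the stiffest sponge it may encounter.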

Tactile-based Active Inference for Force-Controlled Peg-in-Hole Insertions

Sep 27, 2023

Abstract: Reinforcement Learning (RL) has shown great promise for efficiently learning force control policies in peg-in-hole tasks. However, robots often face difficulties due to visual occlusions by the gripper and uncertainties in the initial grasping pose of the peg. These challenges often restrict force-controlled insertion policies to situations where the peg is rigidly fixed to the end-effector. While vision-based tactile sensors offer rich tactile feedback that could potentially address these issues, utilizing them to learn effective tactile policies is both computationally intensive and difficult to generalize. In this paper, we propose a robust tactile insertion policy that can align the tilted peg with the hole using active inference, without the need for extensive training on large datasets. Our approach employs a dual-policy architecture: one policy focuses on insertion, integrating force control and RL to guide the object into the hole, while the other policy performs active inference based on tactile feedback to align the tilted peg with the hole. In real-world experiments, our dual-policy architecture achieved a 90% success rate when inserting into a hole with a clearance of less than 0.1 mm, significantly outperforming previous methods that lack tactile sensory feedback (5%). To assess the generalizability of our alignment policy, we conducted experiments with five different pegs, demonstrating its effective adaptation to multiple objects.
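
The dual-policy idea in the abstract above (align first, then insert) can be sketched in a few lines of Python. This is a heavily simplified illustration under stated assumptions, not the authors' implementation: the tactile sensor is reduced to a noise-free scalar tilt reading, the generative model has unit precisions, and the names `align_peg` and `select_policy`, the step size, and the tolerance are all hypothetical. The alignment step follows the usual active inference recipe in its simplest form: the belief and the action both descend the gradient of a free energy F = 0.5 (obs - belief)^2 + 0.5 (belief - prior)^2, where the prior encodes the preferred, aligned state.

```python
def select_policy(tilt_reading, tolerance=0.01):
    """Pick which of the two policies should act (hypothetical switch rule)."""
    return "insert" if abs(tilt_reading) < tolerance else "align"


def align_peg(initial_tilt, tolerance=0.01, step_size=0.2, max_steps=200):
    """Drive the peg tilt (radians) toward zero with a scalar active
    inference loop. Returns (steps_used, final_tilt)."""
    tilt = initial_tilt   # true tilt, only observed through the sensor
    belief = 0.0          # agent's current estimate of the tilt
    prior = 0.0           # preferred tilt: peg aligned with the hole
    for step in range(max_steps):
        obs = tilt        # assumption: noise-free tactile tilt reading
        if select_policy(obs, tolerance) == "insert":
            return step, tilt
        sensory_error = obs - belief
        prior_error = belief - prior
        # Perception: gradient descent of F with respect to the belief.
        belief += step_size * (sensory_error - prior_error)
        # Action: gradient descent of F with respect to the observation,
        # assuming a rotation command changes the tilt one-to-one.
        tilt -= step_size * sensory_error
    return max_steps, tilt


if __name__ == "__main__":
    steps, tilt = align_peg(0.2)
    print(f"aligned after {steps} steps, residual tilt {tilt:.4f} rad")
    print("next policy:", select_policy(tilt))
```

Once the reading falls inside the tolerance, control would pass to the insertion policy, which the abstract describes as combining force control and RL and which is deliberately left out of this sketch.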

Aligning Robot's Behaviours and Users' Perceptions Through Participatory Prototyping

Jan 11, 2021

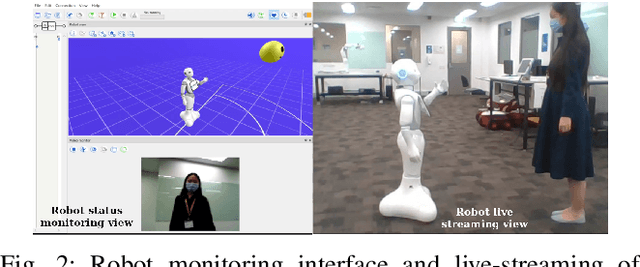

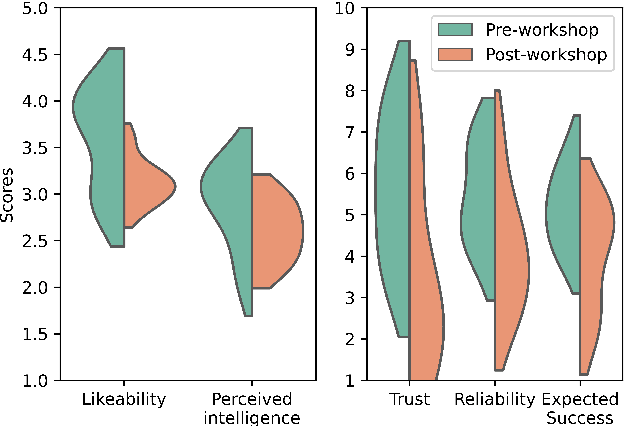

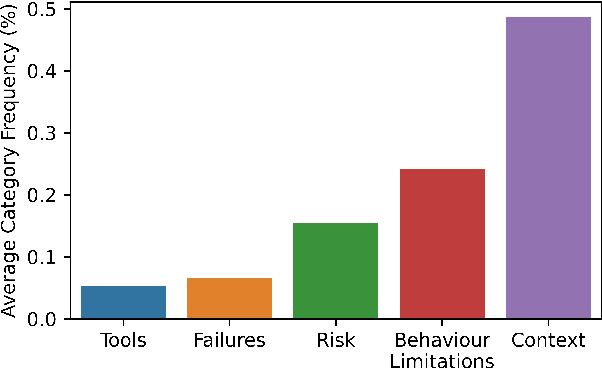

Abstract: Robots are increasingly being deployed in public spaces. However, the general population rarely has the opportunity to nominate what they would prefer or expect a robot to do in these contexts. Since most people have little or no experience interacting with a robot, it is not surprising that robots deployed in the real world may fail to gain acceptance or engage their intended users. To address this issue, we examine users' understanding of robots in public spaces and their expectations of appropriate uses of robots in these spaces. Furthermore, we investigate how these perceptions and expectations change as users engage and interact with a robot. To support this goal, we conducted a participatory design workshop in which participants were actively involved in the prototyping and testing of a robot's behaviours in simulation and on the physical robot. Our work highlights how social and interaction contexts influence users' perception of robots in public spaces and how users' designs and their understanding of what constitutes appropriate robot behaviour shift as they observe the enactment of their designs.

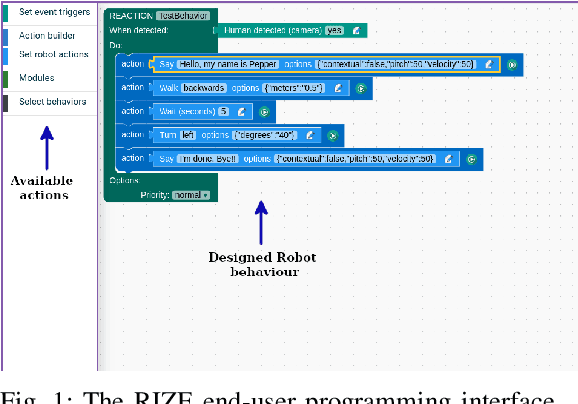

Node Primitives: an open end-user programming platform for social robots

Sep 25, 2017

Abstract: With the expected adoption of robots able to seamlessly and intuitively interact with people in real-world scenarios, the need arises to provide non-technically-skilled users with easy-to-understand paradigms for customising robot behaviors. In this paper, we present an interaction-design-oriented robot programming platform for enabling multidisciplinary social robot research and applications. This platform is referred to as Node Primitives (NEP) and consists of two main parts. First, a ZeroMQ and Python-based distributed software framework has been developed to provide inter-process communication and robot behavior specification mechanisms. Second, a web-based end-user programming (EUP) interface has been developed to allow for an easy and intuitive way of programming and executing robot behaviors. In order to evaluate NEP, we discuss the development of a human-robot interaction application using arm gestures to control robot behaviors. A usability test for the proposed EUP interface is also presented.
