Pamela Carreno-Medrano

Explaining Why Things Go Where They Go: Interpretable Constructs of Human Organizational Preferences

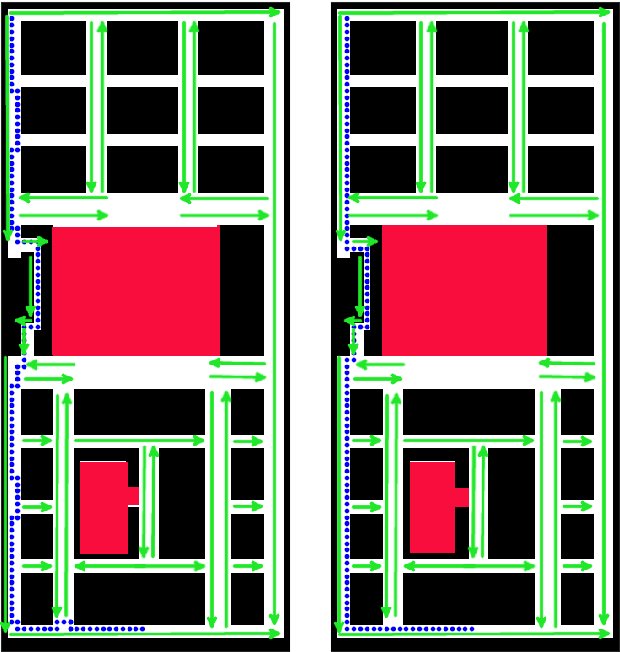

Dec 31, 2025

Abstract: Robotic systems for household object rearrangement often rely on latent preference models inferred from human demonstrations. While effective at prediction, these models offer limited insight into the interpretable factors that guide human decisions. We introduce an explicit formulation of object arrangement preferences along four interpretable constructs: spatial practicality (putting items where they naturally fit best in the space), habitual convenience (making frequently used items easy to reach), semantic coherence (placing items together if they are used for the same task or are contextually related), and commonsense appropriateness (putting things where people would usually expect to find them). To capture these constructs, we designed and validated a self-report questionnaire through a 63-participant online study. Results confirm the psychological distinctiveness of these constructs and their explanatory power across two scenarios (kitchen and living room). We demonstrate the utility of these constructs by integrating them into a Monte Carlo Tree Search (MCTS) planner and show that when guided by participant-derived preferences, our planner can generate reasonable arrangements that closely align with those generated by participants. This work contributes a compact, interpretable formulation of object arrangement preferences and a demonstration of how it can be operationalized for robot planning.

Mixed Reality Outperforms Virtual Reality for Remote Error Resolution in Pick-and-Place Tasks

Feb 10, 2025

Abstract: This study evaluates the performance and usability of Mixed Reality (MR), Virtual Reality (VR), and camera stream interfaces for remote error resolution tasks, such as correcting warehouse packaging errors. Specifically, we consider a scenario where a robotic arm halts after detecting an error, requiring a remote operator to intervene and resolve it via pick-and-place actions. Twenty-one participants performed simulated pick-and-place tasks using each interface. A linear mixed model (LMM) analysis of task resolution time, usability scores (SUS), and mental workload scores (NASA-TLX) showed that the MR interface outperformed both VR and camera interfaces. MR enabled significantly faster task completion, was rated higher in usability, and was perceived to be less cognitively demanding. Notably, the MR interface, which projected a virtual robot onto a physical table, provided superior spatial understanding and physical reference cues. Post-study surveys further confirmed participants' preference for MR over other interfaces.

Contextual Affordances for Safe Exploration in Robotic Scenarios

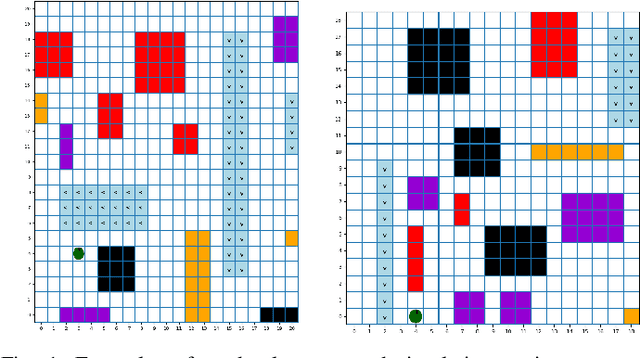

May 10, 2024

Abstract: Robotics has been a popular field of research in the past few decades, with much success in industrial applications such as manufacturing and logistics. This success has been driven by clearly defined use cases and controlled operating environments. However, robotics has yet to make a large impact in domestic settings. This is due in part to the difficulty and complexity of designing mass-manufactured robots that can succeed in the variety of homes and environments that humans live in and that can operate safely in close proximity to humans. This paper explores the use of contextual affordances to enable safe exploration and learning in robotic scenarios targeted at the home. In particular, we propose a simple state representation that allows us to extend contextual affordances to larger state spaces and showcase how affordances can improve the success and convergence rate of a reinforcement learning algorithm in simulation. Our results suggest that after further iterations, it is possible to consider the implementation of this approach in a real robot manipulator. Furthermore, in the long term, this work could be the foundation for future explorations of human-robot interactions in complex domestic environments. This could be possible once state-of-the-art robot manipulators achieve the level of dexterity required for the affordances described in this paper.

Adapting Neural Models with Sequential Monte Carlo Dropout

Oct 27, 2022

Abstract: The ability to adapt to changing environments and settings is essential for robots acting in dynamic and unstructured environments or working alongside humans with varied abilities or preferences. This work introduces an extremely simple and effective approach to adapting neural models in response to changing settings. We first train a standard network using dropout, which is analogous to learning an ensemble of predictive models or distribution over predictions. At run-time, we use a particle filter to maintain a distribution over dropout masks to adapt the neural model to changing settings in an online manner. Experimental results show improved performance in control problems requiring both online and look-ahead prediction, and showcase the interpretability of the inferred masks in a human behaviour modelling task for drone teleoperation.
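
The run-time step described in this abstract, a particle filter over dropout masks, can be illustrated with a minimal sketch. This is not the authors' implementation: the one-hidden-layer network, the Gaussian likelihood, the bit-flip diffusion step, and all names (`predict`, `smc_dropout_step`, `noise_std`, `flip_prob`) are assumptions chosen only to make the idea concrete.

```python
import numpy as np

def predict(x, W1, W2, mask):
    """Forward pass of a one-hidden-layer network with a fixed dropout mask."""
    hidden = np.tanh(W1 @ x) * mask
    return W2 @ hidden

def smc_dropout_step(masks, x, y, W1, W2, rng, noise_std=0.1, flip_prob=0.05):
    """One illustrative particle-filter update over binary dropout masks.

    masks: (n_particles, n_hidden) array of 0/1 entries.
    The Gaussian likelihood and bit-flip diffusion are assumptions of this sketch.
    """
    # Weight each mask by how well its prediction explains the observation.
    errors = np.array([np.sum((predict(x, W1, W2, m) - y) ** 2) for m in masks])
    log_w = -0.5 * errors / noise_std**2
    w = np.exp(log_w - log_w.max())  # subtract the max for numerical stability
    w /= w.sum()
    # Resample masks in proportion to their weights.
    idx = rng.choice(len(masks), size=len(masks), p=w)
    resampled = masks[idx]
    # Diffuse: flip a few entries so the filter can track changing settings.
    flips = rng.random(resampled.shape) < flip_prob
    return np.where(flips, 1 - resampled, resampled)
```

Calling `smc_dropout_step` once per new observation keeps the network weights fixed and adapts only the population of masks, which is the property the abstract emphasises.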

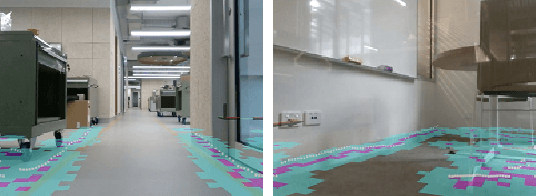

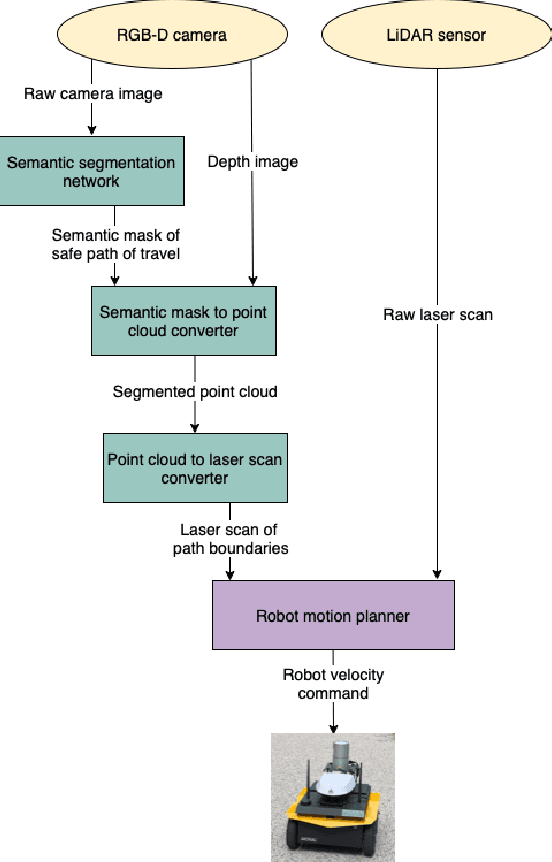

Autonomous social robot navigation in unknown urban environments using semantic segmentation

Aug 25, 2022

Abstract: For autonomous robots navigating in urban environments, it is important for the robot to stay on the designated path of travel (i.e., the footpath), and avoid areas such as grass and garden beds, for safety and social conformity considerations. This paper presents an autonomous navigation approach for unknown urban environments that combines the use of semantic segmentation and LiDAR data. The proposed approach uses the segmented image mask to create a 3D obstacle map of the environment, from which the boundaries of the footpath are computed. Compared to existing methods, our approach does not require a pre-built map and provides a 3D understanding of the safe region of travel, enabling the robot to plan any path through the footpath. Experiments comparing our method with two alternatives using only LiDAR or only semantic segmentation show that overall our proposed approach performs significantly better, with a success rate greater than 91% outdoors and greater than 66% indoors. Our method enabled the robot to remain on the safe path of travel at all times, and reduced the number of collisions.

Metrics for Evaluating Social Conformity of Crowd Navigation Algorithms

Feb 02, 2022

Abstract: Recent protocols and metrics for training and evaluating autonomous robot navigation through crowds are inconsistent due to differing definitions of "social behavior". This makes it difficult, if not impossible, to effectively compare published navigation algorithms. Furthermore, without a good evaluation protocol, resulting algorithms may fail to generalize due to a lack of diversity in training. To address these gaps, this paper facilitates a more comprehensive evaluation and objective comparison of crowd navigation algorithms by proposing a consistent set of metrics that accounts for both efficiency and social conformity, and a systematic protocol comprising multiple crowd navigation scenarios of varying complexity for evaluation. We tested four state-of-the-art algorithms under this protocol. Results revealed that some state-of-the-art algorithms have considerable difficulty generalizing, and that using our protocol for training improved algorithm performance. We demonstrate that the set of proposed metrics provides more insight and effectively differentiates the performance of these algorithms with respect to efficiency and social conformity.

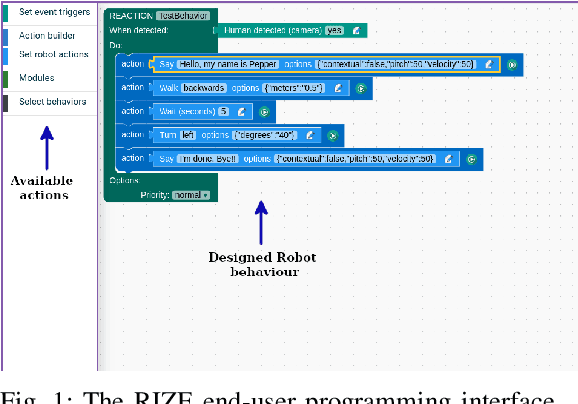

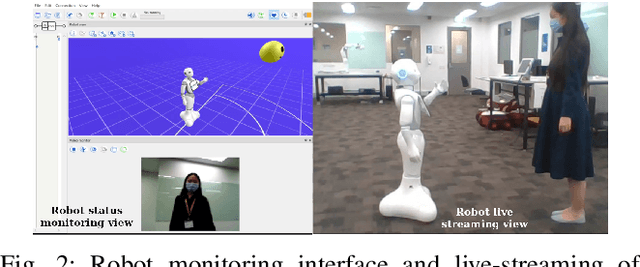

Aligning Robot's Behaviours and Users' Perceptions Through Participatory Prototyping

Jan 11, 2021

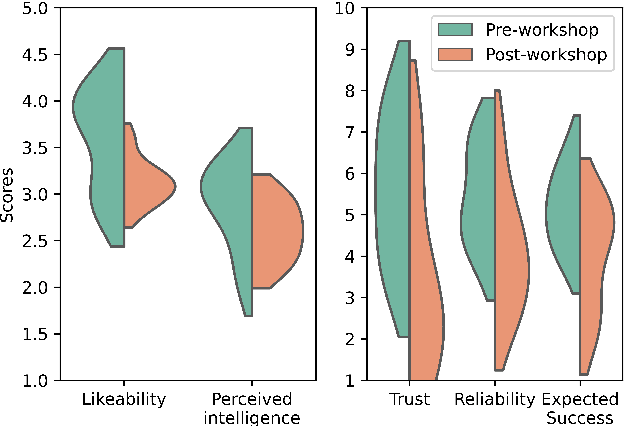

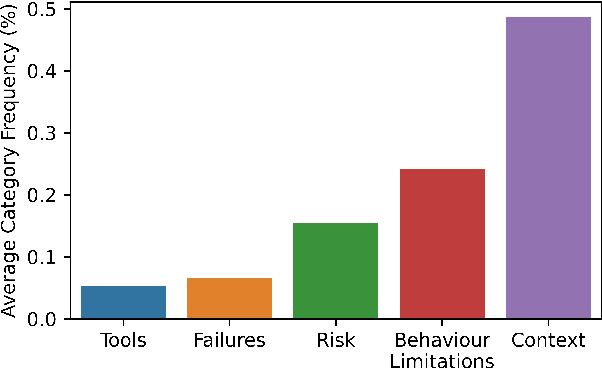

Abstract: Robots are increasingly being deployed in public spaces. However, the general population rarely has the opportunity to nominate what they would prefer or expect a robot to do in these contexts. Since most people have little or no experience interacting with a robot, it is not surprising that robots deployed in the real world may fail to gain acceptance or engage their intended users. To address this issue, we examine users' understanding of robots in public spaces and their expectations of appropriate uses of robots in these spaces. Furthermore, we investigate how these perceptions and expectations change as users engage and interact with a robot. To support this goal, we conducted a participatory design workshop in which participants were actively involved in the prototyping and testing of a robot's behaviours in simulation and on the physical robot. Our work highlights how social and interaction contexts influence users' perception of robots in public spaces and how users' designs and understanding of appropriate robot behaviours shift as they observe the enactment of their designs.

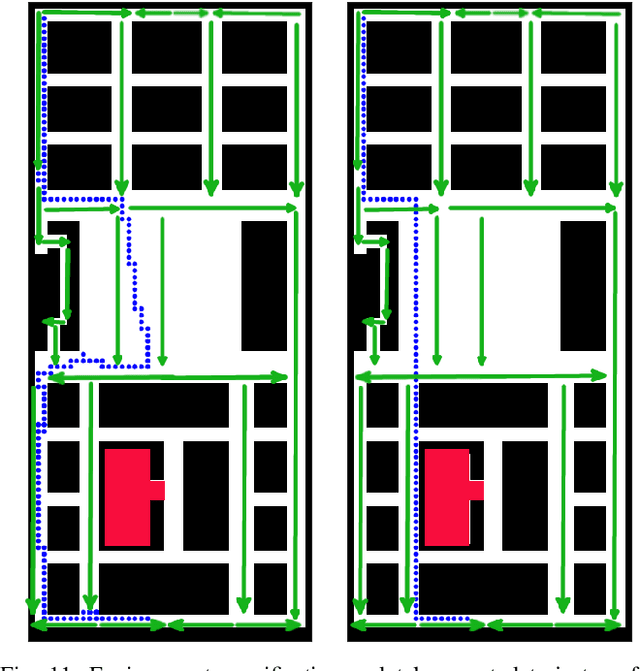

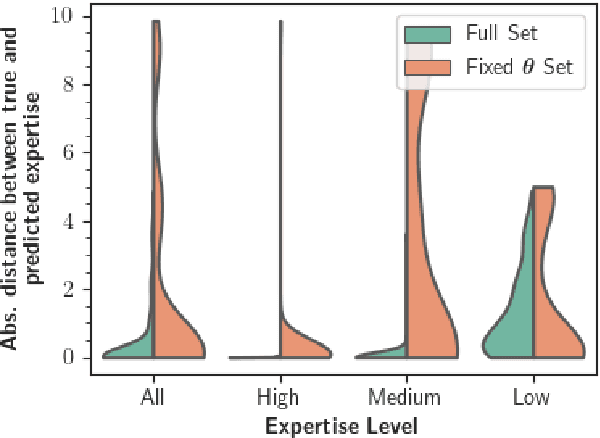

Joint Estimation of Expertise and Reward Preferences From Human Demonstrations

Nov 09, 2020

Abstract: When a robot learns from human examples, most approaches assume that the human partner provides examples of optimal behavior. However, there are applications in which the robot learns from non-expert humans. We argue that the robot should learn not only about the human's objectives, but also about their expertise level. The robot could then leverage this joint information to reduce or increase the frequency at which it provides assistance to its human partner or be more cautious when learning new skills from novice users. Similarly, by taking into account the human's expertise, the robot would also be able to infer a human's true objectives even when the human fails to properly demonstrate these objectives due to a lack of expertise. In this paper, we propose to jointly infer the expertise level and objective function of a human given observations of their (possibly) non-optimal demonstrations. Two inference approaches are proposed. In the first approach, inference is done over a finite, discrete set of possible objective functions and expertise levels. In the second approach, the robot optimizes over the space of all possible hypotheses and finds the objective function and expertise level that best explain the observed human behavior. We demonstrate our proposed approaches both in simulation and with real user data.
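
The first inference approach, a posterior over a finite set of objective functions and expertise levels, might look roughly like the sketch below. The abstract does not specify the demonstrator model, so this sketch assumes a Boltzmann-rational chooser whose inverse temperature `beta` stands in for expertise, a uniform prior, and single-step choices among a fixed set of actions. The function name `joint_posterior` and its arguments are hypothetical.

```python
import numpy as np

def joint_posterior(choices, rewards, betas):
    """Posterior over (objective, expertise) pairs under a uniform prior.

    choices: observed action indices from the demonstrator.
    rewards: (n_objectives, n_actions) reward of each action under each candidate objective.
    betas:   candidate expertise levels (assumed Boltzmann inverse temperatures).
    """
    choices = np.asarray(choices)
    log_post = np.zeros((rewards.shape[0], len(betas)))
    for i, r in enumerate(rewards):
        for j, beta in enumerate(betas):
            # Log-probability of each action under a softmax over beta * reward.
            logits = beta * r
            log_probs = logits - np.logaddexp.reduce(logits)
            log_post[i, j] = log_probs[choices].sum()
    # Normalise in a numerically stable way.
    post = np.exp(log_post - log_post.max())
    return post / post.sum()

# Usage: two candidate objectives, three actions, low vs. high expertise.
rewards = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
posterior = joint_posterior([0, 0, 0, 0, 0, 0], rewards, betas=np.array([0.1, 5.0]))
```

Under these assumptions, a demonstrator who consistently picks action 0 is best explained by the first objective paired with the higher expertise level, while noisier choices shift probability mass toward the lower expertise level rather than toward a different objective.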
