Alexander L. Mitchell

Building Gradient by Gradient: Decentralised Energy Functions for Bimanual Robot Assembly

Oct 06, 2025

Abstract: There are many challenges in bimanual assembly, including high-level sequencing, multi-robot coordination, and low-level, contact-rich operations such as component mating. Task and motion planning (TAMP) methods, while effective in this domain, may be prohibitively slow to converge when adapting to disturbances that require new task sequencing and optimisation. These events are common during tight-tolerance assembly, where difficult-to-model dynamics such as friction or deformation require rapid replanning and reattempts. Moreover, defining explicit task sequences for assembly can be cumbersome, limiting flexibility when task replanning is required. To simplify this planning, we introduce a decentralised gradient-based framework that uses a piecewise continuous energy function through the automatic composition of adaptive potential functions. This approach generates sub-goals using only myopic optimisation, rather than long-horizon planning. It demonstrates effectiveness at solving long-horizon tasks due to the structure and adaptivity of the energy function. We show that our approach scales to physical bimanual assembly tasks for constructing tight-tolerance assemblies. In these experiments, we discover that our gradient-based rapid replanning framework generates automatic retries, coordinated motions and autonomous handovers in an emergent fashion.
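As a rough illustration of how myopic gradient descent on a piecewise continuous energy can yield sub-goals and retries, the sketch below follows the gradient of whichever quadratic potential is currently active. It is not the paper's implementation: the single 2-D agent, the `PICK` and `PLACE` locations, the `holding` flag, and the simulated drop are all assumptions made for illustration.

```python
import math

# Hypothetical 2-D sub-goal locations, chosen only for illustration.
PICK = (1.0, 0.0)
PLACE = (0.0, 1.0)


def distance(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def energy_gradient(pos, holding):
    """Gradient of the active quadratic potential 0.5 * |pos - goal|^2.

    The active goal switches with the discrete state `holding`, which makes
    the overall energy piecewise continuous.
    """
    goal = PLACE if holding else PICK
    return [p - g for p, g in zip(pos, goal)], goal


def step(pos, holding, rate=0.2, tol=1e-2):
    """One myopic gradient step; reaching the pick location sets `holding`."""
    grad, goal = energy_gradient(pos, holding)
    pos = [p - rate * g for p, g in zip(pos, grad)]
    if not holding and distance(pos, goal) < tol:
        holding = True
    return pos, holding


def run(pos, steps=200, drop_at=None):
    """Descend the energy; optionally simulate dropping the part at step `drop_at`.

    Assumption: a dropped part reappears at PICK, so the retry is simply the
    pick potential becoming active again.
    """
    holding = False
    for k in range(steps):
        if k == drop_at:
            holding = False  # simulated disturbance
        pos, holding = step(pos, holding)
    return pos, holding
```

Calling `run([0.0, 0.0], drop_at=50)` makes the agent return to the pick location after the simulated drop without any explicit task sequence, because only the active potential changes.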

Task and Joint Space Dual-Arm Compliant Control

Apr 29, 2025

Abstract: Robots that interact with humans or perform delicate manipulation tasks must exhibit compliance. However, most commercial manipulators are rigid and suffer from significant friction, limiting end-effector tracking accuracy in torque-controlled modes. To address this, we present a real-time, open-source impedance controller that smoothly interpolates between joint-space and task-space compliance. This hybrid approach ensures safe interaction and precise task execution, such as sub-centimetre pin insertions. We deploy our controller on Frank, a dual-arm platform with two Kinova Gen3 arms, and compensate for modelled friction dynamics using a model-free observer. The system is real-time capable and integrates with standard ROS tools like MoveIt!. It also supports high-frequency trajectory streaming, enabling closed-loop execution of trajectories generated by learning-based methods, optimal control, or teleoperation. Our results demonstrate robust tracking and compliant behaviour even under high-friction conditions. The complete system is available open-source at https://github.com/applied-ai-lab/compliant_controllers.
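One simple way to picture an interpolation between joint-space and task-space compliance is a convex blend of two impedance torques. The sketch below does this for a planar two-link arm. It is an assumption for illustration only and is not taken from the `compliant_controllers` repository: the blend factor `alpha`, the scalar gains, the link lengths, and all function names are hypothetical, and gravity and friction compensation are omitted.

```python
import math


def forward_kinematics(q, l1=1.0, l2=1.0):
    """End-effector position of a planar two-link arm."""
    x = l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1])
    y = l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1])
    return [x, y]


def jacobian(q, l1=1.0, l2=1.0):
    """2x2 position Jacobian of the planar two-link arm."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def blended_torque(q, dq, q_des, x_des, alpha,
                   kq=20.0, bq=2.0, kx=200.0, bx=20.0):
    """Blend joint-space and task-space impedance torques.

    alpha = 0 gives pure joint-space compliance, alpha = 1 pure task-space.
    Gains are hypothetical scalars (stiffness kq, kx and damping bq, bx).
    """
    # Joint-space spring-damper torque.
    tau_joint = [kq * (qd - qi) - bq * dqi
                 for qi, dqi, qd in zip(q, dq, q_des)]

    # Task-space spring-damper force, mapped to torques through J^T.
    x = forward_kinematics(q)
    J = jacobian(q)
    x_dot = [J[i][0] * dq[0] + J[i][1] * dq[1] for i in range(2)]
    force = [kx * (xd - xi) - bx * xdi
             for xi, xdi, xd in zip(x, x_dot, x_des)]
    tau_task = [J[0][j] * force[0] + J[1][j] * force[1] for j in range(2)]

    return [(1.0 - alpha) * tj + alpha * tt
            for tj, tt in zip(tau_joint, tau_task)]
```

Varying `alpha` continuously between 0 and 1 moves the commanded torque smoothly from one behaviour to the other, since the result is linear in `alpha`.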

COMBO-Grasp: Learning Constraint-Based Manipulation for Bimanual Occluded Grasping

Feb 12, 2025

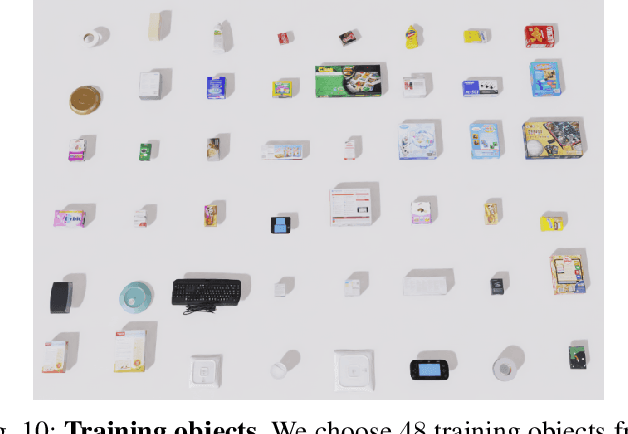

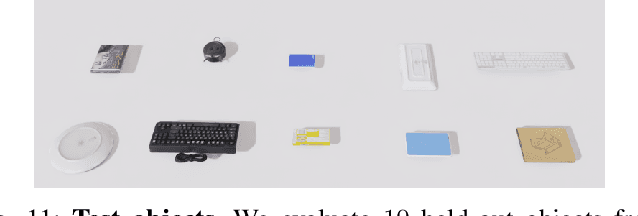

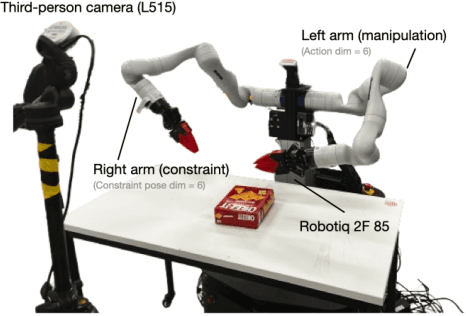

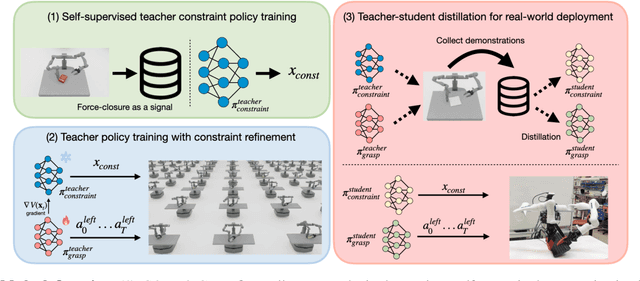

Abstract: This paper addresses the challenge of occluded robot grasping, i.e. grasping in situations where the desired grasp poses are kinematically infeasible due to environmental constraints such as surface collisions. Traditional robot manipulation approaches struggle with the complexity of non-prehensile or bimanual strategies commonly used by humans in these circumstances. State-of-the-art reinforcement learning (RL) methods are unsuitable due to the inherent complexity of the task. In contrast, learning from demonstration requires collecting a significant number of expert demonstrations, which is often infeasible. Instead, inspired by human bimanual manipulation strategies, where two hands coordinate to stabilise and reorient objects, we focus on a bimanual robotic setup to tackle this challenge. In particular, we introduce Constraint-based Manipulation for Bimanual Occluded Grasping (COMBO-Grasp), a learning-based approach which leverages two coordinated policies: a constraint policy trained using self-supervised datasets to generate stabilising poses and a grasping policy trained using RL that reorients and grasps the target object. A key contribution lies in value function-guided policy coordination. Specifically, during RL training for the grasping policy, the constraint policy's output is refined through gradients from a jointly trained value function, improving bimanual coordination and task performance. Lastly, COMBO-Grasp employs teacher-student policy distillation to effectively deploy point cloud-based policies in real-world environments. Empirical evaluations demonstrate that COMBO-Grasp significantly improves task success rates compared to competitive baseline approaches, with successful generalisation to unseen objects in both simulated and real-world environments.

Offline Adaptation of Quadruped Locomotion using Diffusion Models

Nov 13, 2024

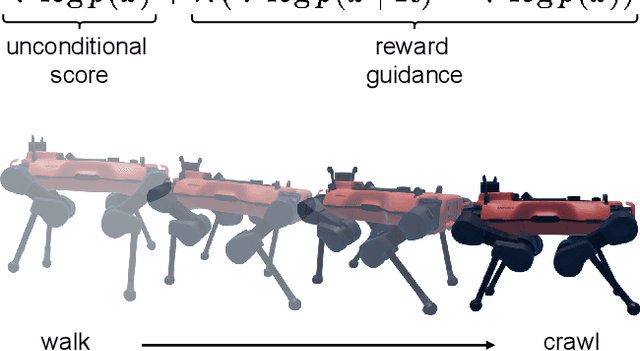

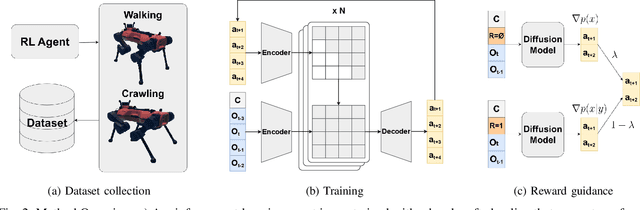

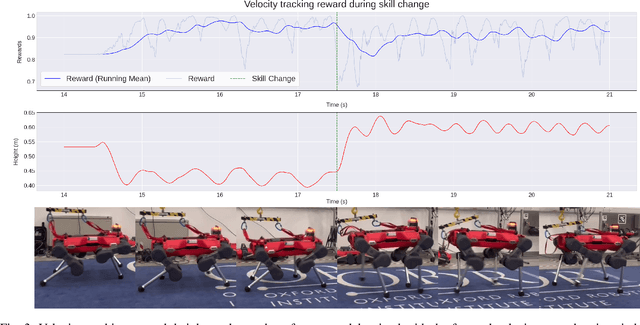

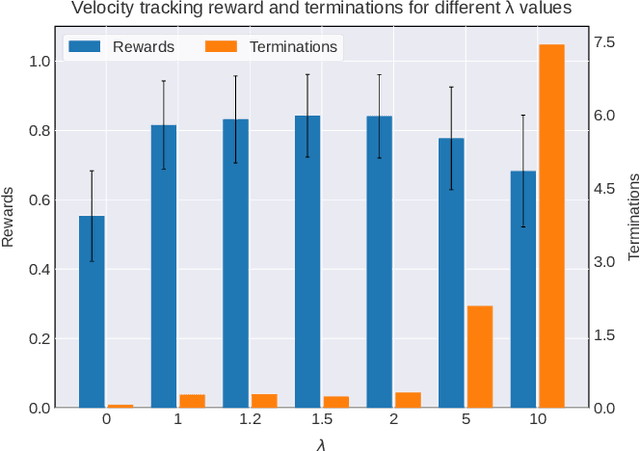

Abstract: We present a diffusion-based approach to quadrupedal locomotion that simultaneously addresses the limitations of learning and interpolating between multiple skills (modes) and of offline adapting to new locomotion behaviours after training. This is the first framework to apply classifier-free guided diffusion to quadruped locomotion and demonstrate its efficacy by extracting goal-conditioned behaviour from an originally unlabelled dataset. We show that these capabilities are compatible with a multi-skill policy and can be applied with little modification and minimal compute overhead, i.e., running entirely on the robot's onboard CPU. We verify the validity of our approach with hardware experiments on the ANYmal quadruped platform.
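For readers unfamiliar with classifier-free guidance, the sketch below shows the standard way a conditional and an unconditional noise prediction are combined at each denoising step. It is a generic illustration, not code from this work: the function name, the list-based vectors, and the guidance weight `w` are assumptions, and other papers parameterise the weight slightly differently.

```python
def classifier_free_guidance(eps_uncond, eps_cond, w):
    """Combine unconditional and conditional noise predictions.

    Uses the convention eps = eps_uncond + w * (eps_cond - eps_uncond), so
    w = 0 ignores the condition, w = 1 recovers the conditional prediction,
    and w > 1 extrapolates further towards the condition.
    """
    return [u + w * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Example: a two-dimensional noise prediction with guidance weight 2.
guided = classifier_free_guidance([0.0, 1.0], [1.0, 3.0], 2.0)
```

In a diffusion policy, both predictions would come from the same network, evaluated once with the goal and once with the goal masked out.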

Gaitor: Learning a Unified Representation Across Gaits for Real-World Quadruped Locomotion

May 29, 2024

Abstract: The current state-of-the-art in quadruped locomotion is able to produce robust motion for terrain traversal but requires the segmentation of a desired robot trajectory into a discrete set of locomotion skills such as trot and crawl. In contrast, in this work we demonstrate the feasibility of learning a single, unified representation for quadruped locomotion enabling continuous blending between gait types and characteristics. We present Gaitor, which learns a disentangled representation of locomotion skills, thereby sharing information common to all gait types seen during training. The structure emerging in the learnt representation is interpretable in that it is found to encode phase correlations between the different gait types. These can be leveraged to produce continuous gait transitions. In addition, foot swing characteristics are disentangled and directly addressable. Together with a rudimentary terrain encoding and a learned planner operating in this structured latent representation, Gaitor is able to take motion commands including desired gait type and characteristics from a user while reacting to uneven terrain. We evaluate Gaitor in both simulated and real-world settings on the ANYmal C platform. To the best of our knowledge, this is the first work learning such a unified and interpretable latent representation for multiple gaits, resulting in on-demand continuous blending between different locomotion modes on a real quadruped robot.

Towards Agility: A Momentum Aware Trajectory Optimisation Framework using Full-Centroidal Dynamics & Implicit Inverse Kinematics

Oct 09, 2023

Abstract: Online planning and execution of acrobatic maneuvers pose significant challenges in legged locomotion. Their underlying combinatorial nature, along with the current hardware's limitations, constitute the main obstacles in unlocking the true potential of legged robots. This letter tries to expose the intricacies of these optimal control problems in a tangible way, directly applicable to the creation of more efficient online trajectory optimisation frameworks. By analysing the fundamental principles that shape the behaviour of the system, the dynamics themselves can be exploited to surpass its hardware limitations. More specifically, a trajectory optimisation formulation is proposed that exploits the system's high-order nonlinearities, such as the nonholonomy of the angular momentum, and phase-space symmetries in order to produce feasible high-acceleration maneuvers. By leveraging the full-centroidal dynamics of the quadruped ANYmal C and directly optimising its footholds and contact forces, the framework is capable of producing efficient motion plans with low computational overhead. The feasibility of the produced trajectories is ensured by taking into account the configuration-dependent inertial properties of the robot during the planning process, while its robustness is increased by supplying the full analytic derivatives and Hessians to the solver. Finally, a significant portion of the discussion is centred around the deployment of the proposed framework on the ANYmal C platform, while its true capabilities are demonstrated through real-world experiments, with the successful execution of high-acceleration motion scenarios like the squat-jump.

VAE-Loco: Versatile Quadruped Locomotion by Learning a Disentangled Gait Representation

May 02, 2022

Abstract: Quadruped locomotion is rapidly maturing to a degree where robots now routinely traverse a variety of unstructured terrains. However, while gaits can be varied typically by selecting from a range of pre-computed styles, current planners are unable to vary key gait parameters continuously while the robot is in motion. The synthesis, on-the-fly, of gaits with unexpected operational characteristics or even the blending of dynamic manoeuvres lies beyond the capabilities of the current state-of-the-art. In this work we address this limitation by learning a latent space capturing the key stance phases constituting a particular gait. This is achieved via a generative model trained on a single trot style, which encourages disentanglement such that application of a drive signal to a single dimension of the latent state induces holistic plans synthesising a continuous variety of trot styles. We demonstrate that specific properties of the drive signal map directly to gait parameters such as cadence, footstep height and full stance duration. Due to the nature of our approach these synthesised gaits are continuously variable online during robot operation and robustly capture a richness of movement significantly exceeding the relatively narrow behaviour seen during training. In addition, the use of a generative model facilitates the detection and mitigation of disturbances to provide a versatile and robust planning framework. We evaluate our approach on two versions of the real ANYmal quadruped robots and demonstrate that our method achieves a continuous blend of dynamic trot styles whilst being robust and reactive to external perturbations.

Next Steps: Learning a Disentangled Gait Representation for Versatile Quadruped Locomotion

Dec 09, 2021

Abstract: Quadruped locomotion is rapidly maturing to a degree where robots now routinely traverse a variety of unstructured terrains. However, while gaits can be varied typically by selecting from a range of pre-computed styles, current planners are unable to vary key gait parameters continuously while the robot is in motion. The synthesis, on-the-fly, of gaits with unexpected operational characteristics or even the blending of dynamic manoeuvres lies beyond the capabilities of the current state-of-the-art. In this work we address this limitation by learning a latent space capturing the key stance phases constituting a particular gait. This is achieved via a generative model trained on a single trot style, which encourages disentanglement such that application of a drive signal to a single dimension of the latent state induces holistic plans synthesising a continuous variety of trot styles. We demonstrate that specific properties of the drive signal map directly to gait parameters such as cadence, footstep height and full stance duration. Due to the nature of our approach these synthesised gaits are continuously variable online during robot operation and robustly capture a richness of movement significantly exceeding the relatively narrow behaviour seen during training. In addition, the use of a generative model facilitates the detection and mitigation of disturbances to provide a versatile and robust planning framework. We evaluate our approach on a real ANYmal quadruped robot and demonstrate that our method achieves a continuous blend of dynamic trot styles whilst being robust and reactive to external perturbations.

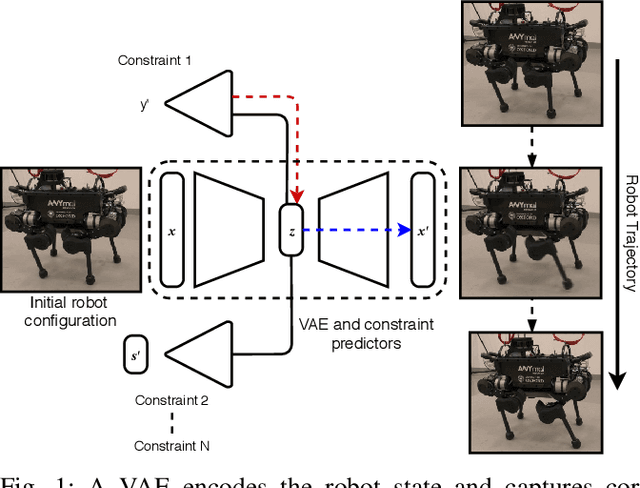

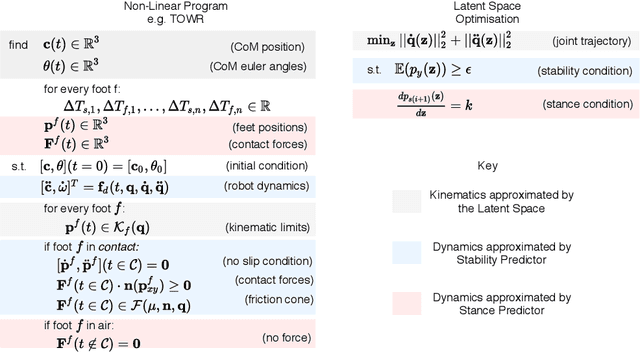

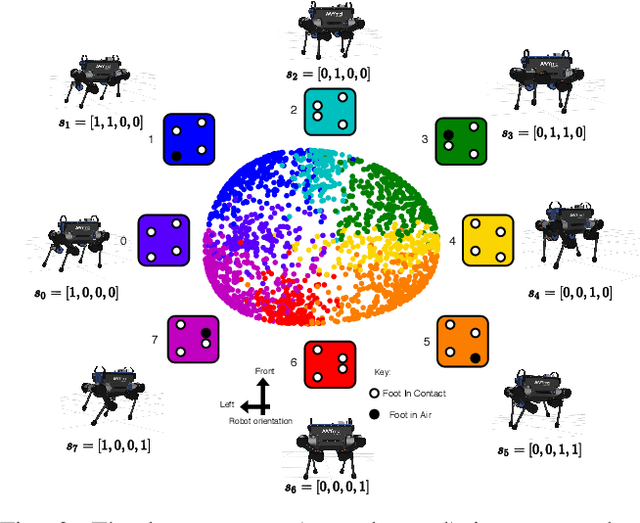

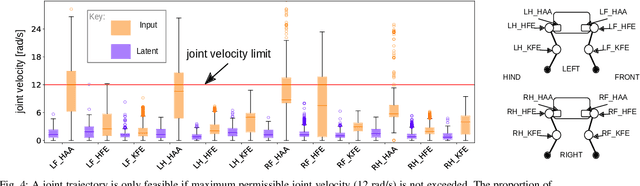

First Steps: Latent-Space Control with Semantic Constraints for Quadruped Locomotion

Jul 03, 2020

Abstract: Traditional approaches to quadruped control frequently employ simplified, hand-derived models. This significantly reduces the capability of the robot since its effective kinematic range is curtailed. In addition, kinodynamic constraints are often non-differentiable and difficult to implement in an optimisation approach. In this work, these challenges are addressed by framing quadruped control as optimisation in a structured latent space. A deep generative model captures a statistical representation of feasible joint configurations, whilst complex dynamic and terminal constraints are expressed via high-level, semantic indicators and represented by learned classifiers operating upon the latent space. As a consequence, complex constraints are rendered differentiable and evaluated an order of magnitude faster than analytical approaches. We validate the feasibility of locomotion trajectories optimised using our approach both in simulation and on a real-world ANYmal quadruped. Our results demonstrate that this approach is capable of generating smooth and realisable trajectories. To the best of our knowledge, this is the first time latent space control has been successfully applied to a complex, real robot platform.
