Oliver Brock

A Helping (Human) Hand in Kinematic Structure Estimation

Mar 07, 2025

Abstract: Visual uncertainties such as occlusions, lack of texture, and noise present significant challenges in obtaining accurate kinematic models for safe robotic manipulation. We introduce a probabilistic real-time approach that leverages the human hand as a prior to mitigate these uncertainties. By tracking the constrained motion of the human hand during manipulation and explicitly modeling uncertainties in visual observations, our method reliably estimates an object's kinematic model online. We validate our approach on a novel dataset featuring challenging objects that are occluded during manipulation and offer limited articulations for perception. The results demonstrate that by incorporating an appropriate prior and explicitly accounting for uncertainties, our method produces accurate estimates, outperforming two recent baselines by 195% and 140%, respectively. Furthermore, we demonstrate that our approach's estimates are precise enough to allow a robot to manipulate even small objects safely.
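
As a rough illustration of what explicitly modeling visual uncertainty buys, the sketch below fuses a prior with noisy observations of a single articulation angle using the standard scalar Gaussian (Kalman-style) update. It is not the paper's estimator: the `Estimate` type, the `fuse` function, and all numbers are hypothetical, and an occluded frame is modeled simply by giving it a very large variance.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float  # hypothetical articulation angle in radians
    var: float   # variance: how much this estimate is trusted


def fuse(prior: Estimate, obs: Estimate) -> Estimate:
    """Combine two independent Gaussian estimates of the same scalar.

    This is the scalar Bayes (Kalman) update: the gain is large when the
    prior is uncertain relative to the observation, and small otherwise.
    """
    gain = prior.var / (prior.var + obs.var)
    mean = prior.mean + gain * (obs.mean - prior.mean)
    var = (1.0 - gain) * prior.var
    return Estimate(mean, var)


# Hypothetical prior derived from the tracked hand motion.
hand_prior = Estimate(mean=0.50, var=0.01)
# Hypothetical visual observations; the second frame is "occluded", so it
# gets a huge variance and barely moves the belief.
visual_obs = [Estimate(0.80, 0.09), Estimate(3.00, 1e6), Estimate(0.56, 0.01)]

belief = hand_prior
for obs in visual_obs:
    belief = fuse(belief, obs)
```

The occluded reading of 3.00 rad is effectively ignored, while the two trusted frames shrink the variance below that of the hand prior.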

No Plan but Everything Under Control: Robustly Solving Sequential Tasks with Dynamically Composed Gradient Descent

Mar 03, 2025

Abstract: We introduce a novel gradient-based approach for solving sequential tasks by dynamically adjusting the underlying myopic potential field in response to feedback and the world's regularities. This adjustment implicitly considers subgoals encoded in these regularities, enabling the solution of long sequential tasks, as demonstrated by solving the traditional planning domain of Blocks World without any planning. Unlike conventional planning methods, our feedback-driven approach adapts to uncertain and dynamic environments, as demonstrated by one hundred real-world trials involving drawer manipulation. These experiments highlight the robustness of our method compared to planning and show how interactive perception and error recovery naturally emerge from gradient descent without explicitly implementing them. This offers a computationally efficient alternative to planning for a variety of sequential tasks, while aligning with observations on biological problem-solving strategies.
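
A toy illustration of the idea that feedback can recompose a potential field so that a subgoal emerges during plain gradient descent. Everything here is hypothetical: a 2D point agent, a quadratic potential, and a single hand-written rule (`grasped` flips once the agent reaches a `handle`) standing in for the world's regularities that the paper exploits. It is a sketch of the general idea, not the paper's formulation.

```python
import math

Vec = tuple[float, float]


def quadratic_grad(x: Vec, target: Vec) -> Vec:
    """Gradient of the myopic potential ||x - target||^2."""
    return (2.0 * (x[0] - target[0]), 2.0 * (x[1] - target[1]))


def run(start: Vec, handle: Vec, goal: Vec, lr: float = 0.1,
        tol: float = 1e-3, max_iters: int = 1000) -> tuple[Vec, bool]:
    x, grasped = start, False
    for _ in range(max_iters):
        # Feedback from the world: reaching the handle counts as grasping it.
        if not grasped and math.dist(x, handle) < tol:
            grasped = True
        if grasped and math.dist(x, goal) < tol:
            break
        # Recompose the potential from the current feedback, then descend it.
        target = goal if grasped else handle
        g = quadratic_grad(x, target)
        x = (x[0] - lr * g[0], x[1] - lr * g[1])
    return x, grasped


GOAL = (1.0, 1.0)
final_x, grasped = run(start=(0.0, 0.0), handle=(1.0, 0.0), goal=GOAL)
```

No sequence of steps is planned in advance; the agent only ever follows the gradient of whichever field the current feedback selects.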

A Biologically Inspired Design Principle for Building Robust Robotic Systems

Aug 19, 2024

Abstract: Robustness, the ability of a system to maintain performance under significant and unanticipated environmental changes, is a critical property for robotic systems. While biological systems naturally exhibit robustness, there is no comprehensive understanding of how to achieve similar robustness in robotic systems. In this work, we draw inspiration from biological systems and propose a design principle that advocates active interconnections among system components to enhance robustness to environmental variations. We evaluate this design principle in a challenging long-horizon manipulation task: solving lockboxes. Our extensive simulated and real-world experiments demonstrate that we can enhance robustness against environmental changes by establishing active interconnections among system components without substantial changes in individual components. Our findings suggest that a systematic investigation of design principles in system building is necessary. They also advocate for interdisciplinary collaborations to explore and evaluate additional principles of biological robustness to advance the development of intelligent and adaptable robotic systems.

A Robotics-Inspired Scanpath Model Reveals the Importance of Uncertainty and Semantic Object Cues for Gaze Guidance in Dynamic Scenes

Aug 02, 2024

Abstract: How we perceive objects around us depends on what we actively attend to, yet our eye movements depend on the perceived objects. Still, object segmentation and gaze behavior are typically treated as two independent processes. Drawing on an information processing pattern from robotics, we present a mechanistic model that simulates these processes for dynamic real-world scenes. Our image-computable model uses the current scene segmentation for object-based saccadic decision-making while using the foveated object to refine its scene segmentation recursively. To model this refinement, we use a Bayesian filter, which also provides an uncertainty estimate for the segmentation that we use to guide active scene exploration. We demonstrate that this model closely resembles observers' free-viewing behavior, as measured by scanpath statistics, including the foveation duration and saccade amplitude distributions used for parameter fitting and higher-level statistics not used for fitting. These include the balance among object detections, inspections, and returns, as well as a delay of returning saccades that emerges without an explicit implementation of temporal inhibition of return. Extensive simulations and ablation studies show that uncertainty promotes balanced exploration and that semantic object cues are crucial to form the perceptual units used in object-based attention. Moreover, we show how our model's modular design allows for extensions, such as incorporating saccadic momentum or pre-saccadic attention, to further align its output with human scanpaths.
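
The abstract describes using a Bayesian filter's uncertainty to guide where to look next. The sketch below reduces that idea to a toy: each candidate segment carries a hypothetical binary belief of being a real object, the next saccade goes to the segment with maximal entropy, and foveation applies Bayes' rule with assumed hit and false-alarm rates. The segment names, rates, and the binary-belief simplification are illustrative assumptions, not the paper's image-computable model.

```python
import math


def entropy(p: float) -> float:
    """Binary entropy in bits: highest when the belief is 0.5."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def bayes_update(p: float, observed: bool, hit_rate: float, false_alarm: float) -> float:
    """Posterior P(segment is a real object) after one binary foveal measurement."""
    if observed:
        num = hit_rate * p
        den = num + false_alarm * (1.0 - p)
    else:
        num = (1.0 - hit_rate) * p
        den = num + (1.0 - false_alarm) * (1.0 - p)
    return num / den


def next_target(beliefs: dict[str, float]) -> str:
    """Choose the segment whose belief is most uncertain (maximum entropy)."""
    return max(beliefs, key=lambda name: entropy(beliefs[name]))


beliefs = {"cup": 0.9, "shadow": 0.5, "plate": 0.7}
first = next_target(beliefs)
# Foveating the chosen segment yields a (hypothetical) "not an object" measurement.
beliefs[first] = bayes_update(beliefs[first], observed=False, hit_rate=0.95, false_alarm=0.1)
second = next_target(beliefs)
```

Once the uncertain `shadow` segment is resolved, the most uncertain remaining segment (`plate`) becomes the next target, so attention moves on instead of dwelling on what is already known.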

Intelligence as Computation

May 26, 2024

Abstract: This paper proposes a specific conceptualization of intelligence as computation. This conceptualization is intended to provide a unified view for all disciplines of intelligence research. Already, it unifies several conceptualizations currently under investigation, including physical, neural, embodied, morphological, and mechanical intelligences. To achieve this, the proposed conceptualization attributes the differences among existing views to different computational paradigms, such as digital, analog, mechanical, or morphological computation. By viewing intelligence as a composition of computations from different paradigms, it resolves the challenges posed by previous conceptualizations. Intelligence is hypothesized as a multi-paradigmatic computation relying on specific computational principles. These principles distinguish intelligence from other, non-intelligent computations. The proposed conceptualization implies a multi-disciplinary research agenda that is intended to lead to a unified science of intelligence.

In-Hand Cube Reconfiguration: Simplified

Aug 23, 2023

Abstract: We present a simple approach to in-hand cube reconfiguration. By simplifying planning, control, and perception as much as possible, while maintaining robust and general performance, we gain insights into the inherent complexity of in-hand cube reconfiguration. We also demonstrate the effectiveness of combining GOFAI-based planning with the exploitation of environmental constraints and inherently compliant end-effectors in the context of dexterous manipulation. The proposed system outperforms a substantially more complex system for cube reconfiguration based on deep learning and accurate physical simulation, contributing arguments to the discussion about what the most promising approach to general manipulation might be. Project website: https://rbo.gitlab-pages.tu-berlin.de/robotics/simpleIHM/
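
The abstract credits part of the system's simplicity to GOFAI-based planning. As a hedged illustration of that style of planning (not the paper's planner), the sketch below abstracts the cube's state to one of its 24 rotational orientations and runs breadth-first search over two hypothetical primitives, +90° rotations about the x and z axes. Breadth-first search returns a shortest primitive sequence; the primitive names and the state abstraction are assumptions.

```python
from collections import deque
from typing import Optional

Mat = tuple[tuple[int, int, int], ...]

IDENTITY: Mat = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
# Hypothetical primitives: +90 degree rotations about the x and z axes.
PRIMITIVES: dict[str, Mat] = {
    "roll_x": ((1, 0, 0), (0, 0, -1), (0, 1, 0)),
    "spin_z": ((0, -1, 0), (1, 0, 0), (0, 0, 1)),
}


def matmul(a: Mat, b: Mat) -> Mat:
    """3x3 integer matrix product a @ b."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
        for i in range(3)
    )


def bfs(start: Mat) -> dict:
    """Breadth-first search over all orientations reachable from start."""
    parent = {start: None}
    frontier = deque([start])
    while frontier:
        r = frontier.popleft()
        for name, p in PRIMITIVES.items():
            nxt = matmul(p, r)  # apply primitive p after orientation r
            if nxt not in parent:
                parent[nxt] = (r, name)
                frontier.append(nxt)
    return parent


def plan(start: Mat, goal: Mat) -> Optional[list[str]]:
    """Shortest primitive sequence from start to goal, or None if unreachable."""
    parent = bfs(start)
    if goal not in parent:
        return None
    actions = []
    node = goal
    while parent[node] is not None:
        node, name = parent[node]
        actions.append(name)
    return actions[::-1]


def execute(actions: list[str], start: Mat) -> Mat:
    """Apply a primitive sequence to an orientation."""
    r = start
    for name in actions:
        r = matmul(PRIMITIVES[name], r)
    return r


goal = matmul(PRIMITIVES["roll_x"], PRIMITIVES["spin_z"])  # an arbitrary target
actions = plan(IDENTITY, goal)
```

Because the abstract state space has only 24 orientations, exhaustive search is trivially cheap; the hard part in practice lies in executing each primitive robustly, which this sketch does not model.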

Dexterous Soft Hands Linearize Feedback-Control for In-Hand Manipulation

Aug 22, 2023

Abstract: This paper presents a feedback-control framework for in-hand manipulation (IHM) with dexterous soft hands that enables the acquisition of manipulation skills in the real world within minutes. We choose the deformation state of the soft hand as the control variable. To reach a desired deformation state, we use coarsely approximated Jacobians of the actuation-deformation dynamics. These Jacobians are obtained via explorative actions. This is enabled by the self-stabilizing properties of compliant hands, which allow us to use linear feedback control in the presence of complex contact dynamics. To evaluate the effectiveness of our approach, we show that a learned manipulation skill generalizes to 100% variations in object size, 360° changes in palm inclination, and the disabling of up to 50% of the involved actuators. In addition, complex manipulations can be obtained by sequencing such feedback skills.
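
The control scheme in the abstract (coarse Jacobians obtained through explorative actions, then linear feedback) can be sketched in a few lines. In this sketch the two-actuator `plant` is a made-up smooth map standing in for the soft hand's actuation-deformation dynamics, finite differences around the start configuration stand in for the explorative actions, and the Jacobian is estimated once and never updated; the gain, step size, and target are arbitrary assumptions.

```python
Vec = list[float]
Mat = list[list[float]]


def plant(u: Vec) -> Vec:
    """Hypothetical actuation -> deformation map, unknown to the controller."""
    return [u[0] + 0.2 * u[1] + 0.1 * u[0] ** 2,
            0.3 * u[0] + u[1] + 0.05 * u[1] ** 3]


def estimate_jacobian(f, u0: Vec, delta: float = 0.05) -> Mat:
    """Coarse Jacobian from one explorative action per actuator (finite differences)."""
    x0 = f(u0)
    cols = []
    for j in range(len(u0)):
        u = list(u0)
        u[j] += delta  # explorative action: perturb actuator j only
        cols.append([(a - b) / delta for a, b in zip(f(u), x0)])
    # cols[j][i] = d x_i / d u_j; transpose into row-major J[i][j].
    return [[cols[j][i] for j in range(len(u0))] for i in range(len(x0))]


def inv2(m: Mat) -> Mat:
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def control(f, jac: Mat, x_des: Vec, u0: Vec, gain: float = 0.5, iters: int = 100) -> Vec:
    """Linear feedback: u <- u + gain * J^-1 (x_des - x), with J held fixed."""
    j_inv = inv2(jac)
    u = list(u0)
    for _ in range(iters):
        err = [xd - x for xd, x in zip(x_des, f(u))]
        du = [sum(j_inv[i][k] * err[k] for k in range(2)) for i in range(2)]
        u = [ui + gain * dui for ui, dui in zip(u, du)]
    return u


J_est = estimate_jacobian(plant, [0.0, 0.0])
u_final = control(plant, J_est, x_des=[0.5, 0.4], u0=[0.0, 0.0])
residual = max(abs(x - xd) for x, xd in zip(plant(u_final), [0.5, 0.4]))
```

Because the estimated Jacobian only approximates the true one, each step removes only part of the error, yet repeated feedback still drives the deformation to the target. The abstract attributes the applicability of such linear feedback to the hand's self-stabilizing compliance, which this toy plant does not model.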

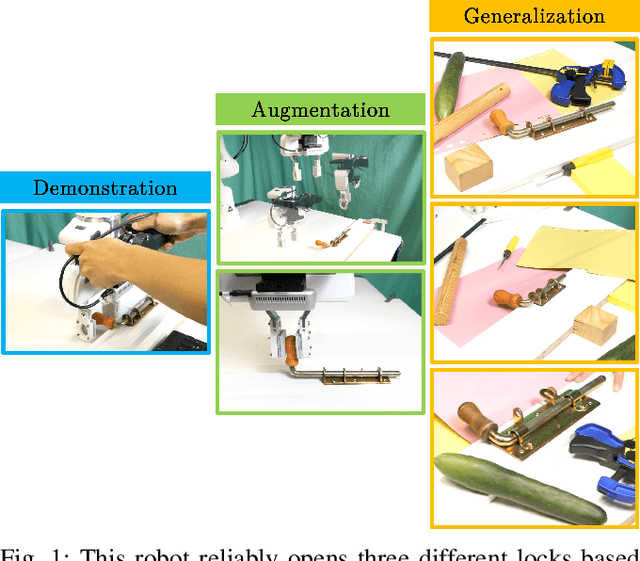

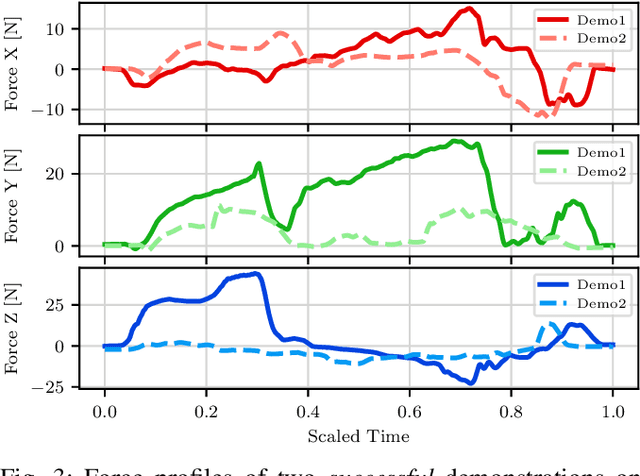

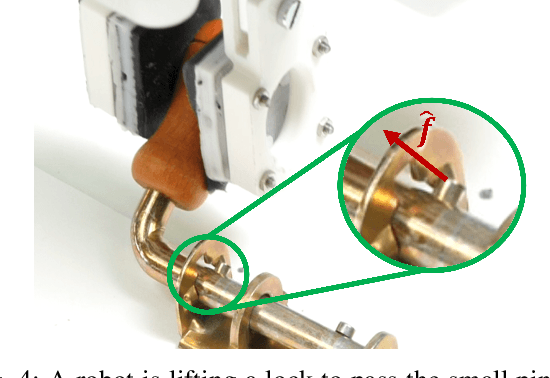

Augmentation for Learning From Demonstration with Environmental Constraints

Oct 13, 2022

Abstract: We introduce a Learning from Demonstration (LfD) approach for contact-rich manipulation tasks with articulated mechanisms. The policy extracted from a single human demonstration generalizes to different mechanisms of the same type and is robust against environmental variations. The key to achieving such generalization and robustness from a single human demonstration is to autonomously augment the initial demonstration, gathering additional information by purposefully interacting with the environment. Our real-world experiments on complex mechanisms with multiple degrees of freedom (DOF) demonstrate that our approach can reliably accomplish the task in a changing environment. Videos are available at: https://sites.google.com/view/rbosalfdec/home

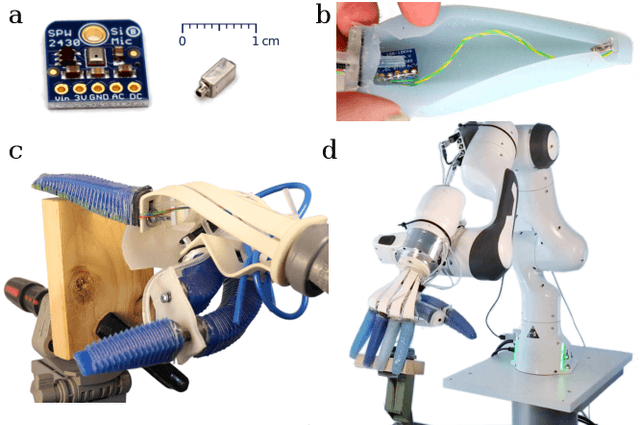

Passive and Active Acoustic Sensing for Soft Pneumatic Actuators

Aug 22, 2022

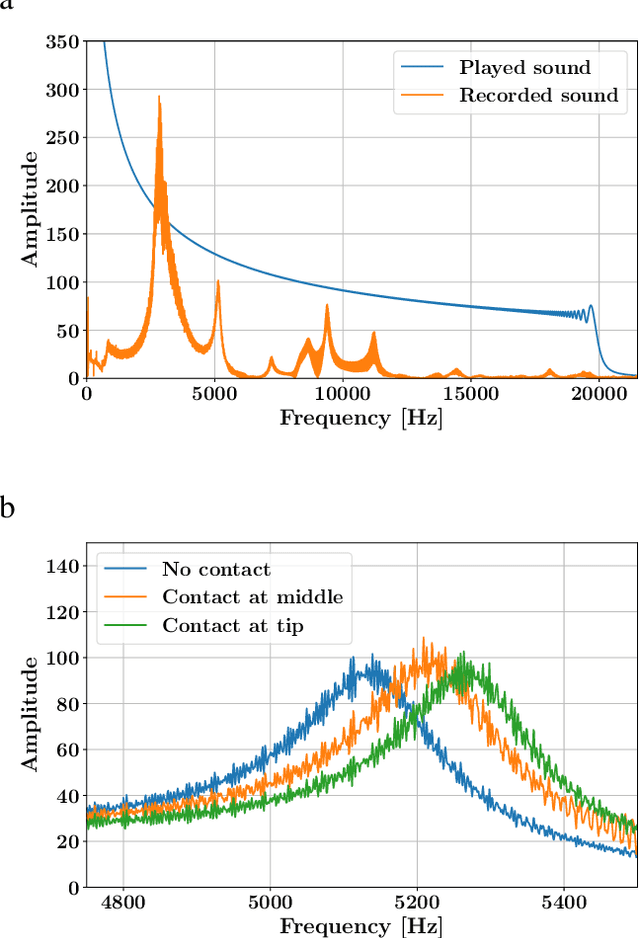

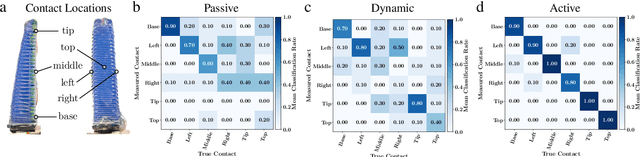

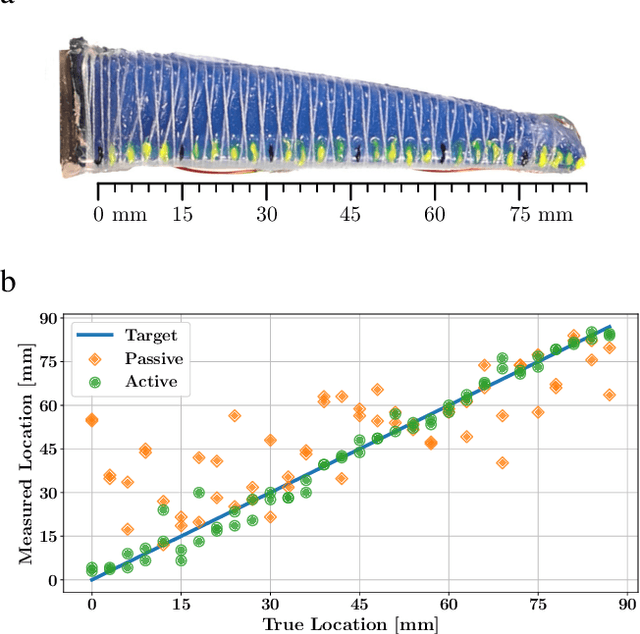

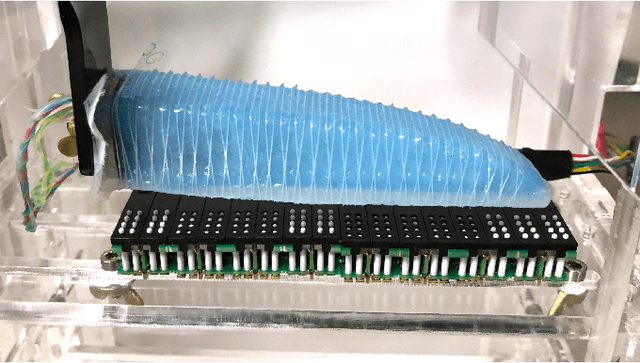

Abstract: We propose a sensorization method for soft pneumatic actuators that uses an embedded microphone and speaker to measure different actuator properties. The physical state of the actuator determines the specific modulation of sound as it travels through the structure. Using simple machine learning, we create a computational sensor that infers the corresponding state from sound recordings. We demonstrate the acoustic sensor on a soft pneumatic continuum actuator and use it to measure contact locations, contact forces, object materials, actuator inflation, and actuator temperature. We show that the sensor is reliable (average classification rate for six contact locations of 93%), precise (mean spatial accuracy of 3.7 mm), and robust against common disturbances like background noise. Finally, we compare different sounds and learning methods and achieve the best results with 20 ms of white noise and a support vector classifier as the sensor model.
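
The abstract reports the best results with 20 ms of white noise as the excitation and a support vector classifier as the sensor model. The sketch below covers only the signal front end, under loudly stated assumptions: a 48 kHz sample rate and log band energies as features are hypothetical choices (the abstract specifies neither), and a one-pole low-pass filter is a made-up stand-in for the actuator's state-dependent modulation of the sound. Feature vectors like these would then be used to train a classifier such as scikit-learn's `sklearn.svm.SVC`, which is omitted here.

```python
import cmath
import math
import random

SAMPLE_RATE = 48_000  # Hz, hypothetical; not stated in the abstract
DURATION = 0.020      # 20 ms excitation, as reported in the abstract


def white_noise_burst(seed: int = 0) -> list[float]:
    """Uniform white noise in [-1, 1] lasting DURATION seconds."""
    rng = random.Random(seed)
    n = round(SAMPLE_RATE * DURATION)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def one_pole_lowpass(signal: list[float], a: float) -> list[float]:
    """Hypothetical stand-in for the actuator's state-dependent sound modulation."""
    y, out = 0.0, []
    for x in signal:
        y = a * y + (1.0 - a) * x
        out.append(y)
    return out


def band_energies(signal: list[float], n_bands: int = 8) -> list[float]:
    """Log10 energy in n_bands equal-width frequency bands (naive DFT)."""
    n = len(signal)
    twiddle = [cmath.exp(-2j * math.pi * m / n) for m in range(n)]
    power = []
    for k in range(1, n // 2 + 1):  # skip the DC bin
        acc = sum(x * twiddle[(k * i) % n] for i, x in enumerate(signal))
        power.append(abs(acc) ** 2)
    width = len(power) // n_bands
    return [math.log10(sum(power[b * width:(b + 1) * width]) + 1e-12)
            for b in range(n_bands)]


burst = white_noise_burst()
dry = band_energies(burst)
damped = band_energies(one_pole_lowpass(burst, a=0.9))
```

White noise excites all frequency bands at once, so any state-dependent change in how the structure attenuates different frequencies shows up in a single short recording.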

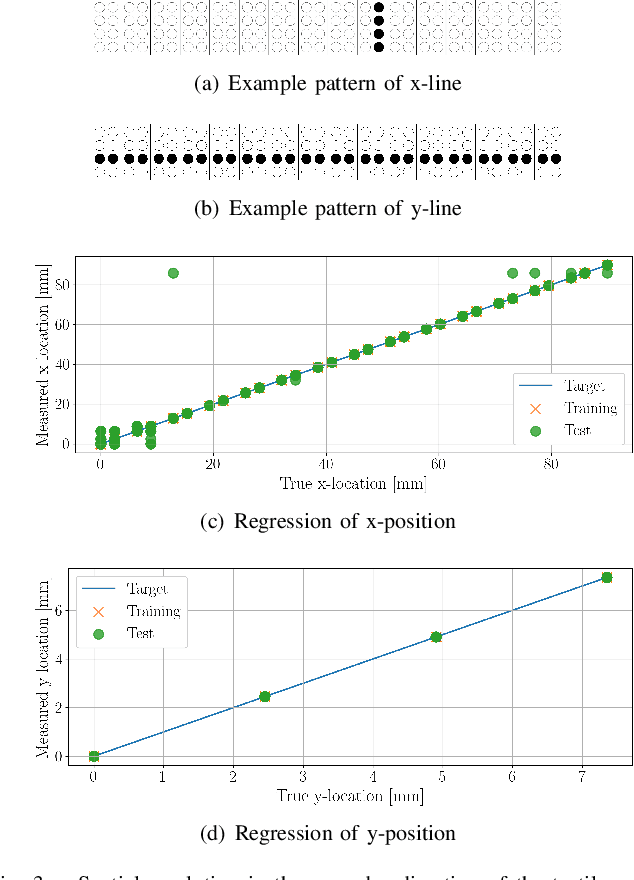

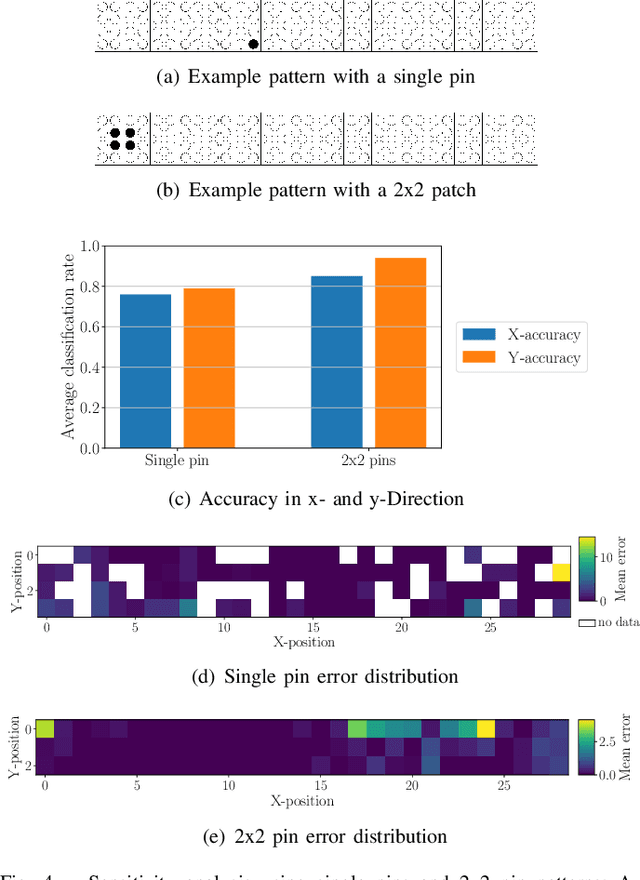

A Virtual 2D Tactile Array for Soft Actuators Using Acoustic Sensing

Aug 22, 2022

Abstract: We create a virtual 2D tactile array for soft pneumatic actuators using embedded audio components. We detect contact-specific changes in sound modulation to infer tactile information. We evaluate different sound representations and learning methods to detect even small contact variations. We demonstrate the acoustic tactile sensor array on a PneuFlex actuator and use a Braille display to individually control the contact of 29x4 pins with the actuator's 90x10 mm palmar surface. In evaluating the spatial resolution, the acoustic sensor localizes edges in the x- and y-directions with root-mean-square regression errors of 1.67 mm and 0.0 mm, respectively. Even light contacts of a single Braille pin with a lifting force of 0.17 N are measured with high accuracy. Finally, we demonstrate the sensor's sensitivity to complex contact shapes by successfully reading the 26 letters of the Braille alphabet from a single display cell with a classification rate of 88%.
