Manuel Baum

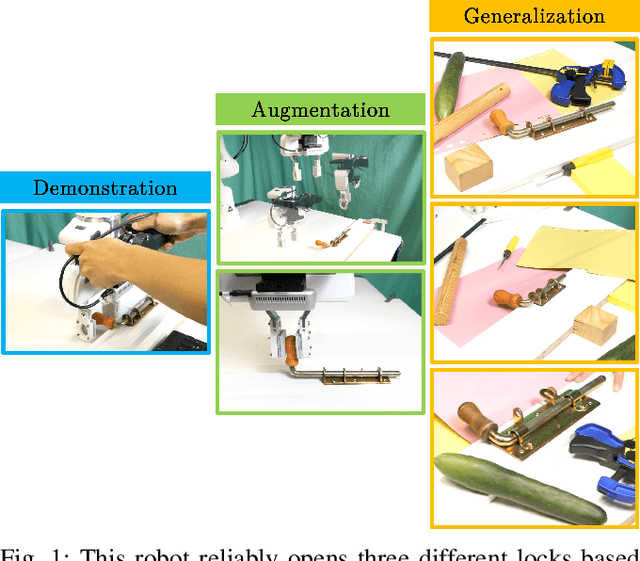

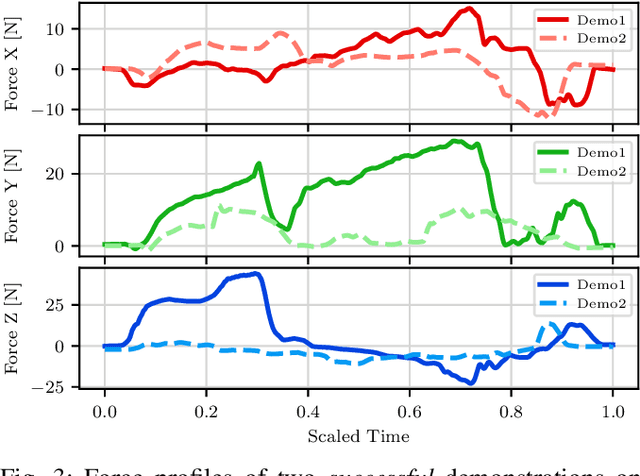

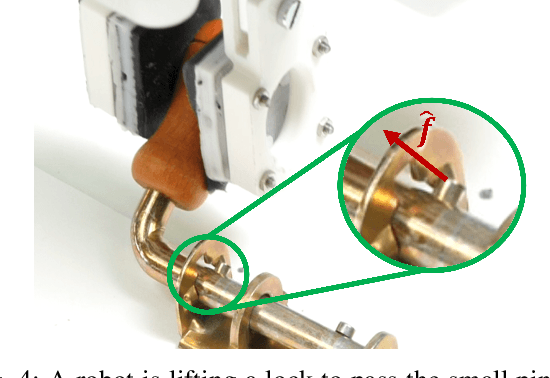

Augmentation for Learning From Demonstration with Environmental Constraints

Oct 13, 2022

Abstract: We introduce a Learning from Demonstration (LfD) approach for contact-rich manipulation tasks with articulated mechanisms. The policy extracted from a single human demonstration generalizes to different mechanisms of the same type and is robust against environmental variations. The key to achieving such generalization and robustness from a single human demonstration is to autonomously augment the initial demonstration, gathering additional information by purposefully interacting with the environment. Our real-world experiments on complex multi-DOF mechanisms demonstrate that our approach can reliably accomplish the task in a changing environment. Videos are available at https://sites.google.com/view/rbosalfdec/home

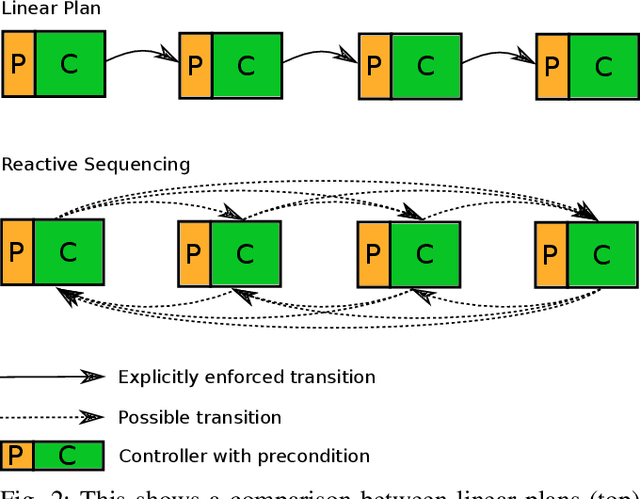

"The World Is Its Own Best Model": Robust Real-World Manipulation Through Online Behavior Selection

May 09, 2022

Abstract: Robotic manipulation behavior should be robust to disturbances that violate high-level task structure. Such robustness can be achieved by constantly monitoring the environment to observe the discrete high-level state of the task. This is possible because different phases of a task are characterized by different sensor patterns, and by monitoring these patterns a robot can decide which controllers to execute in the moment. This relaxes assumptions about the temporal sequence of those controllers and makes behavior robust to unforeseen disturbances. We implement this idea as a probabilistic filter over discrete states, where each state is directly associated with a controller. Based on this framework, we present a robotic system that is able to open a drawer and grasp tennis balls from it in a surprisingly robust way.
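The idea of a probabilistic filter over discrete task states, each tied to a controller, can be illustrated with a minimal discrete Bayes filter. This is only a sketch under stated assumptions, not the authors' implementation: the state names, controller names, transition matrix `T`, observation likelihoods `LIK`, and the reduction of sensor patterns to three discrete symbols are all hypothetical choices made for illustration.

```python
# Hypothetical task states and the controller attached to each one.
STATES = ["drawer_closed", "drawer_open", "ball_grasped"]
CONTROLLERS = {
    "drawer_closed": "open_drawer",
    "drawer_open": "grasp_ball",
    "ball_grasped": "lift_ball",
}

# Assumed transition model: T[i][j] = P(next state j | current state i).
# Every transition has non-zero probability, so no fixed sequence is imposed.
T = [
    [0.90, 0.08, 0.02],
    [0.08, 0.90, 0.02],
    [0.02, 0.08, 0.90],
]

# Assumed observation model: LIK[s][o] = P(sensor symbol o | state s).
LIK = [
    [0.8, 0.1, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
]


def filter_step(belief, obs):
    """One predict/update cycle of a discrete Bayes filter."""
    n = len(STATES)
    # Predict: propagate the belief through the transition model.
    predicted = [sum(belief[i] * T[i][j] for i in range(n)) for j in range(n)]
    # Update: weight each state by the likelihood of the observation.
    unnormalized = [predicted[j] * LIK[j][obs] for j in range(n)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def select_controller(belief):
    """Pick the controller of the currently most probable state."""
    best = max(range(len(STATES)), key=lambda s: belief[s])
    return CONTROLLERS[STATES[best]]


def run(observations):
    """Filter a sequence of sensor symbols; return final belief and choices."""
    belief = [1.0 / len(STATES)] * len(STATES)  # uniform prior
    chosen = []
    for obs in observations:
        belief = filter_step(belief, obs)
        chosen.append(select_controller(belief))
    return belief, chosen


if __name__ == "__main__":
    # Symbols 0, 0, 0, 1, 1, 1, 0, 0, 0: the drawer opens, then a
    # disturbance closes it again and the filter falls back accordingly.
    final_belief, controllers = run([0, 0, 0, 1, 1, 1, 0, 0, 0])
    print(controllers)
```

Because the controller is re-selected from the current belief at every step, a disturbance that pushes the task back to an earlier phase simply shifts the belief, and the matching controller takes over again without any explicit replanning.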
