Oussama Zenkri

A Biologically Inspired Design Principle for Building Robust Robotic Systems

Aug 19, 2024

Abstract: Robustness, the ability of a system to maintain performance under significant and unanticipated environmental changes, is a critical property for robotic systems. While biological systems naturally exhibit robustness, there is no comprehensive understanding of how to achieve similar robustness in robotic systems. In this work, we draw inspiration from biological systems and propose a design principle that advocates active interconnections among system components to enhance robustness to environmental variations. We evaluate this design principle in a challenging long-horizon manipulation task: solving lockboxes. Our extensive simulated and real-world experiments demonstrate that we can enhance robustness against environmental changes by establishing active interconnections among system components without substantial changes to the individual components. Our findings suggest that a systematic investigation of design principles in system building is necessary. They also advocate for interdisciplinary collaborations to explore and evaluate additional principles of biological robustness to advance the development of intelligent and adaptable robotic systems.

Hierarchical Policy Learning for Mechanical Search

Feb 28, 2022

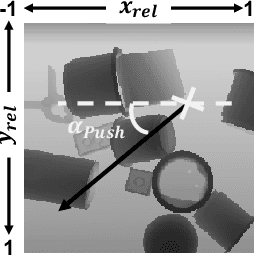

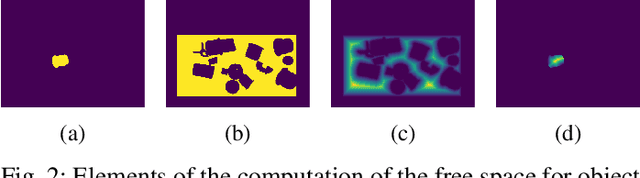

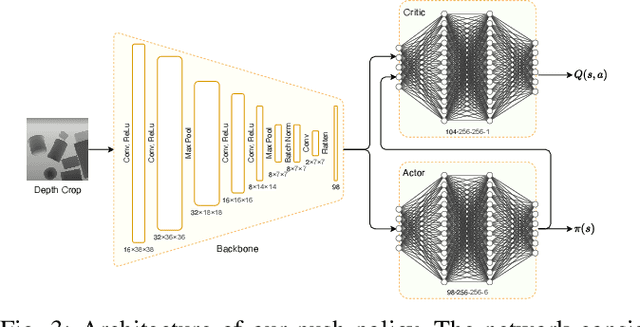

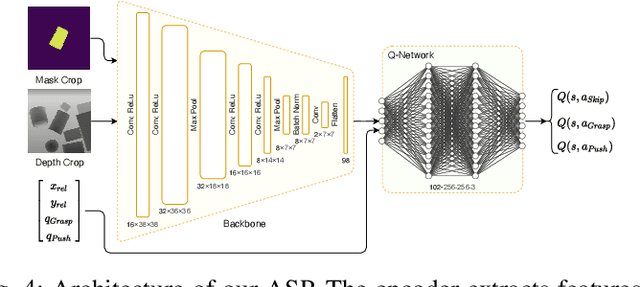

Abstract: Retrieving objects from clutter is a complex task that requires multiple interactions with the environment until the target object can be extracted. These interactions involve executing action primitives such as grasping or pushing, as well as setting priorities for the objects to manipulate and the actions to execute. Mechanical Search (MS) is a framework for object retrieval that uses a heuristic algorithm for pushing and rule-based algorithms for high-level planning. While rule-based policies profit from human intuition in how they work, they often perform sub-optimally. Deep reinforcement learning (RL) has shown great performance in complex tasks such as making decisions by evaluating pixels, which makes it suitable for training policies in the context of object retrieval. In this work, we first formulate the MS problem in a principled way as a hierarchical POMDP. Based on this formulation, we propose a hierarchical policy learning approach for the MS problem. For demonstration, we present two main parameterized sub-policies: a push policy and an action selection policy. When integrated into the hierarchical POMDP's policy, our proposed sub-policies increase the success rate of retrieving the target object from less than 32% to nearly 80%, while reducing the computation time for push actions from multiple seconds to less than 10 milliseconds.
