Vincent Wall

Passive and Active Acoustic Sensing for Soft Pneumatic Actuators

Aug 22, 2022

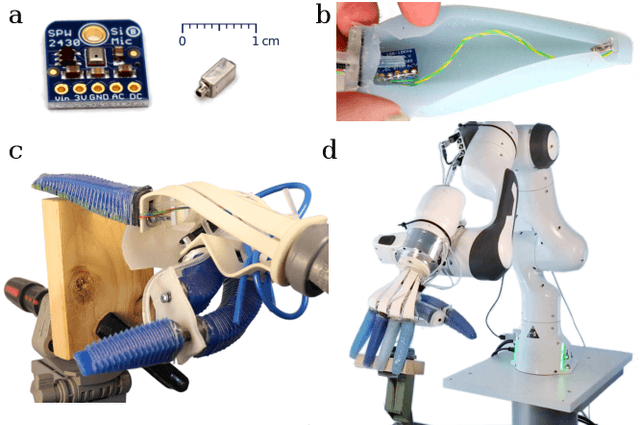

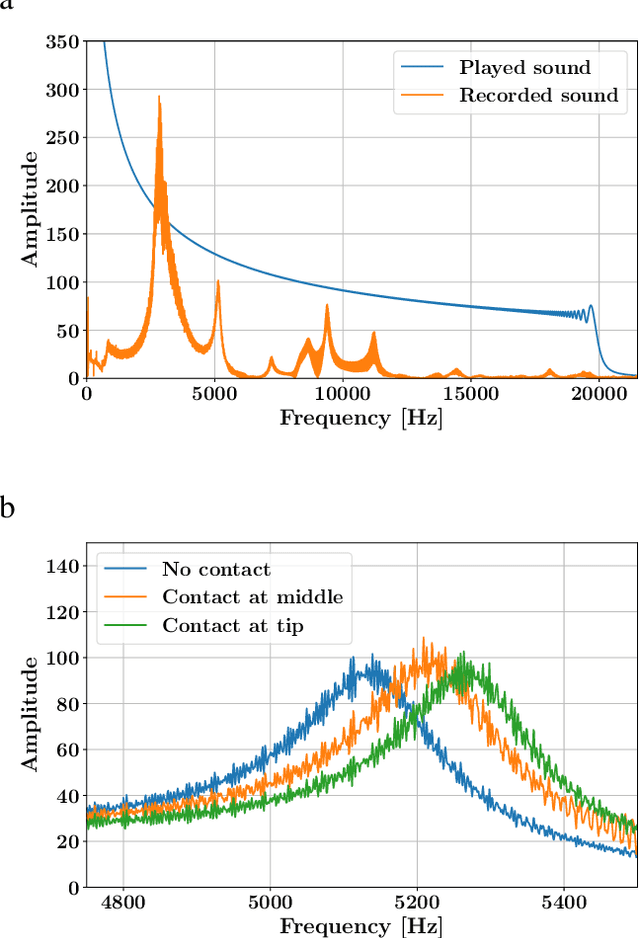

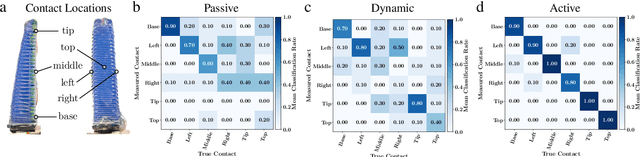

Abstract: We propose a sensorization method for soft pneumatic actuators that uses an embedded microphone and speaker to measure different actuator properties. The physical state of the actuator determines how sound is modulated as it travels through the structure. Using simple machine learning, we create a computational sensor that infers the corresponding state from sound recordings. We demonstrate the acoustic sensor on a soft pneumatic continuum actuator and use it to measure contact locations, contact forces, object materials, actuator inflation, and actuator temperature. We show that the sensor is reliable (average classification rate of 93% for six contact locations), precise (mean spatial accuracy of 3.7 mm), and robust against common disturbances such as background noise. Finally, we compare different sounds and learning methods and achieve the best results with 20 ms of white noise and a support vector classifier as the sensor model.
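The active-sensing idea in this abstract (emit a short noise burst, record how the structure modulates it, classify the state) can be illustrated with a toy simulation. This is only a sketch under loud assumptions, not the authors' implementation: the actuator is replaced by a hypothetical one-pole low-pass filter per state, the sampling rate and state names are invented, normalized autocorrelation stands in for the sound representation, and a nearest-centroid rule stands in for the support vector classifier named in the abstract.

```python
import math
import random

SAMPLE_RATE = 48_000   # assumed; the abstract does not state a sampling rate
BURST_SECONDS = 0.020  # 20 ms of white noise, as in the abstract
N_LAGS = 4             # assumed number of autocorrelation features

# Hypothetical actuator states, each modeled as a one-pole low-pass filter
# with a different smoothing coefficient (a stand-in for the real acoustics).
STATES = {"no_contact": 0.90, "light_contact": 0.50, "firm_contact": 0.15}


def simulate_recording(rng, alpha):
    """Play a white-noise burst through the toy 'actuator' and record it."""
    n = round(SAMPLE_RATE * BURST_SECONDS)
    y, recording = 0.0, []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)            # white-noise excitation sample
        y = alpha * x + (1.0 - alpha) * y  # state-dependent modulation
        recording.append(y)
    return recording


def features(signal):
    """Normalized autocorrelation at lags 1..N_LAGS (gain-invariant)."""
    r0 = sum(v * v for v in signal)
    return [
        sum(signal[i] * signal[i + k] for i in range(len(signal) - k)) / r0
        for k in range(1, N_LAGS + 1)
    ]


def fit_centroids(samples):
    """Average the feature vectors of each label (nearest-centroid model)."""
    return {
        label: [sum(col) / len(col) for col in zip(*vecs)]
        for label, vecs in samples.items()
    }


def predict(centroids, feat):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feat))


def run_demo(seed=0, n_train=10, n_test=10):
    """Train on simulated recordings, return the held-out classification rate."""
    rng = random.Random(seed)
    train = {
        label: [features(simulate_recording(rng, a)) for _ in range(n_train)]
        for label, a in STATES.items()
    }
    centroids = fit_centroids(train)
    correct = total = 0
    for label, a in STATES.items():
        for _ in range(n_test):
            feat = features(simulate_recording(rng, a))
            correct += predict(centroids, feat) == label
            total += 1
    return correct / total


accuracy = run_demo()
print(f"classification rate: {accuracy:.2f}")
```

With a real actuator, `simulate_recording` would be replaced by actual speaker playback and microphone capture, and the classifier by whichever sensor model performs best on the recorded data.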

A Virtual 2D Tactile Array for Soft Actuators Using Acoustic Sensing

Aug 22, 2022

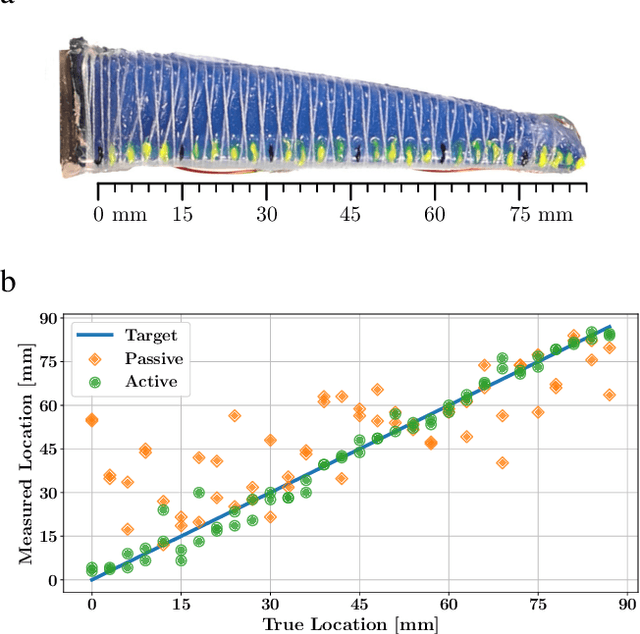

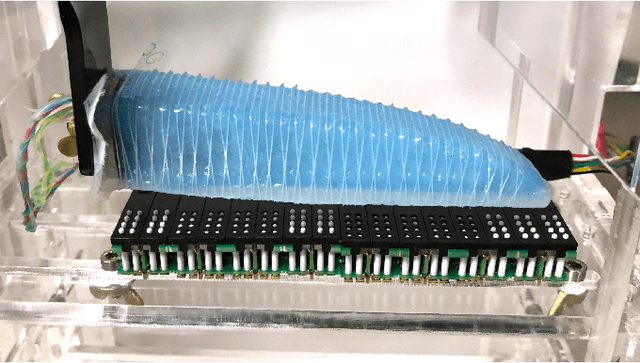

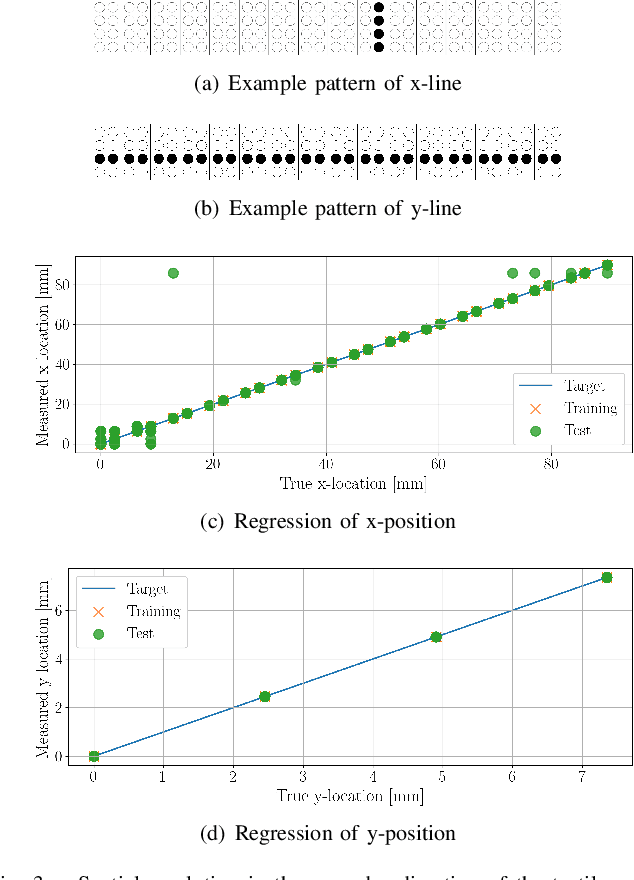

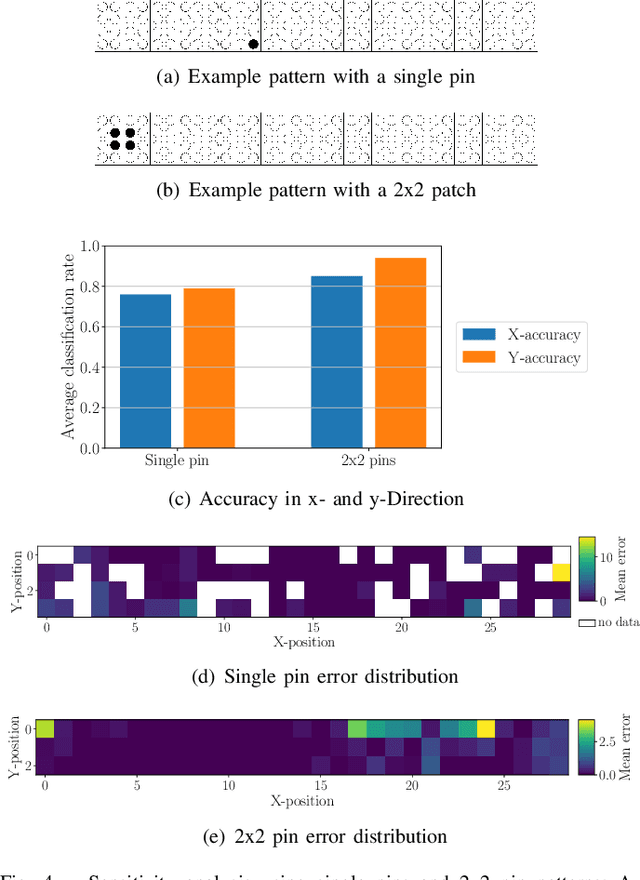

Abstract: We create a virtual 2D tactile array for soft pneumatic actuators using embedded audio components. We detect contact-specific changes in sound modulation to infer tactile information. We evaluate different sound representations and learning methods to detect even small contact variations. We demonstrate the acoustic tactile sensor array on a PneuFlex actuator and use a Braille display to individually control the contact of 29x4 pins with the actuator's 90x10 mm palmar surface. Evaluating the spatial resolution, the acoustic sensor localizes edges in the x- and y-directions with root-mean-square regression errors of 1.67 mm and 0.0 mm, respectively. Even light contacts of a single Braille pin with a lifting force of 0.17 N are measured with high accuracy. Finally, we demonstrate the sensor's sensitivity to complex contact shapes by successfully reading the 26 letters of the Braille alphabet from a single display cell with a classification rate of 88%.
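For readers unfamiliar with the metric, the root-mean-square regression error quoted above is the square root of the mean squared difference between predicted and true positions. The sketch below shows the computation; the edge positions are made-up illustration values, not data from the paper.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between two equal-length sequences."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical edge positions along x, in mm (illustration only).
true_x = [10.0, 30.0, 50.0, 70.0]
pred_x = [11.0, 29.0, 52.0, 70.0]

error_x = rmse(pred_x, true_x)
print(f"RMSE in x: {error_x:.2f} mm")
```

An RMSE of exactly 0.0 arises only when every prediction matches its target, as `rmse(true_x, true_x)` would show.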
