Annie Feng

Guided Exploration for Efficient Relational Model Learning

Feb 10, 2025

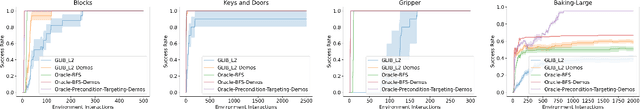

Abstract:Efficient exploration is critical for learning relational models in large-scale environments with complex, long-horizon tasks. Random exploration methods often collect redundant or irrelevant data, limiting their ability to learn accurate relational models of the environment. Goal-literal babbling (GLIB) improves upon random exploration by setting and planning to novel goals, but its reliance on random actions and random novel goal selection limits its scalability to larger domains. In this work, we identify the principles underlying efficient exploration in relational domains: (1) operator initialization with demonstrations that cover the distinct lifted effects necessary for planning and (2) refining preconditions to collect maximally informative transitions by selecting informative goal-action pairs and executing plans to them. To demonstrate these principles, we introduce Baking-Large, a challenging domain with extensive state-action spaces and long-horizon tasks. We evaluate methods using oracle-driven demonstrations for operator initialization and precondition-targeting guidance to efficiently gather critical transitions. Experiments show that both the oracle demonstrations and precondition-targeting oracle guidance significantly improve sample efficiency and generalization, paving the way for future methods to use these principles to efficiently learn accurate relational models in complex domains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge