Ting Su

LocalSearchBench: Benchmarking Agentic Search in Real-World Local Life Services

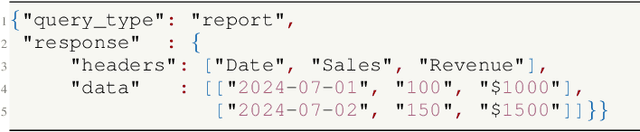

Dec 08, 2025

Abstract: Recent advances in large reasoning models (LRMs) have enabled agentic search systems to perform complex multi-step reasoning across multiple sources. However, most studies focus on general information retrieval and rarely explore vertical domains with unique challenges. In this work, we focus on local life services and introduce LocalSearchBench, which encompasses diverse and complex business scenarios. Real-world queries in this domain are often ambiguous and require multi-hop reasoning across merchants and products, so they remain challenging and not fully addressed. As the first comprehensive benchmark for agentic search in local life services, LocalSearchBench includes over 150,000 high-quality entries from various cities and business types. We construct 300 multi-hop QA tasks based on real user queries, challenging agents to understand questions and retrieve information in multiple steps. We also develop LocalPlayground, a unified environment integrating multiple tools for agent interaction. Experiments show that even state-of-the-art LRMs struggle on LocalSearchBench: the best model (DeepSeek-V3.1) achieves only 34.34% correctness, and most models have issues with completeness (average 77.33%) and faithfulness (average 61.99%). This highlights the need for specialized benchmarks and domain-specific agent training in local life services. Code, Benchmark, and Leaderboard are available at localsearchbench.github.io.

Intrinsic Memory Agents: Heterogeneous Multi-Agent LLM Systems through Structured Contextual Memory

Aug 12, 2025

Abstract: Multi-agent systems built on Large Language Models (LLMs) show exceptional promise for complex collaborative problem-solving, yet they face fundamental challenges stemming from context window limitations that impair memory consistency, role adherence, and procedural integrity. This paper introduces Intrinsic Memory Agents, a novel framework that addresses these limitations through structured agent-specific memories that evolve intrinsically with agent outputs. Specifically, our method maintains role-aligned memory templates that preserve specialized perspectives while focusing on task-relevant information. We benchmark our approach on the PDDL dataset, comparing its performance to existing state-of-the-art multi-agentic memory approaches and showing an improvement of 38.6% with the highest token efficiency. In an additional evaluation on a complex data pipeline design task, we demonstrate that our approach produces higher-quality designs across 5 metrics (scalability, reliability, usability, cost-effectiveness, and documentation), with additional qualitative evidence of the improvements. Our findings suggest that addressing memory limitations through structured, intrinsic approaches can improve the capabilities of multi-agent LLM systems on structured planning tasks.

Automatic Dataset Generation for Knowledge Intensive Question Answering Tasks

May 20, 2025

Abstract: A question-answering (QA) system searches for suitable answers within a knowledge base. Current QA systems struggle with queries requiring complex reasoning or real-time knowledge integration. They are often supplemented with retrieval techniques over a data source, such as Retrieval-Augmented Generation (RAG). However, RAG continues to face challenges in handling complex reasoning and logical connections between multiple sources of information. A novel approach for enhancing Large Language Models (LLMs) in knowledge-intensive QA tasks is presented through the automated generation of context-based QA pairs. This methodology leverages LLMs to create fine-tuning data, reducing reliance on human labelling and improving model comprehension and reasoning capabilities. The proposed system includes an automated QA generator and a model fine-tuner, evaluated using perplexity, ROUGE, BLEU, and BERTScore. Comprehensive experiments demonstrate improvements in logical coherence and factual accuracy, with implications for developing adaptable Artificial Intelligence (AI) systems. Mistral-7b-v0.3 outperforms Llama-3-8b, with BERT F1, BLEU, and ROUGE scores of 0.858, 0.172, and 0.260 for the LLM-generated QA pairs, compared to scores of 0.836, 0.083, and 0.139 for the human-annotated QA pairs.

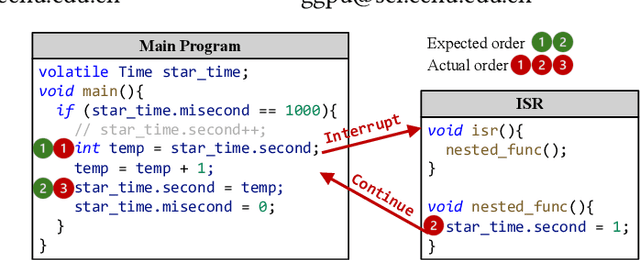

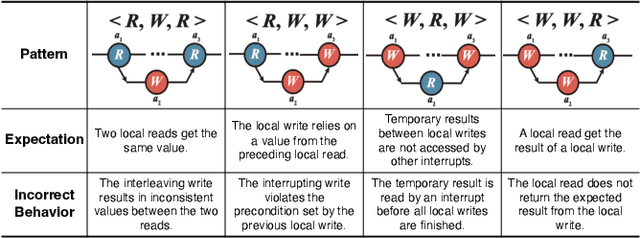

Automated detection of atomicity violations in large-scale systems

Apr 01, 2025

Abstract: Atomicity violations in interrupt-driven programs pose a significant threat to software safety in critical systems. These violations occur when the execution sequence of operations on shared resources is disrupted by asynchronous interrupts. Detecting atomicity violations is challenging due to the vast program state space, application-level code dependencies, and complex domain-specific knowledge. We propose Clover, a hybrid framework that integrates static analysis with large language model (LLM) agents to detect atomicity violations in real-world programs. Clover first performs static analysis to extract critical code snippets and operation information. It then initiates a multi-agent process, where the expert agent leverages domain-specific knowledge to detect atomicity violations, which are subsequently validated by the judge agent. Evaluations on RaceBench 2.1, SV-COMP, and RWIP demonstrate that Clover achieves a precision/recall of 92.3%/86.6%, outperforming existing approaches by 27.4-118.2% on F1-score.
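
For readers who want to relate the quoted precision and recall to an F1-score, the short sketch below applies the standard harmonic-mean definition. It assumes the 92.3%/86.6% figures are aggregate values from a single evaluation; the paper's per-benchmark F1 values may differ, and the function name `f1_score` is only illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both inputs are zero
    return 2 * precision * recall / (precision + recall)


# Figures quoted in the abstract, converted from percentages to fractions.
# Assumption: they are aggregate precision/recall over the same evaluation.
clover_f1 = f1_score(0.923, 0.866)
print(f"F1 = {clover_f1:.3f}")  # F1 = 0.894
```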

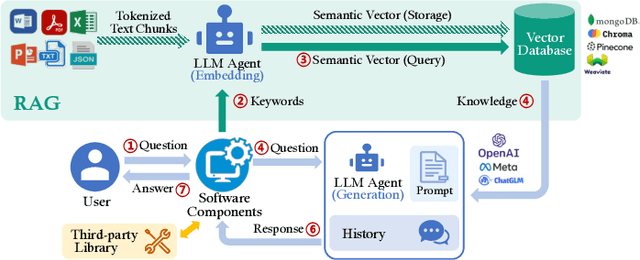

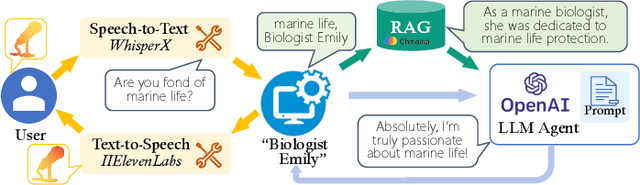

Vortex under Ripplet: An Empirical Study of RAG-enabled Applications

Jul 06, 2024

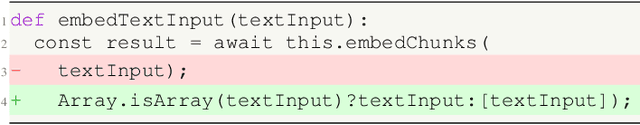

Abstract: Large language models (LLMs) enhanced by retrieval-augmented generation (RAG) provide effective solutions in various application scenarios. However, developers face challenges in integrating RAG-enhanced LLMs into software systems, due to a lack of interface specifications, requirements from the software context, and complicated system management. In this paper, we manually studied 100 open-source applications that incorporate RAG-enhanced LLMs, together with their issue reports. We found that more than 98% of applications contain multiple integration defects that harm software functionality, efficiency, and security. We also generalized 19 defect patterns and proposed guidelines to tackle them. We hope this work can aid LLM-enabled software development and motivate future research.

Model-driven CT reconstruction algorithm for nano-resolution X-ray phase contrast imaging

May 14, 2023

Abstract: The limited imaging performance of low-density objects in a zone-plate-based nano-resolution hard X-ray computed tomography (CT) system can be significantly improved by accessing the phase information. To do so, a grating-based Lau interferometer needs to be integrated. However, the nano-resolution phase contrast CT, denoted as nPCT, reconstructed from such an interferometer system may suffer resolution loss due to the strong signal diffraction. To perform accurate nPCT image reconstruction directly from these diffracted projections, a new model-driven nPCT image reconstruction algorithm is developed. First, the diffraction procedure is mathematically modeled as a matrix B, from which the projections without signal splitting can be recovered by inversion. Second, a penalized weighted least-squares model with total variation (PWLS-TV) is employed to denoise these projections. Finally, nPCT images with high resolution and high accuracy are reconstructed using the filtered-back-projection (FBP) method. Numerical simulations demonstrate that this algorithm is able to deal with diffracted projections having any splitting distances. Interestingly, results reveal that nPCT images with higher signal-to-noise ratio (SNR) can be reconstructed from projections with larger signal splittings. In conclusion, a novel model-driven nPCT image reconstruction algorithm with high accuracy and robustness is verified for the Lau-interferometer-based hard X-ray nPCT imaging system.

Entity-Assisted Language Models for Identifying Check-worthy Sentences

Nov 19, 2022

Abstract: We propose a new uniform framework for text classification and ranking that can automate the process of identifying check-worthy sentences in political debates and speech transcripts. Our framework combines the semantic analysis of the sentences with additional entity embeddings obtained through the identified entities within the sentences. In particular, we analyse the semantic meaning of each sentence using state-of-the-art neural language models such as BERT, ALBERT, and RoBERTa, while embeddings for entities are obtained from knowledge graph (KG) embedding models. Specifically, we instantiate our framework using five different language models, entity embeddings obtained from six different KG embedding models, as well as two combination methods, leading to several Entity-Assisted neural language models. We extensively evaluate the effectiveness of our framework using two publicly available datasets from the CLEF 2019 & 2020 CheckThat! Labs. Our results show that the neural language models significantly outperform traditional TF.IDF and LSTM methods. In addition, we show that the ALBERT model is consistently the most effective model among all the tested neural language models. Our entity embeddings significantly outperform other existing approaches from the literature that are based on similarity and relatedness scores between the entities in a sentence, when used alongside a KG embedding.
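
As a rough illustration of what combining a sentence representation with entity embeddings can look like, the sketch below concatenates a sentence vector with the mean of its entity vectors. The abstract does not name its two combination methods, so concatenation with mean pooling is an assumption made here purely for illustration; the function names and toy vectors are hypothetical and stand in for real language-model and KG embeddings.

```python
from typing import List

Vector = List[float]


def mean_vector(vectors: List[Vector], dim: int) -> Vector:
    """Average a list of equal-length vectors; return zeros if the list is empty."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def entity_assisted_features(sentence_vec: Vector,
                             entity_vecs: List[Vector],
                             entity_dim: int) -> Vector:
    """Concatenate the sentence vector with the mean-pooled entity vector.

    Assumption: concatenation is one plausible combination method, not
    necessarily one of the two methods used in the paper.
    """
    return list(sentence_vec) + mean_vector(entity_vecs, entity_dim)


# Toy inputs standing in for a language-model sentence embedding and
# KG embeddings of two entities mentioned in the sentence.
sentence_vec = [0.2, -0.1, 0.4]
entity_vecs = [[1.0, 0.0], [0.0, 1.0]]
features = entity_assisted_features(sentence_vec, entity_vecs, entity_dim=2)
print(features)  # [0.2, -0.1, 0.4, 0.5, 0.5]
```

The combined vector would then be passed to a classifier or ranker; a sentence with no recognised entities falls back to a zero entity vector in this sketch.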

Leveraging Users' Social Network Embeddings for Fake News Detection on Twitter

Nov 19, 2022

Abstract: Social networks (SNs) are increasingly important sources of news for many people. The online connections made by users allow information to spread more easily than traditional news media (e.g., newspaper, television). However, they also make the spread of fake news easier than in traditional media, especially through the users' social network connections. In this paper, we focus on investigating whether the SN users' connection structure can aid fake news detection on Twitter. In particular, we propose to embed users based on their follower or friendship networks on the Twitter platform, so as to identify the groups that users form. Indeed, by applying unsupervised graph embedding methods on the graphs from the Twitter users' social network connections, we observe that users engaged with fake news are more tightly clustered together than users only engaged in factual news. Thus, we hypothesise that the embedded users' network can help detect fake news effectively. Through extensive experiments using a publicly available Twitter dataset, our results show that applying graph embedding methods on SNs, using the user connections as network information, can indeed classify fake news more effectively than most language-based approaches. Specifically, we observe a significant improvement over using only the textual information (i.e., TF.IDF or a BERT language model), as well as over models that deploy both advanced textual features (i.e., stance detection) and complex network features (e.g., users' network, publishers' cross-citations). We conclude that the Twitter users' friendship and followers network information can significantly outperform language-based approaches, as well as the existing state-of-the-art fake news detection models that use a more sophisticated network structure, in classifying fake news on Twitter.

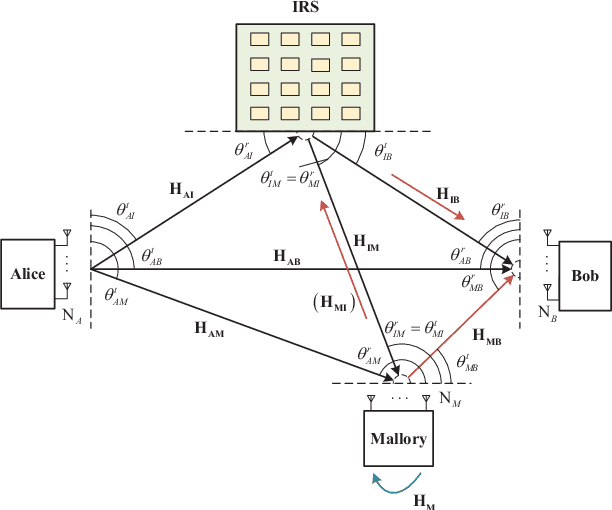

High-performance Estimation of Jamming Covariance Matrix for IRS-aided Directional Modulation Network with a Malicious Attacker

Oct 22, 2021

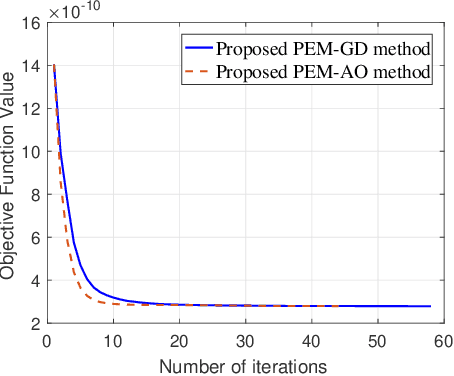

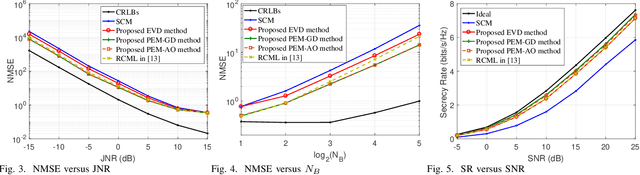

Abstract: In this paper, we investigate the anti-jamming problem of a directional modulation (DM) system aided by an intelligent reflecting surface (IRS). As an efficient tool to combat malicious jamming, receive beamforming (RBF) is usually designed to lie in the null space of the jamming channel or covariance matrix from Mallory to Bob. It is therefore necessary to estimate the receive jamming covariance matrix (JCM) at Bob. To achieve a precise JCM estimate, three JCM estimation methods are proposed: eigenvalue decomposition (EVD), a parametric estimation method by gradient descent (PEM-GD), and a parametric estimation method by alternating optimization (PEM-AO). Here, the proposed EVD operates under a rank-2 constraint on the JCM. The PEM-GD method fully exploits the structural features of the JCM, and PEM-AO reduces the computational complexity of the former via dimensionality reduction. The simulation results show that in low and medium jamming-noise ratio (JNR) regions, the proposed three methods perform better than the existing sample covariance matrix method. The proposed PEM-GD and PEM-AO outperform the EVD method and the existing clutter and disturbance covariance estimator RCML.
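
The baseline that the proposed estimators are compared against, the sample covariance matrix, can be sketched in a few lines. This is the generic textbook estimator R = (1/N) Σ y yᴴ, not any of the proposed EVD, PEM-GD, or PEM-AO methods; the snapshot values below are made up, and treating each snapshot as a received vector at Bob is an assumption for illustration.

```python
from typing import List

Matrix = List[List[complex]]


def sample_covariance(snapshots: List[List[complex]]) -> Matrix:
    """Sample covariance R = (1/N) * sum_n y_n y_n^H over N received snapshots."""
    n = len(snapshots)
    m = len(snapshots[0])
    cov = [[0j] * m for _ in range(m)]
    for y in snapshots:
        for i in range(m):
            for j in range(m):
                # Outer product entry y[i] * conj(y[j])
                cov[i][j] += y[i] * y[j].conjugate()
    return [[c / n for c in row] for row in cov]


# Two hypothetical 2-antenna snapshots (illustrative values only).
snapshots = [[1 + 1j, 2 + 0j], [0 + 1j, 1 - 1j]]
R = sample_covariance(snapshots)
for row in R:
    print(row)
```

The result is Hermitian by construction (`R[i][j]` equals the conjugate of `R[j][i]`), which is the structure that null-space receive beamforming relies on.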

Quality Assessment of DIBR-synthesized views: An Overview

Nov 16, 2019

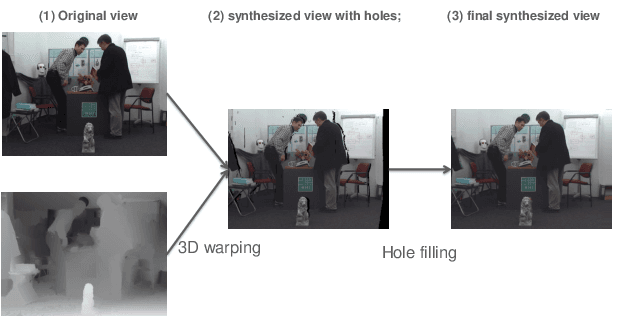

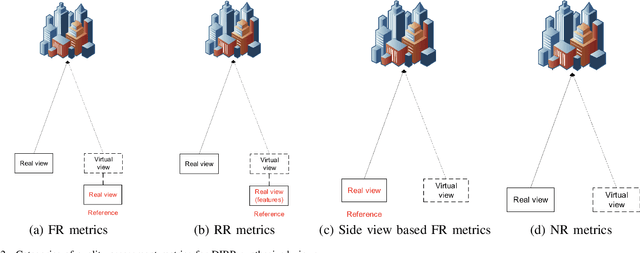

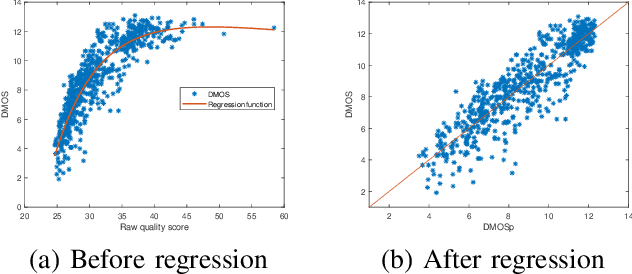

Abstract: Depth-Image-Based Rendering (DIBR) is one of the fundamental techniques for generating new views in 3D video applications, such as Multi-View Videos (MVV), Free-Viewpoint Videos (FVV), and Virtual Reality (VR). However, the quality assessment of DIBR-synthesized views is quite different from that of traditional 2D images/videos. In recent years, several efforts have been made on this topic, but a detailed survey is still lacking in the literature. In this paper, we provide a comprehensive survey of current approaches to quality assessment for DIBR-synthesized views. The currently accessible datasets of DIBR-synthesized views are reviewed first, followed by a summary and analysis of the representative state-of-the-art objective metrics. Then, the performances of different objective metrics are evaluated and discussed on all available datasets. Finally, we discuss the potential challenges and suggest possible directions for future research.
