Xia Li

LIDILEM

Human-in-Context: Unified Cross-Domain 3D Human Motion Modeling via In-Context Learning

Aug 14, 2025

Abstract:This paper aims to model 3D human motion across domains, where a single model is expected to handle multiple modalities, tasks, and datasets. Existing cross-domain models often rely on domain-specific components and multi-stage training, which limits their practicality and scalability. To overcome these challenges, we propose a new setting to train a unified cross-domain model through a single process, eliminating the need for domain-specific components and multi-stage training. We first introduce Pose-in-Context (PiC), which leverages in-context learning to create a pose-centric cross-domain model. While PiC generalizes across multiple pose-based tasks and datasets, it encounters difficulties with modality diversity, prompting strategy, and contextual dependency handling. We thus propose Human-in-Context (HiC), an extension of PiC that broadens generalization across modalities, tasks, and datasets. HiC combines pose and mesh representations within a unified framework, expands task coverage, and incorporates larger-scale datasets. Additionally, HiC introduces a max-min similarity prompt sampling strategy to enhance generalization across diverse domains and a network architecture with dual-branch context injection for improved handling of contextual dependencies. Extensive experimental results show that HiC performs better than PiC in terms of generalization, data scale, and performance across a wide range of domains. These results demonstrate the potential of HiC for building a unified cross-domain 3D human motion model with improved flexibility and scalability. The source code and models are available at https://github.com/BradleyWang0416/Human-in-Context.

Designing conflict-based communicative tasks in Teaching Chinese as a Foreign Language with ChatGPT

Jun 10, 2025

Abstract:In developing the teaching program for a course in Oral Expression in Teaching Chinese as a Foreign Language at the university level, the teacher designs communicative tasks based on conflicts to encourage learners to engage in interactive dynamics and develop their oral interaction skills. During the design of these tasks, the teacher uses ChatGPT to assist in finalizing the program. This article aims to present the key characteristics of the interactions between the teacher and ChatGPT during this program development process, as well as to examine the use of ChatGPT and its impacts in this specific context.

* in French language

Automatic Pruning via Structured Lasso with Class-wise Information

Feb 13, 2025

Abstract:Most pruning methods concentrate on unimportant filters of neural networks. However, they face the loss of statistical information due to a lack of consideration for class-wise data. In this paper, from the perspective of leveraging precise class-wise information for model pruning, we utilize structured lasso with guidance from Information Bottleneck theory. Our approach ensures that statistical information is retained during the pruning process. With these techniques, we introduce two innovative adaptive network pruning schemes: sparse graph-structured lasso pruning with Information Bottleneck (sGLP-IB) and sparse tree-guided lasso pruning with Information Bottleneck (sTLP-IB). The key aspect is pruning model filters using sGLP-IB and sTLP-IB to better capture class-wise relatedness. Compared to multiple state-of-the-art methods, our approaches demonstrate superior performance across three datasets and six model architectures in extensive experiments. For instance, using the VGG16 model on the CIFAR-10 dataset, we achieve a parameter reduction of 85%, a decrease in FLOPs by 61%, and maintain an accuracy of 94.10% (0.14% higher than the original model); using the ResNet architecture on ImageNet, we reduce the parameters by 55% with the accuracy at 76.12% (a drop of only 0.03%). In summary, we successfully reduce model size and computational resource usage while maintaining accuracy. Our code is available at https://anonymous.4open.science/r/IJCAI-8104.
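
As a rough illustration of how a structured (group) lasso penalty removes whole filters, the sketch below applies the standard group soft-thresholding step with one group per filter. It is not the sGLP-IB or sTLP-IB method from the abstract: the graph and tree structures and the Information Bottleneck guidance are omitted, and the function names, the flat-list filter layout, and the threshold value are assumptions made for this example.

```python
import math


def group_soft_threshold(filters, threshold):
    """Apply the group-lasso proximal step, treating each filter as one group.

    filters: list of filters, each given as a flat list of float weights
    (a hypothetical layout chosen for this sketch).
    """
    shrunk = []
    for weights in filters:
        norm = math.sqrt(sum(w * w for w in weights))
        # A filter whose norm is at or below the threshold is zeroed entirely;
        # larger filters are shrunk toward zero but keep their direction.
        scale = max(0.0, 1.0 - threshold / norm) if norm > 0.0 else 0.0
        shrunk.append([w * scale for w in weights])
    return shrunk


def surviving_filters(filters):
    """Return the indices of filters that still have a nonzero weight."""
    return [i for i, weights in enumerate(filters) if any(w != 0.0 for w in weights)]


# Toy example: a weak filter, a strong filter, and an already-empty filter.
filters = [[0.1, 0.1, 0.1, 0.1], [1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
pruned = group_soft_threshold(filters, threshold=0.5)
kept = surviving_filters(pruned)
```

Because the penalty acts on the norm of each filter rather than on individual weights, weak filters vanish as a unit, which is what makes the resulting sparsity usable for structured pruning.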

One-shot Federated Learning Methods: A Practical Guide

Feb 13, 2025

Abstract:One-shot Federated Learning (OFL) is a distributed machine learning paradigm that constrains client-server communication to a single round, addressing privacy and communication overhead issues associated with multiple rounds of data exchange in traditional Federated Learning (FL). OFL demonstrates the practical potential for integration with future approaches that require collaborative training models, such as large language models (LLMs). However, current OFL methods face two major challenges: data heterogeneity and model heterogeneity, which result in subpar performance compared to conventional FL methods. Worse still, despite numerous studies addressing these limitations, a comprehensive summary is still lacking. To address these gaps, this paper presents a systematic analysis of the challenges faced by OFL and thoroughly reviews the current methods. We also offer an innovative categorization method and analyze the trade-offs of various techniques. Additionally, we discuss the most promising future directions and the technologies that should be integrated into the OFL field. This work aims to provide guidance and insights for future research.

Implementation of an Asymmetric Adjusted Activation Function for Class Imbalance Credit Scoring

Jan 21, 2025

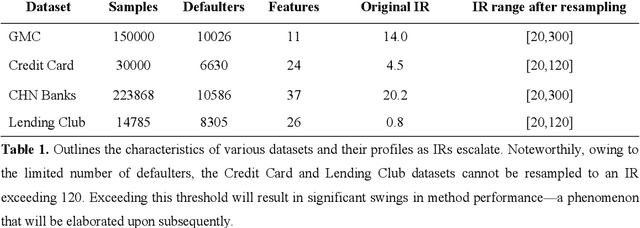

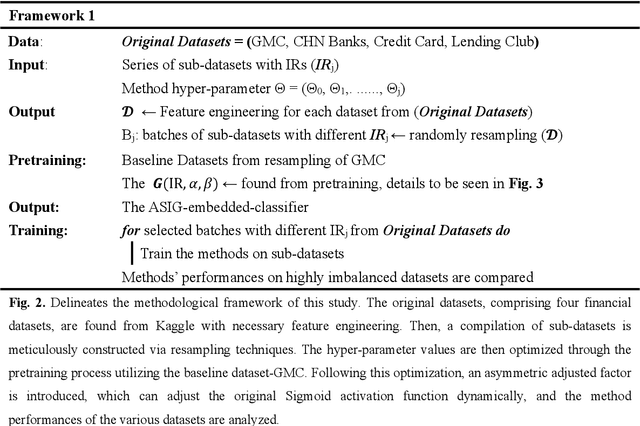

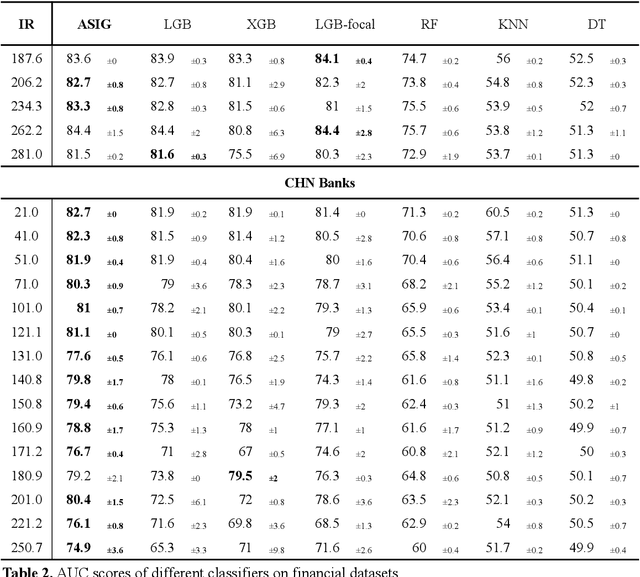

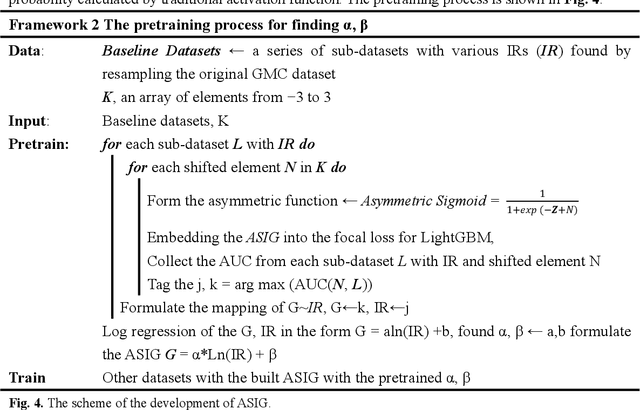

Abstract:Credit scoring is a systematic approach to evaluate a borrower's probability of default (PD) on a bank loan. The data associated with such scenarios are characteristically imbalanced, complicating binary classification owing to the often-underestimated cost of misclassification during the classifier's learning process. Considering the high imbalance ratio (IR) of these datasets, we introduce an innovative yet straightforward optimized activation function: a Sigmoid activation function with an embedded IR-dependent asymmetric adjustment factor (ASIG). Embedding ASIG makes the sensitive margin of the Sigmoid function auto-adjustable, depending on how imbalanced the dataset is, thereby giving the activation function an asymmetric characteristic that prevents the underrepresentation of the minority class (positive samples) during the classifier's learning process. The experimental results show that the ASIG-embedded classifier outperforms traditional classifiers on datasets across wide-ranging IRs in the downstream credit-scoring task. The algorithm also shows robustness and stability, even when the IR is ultra-high. Therefore, the algorithm provides a competitive alternative in the financial industry, especially in credit scoring, able to process highly imbalanced data effectively.

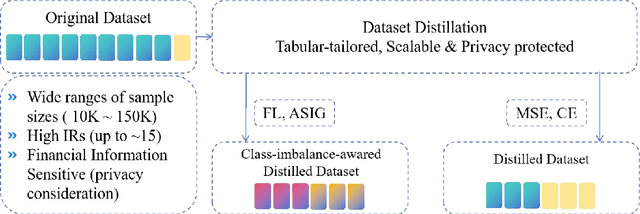

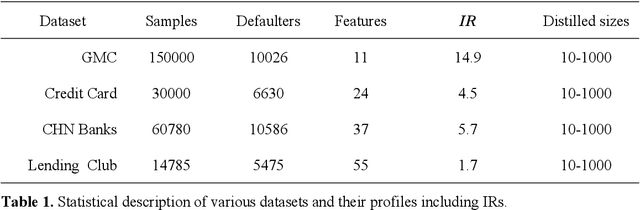

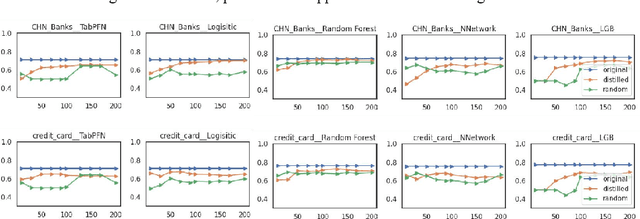

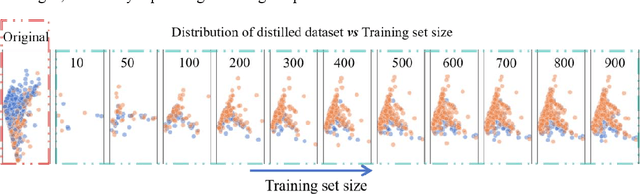

Class-Imbalanced-Aware Adaptive Dataset Distillation for Scalable Pretrained Model on Credit Scoring

Jan 18, 2025

Abstract:The advent of artificial intelligence has significantly enhanced credit scoring technologies. Despite the remarkable efficacy of advanced deep learning models, mainstream adoption continues to favor tree-structured models due to their robust predictive performance on tabular data. Although pretrained models have seen considerable development, their application within the financial realm predominantly revolves around question-answering tasks, and the use of such models for tabular-structured credit scoring datasets remains largely unexplored. Tabular-oriented large models, such as TabPFN, have made the application of large models in credit scoring feasible, albeit only with limited sample sizes. This paper provides a novel framework that combines a tabular-tailored dataset distillation technique with the pretrained model, making TabPFN scalable. Furthermore, although class imbalance is common in financial datasets, its influence during dataset distillation has not been explored. We thus integrate imbalance-aware techniques during dataset distillation, resulting in improved performance on financial datasets (e.g., a 2.5% enhancement in AUC). This study presents a novel framework for scaling up the application of large pretrained models on financial tabular datasets and offers a comparative analysis of the influence of class imbalance on the dataset distillation process. We believe this approach can broaden the applications and downstream tasks of large models in the financial domain.

Image Gradient-Aided Photometric Stereo Network

Dec 16, 2024

Abstract:Photometric stereo (PS) endeavors to ascertain surface normals using shading clues from photometric images under various illuminations. Recent deep learning-based PS methods often overlook the complexity of object surfaces. These neural network models, which exclusively rely on photometric images for training, often produce blurred results in high-frequency regions characterized by local discontinuities, such as wrinkles and edges with significant gradient changes. To address this, we propose the Image Gradient-Aided Photometric Stereo Network (IGA-PSN), a dual-branch framework extracting features from both photometric images and their gradients. Furthermore, we incorporate an hourglass regression network along with supervision to regularize normal regression. Experiments on DiLiGenT benchmarks show that IGA-PSN outperforms previous methods in surface normal estimation, achieving a mean angular error of 6.46 while preserving textures and geometric shapes in complex regions.

* 13 pages, 5 figures, published to Springer
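
To make the idea of feeding image gradients alongside photometric images concrete, the sketch below computes horizontal and vertical finite-difference gradients of a single grayscale image and bundles them with the image as three input planes. The abstract does not say which gradient operator IGA-PSN uses or how its two branches consume the inputs, so the central-difference scheme, the function names, and the stacked layout are all assumptions for illustration.

```python
def image_gradients(image):
    """Return (gx, gy) finite-difference gradients of a 2D grayscale image.

    Central differences are used in the interior and one-sided differences at
    the borders. image is a list of rows of floats, at least 2x2 in size.
    """
    height, width = len(image), len(image[0])
    gx = [[0.0] * width for _ in range(height)]
    gy = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Clamp neighbor indices so border pixels use a one-sided difference.
            left, right = max(c - 1, 0), min(c + 1, width - 1)
            up, down = max(r - 1, 0), min(r + 1, height - 1)
            gx[r][c] = (image[r][right] - image[r][left]) / (right - left)
            gy[r][c] = (image[down][c] - image[up][c]) / (down - up)
    return gx, gy


def stack_with_gradients(image):
    """Bundle the image with its gradients as three planes (hypothetical layout)."""
    gx, gy = image_gradients(image)
    return [image, gx, gy]


# Toy image with a vertical edge between the second and third columns.
image = [[0.0, 0.0, 1.0, 1.0],
         [0.0, 0.0, 1.0, 1.0]]
planes = stack_with_gradients(image)
gx, gy = planes[1], planes[2]
```

The gradient planes are nonzero only around the edge, which is the kind of high-frequency cue that intensity values alone tend to blur.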

DECO: Life-Cycle Management of Enterprise-Grade Chatbots

Dec 08, 2024

Abstract:Software engineers frequently grapple with the challenge of accessing disparate documentation and telemetry data, including Troubleshooting Guides (TSGs), incident reports, code repositories, and various internal tools developed by multiple stakeholders. While on-call duties are inevitable, incident resolution becomes even more daunting due to the obscurity of legacy sources and the pressures of strict time constraints. To enhance the efficiency of on-call engineers (OCEs) and streamline their daily workflows, we introduced DECO -- a comprehensive framework for developing, deploying, and managing enterprise-grade chatbots tailored to improve productivity in engineering routines. This paper details the design and implementation of the DECO framework, emphasizing its innovative NL2SearchQuery functionality and a hierarchical planner. These features support efficient and customized retrieval-augmented-generation (RAG) algorithms that not only extract relevant information from diverse sources but also select the most pertinent toolkits in response to user queries. This enables the addressing of complex technical questions and provides seamless, automated access to internal resources. Additionally, DECO incorporates a robust mechanism for converting unstructured incident logs into user-friendly, structured guides, effectively bridging the documentation gap. Feedback from users underscores DECO's pivotal role in simplifying complex engineering tasks, accelerating incident resolution, and bolstering organizational productivity. Since its launch in September 2023, DECO has demonstrated its effectiveness through extensive engagement, with tens of thousands of interactions from hundreds of active users across multiple organizations within the company.

Graph Retention Networks for Dynamic Graphs

Nov 18, 2024

Abstract:In this work, we propose Graph Retention Network as a unified architecture for deep learning on dynamic graphs. The GRN extends the core computational manner of retention to dynamic graph data as graph retention, which empowers the model with three key computational paradigms that enable training parallelism, $O(1)$ low-cost inference, and long-term batch training. This architecture achieves an optimal balance of effectiveness, efficiency, and scalability. Extensive experiments conducted on benchmark datasets present the superior performance of the GRN in both edge-level prediction and node-level classification tasks. Our architecture achieves cutting-edge results while maintaining lower training latency, reduced GPU memory consumption, and up to an 86.7x improvement in inference throughput compared to baseline models. The GRNs have demonstrated strong potential to become a widely adopted architecture for dynamic graph learning tasks. Code will be available at https://github.com/Chandler-Q/GraphRetentionNet.
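
The link between training parallelism and $O(1)$ inference can be seen in the plain sequence form of retention, which has a parallel formulation and an equivalent recurrent one. The sketch below implements both for a single head without normalization or positional rotation. It illustrates ordinary sequence retention only: how the GRN adapts this to dynamic graphs is not described in the abstract, and the function names and toy inputs are assumptions.

```python
def retention_parallel(Q, K, V, gamma):
    """Parallel form: out[n] = sum over m <= n of gamma**(n - m) * (Q[n] . K[m]) * V[m]."""
    steps, d_v = len(Q), len(V[0])
    out = []
    for n in range(steps):
        row = [0.0] * d_v
        for m in range(n + 1):
            score = gamma ** (n - m) * sum(q * k for q, k in zip(Q[n], K[m]))
            for j in range(d_v):
                row[j] += score * V[m][j]
        out.append(row)
    return out


def retention_recurrent(Q, K, V, gamma):
    """Recurrent form: state = gamma * state + outer(K[n], V[n]); out[n] = Q[n] @ state."""
    d_k, d_v = len(K[0]), len(V[0])
    # The state has a fixed d_k x d_v size, so each step costs the same
    # regardless of how many earlier steps have been processed.
    state = [[0.0] * d_v for _ in range(d_k)]
    out = []
    for q, k, v in zip(Q, K, V):
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] = gamma * state[i][j] + k[i] * v[j]
        out.append([sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return out


# Toy inputs: three time steps, key dimension 2, value dimension 2.
Q = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
K = [[1.0, 2.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
parallel_out = retention_parallel(Q, K, V, gamma=0.9)
recurrent_out = retention_recurrent(Q, K, V, gamma=0.9)
```

Both functions produce the same outputs; the parallel form suits training over a whole sequence at once, while the recurrent form carries only a constant-size state between steps at inference time.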

ED-ViT: Splitting Vision Transformer for Distributed Inference on Edge Devices

Oct 15, 2024

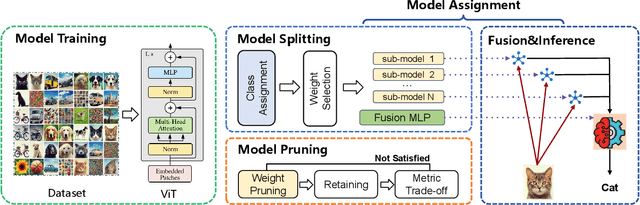

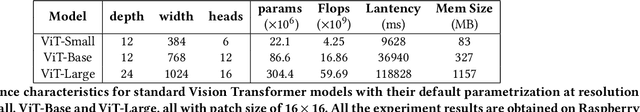

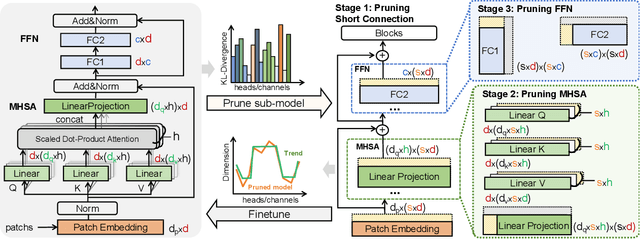

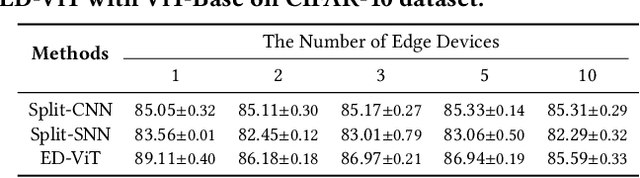

Abstract:Deep learning models are increasingly deployed on resource-constrained edge devices for real-time data analytics. In recent years, Vision Transformer models and their variants have demonstrated outstanding performance across various computer vision tasks. However, their high computational demands and inference latency pose significant challenges for model deployment on resource-constrained edge devices. To address this issue, we propose a novel Vision Transformer splitting framework, ED-ViT, designed to execute complex models across multiple edge devices efficiently. Specifically, we partition Vision Transformer models into several sub-models, where each sub-model is tailored to handle a specific subset of data classes. To further minimize computation overhead and inference latency, we introduce a class-wise pruning technique that reduces the size of each sub-model. We conduct extensive experiments on five datasets with three model structures, demonstrating that our approach significantly reduces inference latency on edge devices and achieves a model size reduction of up to 28.9 times and 34.1 times, respectively, while maintaining test accuracy comparable to the original Vision Transformer. Additionally, we compare ED-ViT with two state-of-the-art methods that deploy CNN and SNN models on edge devices, evaluating accuracy, inference time, and overall model size. Our comprehensive evaluation underscores the effectiveness of the proposed ED-ViT framework.
