Teresa Araújo

Automatic detection and prediction of nAMD activity change in retinal OCT using Siamese networks and Wasserstein Distance for ordinality

Jan 24, 2025

Abstract:Neovascular age-related macular degeneration (nAMD) is a leading cause of vision loss among older adults, where disease activity detection and progression prediction are critical for nAMD management in terms of timely drug administration and improving patient outcomes. Recent advancements in deep learning offer a promising solution for predicting changes in AMD from optical coherence tomography (OCT) retinal volumes. In this work, we propose deep learning models for the two tasks of the public MARIO Challenge at MICCAI 2024, designed to detect and forecast changes in nAMD severity with longitudinal retinal OCT. For the first task, we employ a Vision Transformer (ViT) based Siamese Network to detect changes in AMD severity by comparing scan embeddings of a patient from different time points. To train a model to forecast the change after 3 months, we exploit, for the first time, an Earth Mover (Wasserstein) Distance-based loss to harness the ordinal relation within the severity change classes. Both models ranked high on the preliminary leaderboard, demonstrating that their predictive capabilities could facilitate nAMD treatment management.
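
To illustrate why an Earth Mover (Wasserstein) Distance-based loss respects class order, here is a minimal sketch. It is not the authors' implementation: the function name `emd_loss`, the three-class example, and the unit spacing between neighbouring classes are assumptions made for illustration. For distributions over ordered classes, the Wasserstein-1 distance reduces to the summed absolute difference between the two cumulative distributions.

```python
from itertools import accumulate


def emd_loss(pred_probs, target_probs):
    """Wasserstein-1 distance between two distributions over ordered classes.

    Assumes neighbouring classes are one unit apart (an illustrative choice).
    """
    if len(pred_probs) != len(target_probs):
        raise ValueError("distributions must have the same number of classes")
    pred_cdf = accumulate(pred_probs)
    target_cdf = accumulate(target_probs)
    # In one dimension, EMD equals the L1 distance between the CDFs.
    return sum(abs(p - t) for p, t in zip(pred_cdf, target_cdf))


# Hypothetical example with three ordered classes; the true class is the first.
target = [1.0, 0.0, 0.0]
near_miss = [0.0, 1.0, 0.0]  # off by one class
far_miss = [0.0, 0.0, 1.0]   # off by two classes

print(emd_loss(near_miss, target))
print(emd_loss(far_miss, target))
```

A plain cross-entropy loss would penalize both wrong predictions equally, whereas this distance grows with how far the predicted class lies from the true one.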

AIROGS: Artificial Intelligence for RObust Glaucoma Screening Challenge

Feb 10, 2023

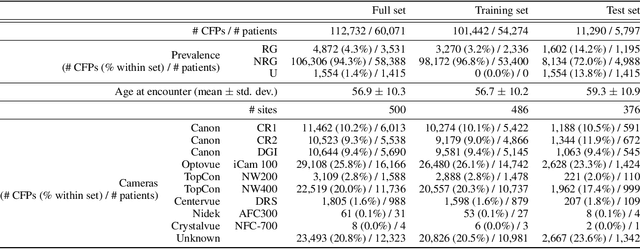

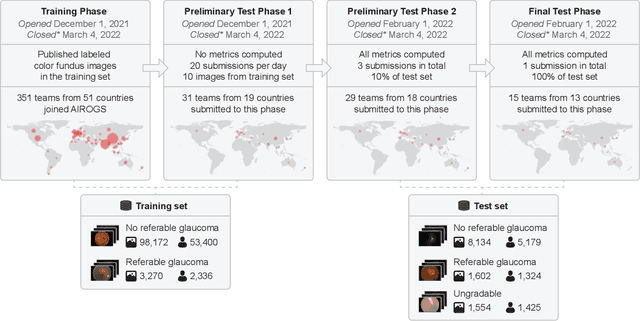

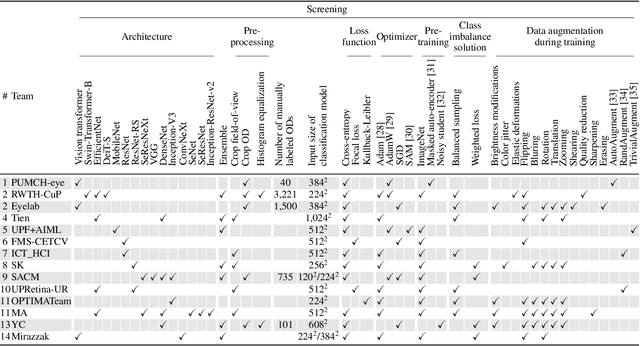

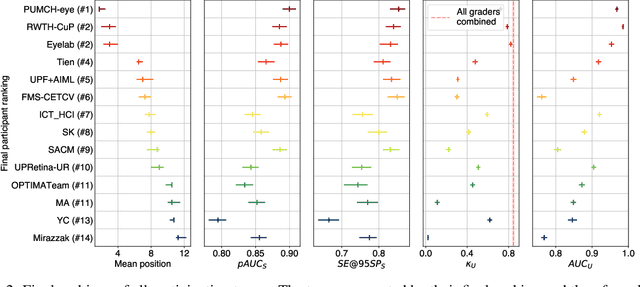

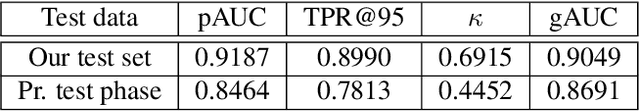

Abstract:The early detection of glaucoma is essential in preventing visual impairment. Artificial intelligence (AI) can be used to analyze color fundus photographs (CFPs) in a cost-effective manner, making glaucoma screening more accessible. While AI models for glaucoma screening from CFPs have shown promising results in laboratory settings, their performance decreases significantly in real-world scenarios due to the presence of out-of-distribution and low-quality images. To address this issue, we propose the Artificial Intelligence for Robust Glaucoma Screening (AIROGS) challenge. This challenge includes a large dataset of around 113,000 images from about 60,000 patients and 500 different screening centers, and encourages the development of algorithms that are robust to ungradable and unexpected input data. We evaluated solutions from 14 teams in this paper, and found that the best teams performed similarly to a set of 20 expert ophthalmologists and optometrists. The highest-scoring team achieved an area under the receiver operating characteristic curve of 0.99 (95% CI: 0.98-0.99) for detecting ungradable images on-the-fly. Additionally, many of the algorithms showed robust performance when tested on three other publicly available datasets. These results demonstrate the feasibility of robust AI-enabled glaucoma screening.

Deep Dirichlet uncertainty for unsupervised out-of-distribution detection of eye fundus photographs in glaucoma screening

Mar 18, 2022

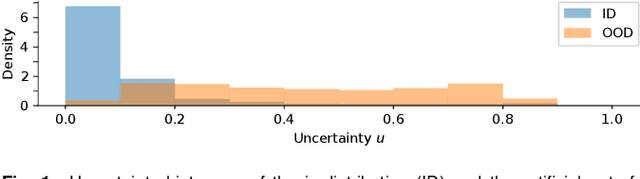

Abstract:The development of automatic tools for early glaucoma diagnosis with color fundus photographs can significantly reduce the impact of this disease. However, current state-of-the-art solutions are not robust to real-world scenarios, providing over-confident predictions for out-of-distribution cases. With this in mind, we propose a model based on the Dirichlet distribution that provides class-wise probabilities together with an uncertainty estimate, without exposure to out-of-distribution cases. We demonstrate our approach on the AIROGS challenge. At the start of the final test phase (8 Feb. 2022), our method had the highest average score among all submissions.
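
The abstract does not give formulas, so the following is only a sketch of one common way to obtain probabilities and an uncertainty value from a Dirichlet distribution, namely the formulation used in evidential deep learning. Whether the paper uses exactly this formulation is an assumption, and the name `dirichlet_prediction` and the example evidence values are hypothetical. The network is assumed to output a non-negative "evidence" value per class.

```python
def dirichlet_prediction(evidence):
    """Turn per-class evidence into expected probabilities and an uncertainty.

    Assumed formulation: alpha_k = evidence_k + 1, S = sum(alpha),
    probability_k = alpha_k / S, uncertainty = K / S.
    """
    if any(e < 0 for e in evidence):
        raise ValueError("evidence must be non-negative")
    alphas = [e + 1.0 for e in evidence]
    strength = sum(alphas)  # total Dirichlet strength S
    probs = [a / strength for a in alphas]
    uncertainty = len(alphas) / strength  # 1.0 when there is no evidence
    return probs, uncertainty


# Hypothetical two-class outputs (e.g. referable vs. non-referable glaucoma).
confident = dirichlet_prediction([18.0, 0.0])
no_evidence = dirichlet_prediction([0.0, 0.0])
print(confident)
print(no_evidence)
```

Under this formulation an input that produces little evidence for any class receives an uncertainty close to 1, which could serve as a flag for ungradable or out-of-distribution images.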

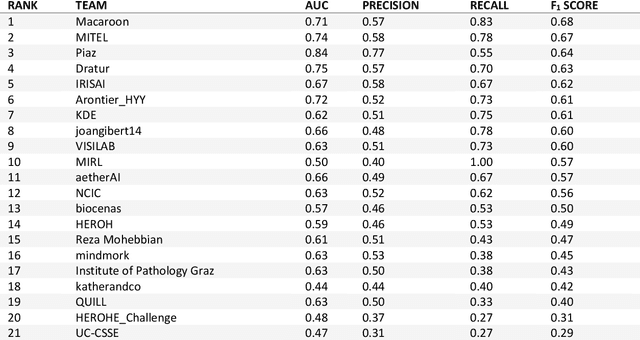

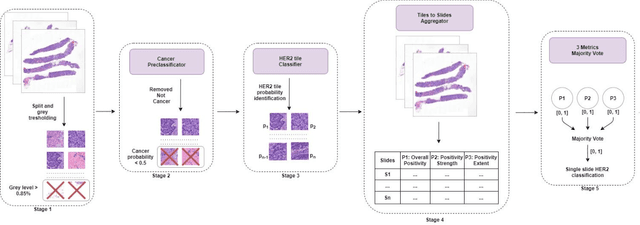

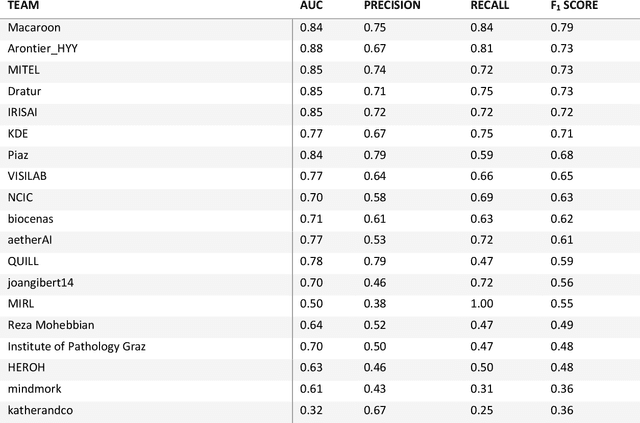

HEROHE Challenge: assessing HER2 status in breast cancer without immunohistochemistry or in situ hybridization

Nov 08, 2021

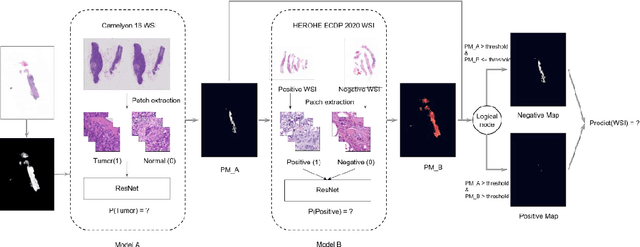

Abstract:Breast cancer is the most common malignancy in women, being responsible for more than half a million deaths every year. As such, early and accurate diagnosis is of paramount importance. Human expertise is required to diagnose and correctly classify breast cancer and define appropriate therapy, which depends on the evaluation of the expression of different biomarkers such as the transmembrane protein receptor HER2. This evaluation requires several steps, including special techniques such as immunohistochemistry or in situ hybridization to assess HER2 status. With the goal of reducing the number of steps and human bias in diagnosis, the HEROHE Challenge was organized as a parallel event of the 16th European Congress on Digital Pathology, aiming to automate the assessment of HER2 status based only on hematoxylin and eosin-stained tissue samples of invasive breast cancer. Methods to assess HER2 status were presented by 21 teams worldwide, and the results achieved by some of the proposed methods open potential perspectives to advance the state-of-the-art.

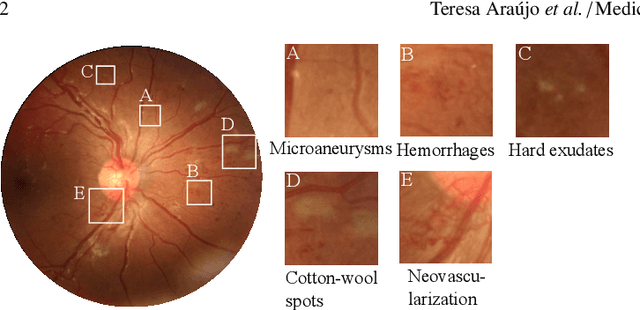

DR$\vert$GRADUATE: uncertainty-aware deep learning-based diabetic retinopathy grading in eye fundus images

Oct 25, 2019

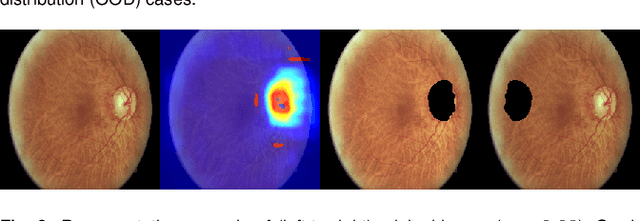

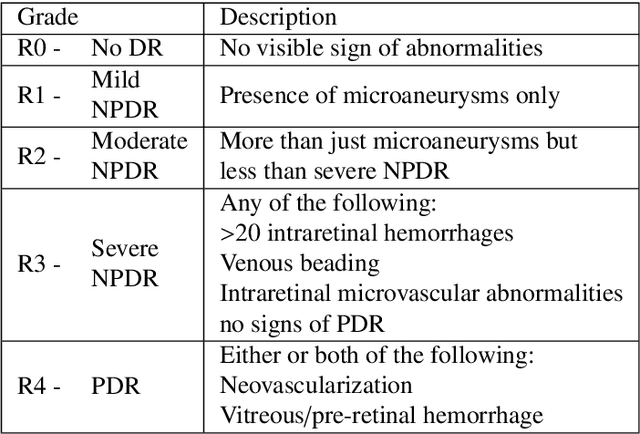

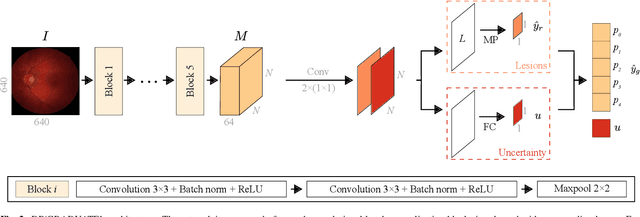

Abstract:Diabetic retinopathy (DR) grading is crucial in determining the patients' adequate treatment and follow-up, but the screening process can be tiresome and prone to errors. Deep learning approaches have shown promising performance as computer-aided diagnosis (CAD) systems, but their black-box behaviour hinders clinical application. We propose DR$\vert$GRADUATE, a novel deep learning-based DR grading CAD system that supports its decision by providing a medically interpretable explanation and an estimation of how uncertain that prediction is, allowing the ophthalmologist to measure how much that decision should be trusted. We designed DR$\vert$GRADUATE taking into account the ordinal nature of the DR grading problem. A novel Gaussian-sampling approach built upon a Multiple Instance Learning framework allows DR$\vert$GRADUATE to infer an image grade associated with an explanation map and a prediction uncertainty while being trained only with image-wise labels. DR$\vert$GRADUATE was trained on the Kaggle training set and evaluated across multiple datasets. In DR grading, a quadratic-weighted Cohen's kappa (QWK) between 0.71 and 0.84 was achieved in five different datasets. We show that high QWK values occur for images with low prediction uncertainty, thus indicating that this uncertainty is a valid measure of the predictions' quality. Further, poor-quality images are generally associated with higher uncertainties, showing that images not suitable for diagnosis indeed lead to less trustworthy predictions. Additionally, tests on unfamiliar medical image data types suggest that DR$\vert$GRADUATE allows outlier detection. The attention maps generally highlight regions of interest for diagnosis. These results show the great potential of DR$\vert$GRADUATE as a second-opinion system in DR severity grading.
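
For readers unfamiliar with the metric, the sketch below computes a quadratic-weighted Cohen's kappa from integer grades using the standard definition, in which a disagreement between grades i and j is weighted by (i - j)^2 / (K - 1)^2. The function name and the toy labels are hypothetical and are not taken from the paper.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Quadratic-weighted Cohen's kappa for integer grades in [0, num_classes)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    k, n = num_classes, len(y_true)
    # Observed confusion matrix: rows are reference grades, columns predictions.
    observed = [[0] * k for _ in range(k)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    true_hist = [sum(row) for row in observed]
    pred_hist = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            weighted_observed += weight * observed[i][j]
            # Expected count if the two gradings were independent.
            weighted_expected += weight * true_hist[i] * pred_hist[j] / n
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when both gradings use one class")
    return 1.0 - weighted_observed / weighted_expected


# Toy examples: perfect agreement, then chance-level agreement.
print(quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 4], 5))
print(quadratic_weighted_kappa([0, 0, 1, 1], [0, 1, 0, 1], 2))
```

Because the weights grow quadratically with the grade difference, confusing adjacent grades costs far less than confusing distant ones, which suits an ordinal problem such as DR grading.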

Did you miss it? Automatic lung nodule detection combined with gaze information improves radiologists' screening performance

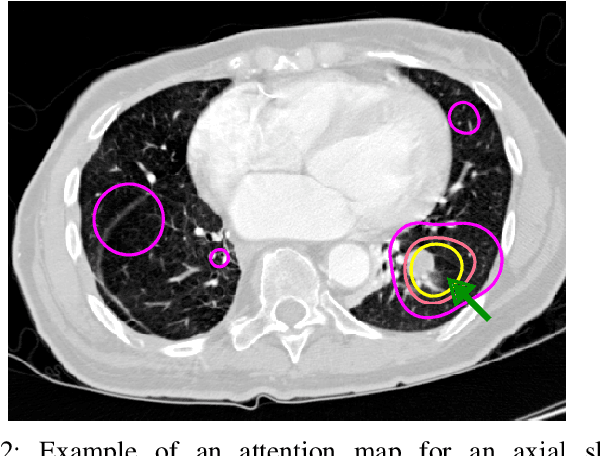

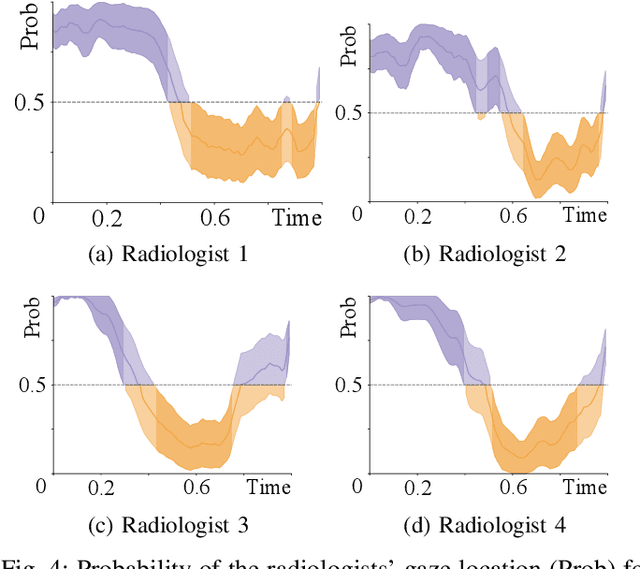

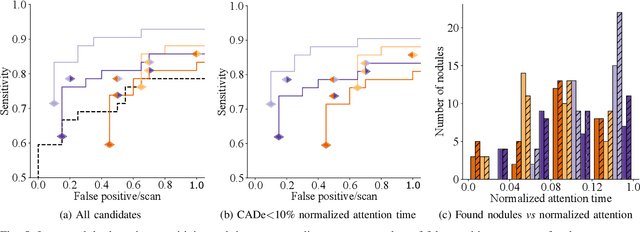

Oct 09, 2019

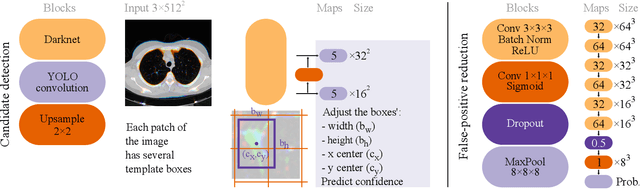

Abstract:Early diagnosis of lung cancer via computed tomography can significantly reduce the morbidity and mortality rates associated with the pathology. However, searching for lung nodules is a highly complex task, which affects the success of screening programs. Whilst computer-aided detection systems can be used as second observers, they may bias radiologists and introduce significant time overheads. With this in mind, this study assesses the potential of using gaze information for integrating automatic detection systems into clinical practice. For that purpose, 4 radiologists were asked to annotate 20 scans from a public dataset while being monitored by an eye tracker device, and an automatic lung nodule detection system was developed. Our results show that radiologists follow a similar search routine and tend to have lower fixation periods in regions where finding errors occur. The overall detection sensitivity of the specialists was 0.67$\pm$0.07, whereas the system achieved 0.69. Combining the annotations of one radiologist with the automatic system significantly improves the detection performance to levels similar to those of two annotators. Likewise, combining the findings of a radiologist with the detection algorithm only for low fixation regions still significantly improves the detection sensitivity without increasing the number of false positives. The combination of the automatic system with the gaze information makes it possible to mitigate possible errors of the radiologist without some of the issues usually associated with automatic detection systems.

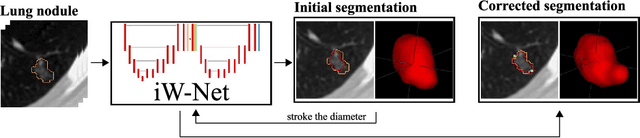

iW-Net: an automatic and minimalistic interactive lung nodule segmentation deep network

Nov 30, 2018

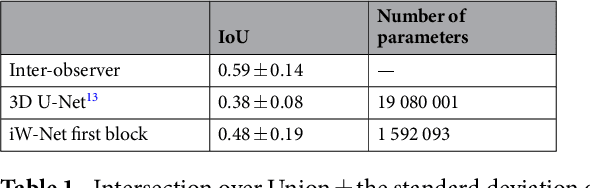

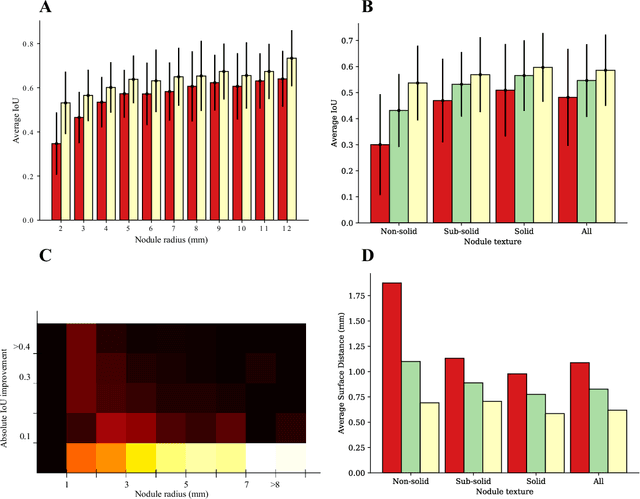

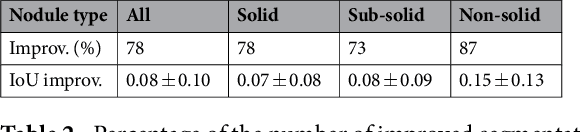

Abstract:We propose iW-Net, a deep learning model that allows for both automatic and interactive segmentation of lung nodules in computed tomography images. iW-Net is composed of two blocks: the first one provides an automatic segmentation and the second one corrects it by analyzing 2 points introduced by the user on the nodule's boundary. For this purpose, a physics-inspired weight map that takes the user input into account is proposed, which is used both as a feature map and in the system's loss function. Our approach is extensively evaluated on the public LIDC-IDRI dataset, where we achieve a state-of-the-art performance of 0.55 intersection over union, compared with an inter-observer agreement of 0.59. We also show that iW-Net makes it possible to correct the segmentation of small nodules, which is essential for proper patient referral decisions, and to improve the segmentation of challenging non-solid nodules; it may thus be an important tool for increasing the early diagnosis of lung cancer.
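
As a reminder of what the reported intersection over union measures, here is a minimal sketch for two binary masks given as nested lists. The function name, the 2x2 toy masks, and the convention of returning 1.0 when both masks are empty are assumptions for illustration and do not come from the paper.

```python
def intersection_over_union(mask_a, mask_b):
    """IoU of two binary masks with identical shape (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    if union == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return intersection / union


# Toy masks: one shared pixel out of three marked pixels in total.
predicted = [[1, 1],
             [0, 0]]
reference = [[1, 0],
             [1, 0]]
print(intersection_over_union(predicted, reference))
```

Small structures such as lung nodules contain few pixels, so a boundary error of a single pixel changes the ratio noticeably, which helps explain why even the inter-observer agreement quoted above is well below 1.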

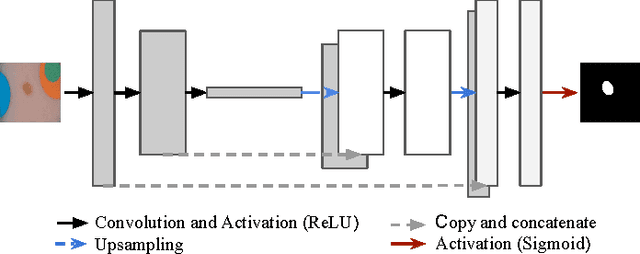

UOLO - automatic object detection and segmentation in biomedical images

Oct 09, 2018

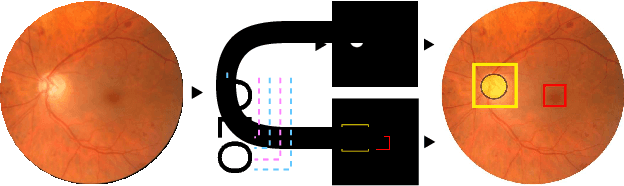

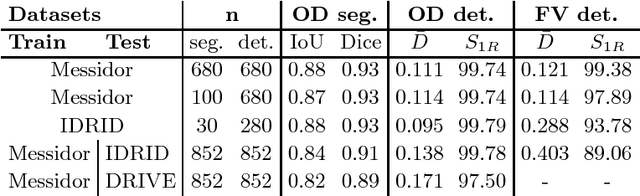

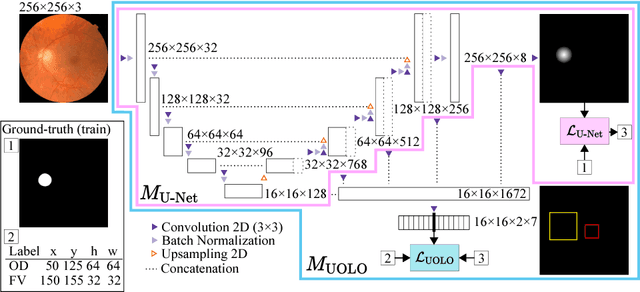

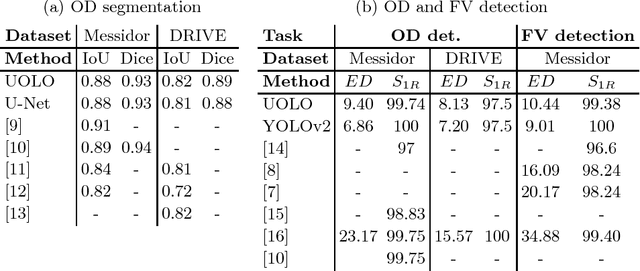

Abstract:We propose UOLO, a novel framework for the simultaneous detection and segmentation of structures of interest in medical images. UOLO consists of an object segmentation module whose intermediate abstract representations are processed and used as input for object detection. The resulting system is optimized simultaneously for detecting a class of objects and segmenting an optionally different class of structures. UOLO is trained on a set of bounding boxes enclosing the objects to detect, as well as pixel-wise segmentation information, when available. A new loss function is devised, taking into account whether a reference segmentation is accessible for each training image, in order to suitably backpropagate the error. We validate UOLO on the task of simultaneous optic disc (OD) detection, fovea detection, and OD segmentation from retinal images, achieving state-of-the-art performance on public datasets.

* Published at DLMIA 2018. Licensed under the Creative Commons CC-BY-NC-ND 4.0 license: http://creativecommons.org/licenses/by-nc-nd/4.0/

BACH: Grand Challenge on Breast Cancer Histology Images

Aug 13, 2018

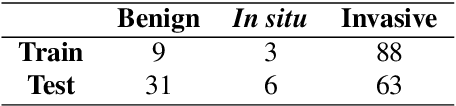

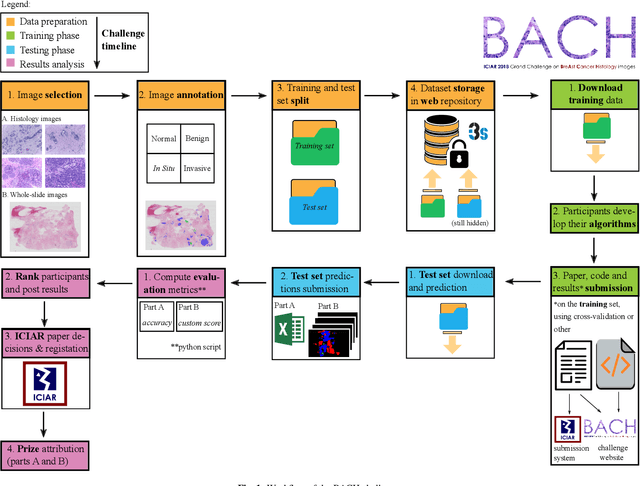

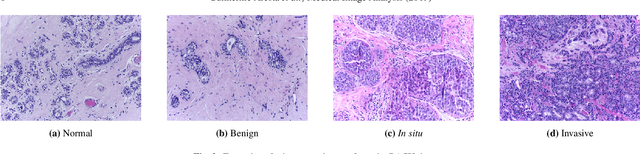

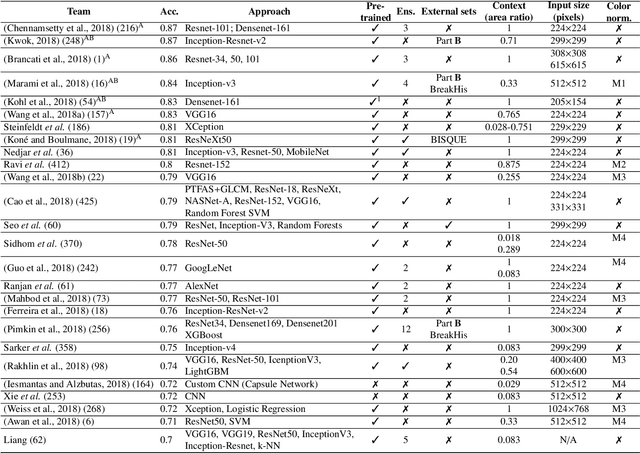

Abstract:Breast cancer is the most common invasive cancer in women, affecting more than 10% of women worldwide. Microscopic analysis of a biopsy remains one of the most important methods to diagnose the type of breast cancer. This requires specialized analysis by pathologists, in a task that i) is highly time- and cost-consuming and ii) often leads to results that lack consensus. The relevance and potential of automatic classification algorithms using hematoxylin-eosin stained histopathological images has already been demonstrated, but the reported results are still sub-optimal for clinical use. With the goal of advancing the state-of-the-art in automatic classification, the Grand Challenge on BreAst Cancer Histology images (BACH) was organized in conjunction with the 15th International Conference on Image Analysis and Recognition (ICIAR 2018). A large annotated dataset, composed of both microscopy and whole-slide images, was specifically compiled and made publicly available for the BACH challenge. Following a positive response from the scientific community, a total of 64 submissions, out of 677 registrations, effectively entered the competition. From the submitted algorithms it was possible to push forward the state-of-the-art in terms of accuracy (87%) in automatic classification of breast cancer with histopathological images. Convolutional neural networks were the most successful methodology in the BACH challenge. Detailed analysis of the collective results allowed the identification of remaining challenges in the field and recommendations for future developments. The BACH dataset remains publicly available so as to promote further improvements in the field of automatic classification in digital pathology.

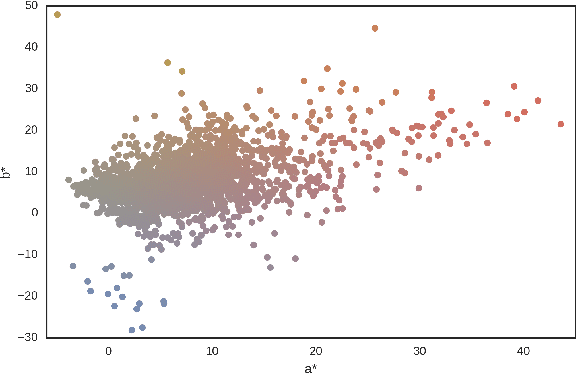

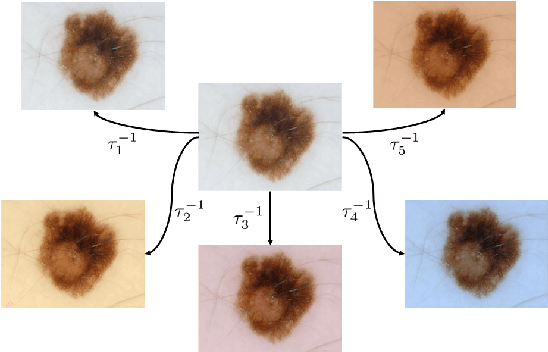

Data-Driven Color Augmentation Techniques for Deep Skin Image Analysis

Mar 10, 2017

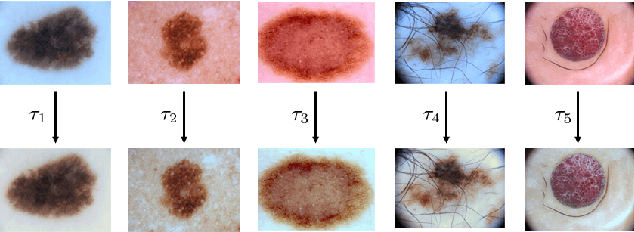

Abstract:Dermoscopic skin images are often obtained with different imaging devices, under varying acquisition conditions. In this work, instead of attempting to perform intensity and color normalization, we propose to leverage computational color constancy techniques to build an artificial data augmentation technique suitable for this kind of image. Specifically, we apply the *shades of gray* color constancy technique to color-normalize the entire training set of images, while retaining the estimated illuminants. We then draw one sample from the distribution of training set illuminants and apply it to the normalized image. We employ this technique for training two deep convolutional neural networks for the tasks of skin lesion segmentation and skin lesion classification, in the context of the ISIC 2017 challenge and without using any external dermatologic image set. Our results on the validation set are promising, and will be supplemented with extended results on the hidden test set when available.
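
The augmentation described above can be sketched as follows. This is an illustrative reading of the abstract, not the authors' code: the function names, the Minkowski norm order `p=6` (a common choice for shades of gray), the representation of an image as a list of RGB tuples in [0, 1], and the clipping to 1.0 are all assumptions.

```python
import math
import random


def estimate_illuminant(pixels, p=6):
    """Shades-of-gray estimate: per-channel Minkowski p-norm mean, unit length."""
    n = len(pixels)
    raw = [(sum(px[c] ** p for px in pixels) / n) ** (1.0 / p) for c in range(3)]
    norm = math.sqrt(sum(v * v for v in raw))
    if norm == 0:
        raise ValueError("cannot estimate the illuminant of an all-black image")
    return tuple(v / norm for v in raw)


def remove_illuminant(pixels, illuminant):
    """Color-normalize so that the estimated illuminant becomes neutral gray."""
    scale = [math.sqrt(3) * e for e in illuminant]
    return [tuple(min(1.0, px[c] / scale[c]) for c in range(3)) for px in pixels]


def apply_illuminant(pixels, illuminant):
    """Re-color a normalized image with a given illuminant."""
    scale = [math.sqrt(3) * e for e in illuminant]
    return [tuple(min(1.0, px[c] * scale[c]) for c in range(3)) for px in pixels]


def augment(pixels, illuminant_pool, rng=random):
    """Normalize an image, then apply one illuminant drawn from the training pool."""
    normalized = remove_illuminant(pixels, estimate_illuminant(pixels))
    return apply_illuminant(normalized, rng.choice(illuminant_pool))


# Toy usage: two one-pixel "training images" define the pool of illuminants.
training_images = [[(0.2, 0.4, 0.6)], [(0.6, 0.4, 0.2)]]
pool = [estimate_illuminant(img) for img in training_images]
print(augment(training_images[0], pool, random.Random(0)))
```

Sampling from illuminants that were actually estimated on the training set keeps the augmented colors within the range produced by real acquisition conditions, rather than applying arbitrary color jitter.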
