Shreyank N Gowda

Adversarial Robustness in Zero-Shot Learning: An Empirical Study on Class and Concept-Level Vulnerabilities

Dec 21, 2025

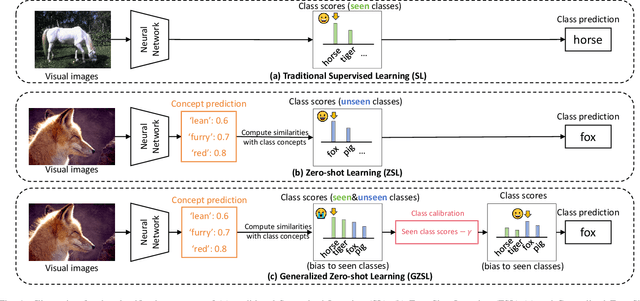

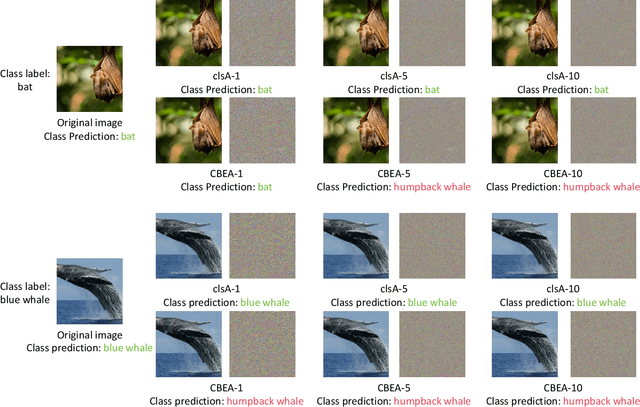

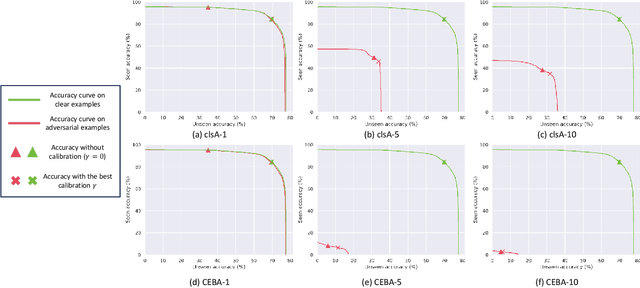

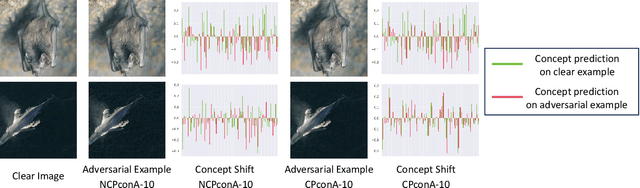

Abstract: Zero-shot Learning (ZSL) aims to enable image classifiers to recognize images from unseen classes that were not included during training. Unlike traditional supervised classification, ZSL typically relies on learning a mapping from visual features to predefined, human-understandable class concepts. While ZSL models promise to improve generalization and interpretability, their robustness under systematic input perturbations remains unclear. In this study, we present an empirical analysis of the robustness of existing ZSL methods at both the class level and the concept level. Specifically, we successfully disrupt their class predictions with the well-known non-target class attack (clsA). However, in the Generalized Zero-shot Learning (GZSL) setting, we observe that clsA succeeds only at the original best-calibrated point. After the attack, the optimal best-calibration point shifts, and ZSL models maintain relatively strong performance at other calibration points, indicating that clsA yields only a spurious attack success in GZSL. To address this, we propose the Class-Bias Enhanced Attack (CBEA), which completely eliminates GZSL accuracy across all calibrated points by enhancing the gap between seen and unseen class probabilities. Next, for concept-level attacks, we introduce two novel attack modes: Class-Preserving Concept Attack (CPconA) and Non-Class-Preserving Concept Attack (NCPconA). Our extensive experiments evaluate three typical ZSL models across various architectures from the past three years and reveal that ZSL models are vulnerable not only to the traditional class attack but also to concept-based attacks. These attacks allow malicious actors to easily manipulate class predictions by erasing or introducing concepts. Our findings highlight a significant performance gap between existing approaches, emphasizing the need for improved adversarial robustness in current ZSL models.

Distribution-Based Masked Medical Vision-Language Model Using Structured Reports

Jul 29, 2025

Abstract: Medical image-language pre-training aims to align medical images with clinically relevant text to improve model performance on various downstream tasks. However, existing models often struggle with the variability and ambiguity inherent in medical data, limiting their ability to capture nuanced clinical information and uncertainty. This work introduces an uncertainty-aware medical image-text pre-training model that enhances generalization capabilities in medical image analysis. Building on previous methods and focusing on Chest X-Rays, our approach utilizes structured text reports generated by a large language model (LLM) to augment image data with clinically relevant context. These reports begin with a definition of the disease, followed by the 'appearance' section to highlight critical regions of interest, and finally 'observations' and 'verdicts' that ground model predictions in clinical semantics. By modeling both inter- and intra-modal uncertainty, our framework captures the inherent ambiguity in medical images and text, yielding improved representations and performance on downstream tasks. Our model demonstrates significant advances in medical image-text pre-training, obtaining state-of-the-art performance on multiple downstream tasks.

Anyone Can Jailbreak: Prompt-Based Attacks on LLMs and T2Is

Jul 29, 2025

Abstract: Despite significant advancements in alignment and content moderation, large language models (LLMs) and text-to-image (T2I) systems remain vulnerable to prompt-based attacks known as jailbreaks. Unlike traditional adversarial examples, which require expert knowledge, many of today's jailbreaks are low-effort and high-impact, crafted by everyday users with nothing more than cleverly worded prompts. This paper presents a systems-style investigation into how non-experts reliably circumvent safety mechanisms through techniques such as multi-turn narrative escalation, lexical camouflage, implication chaining, fictional impersonation, and subtle semantic edits. We propose a unified taxonomy of prompt-level jailbreak strategies spanning both text-output and T2I models, grounded in empirical case studies across popular APIs. Our analysis reveals that every stage of the moderation pipeline, from input filtering to output validation, can be bypassed with accessible strategies. We conclude by highlighting the urgent need for context-aware defenses that reflect the ease with which these jailbreaks can be reproduced in real-world settings.

Progressive Data Dropout: An Embarrassingly Simple Approach to Faster Training

May 28, 2025

Abstract: The success of the machine learning field has reliably depended on training on large datasets. While effective, this trend comes at an extraordinary cost. This is due to two deeply intertwined factors: the size of models and the size of datasets. While promising research efforts focus on reducing the size of models, the other half of the equation remains fairly mysterious. Indeed, it is surprising that the standard approach to training remains to iterate over and over, uniformly sampling the training dataset. In this paper we explore a series of alternative training paradigms that leverage insights from hard-data-mining and dropout, and that are simple enough to implement and use that they could become the new training standard. The proposed Progressive Data Dropout reduces the number of effective epochs to as little as 12.4% of the baseline. These savings do not come at any cost to accuracy. Surprisingly, the proposed method improves accuracy by up to 4.82%. Our approach requires no changes to model architecture or optimizer, and can be applied across standard training pipelines, thus posing an excellent opportunity for wide adoption. Code can be found here: https://github.com/bazyagami/LearningWithRevision

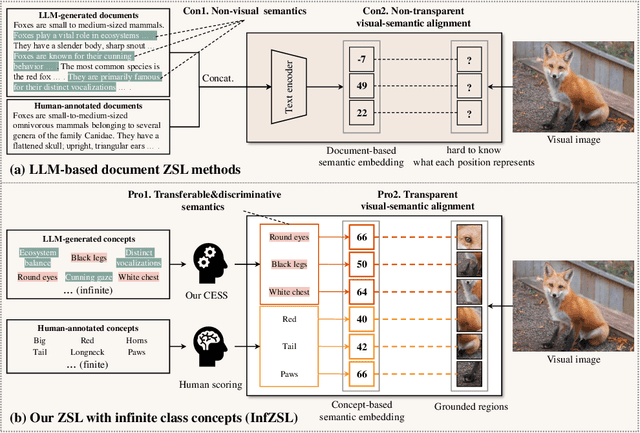

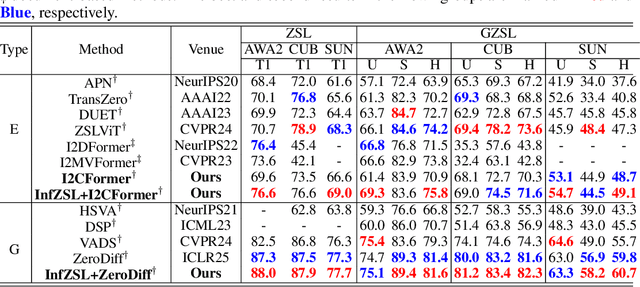

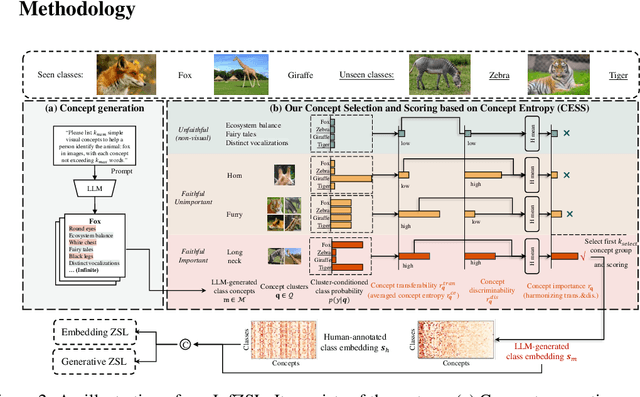

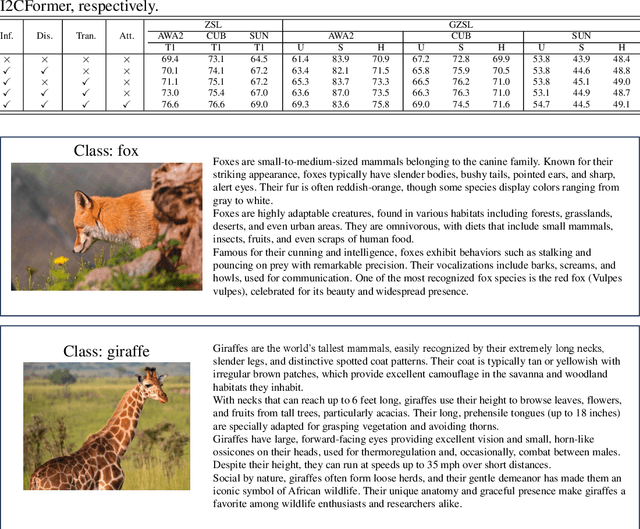

Interpretable Zero-shot Learning with Infinite Class Concepts

May 06, 2025

Abstract: Zero-shot learning (ZSL) aims to recognize unseen classes by aligning images with intermediate class semantics, like human-annotated concepts or class definitions. An emerging alternative leverages Large-scale Language Models (LLMs) to automatically generate class documents. However, these methods often face challenges with transparency in the classification process and may suffer from the notorious hallucination problem in LLMs, resulting in non-visual class semantics. This paper redefines class semantics in ZSL with a focus on transferability and discriminability, introducing a novel framework called Zero-shot Learning with Infinite Class Concepts (InfZSL). Our approach leverages the powerful capabilities of LLMs to dynamically generate an unlimited array of phrase-level class concepts. To address the hallucination challenge, we introduce an entropy-based scoring process that incorporates a "goodness" concept selection mechanism, ensuring that only the most transferable and discriminative concepts are selected. Our InfZSL framework not only demonstrates significant improvements on three popular benchmark datasets but also generates highly interpretable, image-grounded concepts. Code will be released upon acceptance.

FATE: A Prompt-Tuning-Based Semi-Supervised Learning Framework for Extremely Limited Labeled Data

Apr 14, 2025

Abstract: Semi-supervised learning (SSL) has achieved significant progress by leveraging both labeled and unlabeled data. Existing SSL methods overlook a common real-world scenario in which labeled data is extremely scarce, potentially as limited as a single labeled sample in the dataset. General SSL approaches struggle to train effectively from scratch under such constraints, while methods utilizing pre-trained models often fail to find an optimal balance between leveraging limited labeled data and abundant unlabeled data. To address this challenge, we propose Firstly Adapt, Then catEgorize (FATE), a novel SSL framework tailored for scenarios with extremely limited labeled data. At its core, FATE is a two-stage prompt tuning paradigm that exploits unlabeled data to compensate for scarce supervision signals, then transfers to downstream tasks. Concretely, FATE first adapts a pre-trained model to the feature distribution of downstream data using large volumes of unlabeled samples in an unsupervised manner. It then applies an SSL method specifically designed for pre-trained models to complete the final classification task. FATE is designed to be compatible with both vision and vision-language pre-trained models. Extensive experiments demonstrate that FATE effectively mitigates challenges arising from the scarcity of labeled samples in SSL, achieving an average performance improvement of 33.74% across seven benchmarks compared to state-of-the-art SSL methods. Code is available at https://anonymous.4open.science/r/Semi-supervised-learning-BA72.

Is Temporal Prompting All We Need For Limited Labeled Action Recognition?

Apr 02, 2025

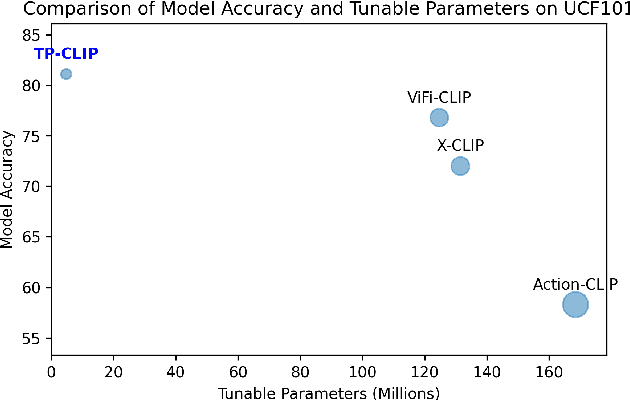

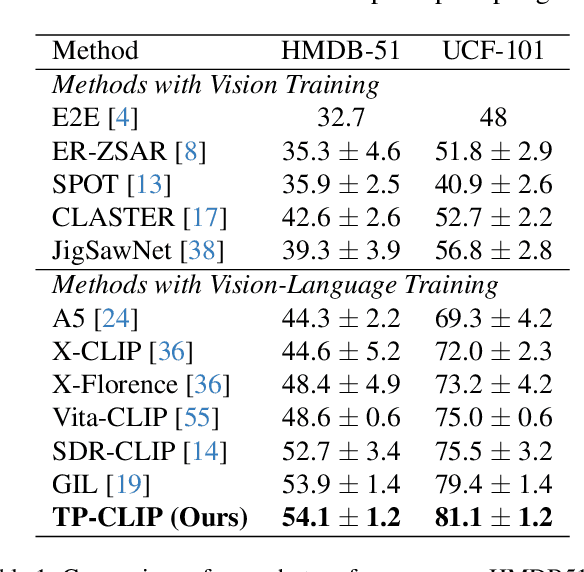

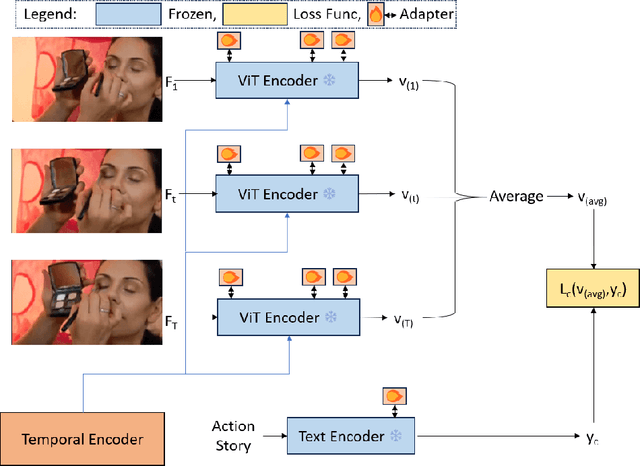

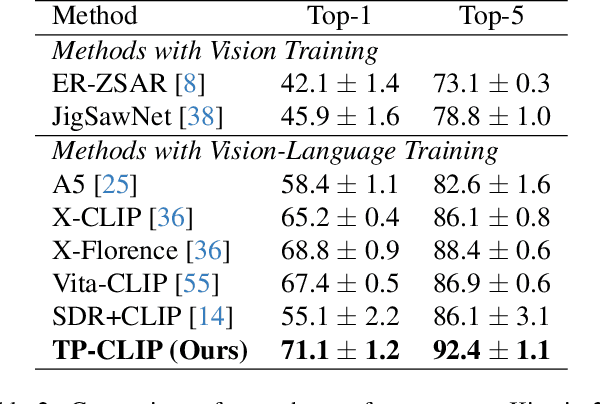

Abstract: Video understanding has shown remarkable improvements in recent years, largely dependent on the availability of large-scale labeled datasets. Recent advancements in visual-language models, especially those based on contrastive pretraining, have shown remarkable generalization in zero-shot tasks, helping to overcome this dependence on labeled datasets. Adaptations of such models for videos typically involve modifying the architecture of vision-language models to cater to video data. However, this is not trivial, since such adaptations are mostly computationally intensive and struggle with temporal modeling. We present TP-CLIP, an adaptation of CLIP that leverages temporal visual prompting for temporal adaptation without modifying the core CLIP architecture. This preserves its generalization abilities. TP-CLIP efficiently integrates into the CLIP architecture, leveraging its pre-trained capabilities for video data. Extensive experiments across various datasets demonstrate its efficacy in zero-shot and few-shot learning, outperforming existing approaches with fewer parameters and lower computational cost. In particular, we use just 1/3 the GFLOPs and 1/28 the number of tunable parameters in comparison to the recent state of the art and still outperform it by up to 15.8%, depending on the task and dataset.

CAPT: Class-Aware Prompt Tuning for Federated Long-Tailed Learning with Vision-Language Model

Mar 10, 2025

Abstract: Effectively handling the co-occurrence of non-IID data and long-tailed distributions remains a critical challenge in federated learning. While fine-tuning vision-language models (VLMs) like CLIP has shown promise in addressing non-IID data challenges, this approach leads to severe degradation of tail classes in federated long-tailed scenarios. Under the composite effects of strong non-IID data distribution and long-tailed class imbalances, VLM fine-tuning may even fail to yield any improvement. To address this issue, we propose Class-Aware Prompt Learning for Federated Long-tailed Learning (CAPT), a novel framework that leverages a pre-trained VLM to effectively handle both data heterogeneity and long-tailed distributions. CAPT introduces a dual-prompt mechanism that synergizes general and class-aware prompts, enabling the framework to capture global trends while preserving class-specific knowledge. To better aggregate and share knowledge across clients, we introduce a heterogeneity-aware client clustering strategy that groups clients based on their data distributions, enabling efficient collaboration and knowledge sharing. Extensive experiments on various long-tailed datasets with different levels of data heterogeneity demonstrate that CAPT significantly improves tail class performance without compromising overall accuracy, outperforming state-of-the-art methods in federated long-tailed learning scenarios.

Enhancing Generalization via Sharpness-Aware Trajectory Matching for Dataset Condensation

Feb 03, 2025

Abstract: Dataset condensation aims to synthesize datasets with a few representative samples that can effectively represent the original datasets. This enables efficient training and produces models with performance close to those trained on the original sets. Most existing dataset condensation methods learn the dataset through bilevel (inner- and outer-loop) optimization. However, these methods generalize poorly due to the notoriously complicated loss landscape and the expensive time-space complexity of unrolling the inner loop of bilevel optimization. These issues worsen when the datasets are learned by matching the trajectories of networks trained on the real and synthetic datasets with a long-horizon inner loop. To address these issues, we introduce Sharpness-Aware Trajectory Matching (SATM), which enhances the generalization capability of learned synthetic datasets by optimising the sharpness of the loss landscape and the objective simultaneously. Moreover, our approach is coupled with an efficient hypergradient approximation that is mathematically well-supported and straightforward to implement, with controllable computational overhead. Empirical evaluations of SATM demonstrate its effectiveness across various applications, including in-domain benchmarks and out-of-domain settings. Moreover, its easy-to-implement properties afford flexibility, allowing it to integrate with other advanced sharpness-aware minimizers. Our code will be released.

Twin Trigger Generative Networks for Backdoor Attacks against Object Detection

Nov 23, 2024

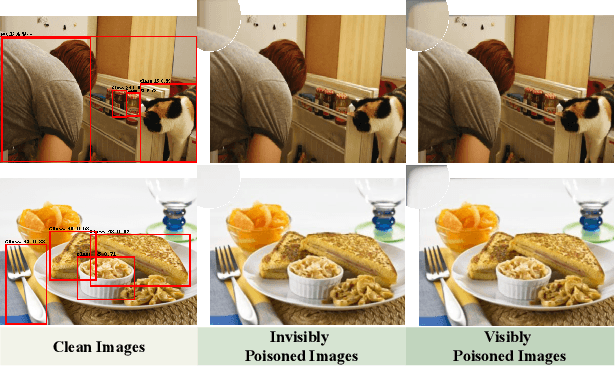

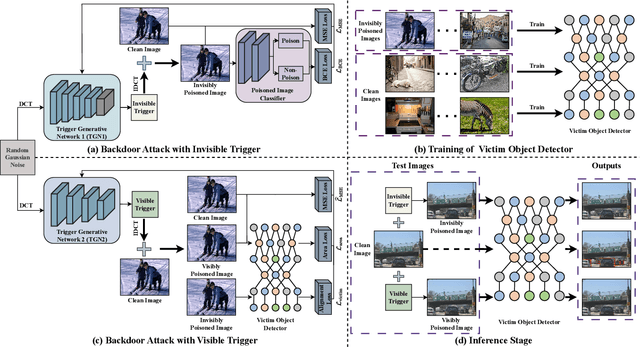

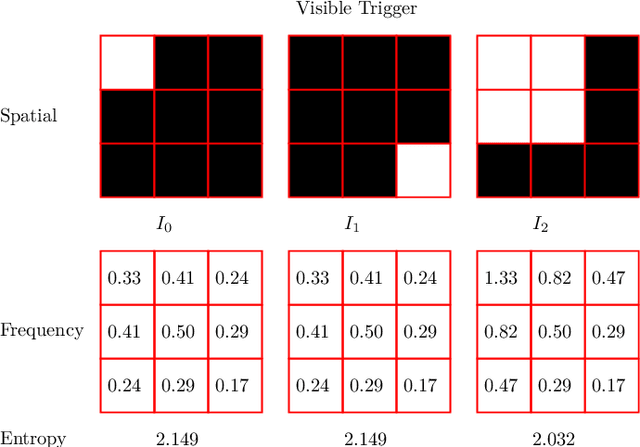

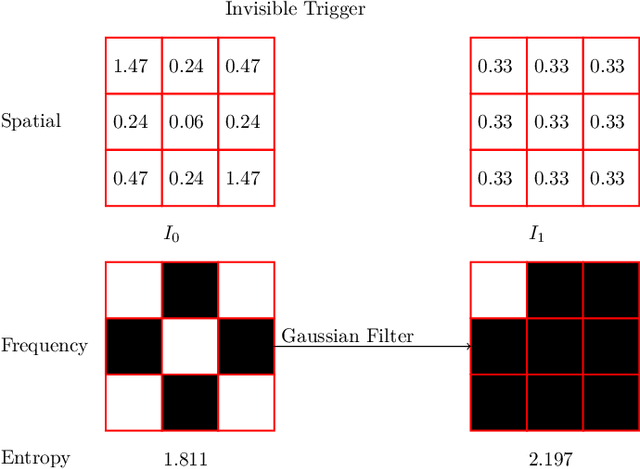

Abstract: Object detectors, which are widely used in real-world applications, are vulnerable to backdoor attacks. This vulnerability arises because many users rely on datasets or pre-trained models provided by third parties due to constraints on data and resources. However, most research on backdoor attacks has focused on image classification, with limited investigation into object detection. Furthermore, the triggers for most existing backdoor attacks on object detection are manually generated, requiring prior knowledge and consistent patterns between the training and inference stages. This approach makes the attacks either easy to detect or difficult to adapt to various scenarios. To address these limitations, we propose novel twin trigger generative networks in the frequency domain to generate invisible triggers for implanting stealthy backdoors into models during training, and visible triggers for steady activation during inference, making the attack process difficult to trace. Specifically, for the invisible trigger generative network, we deploy a Gaussian smoothing layer and a high-frequency artifact classifier to enhance the stealthiness of backdoor implantation in object detectors. For the visible trigger generative network, we design a novel alignment loss to optimize the visible triggers so that they differ from the original patterns but still align with the malicious activation behavior of the invisible triggers. Extensive experimental results and analyses demonstrate the feasibility of using different triggers in the training and inference stages, and show the attack effectiveness of our proposed visible and invisible trigger generative networks, which reduce the mAP_0.5 of object detectors, including YOLOv5 and YOLOv7 under different settings, by 70.0% and 84.5%, respectively.