Continual Learning Improves Zero-Shot Action Recognition

Paper and Code

Oct 14, 2024

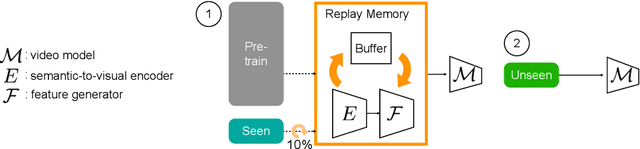

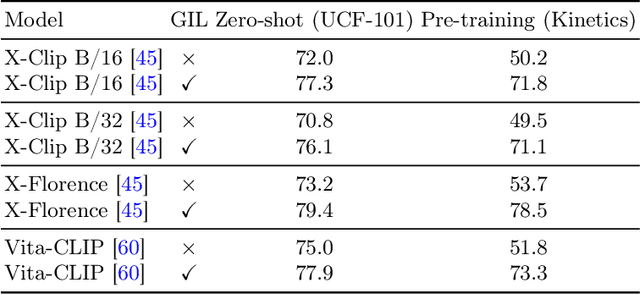

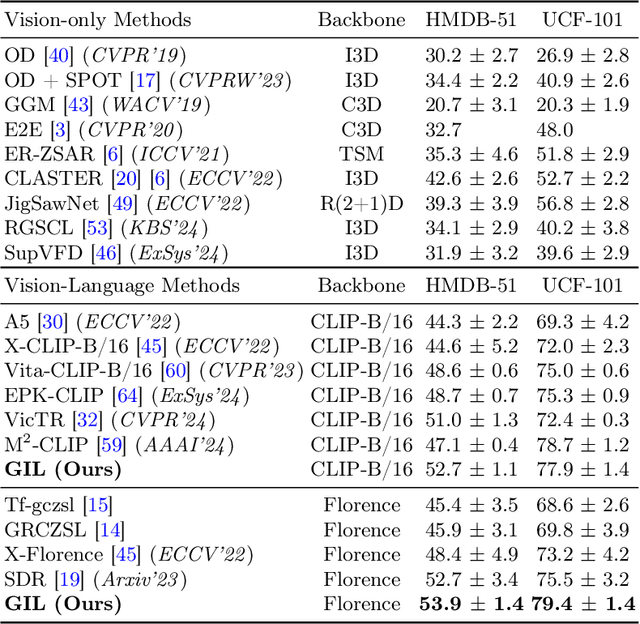

Zero-shot action recognition requires a strong ability to generalize from pre-training and seen classes to novel unseen classes. Similarly, continual learning aims to develop models that can generalize effectively and learn new tasks without forgetting the ones previously learned. The generalization goals of zero-shot and continual learning are closely aligned; however, techniques from continual learning have not been applied to zero-shot action recognition. In this paper, we propose a novel method based on continual learning to address zero-shot action recognition. This model, which we call Generative Iterative Learning (GIL), uses a memory of synthesized features of past classes and combines these synthetic features with real ones from novel classes. The memory is used to train a classification model, ensuring balanced exposure to both old and new classes. Experiments demonstrate that GIL improves generalization to unseen classes, achieving a new state of the art in zero-shot recognition across multiple benchmarks. Importantly, GIL also boosts performance in the more challenging generalized zero-shot setting, where models need to retain knowledge about classes seen before fine-tuning.
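The replay idea described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify the feature generator, so a per-class Gaussian fitted to old features stands in for it here, and every name (`fit_class_stats`, `synthesize`, `build_balanced_set`, the toy labels and feature vectors) is hypothetical. The sketch only shows how synthetic features of past classes might be mixed with real features of new classes so that each class contributes the same number of training samples.

```python
import random
from collections import Counter

def fit_class_stats(features):
    """Per-dimension mean and standard deviation of one class's feature vectors."""
    n = len(features)
    dims = len(features[0])
    means = [sum(f[d] for f in features) / n for d in range(dims)]
    stds = [
        (sum((f[d] - means[d]) ** 2 for f in features) / n) ** 0.5
        for d in range(dims)
    ]
    return means, stds

def synthesize(stats, count, rng):
    """Draw `count` synthetic feature vectors from stored Gaussian statistics.

    Assumption: a simple Gaussian replaces whatever generator the paper uses.
    """
    means, stds = stats
    return [[rng.gauss(m, s) for m, s in zip(means, stds)] for _ in range(count)]

def build_balanced_set(memory, new_real, per_class, rng):
    """Mix synthetic features of past classes with real features of new classes.

    memory:   {old_label: (means, stds)}   statistics kept instead of raw data
    new_real: {new_label: [feature, ...]}  real features of the novel classes
    """
    samples = []
    for label, stats in memory.items():
        samples += [(x, label) for x in synthesize(stats, per_class, rng)]
    for label, feats in new_real.items():
        # Sample with replacement so every class yields exactly `per_class` items.
        samples += [(rng.choice(feats), label) for _ in range(per_class)]
    rng.shuffle(samples)
    return samples

rng = random.Random(0)

# Toy 2-D features; real features would come from a video encoder.
old_feats = {"run": [[0.0, 1.0], [0.2, 0.8], [0.1, 1.1]]}
memory = {label: fit_class_stats(f) for label, f in old_feats.items()}
new_real = {"jump": [[1.0, 0.0], [0.9, 0.2]]}

train_set = build_balanced_set(memory, new_real, per_class=4, rng=rng)
counts = Counter(label for _, label in train_set)
```

In this sketch `train_set` would then be passed to any classifier. Sampling a fixed `per_class` count from both the memory and the new data is one simple way to get the balanced exposure the abstract mentions.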
