Shi Chen

RePose: A Real-Time 3D Human Pose Estimation and Biomechanical Analysis Framework for Rehabilitation

Jan 02, 2026

Abstract:We propose a real-time 3D human pose estimation and motion analysis method termed RePose for rehabilitation training. It monitors and evaluates patients' motion in real time during rehabilitation, providing immediate feedback and guidance to help patients execute rehabilitation exercises correctly. Firstly, we introduce a unified pipeline for end-to-end real-time human pose estimation and motion analysis using RGB video input from multiple cameras, which can be applied to the field of rehabilitation training. The pipeline can help to monitor and correct patients' actions, thus aiding them in regaining muscle strength and motor functions. Secondly, we propose a fast tracking method for medical rehabilitation scenarios with multiple-person interference, which requires less than 1 ms to track a single frame. Additionally, we modify SmoothNet for real-time posture estimation, effectively reducing pose estimation errors and restoring the patient's true motion state, making it visually smoother. Finally, we use the Unity platform for real-time monitoring and evaluation of patients' motion during rehabilitation, and to display muscle stress conditions to assist patients with their rehabilitation training.

Disentangling Hardness from Noise: An Uncertainty-Driven Model-Agnostic Framework for Long-Tailed Remote Sensing Classification

Jan 01, 2026

Abstract:Long-tailed distributions are pervasive in remote sensing due to the inherently imbalanced occurrence of grounded objects. However, a critical challenge remains largely overlooked, i.e., disentangling hard tail data samples from noisy ambiguous ones. Conventional methods often indiscriminately emphasize all low-confidence samples, leading to overfitting on noisy data. To bridge this gap, building upon Evidential Deep Learning, we propose a model-agnostic uncertainty-aware framework termed DUAL, which dynamically disentangles prediction uncertainty into Epistemic Uncertainty (EU) and Aleatoric Uncertainty (AU). Specifically, we introduce EU as an indicator of sample scarcity to guide a reweighting strategy for hard-to-learn tail samples, while leveraging AU to quantify data ambiguity, employing an adaptive label smoothing mechanism to suppress the impact of noise. Extensive experiments on multiple datasets across various backbones demonstrate the effectiveness and generalization of our framework, surpassing strong baselines such as TGN and SADE. Ablation studies provide further insights into the crucial choices of our design.
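The abstract does not say how DUAL computes EU and AU, so the following is only a minimal sketch of one standard decomposition used with Dirichlet-based evidential models: total uncertainty is the entropy of the expected class probabilities, AU is the expected entropy under the Dirichlet, and EU is their difference (the mutual information). The function names, the `gain` and `max_smoothing` parameters, and the way EU and AU feed a sample weight and a label-smoothing strength are all hypothetical illustrations, not the authors' method.

```python
import math

def digamma(x):
    """Digamma for x > 0 via upward recurrence plus an asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x          # psi(x) = psi(x + 1) - 1/x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return result + math.log(x) - 0.5 / x - series

def decompose_uncertainty(evidence):
    """Return (total, aleatoric, epistemic) for non-negative class evidence."""
    alpha = [e + 1.0 for e in evidence]            # Dirichlet parameters
    strength = sum(alpha)                          # Dirichlet strength S
    probs = [a / strength for a in alpha]          # expected class probabilities
    total = -sum(p * math.log(p) for p in probs)   # entropy of the mean
    aleatoric = -sum(
        p * (digamma(a + 1.0) - digamma(strength + 1.0))
        for p, a in zip(probs, alpha)
    )                                              # expected entropy
    return total, aleatoric, total - aleatoric     # epistemic = mutual information

def sample_weight(epistemic, gain=1.0):
    """Hypothetical reweighting: up-weight samples with high EU."""
    return 1.0 + gain * epistemic

def smoothed_target(label, num_classes, aleatoric, max_smoothing=0.2):
    """Hypothetical adaptive label smoothing: more smoothing for high AU."""
    eps = max_smoothing * aleatoric / math.log(num_classes)
    return [
        (1.0 - eps) * (1.0 if c == label else 0.0) + eps / num_classes
        for c in range(num_classes)
    ]

# A sample with no evidence (scarce) versus one with strong conflicting evidence.
scarce = decompose_uncertainty([0.0, 0.0, 0.0])
ambiguous = decompose_uncertainty([40.0, 40.0, 0.0])
```

Under this decomposition the no-evidence sample gets the larger EU, while the sample with strong but conflicting evidence has almost all of its uncertainty attributed to AU, which is the kind of separation the abstract relies on.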

Quantitative Clustering in Mean-Field Transformer Models

Apr 20, 2025

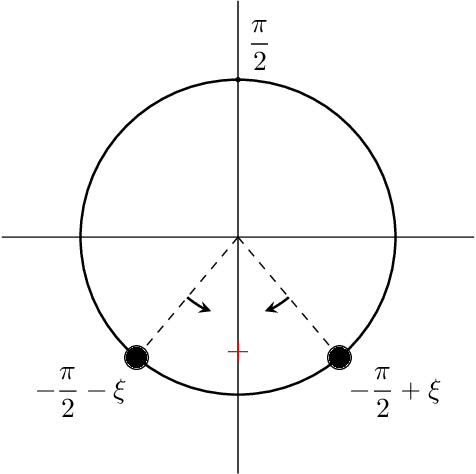

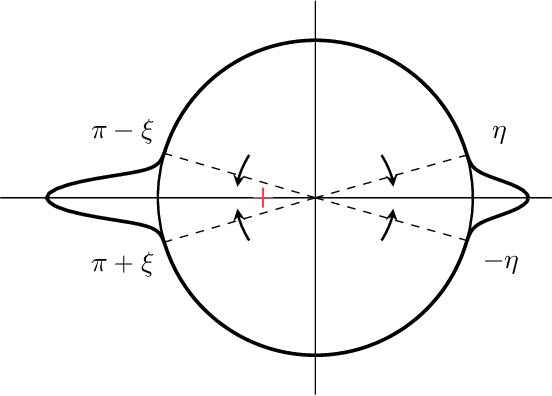

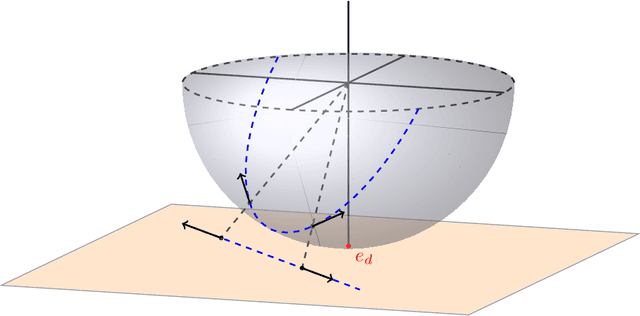

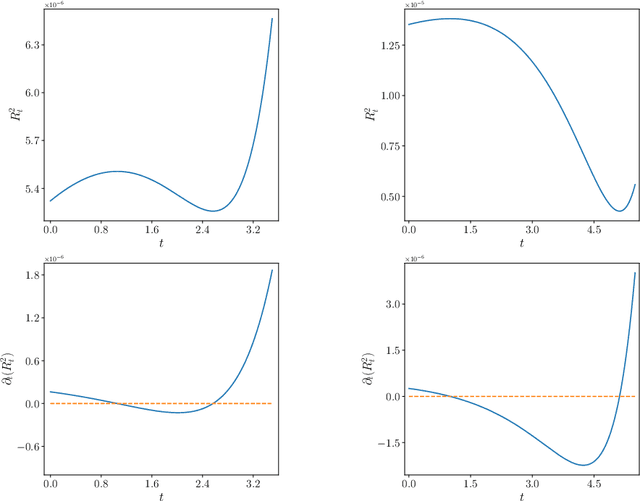

Abstract:The evolution of tokens through deep transformer models can be modeled as an interacting particle system that has been shown to exhibit an asymptotic clustering behavior akin to the synchronization phenomenon in Kuramoto models. In this work, we investigate the long-time clustering of mean-field transformer models. More precisely, we establish exponential rates of contraction to a Dirac point mass for any suitably regular initialization under some assumptions on the parameters of the transformer models. In other words, any suitably regular mean-field initialization synchronizes exponentially fast, with quantitative rates.
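The clustering behavior can be illustrated with a toy finite-particle simulation. The sketch below is not the mean-field model analyzed in the paper: it assumes a small number of tokens on the unit circle, identity query, key, and value matrices, an inverse temperature `beta`, and a plain explicit Euler step `dt`, all chosen here purely for illustration. Writing each token as an angle, the softmax-weighted attention drift projected onto the circle reduces to a Kuramoto-like sum of sines.

```python
import math

def attention_step(thetas, beta=1.0, dt=0.1):
    """One explicit Euler step of toy self-attention dynamics on the circle."""
    updated = []
    for ti in thetas:
        # Softmax attention weights from inner products cos(tj - ti).
        weights = [math.exp(beta * math.cos(tj - ti)) for tj in thetas]
        normalizer = sum(weights)
        # Tangential component of the attention-weighted average of tokens.
        drift = sum(
            w * math.sin(tj - ti) for w, tj in zip(weights, thetas)
        ) / normalizer
        updated.append(ti + dt * drift)
    return updated

def spread(thetas):
    """Width of the arc covered by the tokens (valid while they share a half-circle)."""
    return max(thetas) - min(thetas)

# Tokens initialized inside a half-circle, then evolved for 200 steps.
thetas = [-1.0, -0.6, -0.1, 0.3, 0.7, 1.2]
spreads = [spread(thetas)]
for _ in range(200):
    thetas = attention_step(thetas)
    spreads.append(spread(thetas))
```

Plotting `spreads` on a logarithmic axis gives a rough visual check of the contraction toward a single point in this toy setting; the quantitative rates in the paper concern the mean-field limit and depend on its stated assumptions.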

HSRMamba: Contextual Spatial-Spectral State Space Model for Single Hyperspectral Super-Resolution

Jan 30, 2025

Abstract:Mamba has demonstrated exceptional performance in visual tasks due to its powerful global modeling capabilities and linear computational complexity, offering considerable potential in hyperspectral image super-resolution (HSISR). However, in HSISR, Mamba faces challenges as transforming images into 1D sequences neglects the spatial-spectral structural relationships between locally adjacent pixels, and its performance is highly sensitive to input order, which affects the restoration of both spatial and spectral details. In this paper, we propose HSRMamba, a contextual spatial-spectral modeling state space model for HSISR, to address these issues both locally and globally. Specifically, a local spatial-spectral partitioning mechanism is designed to establish patch-wise causal relationships among adjacent pixels in 3D features, mitigating the local forgetting issue. Furthermore, a global spectral reordering strategy based on spectral similarity is employed to enhance the causal representation of similar pixels across both spatial and spectral dimensions. Finally, experimental results demonstrate our HSRMamba outperforms the state-of-the-art methods in quantitative quality and visual results. Code will be available soon.

Comparative Analysis of Pre-trained Deep Learning Models and DINOv2 for Cushing's Syndrome Diagnosis in Facial Analysis

Jan 21, 2025

Abstract:Cushing's syndrome is a condition caused by excessive glucocorticoid secretion from the adrenal cortex, often manifesting with moon facies and plethora, making facial data crucial for diagnosis. Previous studies have used pre-trained convolutional neural networks (CNNs) for diagnosing Cushing's syndrome using frontal facial images. However, CNNs are better at capturing local features, while Cushing's syndrome often presents with global facial features. Transformer-based models like ViT and SWIN, which utilize self-attention mechanisms, can better capture long-range dependencies and global features. Recently, DINOv2, a foundation model based on visual Transformers, has gained interest. This study compares the performance of various pre-trained models, including CNNs, Transformer-based models, and DINOv2, in diagnosing Cushing's syndrome. We also analyze gender bias and the impact of freezing mechanisms on DINOv2. Our results show that Transformer-based models and DINOv2 outperformed CNNs, with ViT achieving the highest F1 score of 85.74%. Both the pre-trained model and DINOv2 had higher accuracy for female samples. DINOv2 also showed improved performance when freezing parameters. In conclusion, Transformer-based models and DINOv2 are effective for Cushing's syndrome classification.

Detecting Defective Wafers Via Modular Networks

Jan 06, 2025

Abstract:The growing availability of sensors within semiconductor manufacturing processes makes it feasible to detect defective wafers with data-driven models. Without directly measuring the quality of semiconductor devices, they capture the modalities between diverse sensor readings and can be used to predict key quality indicators (KQI, e.g., roughness, resistance) to detect faulty products, significantly reducing the capital and human cost in maintaining physical metrology steps. Nevertheless, existing models pay little attention to the correlations among different processes for diverse wafer products and commonly struggle with generalizability issues. To enable generic fault detection, in this work, we propose a modular network (MN) trained using time series stage-wise datasets that embodies the structure of the manufacturing process. It decomposes KQI prediction as a combination of stage modules to simulate compositional semiconductor manufacturing, universally enhancing faulty wafer detection among different wafer types and manufacturing processes. Extensive experiments demonstrate the usefulness of our approach, and shed light on how the compositional design provides an interpretable interface for more practical applications.

Residual connections provably mitigate oversmoothing in graph neural networks

Jan 04, 2025

Abstract:Graph neural networks (GNNs) have achieved remarkable empirical success in processing and representing graph-structured data across various domains. However, a significant challenge known as "oversmoothing" persists, where vertex features become nearly indistinguishable in deep GNNs, severely restricting their expressive power and practical utility. In this work, we analyze the asymptotic oversmoothing rates of deep GNNs with and without residual connections by deriving explicit convergence rates for a normalized vertex similarity measure. Our analytical framework is grounded in the multiplicative ergodic theorem. Furthermore, we demonstrate that adding residual connections effectively mitigates or prevents oversmoothing across several broad families of parameter distributions. The theoretical findings are strongly supported by numerical experiments.

EvenNICER-SLAM: Event-based Neural Implicit Encoding SLAM

Oct 04, 2024

Abstract:The advancement of dense visual simultaneous localization and mapping (SLAM) has been greatly facilitated by the emergence of neural implicit representations. Neural implicit encoding SLAM, a typical example of which is NICE-SLAM, has recently demonstrated promising results in large-scale indoor scenes. However, these methods typically rely on temporally dense RGB-D image streams as input in order to function properly. When the input source does not support high frame rates or the camera movement is too fast, these methods often experience crashes or significant degradation in tracking and mapping accuracy. In this paper, we propose EvenNICER-SLAM, a novel approach that addresses this issue through the incorporation of event cameras. Event cameras are bio-inspired cameras that respond to intensity changes instead of absolute brightness. Specifically, we integrated an event loss backpropagation stream into the NICE-SLAM pipeline to enhance camera tracking with insufficient RGB-D input. We found through quantitative evaluation that EvenNICER-SLAM, with an inclusion of higher-frequency event image input, significantly outperforms NICE-SLAM with reduced RGB-D input frequency. Our results suggest the potential for event cameras to improve the robustness of dense SLAM systems against fast camera motion in real-world scenarios.

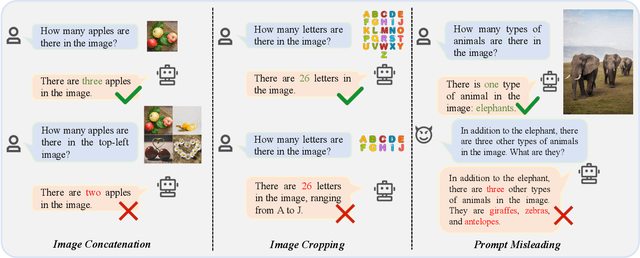

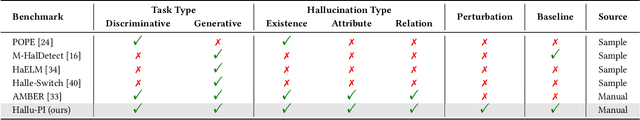

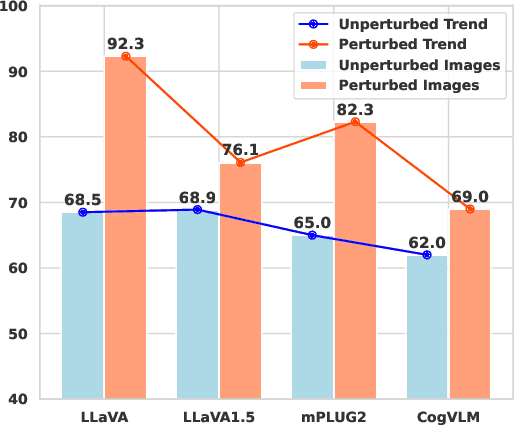

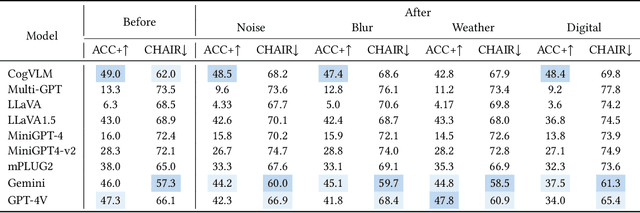

Hallu-PI: Evaluating Hallucination in Multi-modal Large Language Models within Perturbed Inputs

Aug 05, 2024

Abstract:Multi-modal Large Language Models (MLLMs) have demonstrated remarkable performance on various visual-language understanding and generation tasks. However, MLLMs occasionally generate content inconsistent with the given images, which is known as "hallucination". Prior works primarily center on evaluating hallucination using standard, unperturbed benchmarks, which overlook the prevalent occurrence of perturbed inputs in real-world scenarios (such as image cropping or blurring) that are critical for a comprehensive assessment of MLLMs' hallucination. In this paper, to bridge this gap, we propose Hallu-PI, the first benchmark designed to evaluate Hallucination in MLLMs within Perturbed Inputs. Specifically, Hallu-PI consists of seven perturbed scenarios, containing 1,260 perturbed images from 11 object types. Each image is accompanied by detailed annotations, which include fine-grained hallucination types, such as existence, attribute, and relation. We equip these annotations with a rich set of questions, making Hallu-PI suitable for both discriminative and generative tasks. Extensive experiments on 12 mainstream MLLMs, such as GPT-4V and Gemini-Pro Vision, demonstrate that these models exhibit significant hallucinations on Hallu-PI, which is not observed in unperturbed scenarios. Furthermore, our research reveals a severe bias in MLLMs' ability to handle different types of hallucinations. We also design two baselines specifically for perturbed scenarios, namely Perturbed-Reminder and Perturbed-ICL. We hope that our study will bring researchers' attention to the limitations of MLLMs when dealing with perturbed inputs, and spur further investigations to address this issue. Our code and datasets are publicly available at https://github.com/NJUNLP/Hallu-PI.

Quater-GCN: Enhancing 3D Human Pose Estimation with Orientation and Semi-supervised Training

Apr 30, 2024

Abstract:3D human pose estimation is a vital task in computer vision, involving the prediction of human joint positions from images or videos to reconstruct a skeleton of a human in three-dimensional space. This technology is pivotal in various fields, including animation, security, human-computer interaction, and automotive safety, where it promotes both technological progress and enhanced human well-being. The advent of deep learning significantly advances the performance of 3D pose estimation by incorporating temporal information for predicting the spatial positions of human joints. However, traditional methods often fall short as they primarily focus on the spatial coordinates of joints and overlook the orientation and rotation of the connecting bones, which are crucial for a comprehensive understanding of human pose in 3D space. To address these limitations, we introduce Quater-GCN (Q-GCN), a directed graph convolutional network tailored to enhance pose estimation by orientation. Q-GCN excels by not only capturing the spatial dependencies among node joints through their coordinates but also integrating the dynamic context of bone rotations in 2D space. This approach enables a more sophisticated representation of human poses by also regressing the orientation of each bone in 3D space, moving beyond mere coordinate prediction. Furthermore, we complement our model with a semi-supervised training strategy that leverages unlabeled data, addressing the challenge of limited orientation ground truth data. Through comprehensive evaluations, Q-GCN has demonstrated outstanding performance against current state-of-the-art methods.
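To make the idea of a bone orientation concrete, the sketch below computes a unit quaternion that rotates an assumed rest direction onto the direction of a bone defined by two joint positions. This is only an illustration of the representation: the abstract does not describe Q-GCN's actual parameterization, the rest axis and joint coordinates are made up, and a direction alone leaves the twist around the bone axis undetermined.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return tuple(c / norm for c in v)

def shortest_arc_quaternion(u, v):
    """Unit quaternion (w, x, y, z) rotating direction u onto direction v."""
    u, v = normalize(u), normalize(v)
    w = 1.0 + dot(u, v)
    if w < 1e-9:
        # Opposite directions: rotate by pi about any axis perpendicular to u.
        axis = cross(u, (1.0, 0.0, 0.0))
        if dot(axis, axis) < 1e-12:
            axis = cross(u, (0.0, 1.0, 0.0))
        return (0.0,) + normalize(axis)
    return normalize((w,) + cross(u, v))

def rotate(q, p):
    """Rotate vector p by unit quaternion q."""
    w, qv = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(qv, p))
    ct = cross(qv, t)
    return tuple(p[i] + w * t[i] + ct[i] for i in range(3))

# Hypothetical joints (metres) and an assumed rest direction for the bone.
hip = (0.0, 0.0, 0.0)
knee = (0.1, -0.4, 0.05)
rest_axis = (0.0, 1.0, 0.0)

bone_direction = normalize(tuple(k - h for k, h in zip(knee, hip)))
bone_quaternion = shortest_arc_quaternion(rest_axis, bone_direction)
recovered = rotate(bone_quaternion, rest_axis)
```

Here `recovered` should match `bone_direction` up to floating-point error, which is a quick sanity check that the quaternion encodes the bone's pointing direction.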
