Satoshi Nakamura

Understanding Mental States to Guide Social Influence in Multi-Person Group Dialogue

Jan 20, 2026Abstract:Existing dynamic Theory of Mind (ToM) benchmarks mostly place language models in a passive role: the model reads a sequence of connected scenarios and reports what people believe, feel, intend, and do as these states change. In real social interaction, ToM is also used for action: a speaker plans what to say in order to shift another person's mental-state trajectory toward a goal. We introduce SocialMindChange, a benchmark that moves from tracking minds to changing minds in social interaction. Each instance defines a social context with 4 characters and five connected scenes. The model plays one character and generates dialogue across the five scenes to reach the target while remaining consistent with the evolving states of all participants. SocialMindChange also includes selected higher-order states. Using a structured four-step framework, we construct 1,200 social contexts, covering 6000 scenarios and over 90,000 questions, each validated for realism and quality. Evaluations on ten state-of-the-art LLMs show that their average performance is 54.2% below human performance. This gap suggests that current LLMs still struggle to maintain and change mental-state representations across long, linked interactions.

Redefining Machine Simultaneous Interpretation: From Incremental Translation to Human-Like Strategies

Jan 16, 2026Abstract:Simultaneous Machine Translation (SiMT) requires high-quality translations under strict real-time constraints, which traditional policies with only READ/WRITE actions cannot fully address. We extend the action space of SiMT with four adaptive actions: Sentence_Cut, Drop, Partial_Summarization and Pronominalization, which enable real-time restructuring, omission, and simplification while preserving semantic fidelity. We adapt these actions in a large language model (LLM) framework and construct training references through action-aware prompting. To evaluate both quality and word-level monotonicity, we further develop a latency-aware TTS pipeline that maps textual outputs to speech with realistic timing. Experiments on the ACL60/60 English-Chinese, English-German and English-Japanese benchmarks show that our framework consistently improves semantic metrics and achieves lower delay compared to reference translations and salami-based baselines. Notably, combining Drop and Sentence_Cut leads to consistent improvements in the balance between fluency and latency. These results demonstrate that enriching the action space of LLM-based SiMT provides a promising direction for bridging the gap between human and machine interpretation.

SASST: Leveraging Syntax-Aware Chunking and LLMs for Simultaneous Speech Translation

Aug 11, 2025Abstract:This work proposes a grammar-based chunking strategy that segments input streams into semantically complete units by parsing dependency relations (e.g., noun phrase boundaries, verb-object structures) and punctuation features. The method ensures chunk coherence and minimizes semantic fragmentation. Building on this mechanism, we present SASST (Syntax-Aware Simultaneous Speech Translation), an end-to-end framework integrating frozen Whisper encoder and decoder-only LLM. The unified architecture dynamically outputs translation tokens or <WAIT> symbols to jointly optimize translation timing and content, with target-side reordering addressing word-order divergence. Experiments on CoVoST2 multilingual corpus En-{De, Zh, Ja} demonstrate significant translation quality improvements across languages and validate the effectiveness of syntactic structures in LLM-driven SimulST systems.

Development of a Large-scale Dataset of Chest Computed Tomography Reports in Japanese and a High-performance Finding Classification Model

Dec 20, 2024

Abstract:Background: Recent advances in large language models highlight the need for high-quality multilingual medical datasets. While Japan leads globally in CT scanner deployment and utilization, the lack of large-scale Japanese radiology datasets has hindered the development of specialized language models for medical imaging analysis. Objective: To develop a comprehensive Japanese CT report dataset through machine translation and establish a specialized language model for structured finding classification. Additionally, to create a rigorously validated evaluation dataset through expert radiologist review. Methods: We translated the CT-RATE dataset (24,283 CT reports from 21,304 patients) into Japanese using GPT-4o mini. The training dataset consisted of 22,778 machine-translated reports, while the validation dataset included 150 radiologist-revised reports. We developed CT-BERT-JPN based on "tohoku-nlp/bert-base-japanese-v3" architecture for extracting 18 structured findings from Japanese radiology reports. Results: Translation metrics showed strong performance with BLEU scores of 0.731 and 0.690, and ROUGE scores ranging from 0.770 to 0.876 for Findings and from 0.748 to 0.857 for Impression sections. CT-BERT-JPN demonstrated superior performance compared to GPT-4o in 11 out of 18 conditions, including lymphadenopathy (+14.2%), interlobular septal thickening (+10.9%), and atelectasis (+7.4%). The model maintained F1 scores exceeding 0.95 in 14 out of 18 conditions and achieved perfect scores in four conditions. Conclusions: Our study establishes a robust Japanese CT report dataset and demonstrates the effectiveness of a specialized language model for structured finding classification. The hybrid approach of machine translation and expert validation enables the creation of large-scale medical datasets while maintaining high quality.

Findings of the IWSLT 2024 Evaluation Campaign

Nov 07, 2024Abstract:This paper reports on the shared tasks organized by the 21st IWSLT Conference. The shared tasks address 7 scientific challenges in spoken language translation: simultaneous and offline translation, automatic subtitling and dubbing, speech-to-speech translation, dialect and low-resource speech translation, and Indic languages. The shared tasks attracted 18 teams whose submissions are documented in 26 system papers. The growing interest towards spoken language translation is also witnessed by the constantly increasing number of shared task organizers and contributors to the overview paper, almost evenly distributed across industry and academia.

A Transformer Framework for Simultaneous Segmentation, Classification, and Caller Identification of Marmoset Vocalization

Nov 06, 2024

Abstract:Marmoset, a highly vocalized primate, has become a popular animal model for studying social-communicative behavior and its underlying mechanism comparing with human infant linguistic developments. In the study of vocal communication, it is vital to know the caller identities, call contents, and vocal exchanges. Previous work of a CNN has achieved a joint model for call segmentation, classification, and caller identification for marmoset vocalizations. However, the CNN has limitations in modeling long-range acoustic patterns; the Transformer architecture that has been shown to outperform CNNs, utilizes the self-attention mechanism that efficiently segregates information parallelly over long distances and captures the global structure of marmoset vocalization. We propose using the Transformer to jointly segment and classify the marmoset calls and identify the callers for each vocalization.

A Neural Transformer Framework for Simultaneous Tasks of Segmentation, Classification, and Caller Identification of Marmoset Vocalization

Oct 30, 2024

Abstract:Marmoset, a highly vocalized primate, has become a popular animal model for studying social-communicative behavior and its underlying mechanism. In the study of vocal communication, it is vital to know the caller identities, call contents, and vocal exchanges. Previous work of a CNN has achieved a joint model for call segmentation, classification, and caller identification for marmoset vocalizations. However, the CNN has limitations in modeling long-range acoustic patterns; the Transformer architecture that has been shown to outperform CNNs, utilizes the self-attention mechanism that efficiently segregates information parallelly over long distances and captures the global structure of marmoset vocalization. We propose using the Transformer to jointly segment and classify the marmoset calls and identify the callers for each vocalization.

LLaST: Improved End-to-end Speech Translation System Leveraged by Large Language Models

Jul 22, 2024

Abstract:We introduces LLaST, a framework for building high-performance Large Language model based Speech-to-text Translation systems. We address the limitations of end-to-end speech translation(E2E ST) models by exploring model architecture design and optimization techniques tailored for LLMs. Our approach includes LLM-based speech translation architecture design, ASR-augmented training, multilingual data augmentation, and dual-LoRA optimization. Our approach demonstrates superior performance on the CoVoST-2 benchmark and showcases exceptional scaling capabilities powered by LLMs. We believe this effective method will serve as a strong baseline for speech translation and provide insights for future improvements of the LLM-based speech translation framework. We release the data, code and models in https://github.com/openaudiolab/LLaST.

A Word Order Synchronization Metric for Evaluating Simultaneous Interpretation and Translation

Jul 09, 2024

Abstract:Simultaneous interpretation (SI), the translation of one language to another in real time, starts translation before the original speech has finished. Its evaluation needs to consider both latency and quality. This trade-off is challenging especially for distant word order language pairs such as English and Japanese. To handle this word order gap, interpreters maintain the word order of the source language as much as possible to keep up with original language to minimize its latency while maintaining its quality, whereas in translation reordering happens to keep fluency in the target language. This means outputs synchronized with the source language are desirable based on the real SI situation, and it's a key for further progress in computational SI and simultaneous machine translation (SiMT). In this work, we propose an automatic evaluation metric for SI and SiMT focusing on word order synchronization. Our evaluation metric is based on rank correlation coefficients, leveraging cross-lingual pre-trained language models. Our experimental results on NAIST-SIC-Aligned and JNPC showed our metrics' effectiveness to measure word order synchronization between source and target language.

NAIST Simultaneous Speech Translation System for IWSLT 2024

Jun 30, 2024

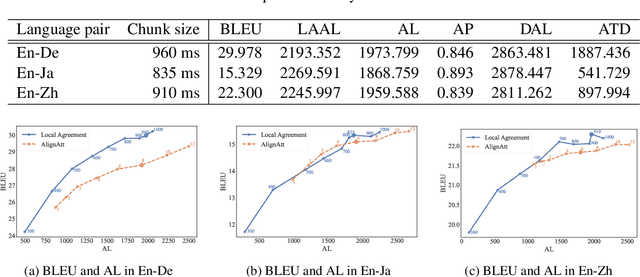

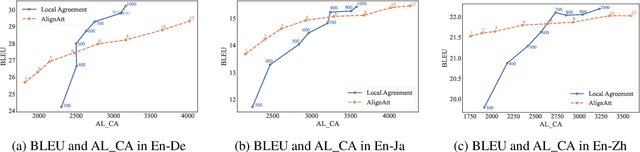

Abstract:This paper describes NAIST's submission to the simultaneous track of the IWSLT 2024 Evaluation Campaign: English-to-{German, Japanese, Chinese} speech-to-text translation and English-to-Japanese speech-to-speech translation. We develop a multilingual end-to-end speech-to-text translation model combining two pre-trained language models, HuBERT and mBART. We trained this model with two decoding policies, Local Agreement (LA) and AlignAtt. The submitted models employ the LA policy because it outperformed the AlignAtt policy in previous models. Our speech-to-speech translation method is a cascade of the above speech-to-text model and an incremental text-to-speech (TTS) module that incorporates a phoneme estimation model, a parallel acoustic model, and a parallel WaveGAN vocoder. We improved our incremental TTS by applying the Transformer architecture with the AlignAtt policy for the estimation model. The results show that our upgraded TTS module contributed to improving the system performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge