Ruixiang Tang

Data Augmentation for High-Fidelity Generation of CAR-T/NK Immunological Synapse Images

Feb 03, 2026

Abstract: Chimeric antigen receptor (CAR)-T and NK cell immunotherapies have transformed cancer treatment, and recent studies suggest that the quality of the CAR-T/NK cell immunological synapse (IS) may serve as a functional biomarker for predicting therapeutic efficacy. Accurate detection and segmentation of CAR-T/NK IS structures using artificial neural networks (ANNs) can greatly increase the speed and reliability of IS quantification. However, a persistent challenge is the limited size of annotated microscopy datasets, which restricts the ability of ANNs to generalize. To address this challenge, we integrate two complementary data-augmentation frameworks. First, we employ Instance Aware Automatic Augmentation (IAAA), an automated, instance-preserving augmentation method that generates synthetic CAR-T/NK IS images and corresponding segmentation masks by applying optimized augmentation policies to original IS data. IAAA supports multiple imaging modalities (e.g., fluorescence and brightfield) and can be applied directly to CAR-T/NK IS images derived from patient samples. In parallel, we introduce a Semantic-Aware AI Augmentation (SAAA) pipeline that combines a diffusion-based mask generator with a Pix2Pix conditional image synthesizer. This second method enables the creation of diverse, anatomically realistic segmentation masks and produces high-fidelity CAR-T/NK IS images aligned with those masks, further expanding the training corpus beyond what IAAA alone can provide. Together, these augmentation strategies generate synthetic images whose visual and structural properties closely match real IS data, significantly improving CAR-T/NK IS detection and segmentation performance. By enhancing the robustness and accuracy of IS quantification, this work supports the development of more reliable imaging-based biomarkers for predicting patient response to CAR-T/NK immunotherapy.

TokenSeek: Memory Efficient Fine Tuning via Instance-Aware Token Ditching

Jan 27, 2026

Abstract: Fine-tuning has been regarded as the de facto approach for adapting large language models (LLMs) to downstream tasks, but the high training memory consumption inherited from LLMs makes this process inefficient. Among existing memory-efficient approaches, activation-related optimization has proven particularly effective, as activations consistently dominate overall memory consumption. Although prior work offers various activation optimization strategies, their data-agnostic nature ultimately results in ineffective and unstable fine-tuning. In this paper, we propose TokenSeek, a universal plugin solution for various transformer-based models through instance-aware token seeking and ditching, achieving significant fine-tuning memory savings (e.g., requiring only 14.8% of the memory on Llama3.2 1B) with on-par or even better performance. Furthermore, our interpretable token seeking process reveals the underlying reasons for its effectiveness, offering valuable insights for future research on token efficiency. Homepage: https://runjia.tech/iclr_tokenseek/

Reasoning over Precedents Alongside Statutes: Case-Augmented Deliberative Alignment for LLM Safety

Jan 12, 2026

Abstract: Ensuring that Large Language Models (LLMs) adhere to safety principles without refusing benign requests remains a significant challenge. While OpenAI introduced deliberative alignment (DA) to enhance the safety of its o-series models through reasoning over detailed "code-like" safety rules, the effectiveness of this approach in open-source LLMs, which typically lack advanced reasoning capabilities, is understudied. In this work, we systematically evaluate the impact of explicitly specifying extensive safety codes versus demonstrating them through illustrative cases. We find that referencing explicit codes inconsistently improves harmlessness and systematically degrades helpfulness, whereas training on case-augmented simple codes yields more robust and generalized safety behaviors. By guiding LLMs with case-augmented reasoning instead of extensive code-like safety rules, we avoid rigid adherence to narrowly enumerated rules and enable broader adaptability. Building on these insights, we propose CADA, a case-augmented deliberative alignment method for LLMs utilizing reinforcement learning on self-generated safety reasoning chains. CADA effectively enhances harmlessness, improves robustness against attacks, and reduces over-refusal while preserving utility across diverse benchmarks, offering a practical alternative to rule-only DA for improving safety while maintaining helpfulness.

Read the Scene, Not the Script: Outcome-Aware Safety for LLMs

Oct 05, 2025

Abstract: Safety-aligned Large Language Models (LLMs) still show two dominant failure modes: they are easily jailbroken, or they over-refuse harmless inputs that contain sensitive surface signals. We trace both to a common cause: current models reason weakly about links between actions and outcomes and over-rely on surface-form signals, lexical or stylistic cues that do not encode consequences. We define this failure mode as Consequence-blindness. To study consequence-blindness, we build a benchmark named CB-Bench covering four risk scenarios that vary whether semantic risk aligns with outcome risk, enabling evaluation under both matched and mismatched conditions, which are often ignored by existing safety benchmarks. Mainstream models consistently fail to separate these risks and exhibit consequence-blindness, indicating that consequence-blindness is widespread and systematic. To mitigate consequence-blindness, we introduce CS-Chain-4k, a consequence-reasoning dataset for safety alignment. Models fine-tuned on CS-Chain-4k show clear gains against semantic-camouflage jailbreaks and reduce over-refusal on harmless inputs, while maintaining utility and generalization on other benchmarks. These results clarify the limits of current alignment, establish consequence-aware reasoning as a core alignment goal, and provide a more practical and reproducible evaluation path.

CATP: Contextually Adaptive Token Pruning for Efficient and Enhanced Multimodal In-Context Learning

Aug 11, 2025

Abstract: Modern large vision-language models (LVLMs) convert each input image into a large set of tokens, far outnumbering the text tokens. Although this improves visual perception, it introduces severe image token redundancy. Because image tokens carry sparse information, many add little to reasoning, yet greatly increase inference cost. Emerging image token pruning methods tackle this issue by identifying the most important tokens and discarding the rest. These methods can raise efficiency with only modest performance loss. However, most of them only consider single-image tasks and overlook multimodal in-context learning (ICL), where redundancy is greater and efficiency is more critical. Redundant tokens weaken the advantage of multimodal ICL for rapid domain adaptation and cause unstable performance. Applying existing pruning methods in this setting leads to large accuracy drops, exposing a clear gap and the need for new techniques. Thus, we propose Contextually Adaptive Token Pruning (CATP), a training-free pruning method targeted at multimodal ICL. CATP consists of two stages that perform progressive pruning to fully account for the complex cross-modal interactions in the input sequence. After removing 77.8% of the image tokens, CATP produces an average performance gain of 0.6% over the vanilla model on four LVLMs and eight benchmarks, outperforming all baselines. Meanwhile, it improves efficiency, achieving an average reduction of 10.78% in inference latency. CATP enhances the practical value of multimodal ICL and lays the groundwork for future progress in interleaved image-text scenarios.
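As a rough illustration of the generic idea described above (score the image tokens, keep the most important ones, discard the rest), here is a minimal sketch. It is not CATP's two-stage procedure: the function name `prune_tokens`, the `keep_ratio` parameter, and the importance scores are all hypothetical, and how the scores are obtained is left open.

```python
import math


def prune_tokens(scores, keep_ratio):
    """Return the indices of the tokens to keep, in their original order.

    scores: one importance score per image token (hypothetical values; the
            abstract does not say how importance is computed).
    keep_ratio: fraction of tokens to retain, in (0, 1].
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    # Keep at least one token so the image is never dropped entirely.
    n_keep = max(1, math.ceil(len(scores) * keep_ratio))
    # Rank token indices from most to least important.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # Restore positional order for the surviving tokens.
    return sorted(ranked[:n_keep])


# Keeping 22.2% of tokens corresponds to removing 77.8% of them.
scores = [0.1, 0.9, 0.3, 0.8, 0.05, 0.7, 0.2, 0.6, 0.4]
kept = prune_tokens(scores, keep_ratio=0.222)
print(kept)  # [1, 3]
```

Sorting the kept indices preserves the original token order, which matters when the surviving tokens are passed on to a model that relies on positional information.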

A Semantic Segmentation Algorithm for Pleural Effusion Based on DBIF-AUNet

Aug 08, 2025

Abstract: Pleural effusion semantic segmentation can significantly enhance the accuracy and timeliness of clinical diagnosis and treatment by precisely identifying disease severity and lesion areas. Currently, semantic segmentation of pleural effusion CT images faces multiple challenges. These include similar gray levels between effusion and surrounding tissues, blurred edges, and variable morphology. Existing methods often struggle with diverse image variations and complex edges, primarily because direct feature concatenation causes semantic gaps. To address these challenges, we propose the Dual-Branch Interactive Fusion Attention model (DBIF-AUNet). This model constructs a densely nested skip-connection network and innovatively refines the Dual-Domain Feature Disentanglement module (DDFD). The DDFD module orthogonally decouples the functions of dual-domain modules to achieve multi-scale feature complementarity and enhance characteristics at different levels. Concurrently, we design a Branch Interaction Attention Fusion module (BIAF) that works synergistically with the DDFD. This module dynamically weights and fuses global, local, and frequency band features, thereby improving segmentation robustness. Furthermore, we implement a nested deep supervision mechanism with hierarchical adaptive hybrid loss to effectively address class imbalance. In validation on 1,622 pleural effusion CT images from Southwest Hospital, DBIF-AUNet achieved IoU and Dice scores of 80.1% and 89.0%, respectively. These results outperform the state-of-the-art medical image segmentation models U-Net++ and Swin-UNet by 5.7%/2.7% and 2.2%/1.5%, respectively, demonstrating a significant improvement in segmentation accuracy for complex pleural effusion CT images.
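For readers unfamiliar with the two reported metrics, the following minimal sketch computes IoU and Dice for a single pair of binary masks. The function name `iou_and_dice` and the flat 0/1 list representation are assumptions for illustration; the abstract does not describe the paper's evaluation code or how scores are averaged over images.

```python
def iou_and_dice(pred, target):
    """Compute (IoU, Dice) for two flat binary masks of equal length.

    pred, target: sequences of 0/1 labels (1 = effusion pixel).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    pred_area = sum(1 for p in pred if p)
    target_area = sum(1 for t in target if t)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks are empty: treat as a perfect match by convention.
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + target_area)
    return iou, dice


iou, dice = iou_and_dice([1, 1, 1, 0], [0, 1, 1, 1])
print(iou)  # 0.5
```

For a single mask pair the two metrics are linked by Dice = 2·IoU / (1 + IoU), so an IoU of 0.801 would correspond to a Dice of roughly 0.890 under that identity. Dataset-level averages need not satisfy it exactly.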

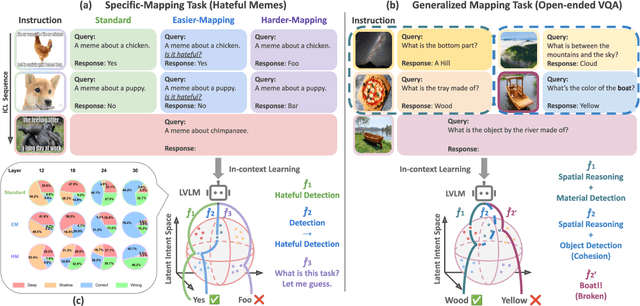

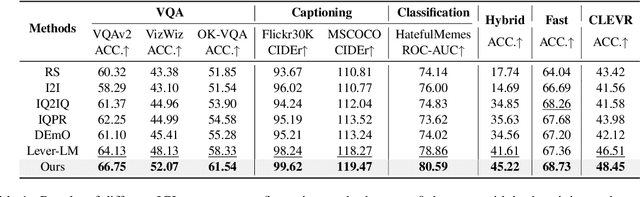

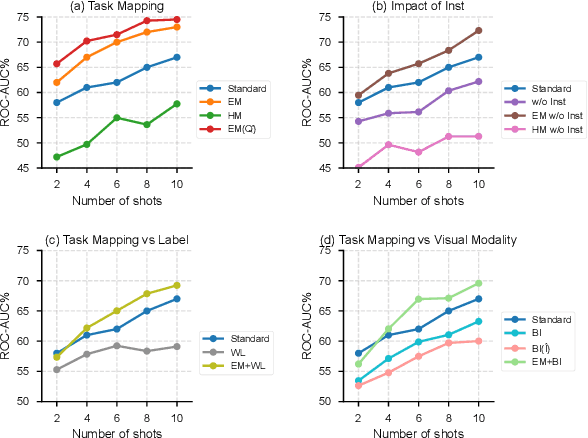

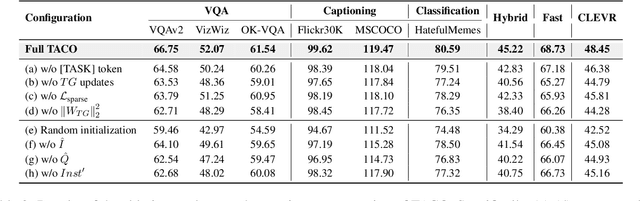

TACO: Enhancing Multimodal In-context Learning via Task Mapping-Guided Sequence Configuration

May 21, 2025

Abstract: Multimodal in-context learning (ICL) has emerged as a key mechanism for harnessing the capabilities of large vision-language models (LVLMs). However, its effectiveness remains highly sensitive to the quality of input in-context sequences, particularly for tasks involving complex reasoning or open-ended generation. A major obstacle is the limited understanding of how LVLMs actually exploit these sequences during inference. To bridge this gap, we systematically interpret multimodal ICL through the lens of task mapping, which reveals how local and global relationships within and among demonstrations guide model reasoning. Building on this insight, we present TACO, a lightweight transformer-based model equipped with task-aware attention that dynamically configures in-context sequences. By injecting task-mapping signals into the autoregressive decoding process, TACO creates a bidirectional synergy between sequence construction and task reasoning. Experiments on five LVLMs and nine datasets demonstrate that TACO consistently surpasses baselines across diverse ICL tasks. These results position task mapping as a valuable perspective for interpreting and improving multimodal ICL.

CAMA: Enhancing Multimodal In-Context Learning with Context-Aware Modulated Attention

May 21, 2025

Abstract: Multimodal in-context learning (ICL) enables large vision-language models (LVLMs) to efficiently adapt to novel tasks, supporting a wide array of real-world applications. However, multimodal ICL remains unstable, and current research largely focuses on optimizing sequence configuration while overlooking the internal mechanisms of LVLMs. In this work, we first provide a theoretical analysis of attentional dynamics in multimodal ICL and identify three core limitations of standard attention that impair ICL performance. To address these challenges, we propose Context-Aware Modulated Attention (CAMA), a simple yet effective plug-and-play method for directly calibrating LVLM attention logits. CAMA is training-free and can be seamlessly applied to various open-source LVLMs. We evaluate CAMA on four LVLMs across six benchmarks, demonstrating its effectiveness and generality. CAMA opens new opportunities for deeper exploration and targeted utilization of LVLM attention dynamics to advance multimodal reasoning.

M2IV: Towards Efficient and Fine-grained Multimodal In-Context Learning in Large Vision-Language Models

Apr 06, 2025

Abstract: Multimodal in-context learning (ICL) is a vital capability for Large Vision-Language Models (LVLMs), allowing task adaptation via contextual prompts without parameter retraining. However, its application is hindered by the token-intensive nature of inputs and the high complexity of cross-modal few-shot learning, which limits the expressive power of representation methods. To tackle these challenges, we propose M2IV, a method that substitutes explicit demonstrations with learnable In-context Vectors directly integrated into LVLMs. By exploiting the complementary strengths of multi-head attention (MHA) and multi-layer perceptrons (MLP), M2IV achieves robust cross-modal fidelity and fine-grained semantic distillation through training. This significantly enhances performance across diverse LVLMs and tasks and scales efficiently to many-shot scenarios, bypassing the context window limitations. We also introduce VLibrary, a repository for storing and retrieving M2IV, enabling flexible LVLM steering for tasks like cross-modal alignment, customized generation and safety improvement. Experiments across seven benchmarks and three LVLMs show that M2IV surpasses Vanilla ICL and prior representation engineering approaches, with an average accuracy gain of 3.74% over ICL with the same shot count, alongside substantial efficiency advantages.

EAZY: Eliminating Hallucinations in LVLMs by Zeroing out Hallucinatory Image Tokens

Mar 10, 2025

Abstract: Despite their remarkable potential, Large Vision-Language Models (LVLMs) still face challenges with object hallucination, a problem where their generated outputs mistakenly incorporate objects that do not actually exist. Although most works focus on addressing this issue within the language-model backbone, our work shifts the focus to the image input source, investigating how specific image tokens contribute to hallucinations. Our analysis reveals a striking finding: a small subset of image tokens with high attention scores is the primary driver of object hallucination. By removing these hallucinatory image tokens (only 1.5% of all image tokens), the issue can be effectively mitigated. This finding holds consistently across different models and datasets. Building on this insight, we introduce EAZY, a novel, training-free method that automatically identifies and Eliminates hAllucinations by Zeroing out hallucinatorY image tokens. We utilize EAZY for unsupervised object hallucination detection, achieving a 15% improvement compared to previous methods. Additionally, EAZY demonstrates remarkable effectiveness in mitigating hallucinations while preserving model utility and seamlessly adapting to various LVLM architectures.
