Li Yu

School of Electronic Information and Communications, Huazhong University of Science and Technology

Ostrakon-VL: Towards Domain-Expert MLLM for Food-Service and Retail Stores

Jan 29, 2026

Abstract: Multimodal Large Language Models (MLLMs) have recently achieved substantial progress in general-purpose perception and reasoning. Nevertheless, their deployment in Food-Service and Retail Stores (FSRS) scenarios encounters two major obstacles: (i) real-world FSRS data, collected from heterogeneous acquisition devices, are highly noisy and lack auditable, closed-loop data curation, which impedes the construction of high-quality, controllable, and reproducible training corpora; and (ii) existing evaluation protocols do not offer a unified, fine-grained, and standardized benchmark spanning single-image, multi-image, and video inputs, making it challenging to objectively gauge model robustness. To address these challenges, we first develop Ostrakon-VL, an FSRS-oriented MLLM based on Qwen3-VL-8B. Second, we introduce ShopBench, the first public benchmark for FSRS. Third, we propose QUAD (Quality-aware Unbiased Automated Data-curation), a multi-stage multimodal instruction data curation pipeline. Leveraging a multi-stage training strategy, Ostrakon-VL achieves an average score of 60.1 on ShopBench, establishing a new state of the art among open-source MLLMs with comparable parameter scales and diverse architectures. Notably, it surpasses the substantially larger Qwen3-VL-235B-A22B (59.4) by +0.7, and exceeds the same-scale Qwen3-VL-8B (55.3) by +4.8, demonstrating significantly improved parameter efficiency. These results indicate that Ostrakon-VL delivers more robust and reliable FSRS-centric perception and decision-making capabilities. To facilitate reproducible research, we will publicly release Ostrakon-VL and the ShopBench benchmark.

HeteroCache: A Dynamic Retrieval Approach to Heterogeneous KV Cache Compression for Long-Context LLM Inference

Jan 20, 2026

Abstract: The linear memory growth of the KV cache poses a significant bottleneck for LLM inference in long-context tasks. Existing static compression methods often fail to preserve globally important information, principally because they overlook the attention drift phenomenon where token significance evolves dynamically. Although recent dynamic retrieval approaches attempt to address this issue, they typically suffer from coarse-grained caching strategies and incur high I/O overhead due to frequent data transfers. To overcome these limitations, we propose HeteroCache, a training-free dynamic compression framework. Our method is built on two key insights: attention heads exhibit diverse temporal heterogeneity, and there is significant spatial redundancy among heads within the same layer. Guided by these insights, HeteroCache categorizes heads based on stability and redundancy. Consequently, we apply a fine-grained weighting strategy that allocates larger cache budgets to heads with rapidly shifting attention to capture context changes, thereby addressing the inefficiency of coarse-grained strategies. Furthermore, we employ a hierarchical storage mechanism in which a subset of representative heads monitors attention shift and triggers an asynchronous, on-demand retrieval of contexts from the CPU, effectively hiding I/O latency. Finally, experiments demonstrate that HeteroCache achieves state-of-the-art performance on multiple long-context benchmarks and accelerates decoding by up to $3\times$ compared to the original model at a 224K context length. Our code will be open-sourced.
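
The abstract does not spell out the weighting rule, so the following is only a minimal sketch of the idea of giving larger cache budgets to heads whose attention shifts quickly. The function name `allocate_budgets`, the per-head `instability` scores, and the `floor` parameter are all hypothetical and are not taken from HeteroCache itself.

```python
def allocate_budgets(instability, total_budget, floor=16):
    """Split a total KV-cache token budget across attention heads.

    instability: one non-negative score per head (a hypothetical measure of
    how fast that head's attention shifts); higher means less stable.
    Each head gets `floor` tokens, and the remainder is shared in
    proportion to instability, so fast-shifting heads get larger caches.
    """
    n = len(instability)
    spare = total_budget - floor * n
    if spare < 0:
        raise ValueError("total_budget is too small for the per-head floor")
    total = sum(instability)
    if total == 0:
        # All heads equally stable: share the spare budget evenly.
        shares = [spare // n] * n
    else:
        shares = [int(spare * s / total) for s in instability]
    # Integer rounding may leave a few tokens unassigned; give them to the
    # least stable head so the budgets always sum to total_budget.
    leftover = spare - sum(shares)
    shares[max(range(n), key=lambda i: instability[i])] += leftover
    return [floor + s for s in shares]


# Three heads with made-up instability scores and a 1048-token budget.
budgets = allocate_budgets([1, 4, 5], total_budget=1048)
# budgets == [116, 416, 516]
```

A proportional split is only one possible choice; the paper's categorization of heads by stability and redundancy may lead to a different rule.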

Remember Me, Refine Me: A Dynamic Procedural Memory Framework for Experience-Driven Agent Evolution

Dec 11, 2025

Abstract: Procedural memory enables large language model (LLM) agents to internalize "how-to" knowledge, theoretically reducing redundant trial-and-error. However, existing frameworks predominantly suffer from a "passive accumulation" paradigm, treating memory as a static append-only archive. To bridge the gap between static storage and dynamic reasoning, we propose $\textbf{ReMe}$ ($\textit{Remember Me, Refine Me}$), a comprehensive framework for experience-driven agent evolution. ReMe innovates across the memory lifecycle via three mechanisms: 1) $\textit{multi-faceted distillation}$, which extracts fine-grained experiences by recognizing success patterns, analyzing failure triggers, and generating comparative insights; 2) $\textit{context-adaptive reuse}$, which tailors historical insights to new contexts via scenario-aware indexing; and 3) $\textit{utility-based refinement}$, which autonomously adds valid memories and prunes outdated ones to maintain a compact, high-quality experience pool. Extensive experiments on BFCL-V3 and AppWorld demonstrate that ReMe establishes a new state of the art among agent memory systems. Crucially, we observe a significant memory-scaling effect: Qwen3-8B equipped with ReMe outperforms the larger, memoryless Qwen3-14B, suggesting that self-evolving memory provides a computation-efficient pathway for lifelong learning. We release our code and the $\texttt{reme.library}$ dataset to facilitate further research.
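
To make the third mechanism more concrete, here is a rough sketch of what utility-based pruning of an experience pool could look like. The abstract does not define the utility measure, so the `Memory` and `ExperiencePool` classes, the success-ratio utility, and the thresholds are illustrative assumptions and do not reflect the ReMe codebase.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    retrieved: int = 0   # times this memory was reused in a task
    helped: int = 0      # times the task then succeeded

    @property
    def utility(self):
        # Fraction of reuses that led to success; 0.0 before any reuse.
        return self.helped / self.retrieved if self.retrieved else 0.0


class ExperiencePool:
    """Hypothetical pool that adds memories and prunes low-utility ones."""

    def __init__(self, min_uses=3, min_utility=0.3):
        self.items = []
        self.min_uses = min_uses
        self.min_utility = min_utility

    def add(self, text):
        """Store a new experience distilled from a trajectory."""
        memory = Memory(text)
        self.items.append(memory)
        return memory

    def record(self, memory, success):
        """Update usage statistics after a memory was reused."""
        memory.retrieved += 1
        memory.helped += int(success)

    def prune(self):
        """Drop memories that were tried often enough but rarely helped."""
        self.items = [
            m for m in self.items
            if m.retrieved < self.min_uses or m.utility >= self.min_utility
        ]


pool = ExperiencePool()
good = pool.add("check the API schema before calling a tool")
stale = pool.add("retry the same call until it works")
for _ in range(3):
    pool.record(good, success=True)
    pool.record(stale, success=False)
pool.prune()  # only the helpful memory remains in pool.items
```

Memories with fewer than `min_uses` reuses are kept regardless of utility, so that new experiences are not discarded before they have been tried.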

Learning to Tell Apart: Weakly Supervised Video Anomaly Detection via Disentangled Semantic Alignment

Nov 13, 2025

Abstract: Recent advancements in weakly-supervised video anomaly detection have achieved remarkable performance by applying the multiple instance learning paradigm based on multimodal foundation models such as CLIP to highlight anomalous instances and classify categories. However, their objectives tend to detect only the most salient response segments while neglecting to mine diverse normal patterns separated from anomalies, and they are prone to category confusion due to similar appearance, leading to unsatisfactory fine-grained classification results. Therefore, we propose a novel Disentangled Semantic Alignment Network (DSANet) to explicitly separate abnormal and normal features from coarse-grained and fine-grained aspects, enhancing distinguishability. Specifically, at the coarse-grained level, we introduce a self-guided normality modeling branch that reconstructs input video features under the guidance of learned normal prototypes, encouraging the model to exploit normality cues inherent in the video, thereby improving the temporal separation of normal patterns and anomalous events. At the fine-grained level, we present a decoupled contrastive semantic alignment mechanism, which first temporally decomposes each video into event-centric and background-centric components using frame-level anomaly scores and then applies visual-language contrastive learning to enhance class-discriminative representations. Comprehensive experiments on two standard benchmarks, namely XD-Violence and UCF-Crime, demonstrate that DSANet outperforms existing state-of-the-art methods.

AgentEvolver: Towards Efficient Self-Evolving Agent System

Nov 13, 2025

Abstract: Autonomous agents powered by large language models (LLMs) have the potential to significantly enhance human productivity by reasoning, using tools, and executing complex tasks in diverse environments. However, current approaches to developing such agents remain costly and inefficient, as they typically require manually constructed task datasets and reinforcement learning (RL) pipelines with extensive random exploration. These limitations lead to prohibitively high data-construction costs, low exploration efficiency, and poor sample utilization. To address these challenges, we present AgentEvolver, a self-evolving agent system that leverages the semantic understanding and reasoning capabilities of LLMs to drive autonomous agent learning. AgentEvolver introduces three synergistic mechanisms: (i) self-questioning, which enables curiosity-driven task generation in novel environments, reducing dependence on handcrafted datasets; (ii) self-navigating, which improves exploration efficiency through experience reuse and hybrid policy guidance; and (iii) self-attributing, which enhances sample efficiency by assigning differentiated rewards to trajectory states and actions based on their contribution. By integrating these mechanisms into a unified framework, AgentEvolver enables scalable, cost-effective, and continual improvement of agent capabilities. Preliminary experiments indicate that AgentEvolver achieves more efficient exploration, better sample utilization, and faster adaptation compared to traditional RL-based baselines.

LENS: Learning to Segment Anything with Unified Reinforced Reasoning

Aug 19, 2025

Abstract: Text-prompted image segmentation enables fine-grained visual understanding and is critical for applications such as human-computer interaction and robotics. However, existing supervised fine-tuning methods typically ignore explicit chain-of-thought (CoT) reasoning at test time, which limits their ability to generalize to unseen prompts and domains. To address this issue, we introduce LENS, a scalable reinforcement-learning framework that jointly optimizes the reasoning process and segmentation in an end-to-end manner. We propose unified reinforcement-learning rewards that span sentence-, box-, and segment-level cues, encouraging the model to generate informative CoT rationales while refining mask quality. Using a publicly available 3-billion-parameter vision-language model, i.e., Qwen2.5-VL-3B-Instruct, LENS achieves an average cIoU of 81.2% on the RefCOCO, RefCOCO+, and RefCOCOg benchmarks, outperforming the strong fine-tuned method, i.e., GLaMM, by up to 5.6%. These results demonstrate that RL-driven CoT reasoning serves as a robust prior for text-prompted segmentation and offers a practical path toward more generalizable Segment Anything models. Code is available at https://github.com/hustvl/LENS.
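
The abstract reports cIoU without defining it. In referring-segmentation work, cIoU usually denotes cumulative IoU: the intersection summed over all samples divided by the union summed over all samples, rather than a mean of per-image IoUs. The sketch below assumes that convention; the function name and the flat 0/1 mask layout are illustrative and are not taken from the LENS repository.

```python
def cumulative_iou(predictions, targets):
    """cIoU over a dataset: summed intersection divided by summed union.

    predictions, targets: lists of binary masks, each mask a flat list of
    0/1 pixel values of equal length.
    """
    inter_total = 0
    union_total = 0
    for pred, gt in zip(predictions, targets):
        inter_total += sum(1 for p, g in zip(pred, gt) if p and g)
        union_total += sum(1 for p, g in zip(pred, gt) if p or g)
    return inter_total / union_total if union_total else 0.0


# Two tiny made-up masks: intersections 1 + 1, unions 2 + 3, so cIoU = 2/5.
preds = [[1, 1, 0, 0], [1, 0, 0, 0]]
gts = [[1, 0, 0, 0], [1, 1, 1, 0]]
score = cumulative_iou(preds, gts)
```

Because pixels are pooled before dividing, large objects weigh more in cIoU than in a per-image average, which for the toy masks above would be about 0.417 instead of 0.4.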

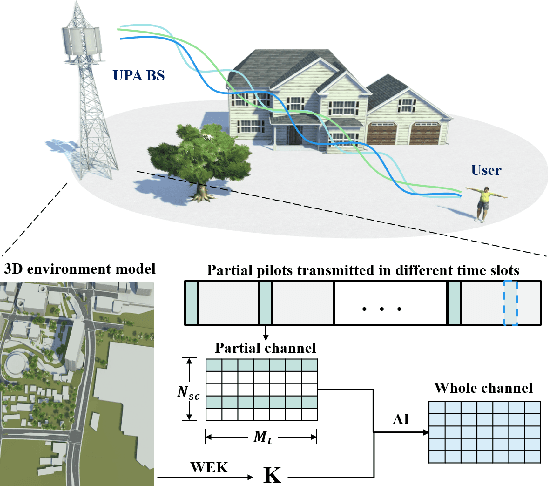

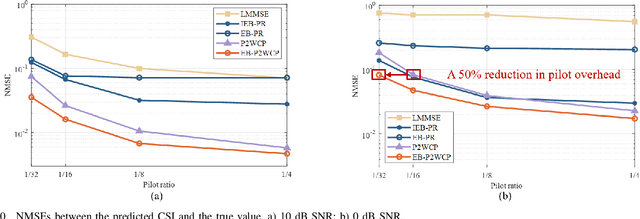

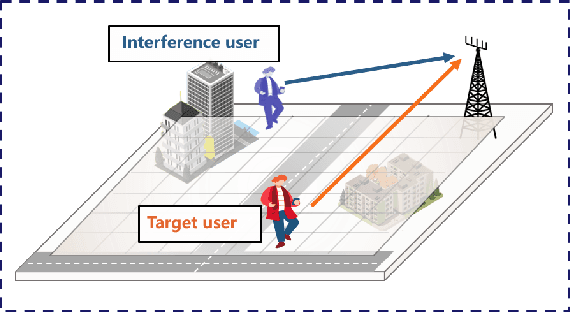

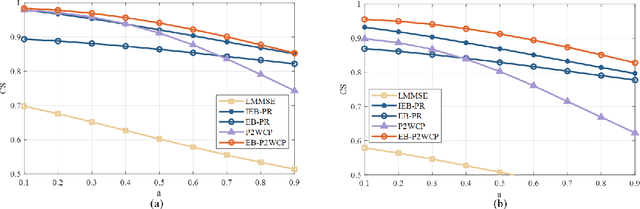

Digital Twin Channel-Aided CSI Prediction: A Environment-based Subspace Extraction Approach for Achieving Low Overhead and Robustness

Aug 07, 2025

Abstract: To meet the robust and high-speed communication requirements of the sixth-generation (6G) mobile communication system in complex scenarios, sensing- and artificial intelligence (AI)-based digital twin channel (DTC) techniques have become a promising approach to reduce system overhead. In this paper, we propose an environment-specific channel subspace basis (EB)-aided partial-to-whole channel state information (CSI) prediction method (EB-P2WCP) for realizing DTC-enabled low-overhead channel prediction. Specifically, the EB, which is extracted from the digital twin map, characterizes the static properties of the electromagnetic environment and serves as prior environmental information for the prediction task. Then, we fuse the EB with real-time estimated local CSI to predict the entire spatial-frequency domain channel for both the present and future time instances. Hence, an EB-based partial-to-whole CSI prediction network (EB-P2WNet) is designed to achieve a robust channel prediction scheme in various complex scenarios. Simulation results indicate that incorporating EB provides significant benefits under low signal-to-noise ratio and pilot ratio conditions, achieving a reduction of up to 50% in pilot overhead. Additionally, the proposed method maintains robustness against multi-user interference, tolerating 3-meter localization errors with only a 0.5 dB NMSE increase, and predicts CSI for the next channel coherence time within 1.3 milliseconds.
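
For readers unfamiliar with the metric, the normalized mean squared error (NMSE) quoted above is conventionally defined as follows. The abstract does not state the definition, so the symbols $\mathbf{H}$ (true channel) and $\hat{\mathbf{H}}$ (predicted channel) are assumptions used here for illustration.

$$
\mathrm{NMSE} = \mathbb{E}\left[\frac{\lVert \mathbf{H} - \hat{\mathbf{H}} \rVert_F^2}{\lVert \mathbf{H} \rVert_F^2}\right],
\qquad
\mathrm{NMSE}_{\mathrm{dB}} = 10 \log_{10}(\mathrm{NMSE})
$$

Under this definition, a 0.5 dB increase corresponds to the linear NMSE growing by a factor of $10^{0.05} \approx 1.12$, i.e., roughly 12%.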

Multi-Modal Large Models Based Beam Prediction: An Example Empowered by DeepSeek

Jun 06, 2025

Abstract: Beam prediction is an effective approach to reduce training overhead in massive multiple-input multiple-output (MIMO) systems. However, existing beam prediction models still exhibit limited generalization ability in diverse scenarios, which remains a critical challenge. In this paper, we propose MLM-BP, a beam prediction framework based on the multi-modal large model released by DeepSeek, with full consideration of multi-modal environmental information. Specifically, the distribution of scatterers that impact the optimal beam is captured by the sensing devices. Then, positions are tokenized to generate text-based representations, and multi-view images are processed by an image encoder, which is fine-tuned with low-rank adaptation (LoRA), to extract environmental embeddings. Finally, these embeddings are fed into the large model, and an output projection module is designed to determine the optimal beam index. Simulation results show that MLM-BP achieves 98.1% Top-1 accuracy on the simulation dataset. Additionally, it demonstrates few-shot generalization on a real-world dataset, achieving 72.7% Top-1 accuracy and 92.4% Top-3 accuracy with only 30% of the dataset, outperforming existing small models by over 15%.
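
The Top-1 and Top-3 figures above follow the usual Top-k convention: a prediction counts as correct when the true optimal beam index is among the k highest-scoring candidates. A minimal sketch of that metric is shown below; the function name and the toy scores are made up for illustration and are unrelated to the MLM-BP code or datasets.

```python
def top_k_accuracy(scores, best_beams, k):
    """Fraction of samples whose true best beam is among the k highest scores.

    scores: per-sample list of predicted scores, one per candidate beam.
    best_beams: per-sample index of the ground-truth optimal beam.
    """
    hits = 0
    for sample_scores, best in zip(scores, best_beams):
        # Beam indices sorted from highest to lowest predicted score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda i: sample_scores[i], reverse=True)
        hits += best in ranked[:k]
    return hits / len(best_beams)


# Three toy samples over a three-beam codebook.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
best_beams = [1, 1, 0]
top1 = top_k_accuracy(scores, best_beams, k=1)  # 1 of 3 samples hit
top2 = top_k_accuracy(scores, best_beams, k=2)  # 2 of 3 samples hit
```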

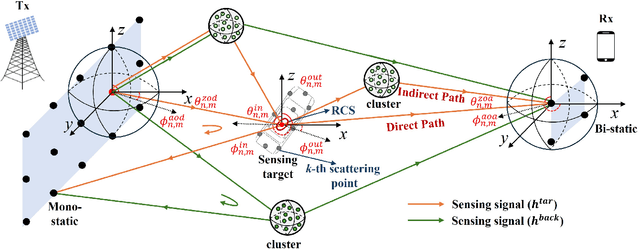

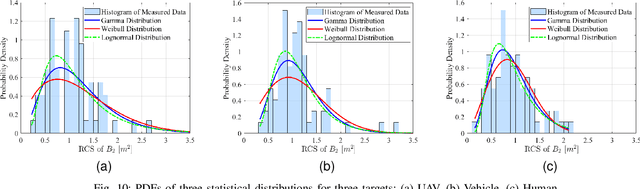

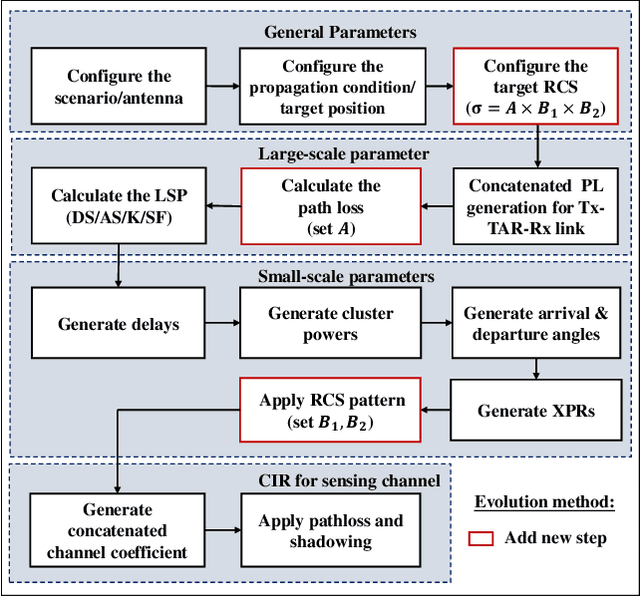

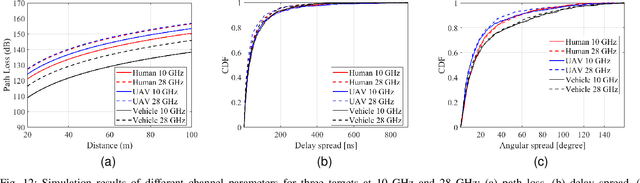

A Unified RCS Modeling of Typical Targets for 3GPP ISAC Channel Standardization and Experimental Analysis

May 27, 2025

Abstract: Accurate radar cross section (RCS) modeling is crucial for characterizing target scattering and improving the precision of Integrated Sensing and Communication (ISAC) channel modeling. Existing RCS models are typically designed for specific target types, which increases complexity and limits generalization. This makes it difficult to standardize RCS models for 3GPP ISAC channels, which need to account for multiple typical target types simultaneously. Furthermore, 3GPP models must support both system-level and link-level simulations, requiring the integration of large-scale and small-scale scattering characteristics. To address these challenges, this paper proposes a unified RCS modeling framework that consolidates these two aspects. The model decomposes RCS into three components: (1) a large-scale power factor representing overall scattering strength, (2) a small-scale angular-dependent component describing directional scattering, and (3) a random component accounting for variations across target instances. We validate the model through monostatic RCS measurements for UAV, human, and vehicle targets across five frequency bands. The results demonstrate that the proposed model can effectively capture RCS variations for different target types. Finally, the model is incorporated into an ISAC channel simulation platform to assess the impact of target RCS characteristics on path loss, delay spread, and angular spread, providing valuable insights for future ISAC system design.
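
As an illustration of the three-component decomposition, the sketch below draws RCS samples under two assumptions that the abstract does not confirm: that the components multiply in the linear domain (and therefore add in dB), and that the random component is Gaussian in dB. The function names, the toy angular pattern, and all numeric values are hypothetical.

```python
import math
import random


def sample_rcs_dbsm(mean_dbsm, angular_gain_db, sigma_db, azimuth_deg, rng=random):
    """Draw one RCS value in dBsm as the sum of three dB-domain terms."""
    large_scale = mean_dbsm                      # overall scattering strength
    small_scale = angular_gain_db(azimuth_deg)   # directional pattern
    variation = rng.gauss(0.0, sigma_db)         # per-instance randomness
    return large_scale + small_scale + variation


def toy_pattern_db(azimuth_deg):
    # Made-up pattern: 0 dB at 90 degrees, 6 dB weaker at 0 and 180 degrees.
    return -6.0 * math.cos(math.radians(azimuth_deg)) ** 2


# Made-up target: -10 dBsm mean level, 3 dB spread, seen from 45 degrees.
rng = random.Random(0)
samples = [sample_rcs_dbsm(-10.0, toy_pattern_db, 3.0, 45.0, rng)
           for _ in range(5)]
```

Keeping the three terms separate means the mean level and angular pattern can be set per target type while the random term is redrawn for each target instance.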

A Unified Deterministic Channel Model for Multi-Type RIS with Reflective, Transmissive, and Polarization Operations

May 12, 2025

Abstract: Reconfigurable Intelligent Surface (RIS) technologies are considered a promising enabler for 6G, offering advantageous control of electromagnetic (EM) propagation. RIS can be categorized into multiple types based on their reflective/transmissive modes and polarization control capabilities, all of which are expected to be widely deployed in practical environments. A reliable RIS channel model is essential for the design and development of RIS communication systems. While deterministic modeling approaches such as ray-tracing (RT) offer significant benefits, a unified model that accommodates all RIS types is still lacking. This paper addresses this gap by developing a high-precision deterministic channel model based on RT, supporting multiple RIS types: reflective, transmissive, hybrid, and three polarization operation modes. To achieve this, a unified EM response model for the aforementioned RIS types is developed. The reflection and transmission coefficients of RIS elements are derived using a tensor-based equivalent impedance approach, followed by calculating the scattered fields of the RIS to establish an EM response model. The performance of different RIS types is compared through simulations in typical scenarios. During this process, passive and lossless constraints on the reflection and transmission coefficients are incorporated to ensure fairness in the performance evaluation. Simulation results validate the framework's accuracy in characterizing the RIS channel, and specific cases tailored for dual-polarization independent control and polarization-rotating RISs are highlighted as insights for their future deployment. This work can be helpful for the evaluation and optimization of RIS-enabled wireless communication systems.
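
The passive and lossless constraints mentioned above can be illustrated in the simplest scalar case. The symbols $\Gamma$ (reflection coefficient) and $T$ (transmission coefficient) of a single RIS element are assumptions made here; the paper's tensor-based formulation may state the constraints in a more general form.

$$
\text{passive:}\quad |\Gamma|^2 + |T|^2 \le 1,
\qquad
\text{lossless:}\quad |\Gamma|^2 + |T|^2 = 1
$$

Under these conditions an element never radiates more power than it receives, and in the lossless case all incident power is split between reflection and transmission, which puts reflective, transmissive, and hybrid RIS types on an equal power footing for comparison.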
