Lecheng Ruan

BioPIE: A Biomedical Protocol Information Extraction Dataset for High-Reasoning-Complexity Experiment Question Answer

Jan 08, 2026

Abstract:Question Answer (QA) systems for biomedical experiments facilitate cross-disciplinary communication and serve as a foundation for downstream tasks, e.g., laboratory automation. High Information Density (HID) and Multi-Step Reasoning (MSR) pose unique challenges for biomedical experimental QA. While extracting structured knowledge, e.g., Knowledge Graphs (KGs), can substantially benefit biomedical experimental QA, existing biomedical datasets focus on general or coarse-grained knowledge and thus fail to support the fine-grained experimental reasoning demanded by HID and MSR. To address this gap, we introduce the Biomedical Protocol Information Extraction Dataset (BioPIE), a dataset that provides procedure-centric KGs of experimental entities, actions, and relations at a scale that supports reasoning over biomedical experiments across protocols. We evaluate information extraction methods on BioPIE and implement a QA system that leverages BioPIE, showcasing performance gains on test, HID, and MSR question sets and showing that the structured experimental knowledge in BioPIE underpins both AI-assisted and more autonomous biomedical experimentation.
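As a rough illustration of why a procedure-centric KG can help with multi-step reasoning, the sketch below chains "acts on" and "produces" relations to trace what a starting material becomes over successive steps. The triples, relation names, and the `trace_products` helper are hypothetical and are not taken from BioPIE's schema or data.

```python
from collections import defaultdict

# Hypothetical toy triples (head, relation, tail); not taken from BioPIE.
TRIPLES = [
    ("centrifuge", "acts_on", "cell lysate"),
    ("centrifuge", "produces", "supernatant"),
    ("transfer", "acts_on", "supernatant"),
    ("transfer", "produces", "clarified sample"),
]


def build_index(triples):
    """Index tails by (head, relation) for direct lookup."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[(head, relation)].append(tail)
    return index


def next_actions(triples, entity):
    """Return the actions that act on the given entity."""
    return [h for h, r, t in triples if r == "acts_on" and t == entity]


def trace_products(triples, start_entity):
    """Follow acts_on -> produces links, collecting each product in order."""
    index = build_index(triples)
    chain, current, seen = [], start_entity, set()
    while current not in seen:  # guard against cyclic graphs
        seen.add(current)
        actions = next_actions(triples, current)
        if not actions:
            break
        products = index[(actions[0], "produces")]
        if not products:
            break
        current = products[0]
        chain.append(current)
    return chain


print(trace_products(TRIPLES, "cell lysate"))
```

A question such as "what does the cell lysate end up as after two steps?" then becomes a two-hop traversal rather than a search over free text.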

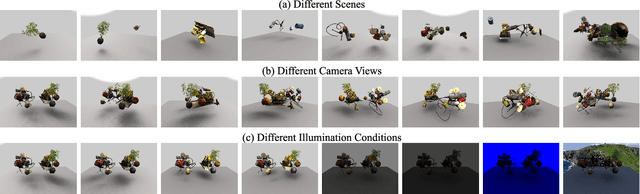

B*: Efficient and Optimal Base Placement for Fixed-Base Manipulators

Apr 17, 2025

Abstract:B* is a novel optimization framework that addresses a critical challenge in fixed-base manipulator robotics: optimal base placement. Current methods rely on pre-computed kinematics databases generated through sampling to search for solutions. However, they face an inherent trade-off between solution optimality and computational efficiency when determining sampling resolution. To address these limitations, B* unifies multiple objectives without database dependence. The framework employs a two-layer hierarchical approach. The outer layer systematically manages terminal constraints through progressive tightening, particularly for base mobility, enabling feasible initialization and broad solution exploration. The inner layer addresses non-convexities in each outer-layer subproblem through sequential local linearization, converting the original problem into tractable sequential linear programming (SLP). Testing across multiple robot platforms demonstrates B*'s effectiveness. The framework achieves solution optimality five orders of magnitude better than sampling-based approaches while maintaining perfect success rates and reduced computational overhead. Operating directly in configuration space, B* enables simultaneous path planning with customizable optimization criteria. B* serves as a crucial initialization tool that bridges the gap between theoretical motion planning and practical deployment, where feasible trajectory existence is fundamental.

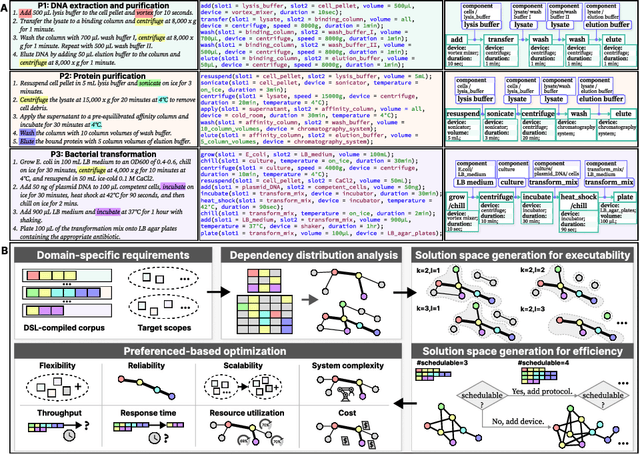

Hierarchically Encapsulated Representation for Protocol Design in Self-Driving Labs

Apr 04, 2025

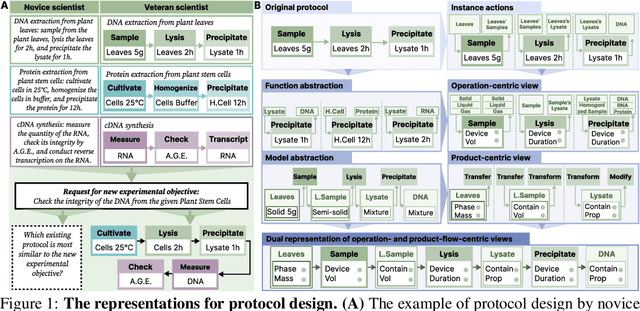

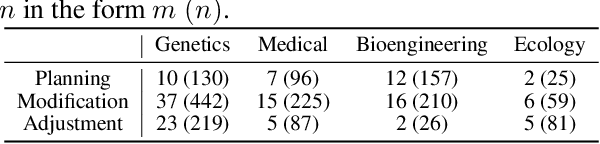

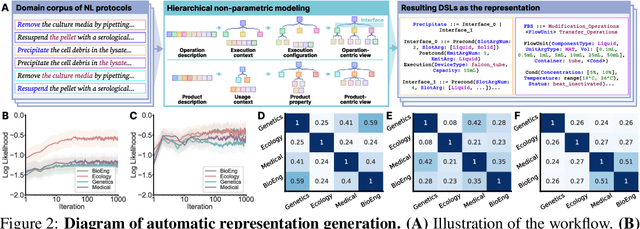

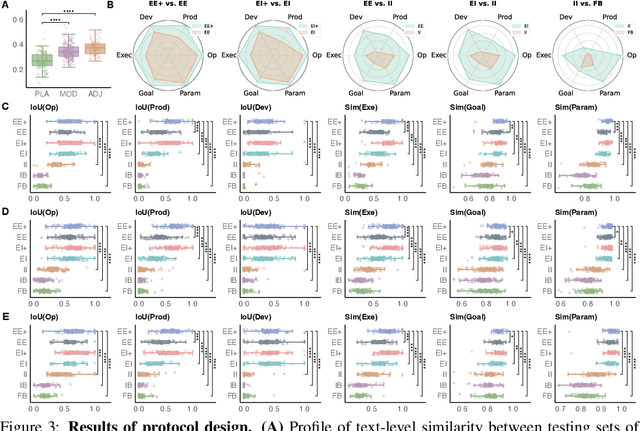

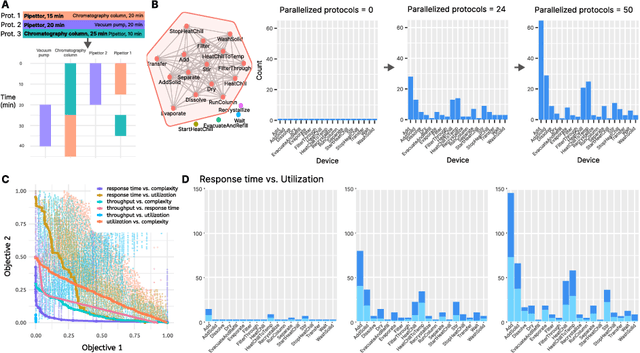

Abstract:Self-driving laboratories have begun to replace human experimenters in performing single experimental skills or predetermined experimental protocols. However, as the pace of idea iteration in scientific research has been intensified by Artificial Intelligence, the demand for rapid design of new protocols for new discoveries has become evident. Efforts to automate protocol design have been initiated, but the capabilities of knowledge-based machine designers, such as Large Language Models, have not been fully elicited, probably owing to the absence of a systematic representation of experimental knowledge, as opposed to isolated, flattened pieces of information. To tackle this issue, we propose a multi-faceted, multi-scale representation, where instance actions, generalized operations, and product flow models are hierarchically encapsulated using Domain-Specific Languages. We further develop a data-driven algorithm based on non-parametric modeling that autonomously customizes these representations for specific domains. The proposed representation is equipped with various machine designers to manage protocol design tasks, including planning, modification, and adjustment. The results demonstrate that the proposed method could effectively complement Large Language Models in the protocol design process, serving as an auxiliary module in the realm of machine-assisted scientific exploration.

Physics-informed Neural Network Predictive Control for Quadruped Locomotion

Mar 10, 2025

Abstract:This study introduces a unified control framework that addresses the challenge of precise quadruped locomotion with unknown payloads, named online payload identification-based physics-informed neural network predictive control (OPI-PINNPC). By integrating online payload identification with physics-informed neural networks (PINNs), our approach embeds identified mass parameters directly into the neural network's loss function, ensuring physical consistency while adapting to changing load conditions. The physics-constrained neural representation serves as an efficient surrogate model within our nonlinear model predictive controller, enabling real-time optimization despite the complex dynamics of legged locomotion. Experimental validation on our quadruped robot platform demonstrates a 35% improvement in position and orientation tracking accuracy across diverse payload conditions (25-100 kg), with substantially faster convergence compared to previous adaptive control methods. Our framework provides an adaptive solution for maintaining locomotion performance under variable payload conditions without sacrificing computational efficiency.

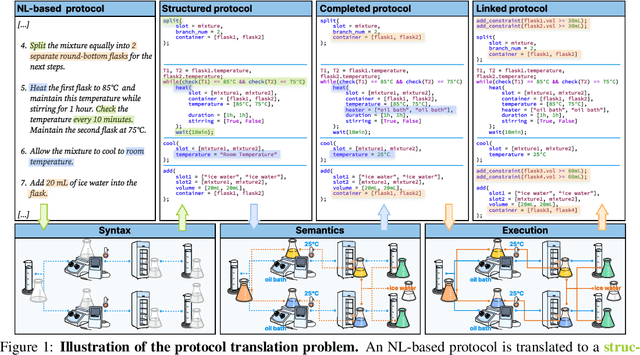

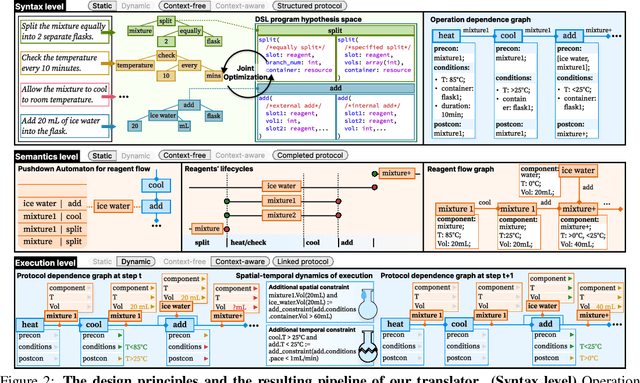

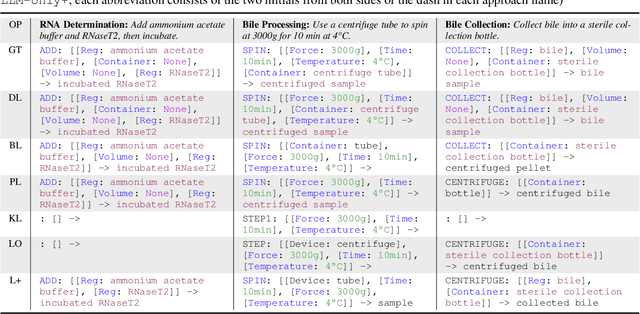

Expert-level protocol translation for self-driving labs

Nov 01, 2024

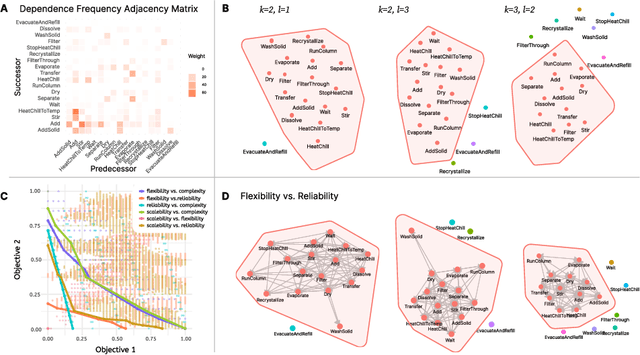

Abstract:Recent developments in Artificial Intelligence (AI) models have propelled their application in scientific discovery, but the validation and exploration of these discoveries require subsequent empirical experimentation. The concept of self-driving laboratories promises to automate and thus boost the experimental process following AI-driven discoveries. However, the transition of experimental protocols, originally crafted for human comprehension, into formats interpretable by machines presents significant challenges, which, within the context of a specific expert domain, encompass the necessity for structured as opposed to natural language, the imperative for explicit rather than tacit knowledge, and the preservation of causality and consistency throughout protocol steps. Presently, the task of protocol translation predominantly requires the manual and labor-intensive involvement of domain experts and information technology specialists, rendering the process time-intensive. To address these issues, we propose a framework that automates the protocol translation process through a three-stage workflow, which incrementally constructs Protocol Dependence Graphs (PDGs) that are structured on the syntax level, completed on the semantics level, and linked on the execution level. Quantitative and qualitative evaluations have demonstrated its performance on par with that of human experts, underscoring its potential to significantly expedite and democratize the process of scientific discovery by elevating the automation capabilities within self-driving laboratories.
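To make the idea of linking protocol steps on the execution level more concrete, here is a minimal sketch of a dependence graph over protocol steps, ordered so that every step runs after the steps it depends on. The step names and the `execution_order` helper are hypothetical; this uses Python's standard `graphlib` module and is not the paper's PDG construction.

```python
from graphlib import TopologicalSorter

# Hypothetical protocol steps: each maps to the set of steps it depends on.
DEPENDENCIES = {
    "prepare_buffer": set(),
    "lyse_cells": {"prepare_buffer"},
    "centrifuge": {"lyse_cells"},
    "measure_absorbance": {"centrifuge"},
}


def execution_order(dependencies):
    """Return one dependency-respecting order of steps.

    Raises graphlib.CycleError (a ValueError subclass) if the
    dependencies contain a cycle, i.e. the protocol is inconsistent.
    """
    return list(TopologicalSorter(dependencies).static_order())


print(execution_order(DEPENDENCIES))
```

Representing dependencies explicitly also gives a cheap consistency check: a cycle in the graph means no valid execution order exists.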

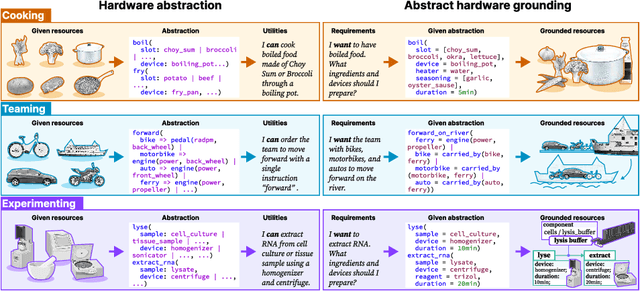

Abstract Hardware Grounding towards the Automated Design of Automation Systems

Oct 08, 2024

Abstract:Crafting automation systems tailored for specific domains requires aligning the space of human experts' semantics with the space of robot executable actions, and scheduling the required resources and system layout accordingly. Regrettably, there are three major gaps: fine-grained domain-specific knowledge injection, heterogeneity between human knowledge and robot instructions, and diversity of users' preferences. These gaps render automation system design a case-by-case and labour-intensive effort, thus hindering the democratization of automation. We refer to this challenging alignment as the abstract hardware grounding problem, where we first regard the procedural operations in humans' semantics space as the abstraction of hardware requirements, then we ground such abstractions to instantiated hardware devices, subject to constraints and preferences in the real world -- optimizing this problem is essentially standardizing and automating the design of automation systems. On this basis, we develop an automated design framework in a hybrid data-driven and principle-derived fashion. Results on designing self-driving laboratories for enhancing experiment-driven scientific discovery suggest our framework's potential to produce compact systems that fully satisfy domain-specific and user-customized requirements with no redundancy.

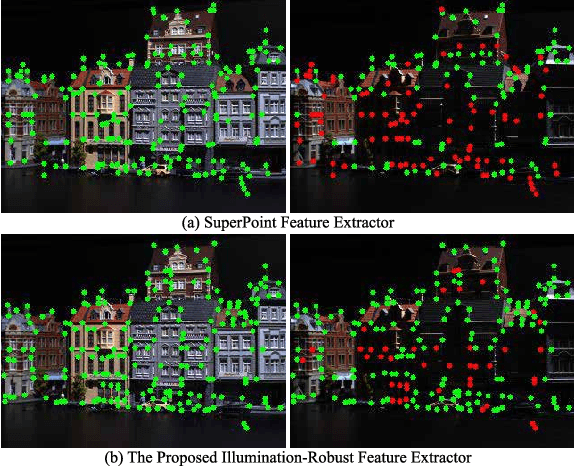

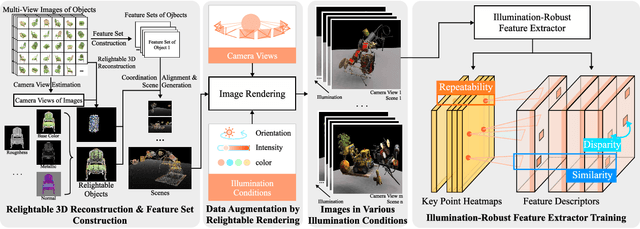

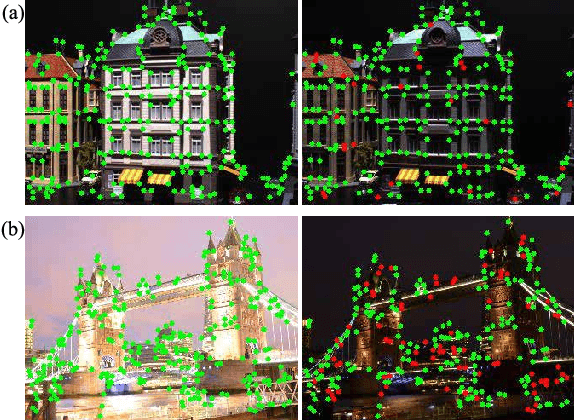

An Illumination-Robust Feature Extractor Augmented by Relightable 3D Reconstruction

Oct 01, 2024

Abstract:Visual features, whose description often relies on the local intensity and gradient direction, have found wide applications in robot navigation and localization in recent years. However, the extraction of visual features is usually disturbed by the variation of illumination conditions, making it challenging for real-world applications. Previous works have addressed this issue by establishing datasets with variations in illumination conditions, but doing so can be costly and time-consuming. This paper proposes a design procedure for an illumination-robust feature extractor, where the recently developed relightable 3D reconstruction techniques are adopted for rapid and direct data generation with varying illumination conditions. A self-supervised framework is proposed for extracting features with advantages in repeatability for key points and similarity for descriptors across good and bad illumination conditions. Experiments are conducted to demonstrate the effectiveness of the proposed method for robust feature extraction. Ablation studies also indicate the effectiveness of the self-supervised framework design.

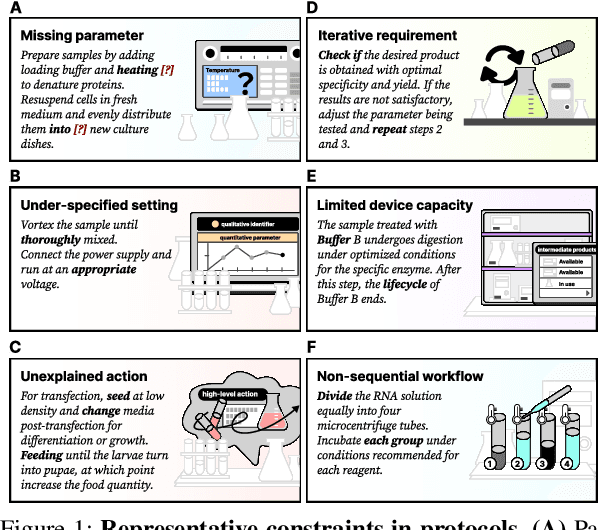

AutoDSL: Automated domain-specific language design for structural representation of procedures with constraints

Jun 18, 2024

Abstract:Accurate representation of procedures in restricted scenarios, such as non-standardized scientific experiments, requires precise depiction of constraints. Unfortunately, a Domain-Specific Language (DSL), as an effective tool to express constraints structurally, often requires case-by-case hand-crafting, necessitating customized, labor-intensive efforts. To overcome this challenge, we introduce the AutoDSL framework to automate DSL-based constraint design across various domains. Utilizing domain-specific experimental protocol corpora, AutoDSL optimizes syntactic constraints and abstracts semantic constraints. Quantitative and qualitative analyses of the DSLs designed by AutoDSL across five distinct domains highlight its potential as an auxiliary module for language models, aiming to improve procedural planning and execution.

Tac-Man: Tactile-Informed Prior-Free Manipulation of Articulated Objects

Mar 04, 2024

Abstract:Integrating robotics into human-centric environments, such as homes, necessitates advanced manipulation skills as robotic devices will need to engage with articulated objects like doors and drawers. Key challenges in robotic manipulation are the unpredictability and diversity of these objects' internal structures, which render models based on priors, both explicit and implicit, inadequate. Their reliability is significantly diminished by pre-interaction ambiguities, imperfect structural parameters, encounters with unknown objects, and unforeseen disturbances. Here, we present a prior-free strategy, Tac-Man, focusing on maintaining stable robot-object contact during manipulation. Utilizing tactile feedback, but independent of object priors, Tac-Man enables robots to proficiently handle a variety of articulated objects, including those with complex joints, even when influenced by unexpected disturbances. Demonstrated in both real-world experiments and extensive simulations, it consistently achieves near-perfect success in dynamic and varied settings, outperforming existing methods. Our results indicate that tactile sensing alone suffices for managing diverse articulated objects, offering greater robustness and generalization than prior-based approaches. This underscores the importance of detailed contact modeling in complex manipulation tasks, especially with articulated objects. Advancements in tactile sensors significantly expand the scope of robotic applications in human-centric environments, particularly where accurate models are difficult to obtain.

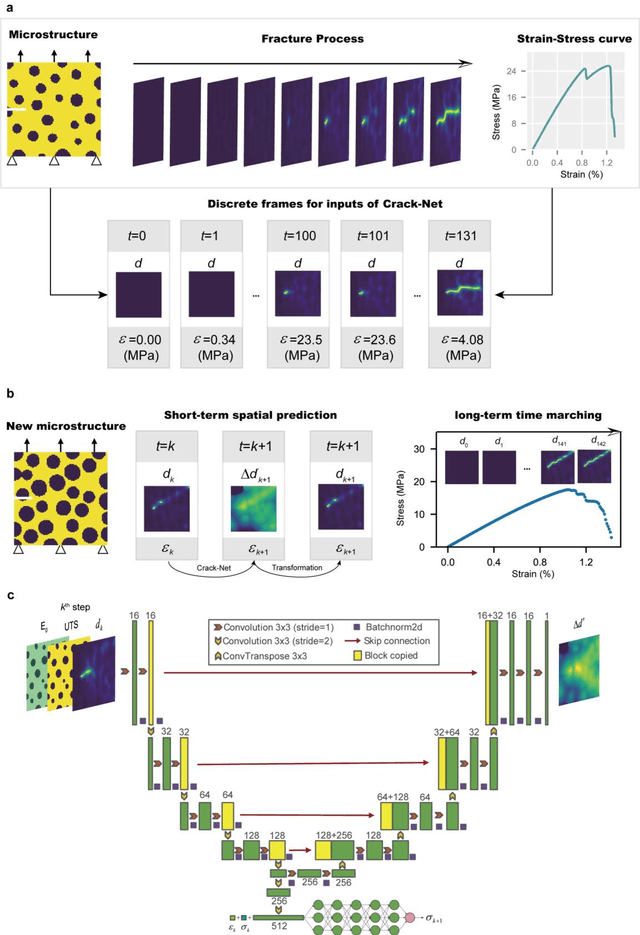

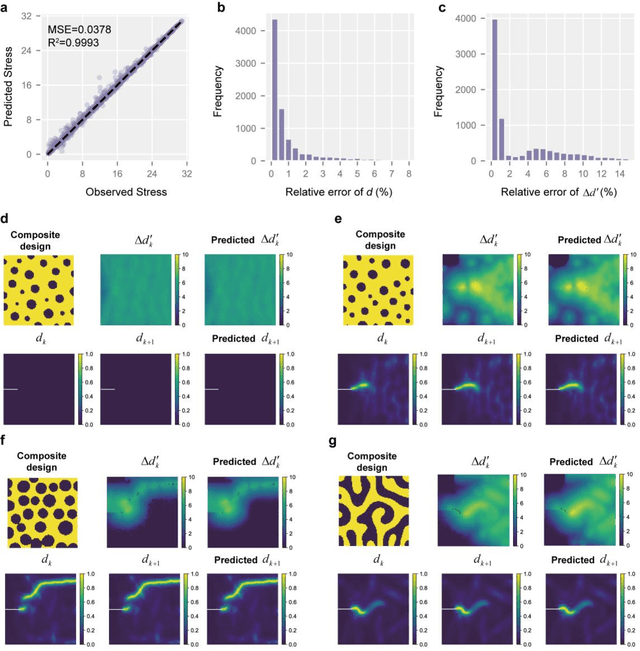

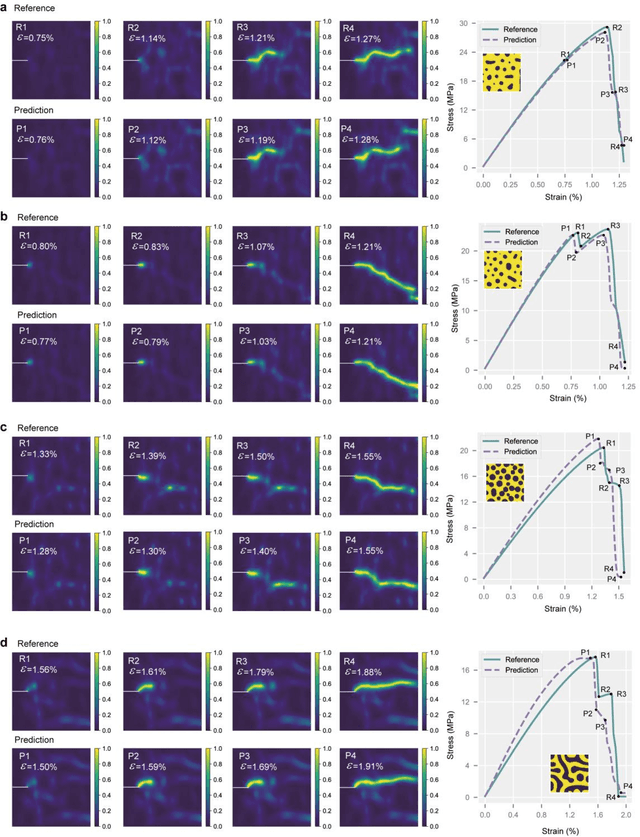

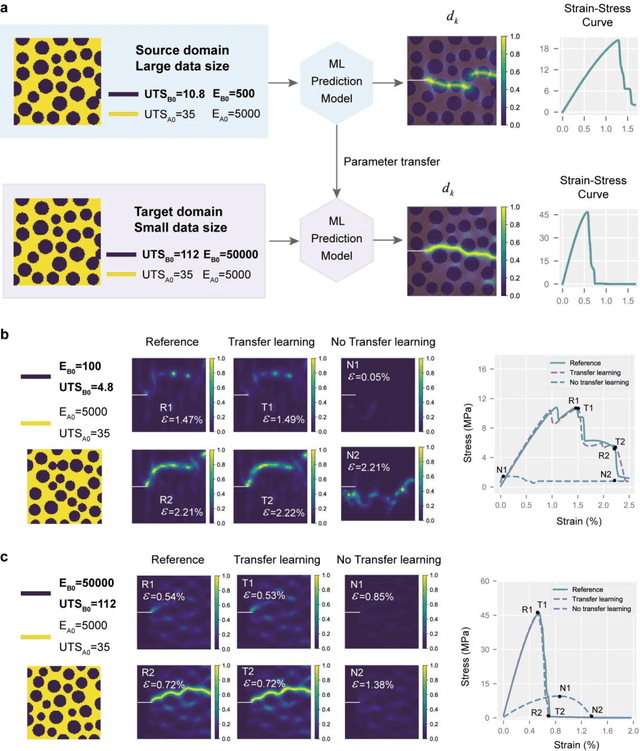

Crack-Net: Prediction of Crack Propagation in Composites

Sep 24, 2023

Abstract:Computational solid mechanics has become an indispensable approach in engineering, and numerical investigation of fracture in composites is essential as composites are widely used in structural applications. Crack evolution in composites is the bridge to elucidate the relationship between the microstructure and fracture performance, but crack-based finite element methods are computationally expensive and time-consuming, limiting their application in computation-intensive scenarios. Here we propose a deep learning framework called Crack-Net, which incorporates the relationship between crack evolution and stress response to predict the fracture process in composites. Trained on a high-precision fracture development dataset generated using the phase field method, Crack-Net demonstrates a remarkable capability to accurately forecast the long-term evolution of crack growth patterns and the stress-strain curve for a given composite design. Crack-Net captures the essential principle of crack growth, which enables it to handle more complex microstructures such as binary co-continuous structures. Moreover, transfer learning is adopted to further improve the generalization ability of Crack-Net for composite materials with reinforcements of different strengths. The proposed Crack-Net holds great promise for practical applications in engineering and materials science, in which accurate and efficient fracture prediction is crucial for optimizing material performance and microstructural design.
