Zihang Zhao

Simultaneous Tactile-Visual Perception for Learning Multimodal Robot Manipulation

Dec 10, 2025

Abstract: Robotic manipulation requires both rich multimodal perception and effective learning frameworks to handle complex real-world tasks. See-through-skin (STS) sensors, which combine tactile and visual perception, offer promising sensing capabilities, while modern imitation learning provides powerful tools for policy acquisition. However, existing STS designs lack simultaneous multimodal perception and suffer from unreliable tactile tracking. Furthermore, integrating these rich multimodal signals into learning-based manipulation pipelines remains an open challenge. We introduce TacThru, an STS sensor enabling simultaneous visual perception and robust tactile signal extraction, and TacThru-UMI, an imitation learning framework that leverages these multimodal signals for manipulation. Our sensor features a fully transparent elastomer, persistent illumination, novel keyline markers, and efficient tracking, while our learning system integrates these signals through a Transformer-based Diffusion Policy. Experiments on five challenging real-world tasks show that TacThru-UMI achieves an average success rate of 85.5%, significantly outperforming the baselines of alternating tactile-visual (66.3%) and vision-only (55.4%). The system excels in critical scenarios, including contact detection with thin and soft objects and precision manipulation requiring multimodal coordination. This work demonstrates that combining simultaneous multimodal perception with modern learning frameworks enables more precise, adaptable robotic manipulation.

B*: Efficient and Optimal Base Placement for Fixed-Base Manipulators

Apr 17, 2025

Abstract: B* is a novel optimization framework that addresses a critical challenge in fixed-base manipulator robotics: optimal base placement. Current methods rely on pre-computed kinematics databases generated through sampling to search for solutions. However, they face an inherent trade-off between solution optimality and computational efficiency when determining sampling resolution. To address these limitations, B* unifies multiple objectives without database dependence. The framework employs a two-layer hierarchical approach. The outer layer systematically manages terminal constraints through progressive tightening, particularly for base mobility, enabling feasible initialization and broad solution exploration. The inner layer addresses non-convexities in each outer-layer subproblem through sequential local linearization, converting the original problem into tractable sequential linear programming (SLP). Testing across multiple robot platforms demonstrates B*'s effectiveness. The framework achieves solution optimality five orders of magnitude better than sampling-based approaches while maintaining perfect success rates and reduced computational overhead. Operating directly in configuration space, B* enables simultaneous path planning with customizable optimization criteria. B* serves as a crucial initialization tool that bridges the gap between theoretical motion planning and practical deployment, where feasible trajectory existence is fundamental.

Taccel: Scaling Up Vision-based Tactile Robotics via High-performance GPU Simulation

Apr 17, 2025

Abstract: Tactile sensing is crucial for achieving human-level robotic capabilities in manipulation tasks. Vision-based tactile sensors (VBTSs) have emerged as a promising solution, offering high spatial resolution and cost-effectiveness by sensing contact through camera-captured deformation patterns of elastic gel pads. However, these sensors' complex physical characteristics and visual signal processing requirements present unique challenges for robotic applications. The lack of efficient and accurate simulation tools for VBTSs has significantly limited the scale and scope of tactile robotics research. Here we present Taccel, a high-performance simulation platform that integrates Incremental Potential Contact (IPC) and Affine Body Dynamics (ABD) to model robots, tactile sensors, and objects with both accuracy and unprecedented speed, achieving an 18-fold acceleration over real-time across thousands of parallel environments. Unlike previous simulators that operate at sub-real-time speeds with limited parallelization, Taccel provides precise physics simulation and realistic tactile signals while supporting flexible robot-sensor configurations through user-friendly APIs. Through extensive validation in object recognition, robotic grasping, and articulated object manipulation, we demonstrate precise simulation and successful sim-to-real transfer. These capabilities position Taccel as a powerful tool for scaling up tactile robotics research and development. By enabling large-scale simulation and experimentation with tactile sensing, Taccel accelerates the development of more capable robotic systems, potentially transforming how robots interact with and understand their physical environment.

Embedding high-resolution touch across robotic hands enables adaptive human-like grasping

Dec 19, 2024

Abstract: Developing robotic hands that adapt to real-world dynamics remains a fundamental challenge in robotics and machine intelligence. Despite significant advances in replicating human hand kinematics and control algorithms, robotic systems still struggle to match human capabilities in dynamic environments, primarily due to inadequate tactile feedback. To bridge this gap, we present F-TAC Hand, a biomimetic hand featuring high-resolution tactile sensing (0.1 mm spatial resolution) across 70% of its surface area. Through optimized hand design, we overcome traditional challenges in integrating high-resolution tactile sensors while preserving the full range of motion. The hand, powered by our generative algorithm that synthesizes human-like hand configurations, demonstrates robust grasping capabilities in dynamic real-world conditions. Extensive evaluation across 600 real-world trials demonstrates that this tactile-embodied system significantly outperforms non-tactile alternatives in complex manipulation tasks (p < 0.0001). These results provide empirical evidence for the critical role of rich tactile embodiment in developing advanced robotic intelligence, offering new perspectives on the relationship between physical sensing capabilities and intelligent behavior.

MiniTac: An Ultra-Compact 8 mm Vision-Based Tactile Sensor for Enhanced Palpation in Robot-Assisted Minimally Invasive Surgery

Oct 30, 2024

Abstract: Robot-assisted minimally invasive surgery (RAMIS) provides substantial benefits over traditional open and laparoscopic methods. However, a significant limitation of RAMIS is the surgeon's inability to palpate tissues, a crucial technique for examining tissue properties and detecting abnormalities, restricting the widespread adoption of RAMIS. To overcome this obstacle, we introduce MiniTac, a novel vision-based tactile sensor with an ultra-compact cross-sectional diameter of 8 mm, designed for seamless integration into mainstream RAMIS devices, particularly the Da Vinci surgical systems. MiniTac features a novel mechanoresponsive photonic elastomer membrane that changes color distribution under varying contact pressures. This color change is captured by an embedded miniature camera, allowing MiniTac to detect tumors both on the tissue surface and in deeper layers typically obscured from endoscopic view. MiniTac's efficacy has been rigorously tested on both phantoms and ex-vivo tissues. By leveraging advanced mechanoresponsive photonic materials, MiniTac represents a significant advancement in integrating tactile sensing into RAMIS, potentially expanding its applicability to a wider array of clinical scenarios that currently rely on traditional surgical approaches.

Tac-Man: Tactile-Informed Prior-Free Manipulation of Articulated Objects

Mar 04, 2024

Abstract: Integrating robotics into human-centric environments such as homes necessitates advanced manipulation skills, as robotic devices will need to engage with articulated objects like doors and drawers. Key challenges in robotic manipulation are the unpredictability and diversity of these objects' internal structures, which render models based on priors, both explicit and implicit, inadequate. Their reliability is significantly diminished by pre-interaction ambiguities, imperfect structural parameters, encounters with unknown objects, and unforeseen disturbances. Here, we present a prior-free strategy, Tac-Man, focusing on maintaining stable robot-object contact during manipulation. Utilizing tactile feedback, but independent of object priors, Tac-Man enables robots to proficiently handle a variety of articulated objects, including those with complex joints, even when influenced by unexpected disturbances. Demonstrated in both real-world experiments and extensive simulations, it consistently achieves near-perfect success in dynamic and varied settings, outperforming existing methods. Our results indicate that tactile sensing alone suffices for managing diverse articulated objects, offering greater robustness and generalization than prior-based approaches. This underscores the importance of detailed contact modeling in complex manipulation tasks, especially with articulated objects. Advancements in tactile sensors significantly expand the scope of robotic applications in human-centric environments, particularly where accurate models are difficult to obtain.

Edge-assisted U-Shaped Split Federated Learning with Privacy-preserving for Internet of Things

Nov 08, 2023

Abstract: In the realm of the Internet of Things (IoT), deploying deep learning models to process data generated or collected by IoT devices is a critical challenge. However, direct data transmission can cause network congestion and inefficient execution, given that IoT devices typically lack computation and communication capabilities. Centralized data processing in data centers is also no longer feasible due to concerns over data privacy and security. To address these challenges, we present an innovative Edge-assisted U-Shaped Split Federated Learning (EUSFL) framework, which harnesses the high-performance capabilities of edge servers to assist IoT devices in the model training and optimization process. In this framework, we leverage Federated Learning (FL) to enable data holders to collaboratively train models without sharing their data, thereby enhancing data privacy protection by transmitting only model parameters. Additionally, inspired by Split Learning (SL), we split the neural network into three parts using U-shaped splitting for local training on IoT devices. By exploiting the greater computation capability of edge servers, our framework effectively reduces overall training time and allows IoT devices with varying capabilities to perform training tasks efficiently. Furthermore, we propose a novel noise mechanism called LabelDP to ensure that data features and labels can securely resist reconstruction attacks, eliminating the risk of privacy leakage. Our theoretical analysis and experimental results demonstrate that EUSFL can be integrated with various aggregation algorithms, maintaining good performance across different computing capabilities of IoT devices, and significantly reducing training time and local computation overhead.
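The abstract notes that only model parameters are transmitted and that EUSFL can work with various aggregation algorithms. As a rough illustration of what one such aggregation step might look like, the sketch below implements plain sample-weighted federated averaging (FedAvg). It is not the paper's method: the function name `federated_average`, the `Params` layout, and the example numbers are all assumptions made for this illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: each parameter tensor is flattened to a list of floats.
Params = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Params, int]]) -> Params:
    """Average client parameters, weighting each client by its sample count.

    `updates` holds (parameters, number_of_local_samples) pairs. Raw data
    never appears here; only parameters and counts are exchanged.
    """
    if not updates:
        raise ValueError("need at least one client update")
    total_samples = sum(count for _, count in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    first_params, _ = updates[0]
    averaged: Params = {
        name: [0.0] * len(values) for name, values in first_params.items()
    }
    for params, count in updates:
        weight = count / total_samples
        for name, values in params.items():
            for i, value in enumerate(values):
                averaged[name][i] += weight * value
    return averaged


# Two illustrative clients; the second holds three times as much data.
client_updates = [
    ({"w": [1.0, 2.0]}, 1),
    ({"w": [3.0, 4.0]}, 3),
]
global_params = federated_average(client_updates)
```

Weighting by sample count means clients with more local data pull the global model further toward their parameters; other aggregation rules would replace only the weighting step.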

Rearrange Indoor Scenes for Human-Robot Co-Activity

Mar 10, 2023

Abstract: We present an optimization-based framework for rearranging indoor furniture to better accommodate human-robot co-activities. The rearrangement aims to afford sufficient accessible space for robot activities without compromising everyday human activities. To retain human activities, our algorithm preserves the functional relations among furniture by integrating spatial and semantic co-occurrence extracted from SUNCG and ConceptNet, respectively. By defining the robot's accessible space by the amount of open space it can traverse and the number of objects it can reach, we formulate the rearrangement for human-robot co-activity as an optimization problem, solved by adaptive simulated annealing (ASA) and covariance matrix adaptation evolution strategy (CMA-ES). Our experiments on the SUNCG dataset quantitatively show that rearranged scenes provide an average of 14% more accessible space and 30% more objects to interact with. The quality of the rearranged scenes is qualitatively validated by a human study, indicating the efficacy of the proposed strategy.
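To give a feel for the kind of search the abstract describes, here is a minimal sketch of basic simulated annealing with geometric cooling. It is a simplified stand-in, not the adaptive variant (ASA) or CMA-ES used in the paper. The one-dimensional two-piece layout, the cost terms, and every name (`simulated_annealing`, `toy_cost`, `toy_neighbor`) are assumptions invented for this example.

```python
import math
import random
from typing import Callable, Tuple

Layout = Tuple[float, float]  # hypothetical: positions of two pieces on a 10 m wall


def simulated_annealing(
    cost: Callable[[Layout], float],
    neighbor: Callable[[Layout, random.Random], Layout],
    start: Layout,
    steps: int = 2000,
    t_start: float = 1.0,
    t_end: float = 1e-3,
    seed: int = 0,
) -> Tuple[Layout, float]:
    """Minimize `cost` and return the best layout seen with its cost."""
    rng = random.Random(seed)
    current, current_cost = start, cost(start)
    best, best_cost = current, current_cost
    for k in range(steps):
        # Geometric cooling from t_start down to t_end.
        temperature = t_start * (t_end / t_start) ** (k / max(steps - 1, 1))
        candidate = neighbor(current, rng)
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
    return best, best_cost


def toy_cost(layout: Layout) -> float:
    """Toy objective: keep the pair about 1 m apart, reward open space near x = 0."""
    a, b = layout
    relation_penalty = (abs(a - b) - 1.0) ** 2
    open_space = min(a, b)
    return relation_penalty - 0.1 * open_space


def toy_neighbor(layout: Layout, rng: random.Random) -> Layout:
    """Nudge both pieces by a small random step, clamped to the wall [0, 10]."""
    a, b = layout
    a = min(10.0, max(0.0, a + rng.uniform(-0.5, 0.5)))
    b = min(10.0, max(0.0, b + rng.uniform(-0.5, 0.5)))
    return (a, b)


start_layout: Layout = (2.0, 8.0)
best_layout, best_cost = simulated_annealing(toy_cost, toy_neighbor, start_layout)
```

The penalty term plays the role of preserving a functional relation between furniture, while the open-space term stands in for robot accessibility; a real formulation would use the co-occurrence statistics and reachability measures described in the abstract.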

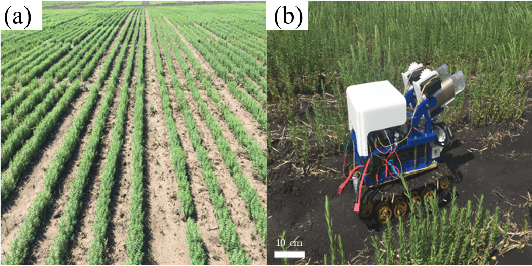

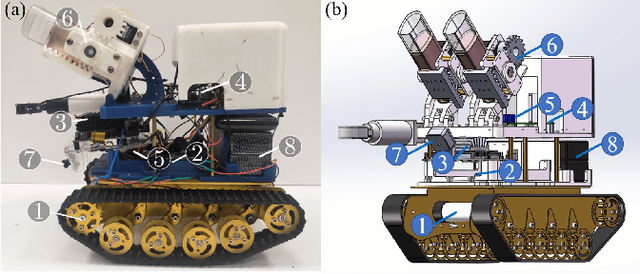

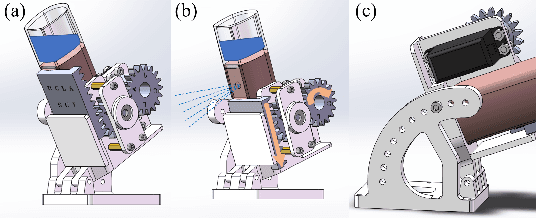

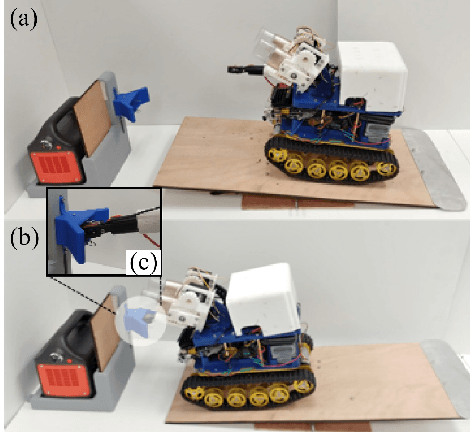

A Low-cost Robot with Autonomous Recharge and Navigation for Weed Control in Fields with Narrow Row Spacing

Dec 03, 2021

Abstract: Modern herbicide application in agricultural settings typically relies on either large-scale sprayers that dispense herbicide over crops and weeds alike or portable sprayers that require labor-intensive manual operation. The former method results in overuse of herbicide and reduction in crop yield, while the latter is often untenable in large-scale operations. This paper presents the first fully autonomous robot for weed management for row crops capable of computer-vision-based navigation, weed detection, complete field coverage, and automatic recharge for under \$400. The target application is autonomous inter-row weed control in crop fields, e.g., flax and canola, where the spacing between croplines is as small as one foot. The proposed robot is small enough to pass between croplines at all stages of plant growth while detecting weeds and spraying herbicide. A recharging system incorporates newly designed robotic hardware, a ramp, a robotic charging arm, and a mobile charging station. An integrated vision algorithm is employed to assist with charger alignment effectively. Combined, they enable the robot to work continuously in the field without access to electricity. In addition, a color-based contour algorithm combined with preprocessing techniques is applied for robust navigation relying on the input from the onboard monocular camera. Incorporating such compact robots into farms could help automate weed control, even during late stages of growth, and reduce herbicide use by targeting weeds with precision. The robotic platform is field-tested in the flaxseed fields of North Dakota.
