Kaspar Althoefer

Enabling High-Curvature Navigation in Eversion Robots through Buckle-Inducing Constrictive Bands

Jan 18, 2026

Abstract:Tip-growing eversion robots are renowned for their ability to access remote spaces through narrow passages. However, achieving reliable navigation remains a significant challenge. Existing solutions often rely on artificial muscles integrated into the robot body or active tip-steering mechanisms. While effective, these additions introduce structural complexity and compromise the defining advantages of eversion robots: their inherent softness and compliance. In this paper, we propose a passive approach to reduce bending stiffness by purposefully introducing buckling points along the robot's outer wall. We achieve this by integrating inextensible diameter-reducing circumferential bands at regular intervals along the robot body, facilitating forward motion through tortuous, obstacle-cluttered paths. Rather than relying on active steering, our approach leverages the robot's natural interaction with the environment, allowing for smooth, compliant navigation. We present a Cosserat rod-based mathematical model to quantify this behavior, capturing the local stiffness reductions caused by the constricting bands and their impact on global bending mechanics. Experimental results demonstrate that these bands reduce the robot's stiffness when bent at the tip by up to 91 percent, enabling consistent traversal of 180-degree bends with a bending radius as low as 25 mm, notably lower than the 35 mm achievable by standard eversion robots under identical conditions. The feasibility of the proposed method is further demonstrated through a case study in a colon phantom. By significantly improving maneuverability without sacrificing softness or increasing mechanical complexity, this approach expands the applicability of eversion robots in highly curved pathways, whether in relation to pipe inspection or medical procedures such as colonoscopy.

Differential Analysis of Pseudo Haptic Feedback: Novel Comparative Study of Visual and Auditory Cue Integration for Psychophysical Evaluation

Oct 10, 2025

Abstract:Pseudo-haptics exploit carefully crafted visual or auditory cues to trick the brain into "feeling" forces that are never physically applied, offering a low-cost alternative to traditional haptic hardware. Here, we present a comparative psychophysical study that quantifies how visual and auditory stimuli combine to evoke pseudo-haptic pressure sensations on a commodity tablet. Using a Unity-based Rollball game, participants (n = 4) guided a virtual ball across three textured terrains while their finger forces were captured in real time with a Robotous RFT40 force-torque sensor. Each terrain was paired with a distinct rolling-sound profile spanning 440 Hz - 4.7 kHz, 440 Hz - 13.1 kHz, or 440 Hz - 8.9 kHz; crevice collisions triggered additional "knocking" bursts to heighten realism. Average tactile forces increased systematically with cue intensity: 0.40 N, 0.79 N and 0.88 N for visual-only trials and 0.41 N, 0.81 N and 0.90 N for audio-only trials on Terrains 1-3, respectively. Higher audio frequencies and denser visual textures both elicited stronger muscle activation, and their combination further reduced the force needed to perceive surface changes, confirming multisensory integration. These results demonstrate that consumer-grade isometric devices can reliably induce and measure graded pseudo-haptic feedback without specialized actuators, opening a path toward affordable rehabilitation tools, training simulators and assistive interfaces.

Advancing Embodied Intelligence in Robotic-Assisted Endovascular Procedures: A Systematic Review of AI Solutions

Apr 21, 2025

Abstract:Endovascular procedures have revolutionized the treatment of vascular diseases thanks to minimally invasive solutions that significantly reduce patient recovery time and enhance clinical outcomes. However, the precision and dexterity required during these procedures pose considerable challenges for interventionists. Robotic systems have emerged, offering transformative solutions that address issues such as operator fatigue, radiation exposure, and the inherent limitations of human precision. The integration of Embodied Intelligence (EI) into these systems signifies a paradigm shift, enabling robots to navigate complex vascular networks and adapt to dynamic physiological conditions. Data-driven approaches, advanced computer vision, medical image analysis, and machine learning techniques are at the forefront of this evolution. These methods augment procedural intelligence by facilitating real-time vessel segmentation, device tracking, and anatomical landmark detection. Reinforcement learning and imitation learning further refine navigation strategies and replicate experts' techniques. This review systematically examines the integration of EI principles into robotic technologies in relation to endovascular procedures. We discuss recent advancements in intelligent perception and data-driven control, and their practical applications in robot-assisted endovascular procedures. By critically evaluating current limitations and emerging opportunities, this review establishes a framework for future developments, emphasizing the potential for greater autonomy and improved clinical outcomes. Emerging trends and specific areas of research, such as federated learning for medical data sharing, explainable AI for clinical decision support, and advanced human-robot collaboration paradigms, are also explored, offering insights into the future direction of this rapidly evolving field.

Embedding high-resolution touch across robotic hands enables adaptive human-like grasping

Dec 19, 2024

Abstract:Developing robotic hands that adapt to real-world dynamics remains a fundamental challenge in robotics and machine intelligence. Despite significant advances in replicating human hand kinematics and control algorithms, robotic systems still struggle to match human capabilities in dynamic environments, primarily due to inadequate tactile feedback. To bridge this gap, we present F-TAC Hand, a biomimetic hand featuring high-resolution tactile sensing (0.1 mm spatial resolution) across 70% of its surface area. Through optimized hand design, we overcome traditional challenges in integrating high-resolution tactile sensors while preserving the full range of motion. The hand, powered by our generative algorithm that synthesizes human-like hand configurations, demonstrates robust grasping capabilities in dynamic real-world conditions. Extensive evaluation across 600 real-world trials demonstrates that this tactile-embodied system significantly outperforms non-tactile alternatives in complex manipulation tasks (p < 0.0001). These results provide empirical evidence for the critical role of rich tactile embodiment in developing advanced robotic intelligence, offering new perspectives on the relationship between physical sensing capabilities and intelligent behavior.

Variable Stiffness & Dynamic Force Sensor for Tissue Palpation

Dec 13, 2024

Abstract:Palpation of human tissue during Minimally Invasive Surgery is hampered by restricted access. In this extended abstract, we present a variable stiffness and dynamic force range sensor that has the potential to address this challenge. The sensor utilises light reflection to estimate sensor deformation, and from this, the force applied. Experimental testing at different pressures (0, 0.5 and 1 PSI) shows that stiffness and force range increase with pressure. When compared with measured forces, the force calibration results produced average RMSEs of 0.016, 0.0715 and 0.1284 N, respectively, for these pressures.

Haptic Stiffness Perception Using Hand Exoskeletons in Tactile Robotic Telemanipulation

Dec 03, 2024

Abstract:Robotic telemanipulation - the human-guided manipulation of remote objects - plays a pivotal role in several applications, from healthcare to operations in harsh environments. While visual feedback from cameras can provide valuable information to the human operator, haptic feedback is essential for accessing specific object properties that are difficult to perceive by vision, such as stiffness. For the first time, we present a participant study demonstrating that operators can perceive the stiffness of remote objects during real-world telemanipulation with a dexterous robotic hand, when haptic feedback is generated from tactile sensing fingertips. Participants were tasked with squeezing soft objects by teleoperating a robotic hand, using two methods of haptic feedback: one based solely on the measured contact force, and a second that also includes the squeezing displacement between the leader and follower devices. Our results demonstrate that operators are indeed capable of discriminating objects of different stiffness, relying on haptic feedback alone and without any visual feedback. Additionally, our findings suggest that the displacement feedback component may enhance discrimination with objects of similar stiffness.

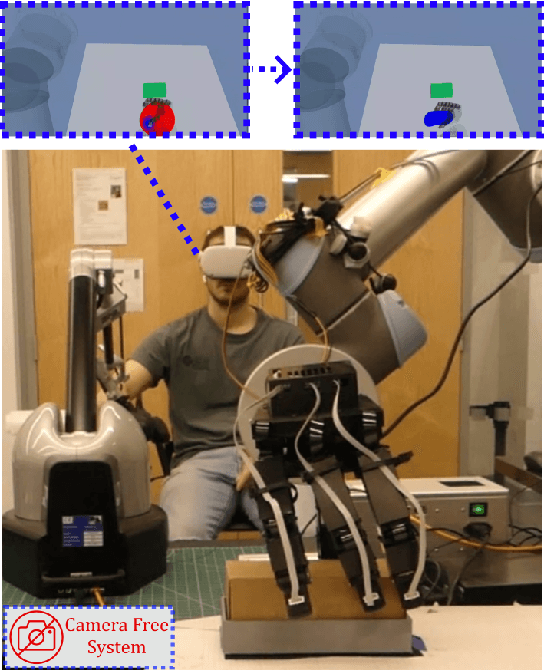

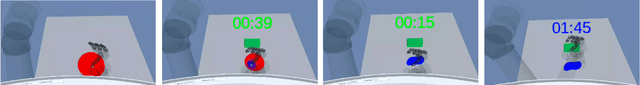

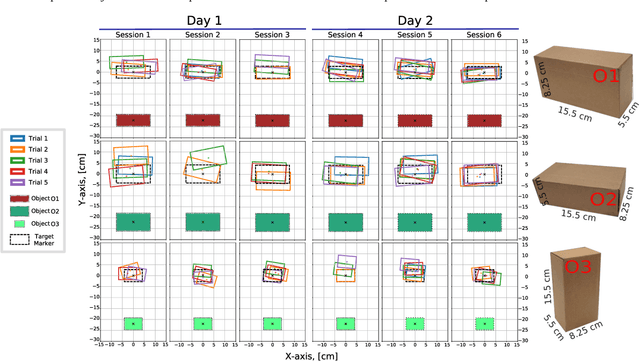

Leveraging Tactile Sensing to Render both Haptic Feedback and Virtual Reality 3D Object Reconstruction in Robotic Telemanipulation

Dec 03, 2024

Abstract:Dexterous robotic manipulator teleoperation is widely used in many applications, either where it is convenient to keep the human inside the control loop, or to train advanced robot agents. So far, this technology has been used in combination with camera systems with remarkable success. On the other hand, only a limited number of studies have focused on leveraging haptic feedback from tactile sensors in contexts where camera-based systems fail, such as due to self-occlusions or poor light conditions like smoke. This study demonstrates the feasibility of precise pick-and-place teleoperation without cameras by leveraging tactile-based 3D object reconstruction in VR and providing haptic feedback to a blindfolded user. Our preliminary results show that integrating these technologies enables the successful completion of telemanipulation tasks previously dependent on cameras, paving the way for more complex future applications.

Hydraulic Volumetric Soft Everting Vine Robot Steering Mechanism for Underwater Exploration

Sep 25, 2024

Abstract:Despite a significant proportion of the Earth being covered in water, exploration of what lies below has been limited due to the challenges and difficulties inherent in the process. Current state-of-the-art robots such as Remotely Operated Vehicles (ROVs) and Autonomous Underwater Vehicles (AUVs) are bulky, rigid and unable to conform to their environment. Soft robotics offers solutions to this issue. Fluid-actuated eversion or growing robots, in particular, are a good example. While current eversion robots have found many applications on land, their inherent properties make them particularly well suited to underwater environments. An important factor when considering underwater eversion robots is the establishment of a suitable steering mechanism that can enable the robot to change direction as required. This project proposes a design for an eversion robot that is capable of steering while underwater, through the use of bending pouches, a design commonly seen in the literature on land-based eversion robots. These bending pouches contract to enable directional change. Similar to its land-based counterparts, the underwater eversion robot uses the same fluid as the medium it operates in to achieve extension and bending, and additionally to aid neutral buoyancy. The actuation method of bending pouches meant that robots needed to fully extend before steering was possible. Three robots with the same design and dimensions were constructed from polyethylene tubes and tested. Our research shows that although the soft eversion robot design in this paper was not capable of consistently generating the same amount of bending for a given inflation volume, it still achieved suitable bending at a range of inflation volumes and was observed to bend to a maximum angle of 68 degrees at 2000 ml, which is in line with the bending angles reported for land-based eversion robots in the literature.

Large-scale Deployment of Vision-based Tactile Sensors on Multi-fingered Grippers

Aug 05, 2024

Abstract:Vision-based Tactile Sensors (VBTSs) show significant promise in that they can leverage image measurements to provide high-spatial-resolution human-like performance. However, current VBTS designs, typically confined to the fingertips of robotic grippers, prove somewhat inadequate, as many grasping and manipulation tasks require multiple contact points with the object. With an end goal of enabling large-scale, multi-surface tactile sensing via VBTSs, our research (i) develops a synchronized image acquisition system with minimal latency, (ii) proposes a modularized VBTS design for easy integration into finger phalanges, and (iii) devises a zero-shot calibration approach to improve data efficiency in the simultaneous calibration of multiple VBTSs. In validating the system within a miniature 3-fingered robotic gripper equipped with 7 VBTSs, we demonstrate improved tactile perception performance by covering the contact surfaces of both gripper fingers and palm. Additionally, we show that our VBTS design can be seamlessly integrated into various end-effector morphologies, significantly reducing the data requirements for calibration.

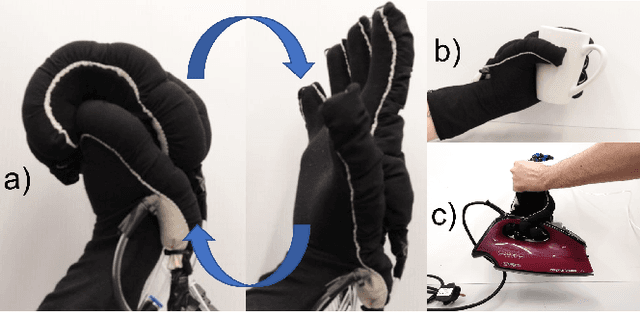

A User Study Method on Healthy Participants for Assessing an Assistive Wearable Robot Utilising EMG Sensing

Jul 31, 2024

Abstract:Hand-wearable robots, specifically exoskeletons, are designed to aid hands in daily activities, playing a crucial role in post-stroke rehabilitation and assisting the elderly. Our contribution to this field is a textile robotic glove with integrated actuators. These actuators, powered by pneumatic pressure, guide the user's hand to a desired position. Crafted from textile materials, our soft robotic glove prioritizes safety, lightweight construction, and user comfort. Utilizing the ruffles technique, integrated actuators guarantee high performance in blocking force and bending effectiveness. Here, we present a participant study confirming the effectiveness of our robotic device on a healthy participant group, exploiting EMG sensing.

* 3 pages, 4 figures, conference. arXiv admin note: text overlap with arXiv:2305.17720
