Benoit Clement

CROSSING, ENSTA Bretagne, Lab-STICC\_MATRIX

Towards Bio-inspired Heuristically Accelerated Reinforcement Learning for Adaptive Underwater Multi-Agents Behaviour

Feb 10, 2025

Abstract:This paper describes the problem of coordination of an autonomous Multi-Agent System which aims to solve the coverage planning problem in a complex environment. The considered applications are the detection and identification of objects of interest while covering an area. These tasks, which are highly relevant for space applications, are also of interest among various domains including the underwater context, which is the focus of this study. In this context, coverage planning is traditionally modelled as a Markov Decision Process where a coordinated MAS, a swarm of heterogeneous autonomous underwater vehicles, is required to survey an area and search for objects. This MDP is associated with several challenges: environment uncertainties, communication constraints, and an ensemble of hazards, including time-varying and unpredictable changes in the underwater environment. MARL algorithms can solve highly non-linear problems using deep neural networks and display great scalability against an increased number of agents. Nevertheless, most of the current results in the underwater domain are limited to simulation due to the high learning time of MARL algorithms. For this reason, a novel strategy is introduced to accelerate this convergence rate by incorporating biologically inspired heuristics to guide the policy during training. The PSO method, which is inspired by the behaviour of a group of animals, is selected as a heuristic. It allows the policy to explore the highest quality regions of the action and state spaces, from the beginning of the training, optimizing the exploration/exploitation trade-off. The resulting agent requires fewer interactions to reach optimal performance. The method is applied to the MSAC algorithm and evaluated for a 2D covering area mission in a continuous control environment.

Hydraulic Volumetric Soft Everting Vine Robot Steering Mechanism for Underwater Exploration

Sep 25, 2024Abstract:Despite a significant proportion of the Earth being covered in water, exploration of what lies below has been limited due to the challenges and difficulties inherent in the process. Current state of the art robots such as Remotely Operated Vehicles (ROVs) and Autonomous Underwater Vehicles (AUVs) are bulky, rigid and unable to conform to their environment. Soft robotics offers solutions to this issue. Fluid-actuated eversion or growing robots, in particular, are a good example. While current eversion robots have found many applications on land, their inherent properties make them particularly well suited to underwater environments. An important factor when considering underwater eversion robots is the establishment of a suitable steering mechanism that can enable the robot to change direction as required. This project proposes a design for an eversion robot that is capable of steering while underwater, through the use of bending pouches, a design commonly seen in the literature on land-based eversion robots. These bending pouches contract to enable directional change. Similar to their land-based counterparts, the underwater eversion robot uses the same fluid in the medium it operates in to achieve extension and bending but also to additionally aid in neutral buoyancy. The actuation method of bending pouches meant that robots needed to fully extend before steering was possible. Three robots, with the same design and dimensions were constructed from polyethylene tubes and tested. Our research shows that although the soft eversion robot design in this paper was not capable of consistently generating the same amounts of bending for the inflation volume, it still achieved suitable bending at a range of inflation volumes and was observed to bend to a maximum angle of 68 degrees at 2000 ml, which is in line with the bending angles reported for land-based eversion robots in the literature.

Feature Expansion and enhanced Compression for Class Incremental Learning

May 13, 2024Abstract:Class incremental learning consists in training discriminative models to classify an increasing number of classes over time. However, doing so using only the newly added class data leads to the known problem of catastrophic forgetting of the previous classes. Recently, dynamic deep learning architectures have been shown to exhibit a better stability-plasticity trade-off by dynamically adding new feature extractors to the model in order to learn new classes followed by a compression step to scale the model back to its original size, thus avoiding a growing number of parameters. In this context, we propose a new algorithm that enhances the compression of previous class knowledge by cutting and mixing patches of previous class samples with the new images during compression using our Rehearsal-CutMix method. We show that this new data augmentation reduces catastrophic forgetting by specifically targeting past class information and improving its compression. Extensive experiments performed on the CIFAR and ImageNet datasets under diverse incremental learning evaluation protocols demonstrate that our approach consistently outperforms the state-of-the-art . The code will be made available upon publication of our work.

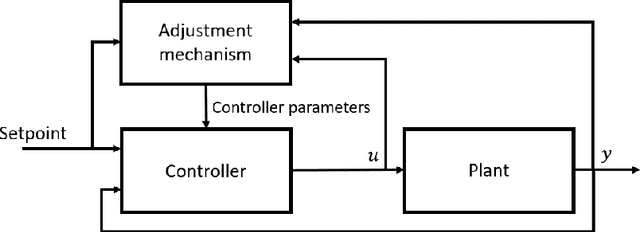

PID Tuning using Cross-Entropy Deep Learning: a Lyapunov Stability Analysis

Apr 18, 2024Abstract:Underwater Unmanned Vehicles (UUVs) have to constantly compensate for the external disturbing forces acting on their body. Adaptive Control theory is commonly used there to grant the control law some flexibility in its response to process variation. Today, learning-based (LB) adaptive methods are leading the field where model-based control structures are combined with deep model-free learning algorithms. This work proposes experiments and metrics to empirically study the stability of such a controller. We perform this stability analysis on a LB adaptive control system whose adaptive parameters are determined using a Cross-Entropy Deep Learning method.

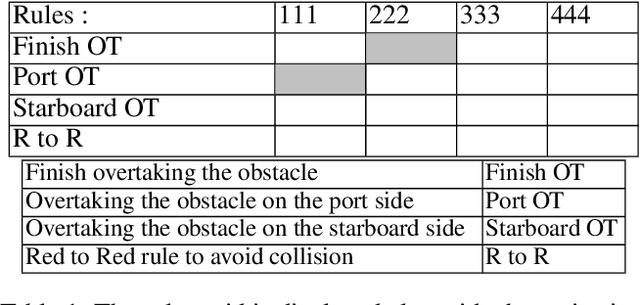

Hybrid Navigation Acceptability and Safety

Apr 18, 2024

Abstract:Autonomous vessels have emerged as a prominent and accepted solution, particularly in the naval defence sector. However, achieving full autonomy for marine vessels demands the development of robust and reliable control and guidance systems that can handle various encounters with manned and unmanned vessels while operating effectively under diverse weather and sea conditions. A significant challenge in this pursuit is ensuring the autonomous vessels' compliance with the International Regulations for Preventing Collisions at Sea (COLREGs). These regulations present a formidable hurdle for the human-level understanding by autonomous systems as they were originally designed from common navigation practices created since the mid-19th century. Their ambiguous language assumes experienced sailors' interpretation and execution, and therefore demands a high-level (cognitive) understanding of language and agent intentions. These capabilities surpass the current state-of-the-art in intelligent systems. This position paper highlights the critical requirements for a trustworthy control and guidance system, exploring the complexity of adapting COLREGs for safe vessel-on-vessel encounters considering autonomous maritime technology competing and/or cooperating with manned vessels.

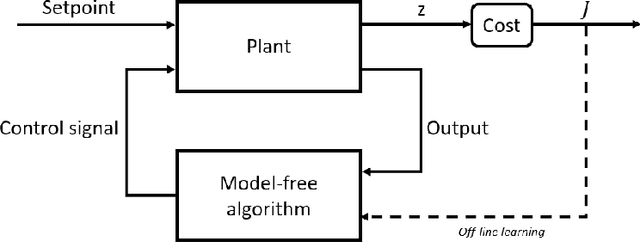

Sim-to-Real Transfer of Adaptive Control Parameters for AUV Stabilization under Current Disturbance

Oct 17, 2023Abstract:Learning-based adaptive control methods hold the premise of enabling autonomous agents to reduce the effect of process variations with minimal human intervention. However, its application to autonomous underwater vehicles (AUVs) has so far been restricted due to 1) unknown dynamics under the form of sea current disturbance that we can not model properly nor measure due to limited sensor capability and 2) the nonlinearity of AUVs tasks where the controller response at some operating points must be overly conservative in order to satisfy the specification at other operating points. Deep Reinforcement Learning (DRL) can alleviates these limitations by training general-purpose neural network policies, but applications of DRL algorithms to AUVs have been restricted to simulated environments, due to their inherent high sample complexity and distribution shift problem. This paper presents a novel approach, merging the Maximum Entropy Deep Reinforcement Learning framework with a classic model-based control architecture, to formulate an adaptive controller. Within this framework, we introduce a Sim-to-Real transfer strategy comprising the following components: a bio-inspired experience replay mechanism, an enhanced domain randomisation technique, and an evaluation protocol executed on a physical platform. Our experimental assessments demonstrate that this method effectively learns proficient policies from suboptimal simulated models of the AUV, resulting in control performance 3 times higher when transferred to a real-world vehicle, compared to its model-based nonadaptive but optimal counterpart.

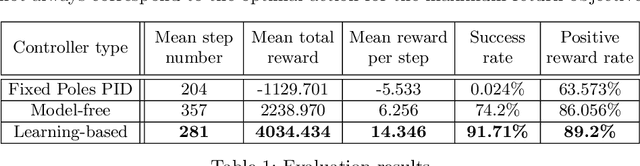

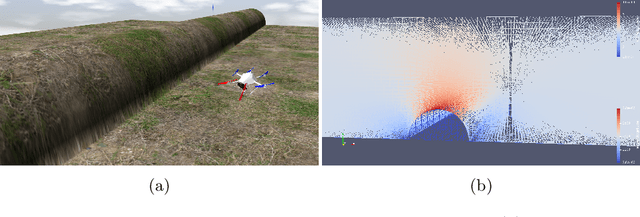

Learning-based vs Model-free Adaptive Control of a MAV under Wind Gust

Jan 29, 2021

Abstract:Navigation problems under unknown varying conditions are among the most important and well-studied problems in the control field. Classic model-based adaptive control methods can be applied only when a convenient model of the plant or environment is provided. Recent model-free adaptive control methods aim at removing this dependency by learning the physical characteristics of the plant and/or process directly from sensor feedback. Although there have been prior attempts at improving these techniques, it remains an open question as to whether it is possible to cope with real-world uncertainties in a control system that is fully based on either paradigm. We propose a conceptually simple learning-based approach composed of a full state feedback controller, tuned robustly by a deep reinforcement learning framework based on the Soft Actor-Critic algorithm. We compare it, in realistic simulations, to a model-free controller that uses the same deep reinforcement learning framework for the control of a micro aerial vehicle under wind gust. The results indicate the great potential of learning-based adaptive control methods in modern dynamical systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge