Andrew Lammas

Sim-to-Real Transfer of Adaptive Control Parameters for AUV Stabilization under Current Disturbance

Oct 17, 2023

Abstract: Learning-based adaptive control methods hold the promise of enabling autonomous agents to reduce the effect of process variations with minimal human intervention. However, their application to autonomous underwater vehicles (AUVs) has so far been restricted due to 1) unknown dynamics in the form of sea current disturbances, which can be neither modelled properly nor measured because of limited sensor capability, and 2) the nonlinearity of AUV tasks, where the controller response at some operating points must be overly conservative in order to satisfy the specification at other operating points. Deep Reinforcement Learning (DRL) can alleviate these limitations by training general-purpose neural network policies, but applications of DRL algorithms to AUVs have been restricted to simulated environments because of their inherently high sample complexity and the distribution shift problem. This paper presents a novel approach that merges the Maximum Entropy Deep Reinforcement Learning framework with a classic model-based control architecture to formulate an adaptive controller. Within this framework, we introduce a Sim-to-Real transfer strategy comprising the following components: a bio-inspired experience replay mechanism, an enhanced domain randomisation technique, and an evaluation protocol executed on a physical platform. Our experimental assessments demonstrate that this method effectively learns proficient policies from suboptimal simulated models of the AUV, resulting in control performance three times higher when transferred to a real-world vehicle, compared to its model-based, nonadaptive but optimal counterpart.
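As an illustration of the domain randomisation idea mentioned in the abstract, the sketch below draws a fresh set of simulator parameters for each training episode. It shows plain uniform randomisation only, not the enhanced technique of the paper, and every name and value in it (`SimParams`, `NOMINAL`, `sample_params`, the spreads and the current range) is a hypothetical assumption chosen for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    mass_kg: float       # vehicle mass used by the simulator
    drag_coeff: float    # lumped drag coefficient
    current_mps: float   # constant sea current along one axis


# Hypothetical nominal values; not taken from the paper.
NOMINAL = SimParams(mass_kg=30.0, drag_coeff=5.0, current_mps=0.0)


def sample_params(rng: random.Random, spread: float = 0.2,
                  max_current: float = 0.5) -> SimParams:
    """Draw one randomised parameter set for a single training episode."""
    def scale(x: float) -> float:
        # Perturb a nominal value by up to +/- spread (relative).
        return x * rng.uniform(1.0 - spread, 1.0 + spread)

    return SimParams(
        mass_kg=scale(NOMINAL.mass_kg),
        drag_coeff=scale(NOMINAL.drag_coeff),
        current_mps=rng.uniform(-max_current, max_current),
    )


rng = random.Random(0)  # seeded so the draws are reproducible
episodes = [sample_params(rng) for _ in range(5)]
```

A policy trained across such draws sees many plausible vehicles and currents rather than one simulator configuration, which is the general motivation for randomisation in Sim-to-Real transfer.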

Real-time Quasi-Optimal Trajectory Planning for Autonomous Underwater Docking

May 03, 2016

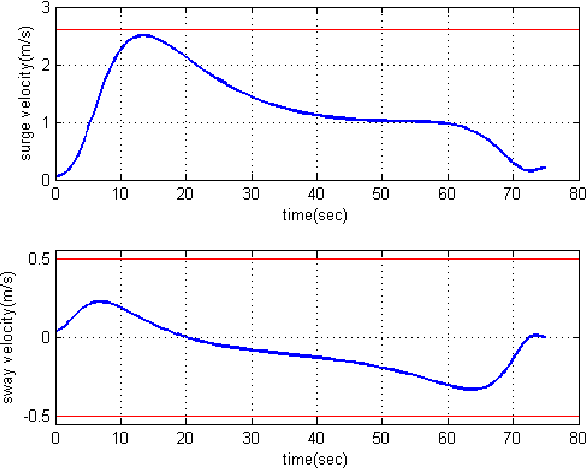

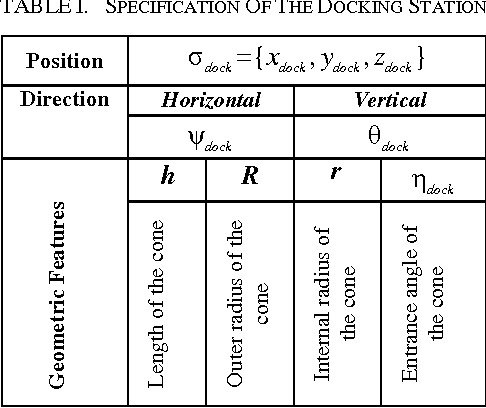

Abstract: In this paper, a real-time quasi-optimal trajectory planning scheme is employed to guide an autonomous underwater vehicle (AUV) safely into a funnel-shaped stationary docking station. By taking advantage of the direct method of the calculus of variations and inverse dynamics optimization, the proposed trajectory planner provides a computationally efficient framework for autonomous underwater docking in a 3D cluttered undersea environment. Vehicular constraints, such as constraints on AUV states and actuators; boundary conditions, including initial and final vehicle poses; and environmental constraints, for instance no-fly zones and current disturbances, are all modelled and considered in the problem formulation. The performance of the proposed planner is analyzed through simulation studies. To show the reliability and robustness of the method in dealing with uncertainty, Monte Carlo runs and statistical analysis are carried out. The results of the simulations indicate that the proposed planner is well suited for real-time implementation in a dynamic and uncertain environment.
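The combination of a direct method with inverse dynamics can be sketched in one dimension: a polynomial reference trajectory is fixed by the boundary conditions, the control it requires is recovered from the dynamics, and a single free parameter (here the arrival time) is varied until the actuator limit holds. This is a toy sketch under assumed surge dynamics `u = mass * a + drag * |v| * v`; the function names, the mass and drag values, and the candidate arrival times are all hypothetical and do not come from the paper.

```python
def cubic_coeffs(x0, v0, xf, vf, T):
    """Coefficients of x(t) = a0 + a1*t + a2*t**2 + a3*t**3 that meet
    the position and velocity boundary conditions at t = 0 and t = T."""
    dx = xf - x0
    a2 = (3.0 * dx - (2.0 * v0 + vf) * T) / T**2
    a3 = (-2.0 * dx + (v0 + vf) * T) / T**3
    return (x0, v0, a2, a3)


def required_thrust(coeffs, t, mass=30.0, drag=5.0):
    """Inverse dynamics: thrust needed to follow the reference at time t,
    assuming u = mass * a + drag * |v| * v (hypothetical 1D model)."""
    _, a1, a2, a3 = coeffs
    v = a1 + 2.0 * a2 * t + 3.0 * a3 * t**2
    a = 2.0 * a2 + 6.0 * a3 * t
    return mass * a + drag * abs(v) * v


def shortest_feasible_time(x0, v0, xf, vf, u_max, candidates, samples=50):
    """Return the first candidate arrival time whose sampled thrust
    profile stays within +/- u_max, with its coefficients, else None."""
    for T in candidates:
        c = cubic_coeffs(x0, v0, xf, vf, T)
        if all(abs(required_thrust(c, T * k / samples)) <= u_max
               for k in range(samples + 1)):
            return T, c
    return None


# Travel 10 m from rest to rest with a 20 N thrust limit.
result = shortest_feasible_time(0.0, 0.0, 10.0, 0.0, 20.0, [5, 10, 20, 40])
```

Because the trajectory satisfies the boundary conditions by construction, the search only has to check constraints, which is what keeps this class of planner cheap enough for real-time use.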
