Kai Wu

Sid

MedConsultBench: A Full-Cycle, Fine-Grained, Process-Aware Benchmark for Medical Consultation Agents

Jan 19, 2026

Abstract:Current evaluations of medical consultation agents often prioritize outcome-oriented tasks, frequently overlooking the end-to-end process integrity and clinical safety essential for real-world practice. While recent interactive benchmarks have introduced dynamic scenarios, they often remain fragmented and coarse-grained, failing to capture the structured inquiry logic and diagnostic rigor required in professional consultations. To bridge this gap, we propose MedConsultBench, a comprehensive framework designed to evaluate the complete online consultation cycle by covering the entire clinical workflow from history taking and diagnosis to treatment planning and follow-up Q&A. Our methodology introduces Atomic Information Units (AIUs) to track clinical information acquisition at a sub-turn level, enabling precise monitoring of how key facts are elicited through 22 fine-grained metrics. By addressing the underspecification and ambiguity inherent in online consultations, the benchmark evaluates uncertainty-aware yet concise inquiry while emphasizing medication regimen compatibility and the ability to handle realistic post-prescription follow-up Q&A via constraint-respecting plan revisions. Systematic evaluation of 19 large language models reveals that high diagnostic accuracy often masks significant deficiencies in information-gathering efficiency and medication safety. These results underscore a critical gap between theoretical medical knowledge and clinical practice ability, establishing MedConsultBench as a rigorous foundation for aligning medical AI with the nuanced requirements of real-world clinical care.
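To make the idea of tracking information acquisition at a sub-turn level concrete, here is a minimal sketch. It is not the benchmark's implementation: the function name `track_aius`, the AIU identifiers, and the three reported quantities (coverage, first-elicitation turn, redundant sub-turns) are hypothetical and chosen only for illustration. The abstract does not specify the 22 metrics.

```python
def track_aius(required, sub_turns):
    """Track which required AIUs are elicited, sub-turn by sub-turn.

    required:  iterable of AIU identifiers the case expects to be gathered.
    sub_turns: list of (turn_index, elicited_aiu_ids) pairs, one per question
               inside a turn (hypothetical input format).
    """
    required = set(required)
    first_seen = {}   # AIU id -> turn index where it was first elicited
    redundant = 0     # sub-turns that surfaced no new required AIU

    for turn, elicited in sub_turns:
        new = (set(elicited) & required) - set(first_seen)
        if not new:
            redundant += 1
        for aiu in new:
            first_seen[aiu] = turn

    coverage = len(first_seen) / len(required) if required else 0.0
    return {
        "coverage": coverage,
        "first_seen": first_seen,
        "redundant_sub_turns": redundant,
    }


# Illustrative dialogue: two questions in turn 1, one each in turns 2 and 3.
report = track_aius(
    required={"onset", "fever", "allergy", "medication"},
    sub_turns=[(1, {"onset"}), (1, {"fever"}), (2, {"onset"}), (3, {"allergy"})],
)
```

In this illustrative dialogue the repeated question about onset counts as a redundant sub-turn, and the medication AIU is never elicited, so coverage stays below 1.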

Boosting Latent Diffusion Models via Disentangled Representation Alignment

Jan 09, 2026

Abstract:Latent Diffusion Models (LDMs) generate high-quality images by operating in a compressed latent space, typically obtained through image tokenizers such as Variational Autoencoders (VAEs). In pursuit of a generation-friendly VAE, recent studies have explored leveraging Vision Foundation Models (VFMs) as representation alignment targets for VAEs, mirroring the approach commonly adopted for LDMs. Although this yields certain performance gains, using the same alignment target for both VAEs and LDMs overlooks their fundamentally different representational requirements. We advocate that while LDMs benefit from latents retaining high-level semantic concepts, VAEs should excel in semantic disentanglement, enabling encoding of attribute-level information in a structured way. To address this, we propose the Semantic disentangled VAE (Send-VAE), explicitly optimized for disentangled representation learning through aligning its latent space with the semantic hierarchy of pre-trained VFMs. Our approach employs a non-linear mapper network to transform VAE latents, aligning them with VFMs to bridge the gap between attribute-level disentanglement and high-level semantics, facilitating effective guidance for VAE learning. We evaluate semantic disentanglement via linear probing on attribute prediction tasks, showing strong correlation with improved generation performance. Finally, using Send-VAE, we train flow-based transformers (SiTs); experiments show Send-VAE significantly speeds up training and achieves state-of-the-art FIDs of 1.21 and 1.75 with and without classifier-free guidance, respectively, on ImageNet 256x256.

A Style is Worth One Code: Unlocking Code-to-Style Image Generation with Discrete Style Space

Nov 19, 2025

Abstract:Innovative visual stylization is a cornerstone of artistic creation, yet generating novel and consistent visual styles remains a significant challenge. Existing generative approaches typically rely on lengthy textual prompts, reference images, or parameter-efficient fine-tuning to guide style-aware image generation, but often struggle with style consistency, limited creativity, and complex style representations. In this paper, we affirm that a style is worth one numerical code by introducing the novel task, code-to-style image generation, which produces images with novel, consistent visual styles conditioned solely on a numerical style code. To date, this field has been explored primarily by industry (e.g., Midjourney), with no open-source research from the academic community. To fill this gap, we propose CoTyle, the first open-source method for this task. Specifically, we first train a discrete style codebook from a collection of images to extract style embeddings. These embeddings serve as conditions for a text-to-image diffusion model (T2I-DM) to generate stylistic images. Subsequently, we train an autoregressive style generator on the discrete style embeddings to model their distribution, allowing the synthesis of novel style embeddings. During inference, a numerical style code is mapped to a unique style embedding by the style generator, and this embedding guides the T2I-DM to generate images in the corresponding style. Unlike existing methods, our method offers unparalleled simplicity and diversity, unlocking a vast space of reproducible styles from minimal input. Extensive experiments validate that CoTyle effectively turns a numerical code into a style controller, demonstrating that a style is worth one code.

Textual Self-attention Network: Test-Time Preference Optimization through Textual Gradient-based Attention

Nov 10, 2025

Abstract:Large Language Models (LLMs) have demonstrated remarkable generalization capabilities, but aligning their outputs with human preferences typically requires expensive supervised fine-tuning. Recent test-time methods leverage textual feedback to overcome this, but they often critique and revise a single candidate response, lacking a principled mechanism to systematically analyze, weigh, and synthesize the strengths of multiple promising candidates. Such a mechanism is crucial because different responses may excel in distinct aspects (e.g., clarity, factual accuracy, or tone), and combining their best elements may produce a far superior outcome. This paper proposes the Textual Self-Attention Network (TSAN), a new paradigm for test-time preference optimization that requires no parameter updates. TSAN emulates self-attention entirely in natural language to overcome this gap: it analyzes multiple candidates by formatting them into textual keys and values, weighs their relevance using an LLM-based attention module, and synthesizes their strengths into a new, preference-aligned response under the guidance of the learned textual attention. This entire process operates in a textual gradient space, enabling iterative and interpretable optimization. Empirical evaluations demonstrate that with just three test-time iterations on a base SFT model, TSAN outperforms supervised models like Llama-3.1-70B-Instruct and surpasses the current state-of-the-art test-time alignment method by effectively leveraging multiple candidate solutions.

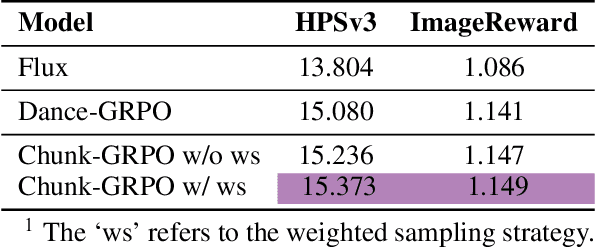

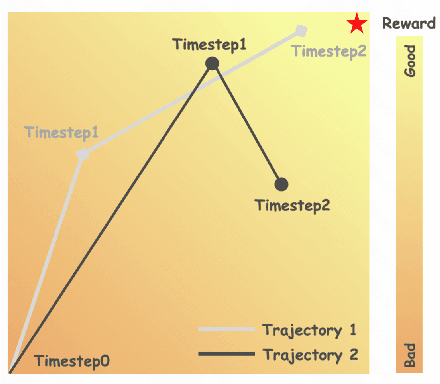

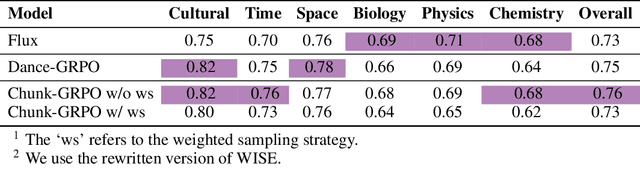

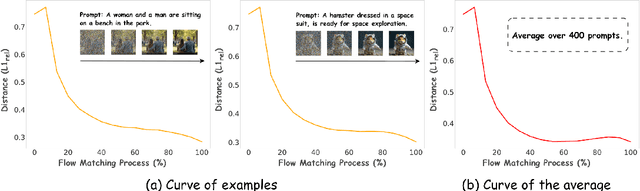

Sample By Step, Optimize By Chunk: Chunk-Level GRPO For Text-to-Image Generation

Oct 24, 2025

Abstract:Group Relative Policy Optimization (GRPO) has shown strong potential for flow-matching-based text-to-image (T2I) generation, but it faces two key limitations: inaccurate advantage attribution and neglect of the temporal dynamics of generation. In this work, we argue that shifting the optimization paradigm from the step level to the chunk level can effectively alleviate these issues. Building on this idea, we propose Chunk-GRPO, the first chunk-level GRPO-based approach for T2I generation. The insight is to group consecutive steps into coherent 'chunks' that capture the intrinsic temporal dynamics of flow matching, and to optimize policies at the chunk level. In addition, we introduce an optional weighted sampling strategy to further enhance performance. Extensive experiments show that Chunk-GRPO achieves superior results in both preference alignment and image quality, highlighting the promise of chunk-level optimization for GRPO-based methods.
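The shift from step-level to chunk-level optimization can be illustrated with a small sketch. It assumes the usual GRPO advantage (reward standardized within a group of samples for the same prompt) and assumes that a chunk-level likelihood ratio is formed by summing per-step log-ratios inside each chunk. The chunk sizes and the helper names are hypothetical; the abstract does not say how Chunk-GRPO picks chunk boundaries or weights chunks.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize rewards within one group of samples (GRPO-style)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def split_into_chunks(num_steps, chunk_sizes):
    """Partition step indices 0..num_steps-1 into consecutive chunks."""
    if sum(chunk_sizes) != num_steps:
        raise ValueError("chunk sizes must sum to the number of steps")
    chunks, start = [], 0
    for size in chunk_sizes:
        chunks.append(list(range(start, start + size)))
        start += size
    return chunks


def chunk_log_ratios(step_log_ratios, chunks):
    """Sum per-step log-ratios inside each chunk (assumed aggregation)."""
    return [sum(step_log_ratios[t] for t in chunk) for chunk in chunks]


# Illustrative values: 3 samples in a group, 10 sampling steps per sample.
advantages = group_relative_advantages([1.0, 2.0, 3.0])
chunks = split_into_chunks(10, [2, 3, 5])       # hypothetical chunk sizes
ratios = chunk_log_ratios([0.1] * 10, chunks)   # one value per chunk
```

Each sample's advantage would then be applied once per chunk rather than once per step, which is the granularity change the abstract argues for.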

BaseReward: A Strong Baseline for Multimodal Reward Model

Sep 19, 2025

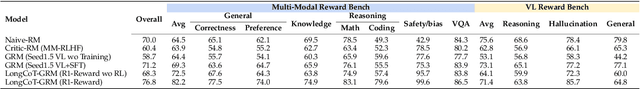

Abstract:The rapid advancement of Multimodal Large Language Models (MLLMs) has made aligning them with human preferences a critical challenge. Reward Models (RMs) are a core technology for achieving this goal, but a systematic guide for building state-of-the-art Multimodal Reward Models (MRMs) is currently lacking in both academia and industry. Through exhaustive experimental analysis, this paper aims to provide a clear "recipe" for constructing high-performance MRMs. We systematically investigate every crucial component in the MRM development pipeline, including reward modeling paradigms (e.g., Naive-RM, Critic-based RM, and Generative RM), reward head architecture, training strategies, data curation (covering over ten multimodal and text-only preference datasets), backbone model and model scale, and ensemble methods. Based on these experimental insights, we introduce BaseReward, a powerful and efficient baseline for multimodal reward modeling. BaseReward adopts a simple yet effective architecture, built upon a Qwen2.5-VL backbone, featuring an optimized two-layer reward head, and is trained on a carefully curated mixture of high-quality multimodal and text-only preference data. Our results show that BaseReward establishes a new SOTA on major benchmarks such as MM-RLHF-Reward Bench, VL-Reward Bench, and Multimodal Reward Bench, outperforming previous models. Furthermore, to validate its practical utility beyond static benchmarks, we integrate BaseReward into a real-world reinforcement learning pipeline, successfully enhancing an MLLM's performance across various perception, reasoning, and conversational tasks. This work not only delivers a top-tier MRM but, more importantly, provides the community with a clear, empirically-backed guide for developing robust reward models for the next generation of MLLMs.

Towards SISO Bistatic Sensing for ISAC

Aug 18, 2025

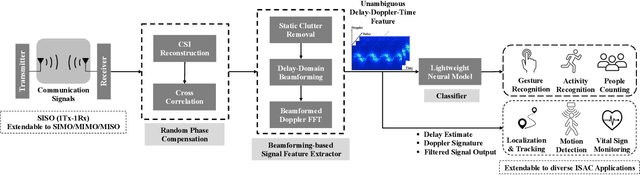

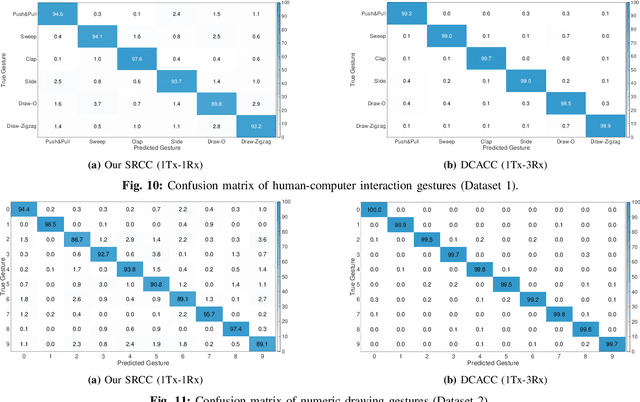

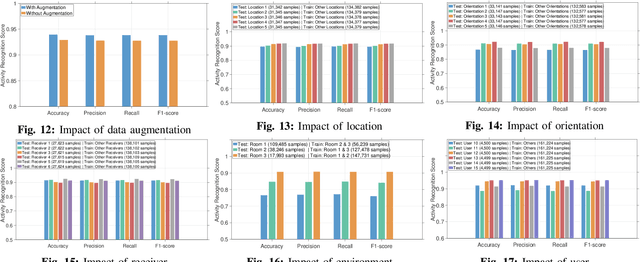

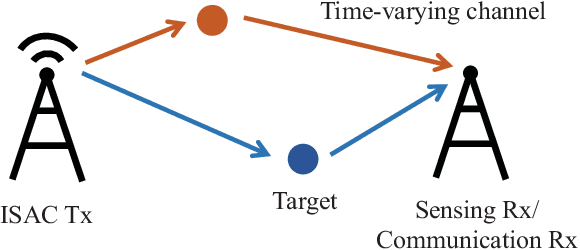

Abstract:Integrated Sensing and Communication (ISAC) is a key enabler for next-generation wireless systems. However, real-world deployment is often limited to low-cost, single-antenna transceivers. In such a bistatic Single-Input Single-Output (SISO) setup, clock asynchrony introduces random phase offsets in Channel State Information (CSI), which cannot be mitigated using conventional multi-antenna methods. This work proposes WiDFS 3.0, a lightweight bistatic SISO sensing framework that enables accurate delay and Doppler estimation from distorted CSI by effectively suppressing Doppler mirroring ambiguity. It operates with only a single antenna at both the transmitter and receiver, making it suitable for low-complexity deployments. We propose a self-referencing cross-correlation (SRCC) method for SISO random phase removal and employ delay-domain beamforming to resolve Doppler ambiguity. The resulting unambiguous delay-Doppler-time features enable robust sensing with compact neural networks. Extensive experiments show that WiDFS 3.0 achieves accurate parameter estimation, with performance comparable to or even surpassing that of prior multi-antenna methods, especially in delay estimation. Validated under single- and multi-target scenarios, the extracted ambiguity-resolved features show strong sensing accuracy and generalization. For example, when deployed on the embedded-friendly MobileViT-XXS with only 1.3M parameters, WiDFS 3.0 consistently outperforms conventional features such as CSI amplitude, mirrored Doppler, and multi-receiver aggregated Doppler.

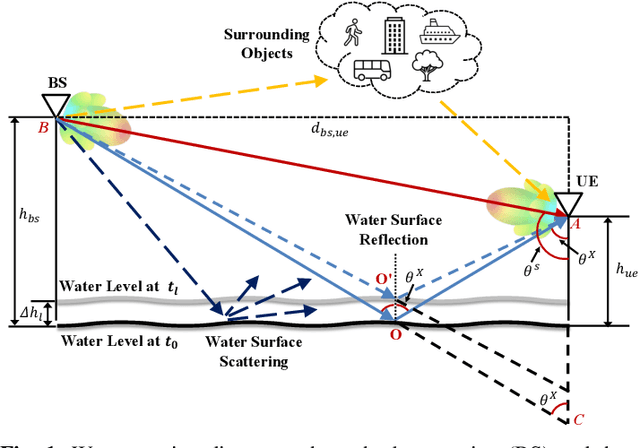

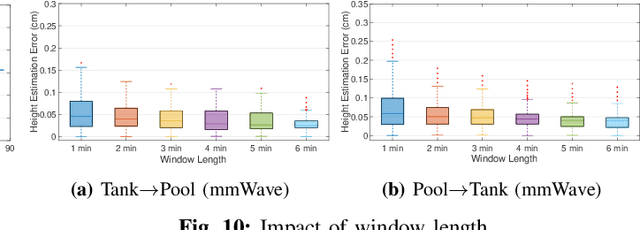

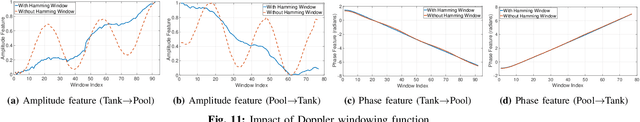

Water Level Sensing via Communication Signals in a Bi-Static System

May 26, 2025

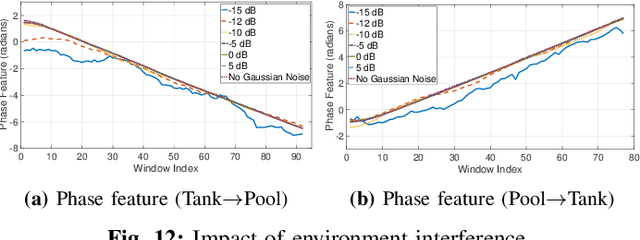

Abstract:Accurate water level sensing is essential for flood monitoring, agricultural irrigation, and water resource optimization. Traditional methods require dedicated sensor deployments, leading to high installation costs, vulnerability to interference, and limited resolution. This work proposes PMNs-WaterSense, a novel scheme leveraging Channel State Information (CSI) from existing mobile networks for water level sensing. Our scheme begins with a CSI-power method to eliminate phase offsets caused by clock asynchrony in bi-static systems. We then apply multi-domain filtering across the time (Doppler), frequency (delay), and spatial (Angle-of-Arrival, AoA) domains to extract phase features that finely capture variations in path length over water. To resolve the $2\pi$ phase ambiguity, we introduce a Kalman filter-based unwrapping technique. Additionally, we exploit transceiver geometry to convert path length variations into water level height changes, even with limited antenna configurations. We validate our framework through controlled experiments with 28 GHz mmWave and 3.1 GHz LTE signals in real time, achieving average height estimation errors of 0.025 cm and 0.198 cm, respectively. Moreover, real-world river monitoring with 2.6 GHz LTE signals achieves an average error of 4.8 cm for a 1-meter water level change, demonstrating its effectiveness in practical deployments.
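The $2\pi$ ambiguity mentioned above can be illustrated with a minimal scalar sketch. It assumes a constant-phase (random-walk) Kalman model whose prediction selects the $2\pi$ multiple that brings each wrapped measurement closest to the predicted phase. The state model, the noise variances `q` and `r`, and the function names are assumptions for illustration; the abstract does not describe the filter the authors actually use.

```python
import math

TWO_PI = 2.0 * math.pi


def wrap(phase):
    """Wrap a phase value into [-pi, pi)."""
    return (phase + math.pi) % TWO_PI - math.pi


def kalman_unwrap(wrapped, q=1.0, r=0.1):
    """Unwrap a wrapped phase sequence using a scalar Kalman prediction.

    q: assumed process-noise variance (how fast the phase may drift).
    r: assumed measurement-noise variance.
    """
    if not wrapped:
        return []
    x, p = wrapped[0], 1.0          # state estimate and its variance
    unwrapped = [x]
    for z in wrapped[1:]:
        p_pred = p + q              # predict: phase assumed unchanged
        # Pick the integer number of cycles closest to the prediction.
        cycles = round((x - z) / TWO_PI)
        z_u = z + TWO_PI * cycles
        gain = p_pred / (p_pred + r)
        x = x + gain * (z_u - x)    # update with the unwrapped measurement
        p = (1.0 - gain) * p_pred
        unwrapped.append(z_u)
    return unwrapped


# Illustrative data: a phase ramp that crosses several 2*pi boundaries.
true_phase = [0.5 * i for i in range(40)]
recovered = kalman_unwrap([wrap(t) for t in true_phase])
```

The sketch only works while the phase change between samples, plus the filter's lag, stays below $\pi$. A real deployment would need a motion model and noise settings matched to the measured signal.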

Bayesian Sensing for Time-Varying Channels in ISAC Systems

Apr 21, 2025

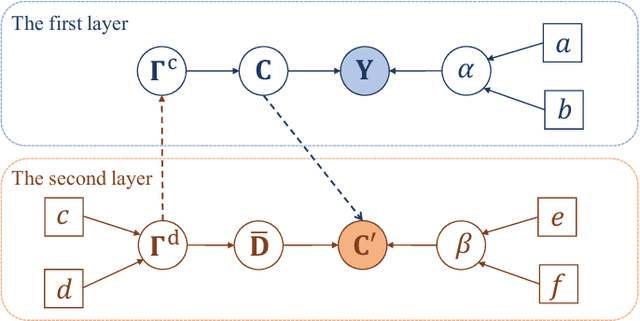

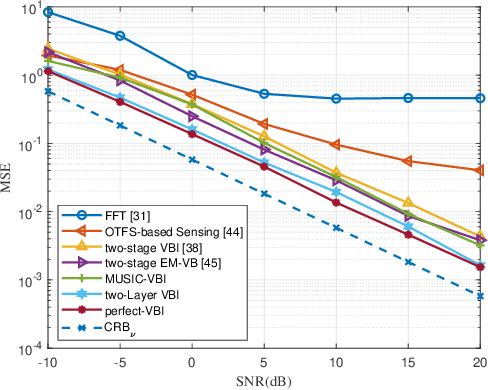

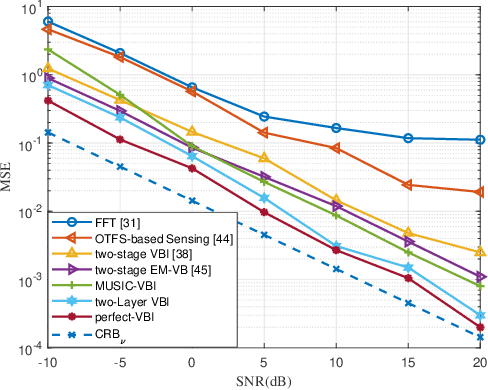

Abstract:Future mobile networks are projected to support integrated sensing and communications (ISAC) in high-speed communication scenarios. Nevertheless, large Doppler shifts induced by time-varying channels may cause severe inter-carrier interference (ICI). The frequency domain shows potential for reducing ISAC complexity compared with other domains. However, a parameter mismatch issue still exists for such sensing. In this paper, we develop a novel sensing scheme based on a sparse Bayesian framework, where the delay and Doppler estimation problem in time-varying channels is formulated as a 3D multiple measurement-sparse signal recovery (MM-SSR) problem. We then propose a novel two-layer variational Bayesian inference (VBI) method that decomposes the 3D MM-SSR problem into two layers and alternately estimates the Doppler in the first layer and the delay in the second layer. Subsequently, benefiting from a newly unveiled signal construction, a simplified two-stage multiple signal classification (MUSIC)-based VBI method is proposed, where the delay and the Doppler are estimated by MUSIC and VBI, respectively. Additionally, the Cramér-Rao bound (CRB) of the considered sensing parameters is derived to characterize the lower bound for the proposed estimators. Extensive simulation results show that our proposed method achieves a lower mean square error (MSE) than its conventional counterparts and is robust to the number and speed of targets, thereby validating its wide applicability and advantages over prior art.

TeleAntiFraud-28k: An Audio-Text Slow-Thinking Dataset for Telecom Fraud Detection

Apr 01, 2025

Abstract:The detection of telecom fraud faces significant challenges due to the lack of high-quality multimodal training data that integrates audio signals with reasoning-oriented textual analysis. To address this gap, we present TeleAntiFraud-28k, the first open-source audio-text slow-thinking dataset specifically designed for automated telecom fraud analysis. Our dataset is constructed through three strategies: (1) Privacy-preserved text-truth sample generation using automatic speech recognition (ASR)-transcribed call recordings (with anonymized original audio), ensuring real-world consistency through text-to-speech (TTS) model regeneration; (2) Semantic enhancement via large language model (LLM)-based self-instruction sampling on authentic ASR outputs to expand scenario coverage; (3) Multi-agent adversarial synthesis that simulates emerging fraud tactics through predefined communication scenarios and fraud typologies. The generated dataset contains 28,511 rigorously processed speech-text pairs, complete with detailed annotations for fraud reasoning. The dataset is divided into three tasks: scenario classification, fraud detection, and fraud type classification. Furthermore, we construct TeleAntiFraud-Bench, a standardized evaluation benchmark comprising proportionally sampled instances from the dataset, to facilitate systematic testing of model performance on telecom fraud detection tasks. We also contribute a production-optimized supervised fine-tuning (SFT) model trained on hybrid real/synthetic data, while open-sourcing the data processing framework to enable community-driven dataset expansion. This work establishes a foundational framework for multimodal anti-fraud research while addressing critical challenges in data privacy and scenario diversity. The project will be released at https://github.com/JimmyMa99/TeleAntiFraud.
