Jincai Huang

TempDiffReg: Temporal Diffusion Model for Non-Rigid 2D-3D Vascular Registration

Jan 26, 2026

Abstract:Transarterial chemoembolization (TACE) is a preferred treatment option for hepatocellular carcinoma and other liver malignancies, yet it remains a highly challenging procedure due to complex intra-operative vascular navigation and anatomical variability. Accurate and robust 2D-3D vessel registration is essential to guide microcatheter and instruments during TACE, enabling precise localization of vascular structures and optimal therapeutic targeting. To tackle this issue, we develop a coarse-to-fine registration strategy. First, we introduce a global alignment module, structure-aware perspective n-point (SA-PnP), to establish correspondence between 2D and 3D vessel structures. Second, we propose TempDiffReg, a temporal diffusion model that performs vessel deformation iteratively by leveraging temporal context to capture complex anatomical variations and local structural changes. We collected data from 23 patients and constructed 626 paired multi-frame samples for comprehensive evaluation. Experimental results demonstrate that the proposed method consistently outperforms state-of-the-art (SOTA) methods in both accuracy and anatomical plausibility. Specifically, our method achieves a mean squared error (MSE) of 0.63 mm and a mean absolute error (MAE) of 0.51 mm in registration accuracy, representing 66.7% lower MSE and 17.7% lower MAE compared to the most competitive existing approaches. It has the potential to assist less-experienced clinicians in safely and efficiently performing complex TACE procedures, ultimately enhancing both surgical outcomes and patient care. Code and data are available at: https://github.com/LZH970328/TempDiffReg.git
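As a rough illustration of how the reported registration metrics could be computed, the sketch below measures MSE and MAE over point-wise Euclidean distances between projected and target vessel points, plus a relative reduction against a baseline. The function names, the point-wise distance definition, and the toy inputs are assumptions made for illustration and are not taken from the paper or its repository.

```python
import math

def registration_errors(pred_points, target_points):
    """Return (mse, mae) over point-wise Euclidean distances.

    Assumption: the error of one point is its Euclidean distance to the
    matching target point; the paper may define the metrics differently.
    """
    if len(pred_points) != len(target_points) or not pred_points:
        raise ValueError("point sets must be non-empty and of equal length")
    dists = [
        math.hypot(px - tx, py - ty)
        for (px, py), (tx, ty) in zip(pred_points, target_points)
    ]
    mse = sum(d * d for d in dists) / len(dists)
    mae = sum(dists) / len(dists)
    return mse, mae

def relative_reduction(baseline, ours):
    """Percentage by which `ours` is lower than `baseline`."""
    return 100.0 * (baseline - ours) / baseline

# Toy values only, not data from the paper.
mse, mae = registration_errors([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)])
```

A statement such as "66.7% lower MSE" corresponds to `relative_reduction(baseline_mse, method_mse)` under this reading.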

Surgical Scene Segmentation using a Spike-Driven Video Transformer with Real-Time Potential

Dec 24, 2025

Abstract:Modern surgical systems increasingly rely on intelligent scene understanding to provide timely situational awareness for enhanced intra-operative safety. Within this pipeline, surgical scene segmentation plays a central role in accurately perceiving operative events. Although recent deep learning models, particularly large-scale foundation models, achieve remarkable segmentation accuracy, their substantial computational demands and power consumption hinder real-time deployment in resource-constrained surgical environments. To address this limitation, we explore emerging spiking neural networks (SNNs) as a promising paradigm for highly efficient surgical intelligence. However, their performance is still constrained by the scarcity of labeled surgical data and the inherently sparse nature of surgical video representations. To this end, we propose SpikeSurgSeg, the first spike-driven video Transformer framework tailored for surgical scene segmentation with real-time potential on non-GPU platforms. To address the limited availability of surgical annotations, we introduce a surgical-scene masked autoencoding pretraining strategy for SNNs that enables robust spatiotemporal representation learning via layer-wise tube masking. Building on this pretrained backbone, we further adopt a lightweight spike-driven segmentation head that produces temporally consistent predictions while preserving the low-latency characteristics of SNNs. Extensive experiments on EndoVis18 and our in-house SurgBleed dataset demonstrate that SpikeSurgSeg achieves mIoU comparable to SOTA ANN-based models while reducing inference latency by at least 8×. Notably, it delivers over 20× acceleration relative to most foundation-model baselines, underscoring its potential for time-critical surgical scene segmentation.
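For readers unfamiliar with the mIoU metric mentioned above, here is a minimal sketch of mean intersection-over-union on flattened label maps. The helper name `mean_iou` and the choice to average only over classes that appear in either map are assumptions; benchmark protocols such as the one used for EndoVis18 may handle absent classes differently.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes present in the prediction or the ground truth.

    `pred` and `target` are equal-length sequences of integer class labels
    (for example, a flattened segmentation mask).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both maps
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0

# Toy example: class 0 has IoU 1/2, class 1 has IoU 2/3.
score = mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```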

T3DM: Test-Time Training-Guided Distribution Shift Modelling for Temporal Knowledge Graph Reasoning

Jul 02, 2025

Abstract:Temporal Knowledge Graph (TKG) is an efficient method for describing the dynamic development of facts along a timeline. Most research on TKG reasoning (TKGR) focuses on modelling the repetition of global facts and designing patterns of local historical facts. However, existing methods face two significant challenges: inadequate modeling of the event distribution shift between training and test samples, and reliance on random entity substitution for generating negative samples, which often results in low-quality sampling. To this end, we propose a novel distributional feature modeling approach for training TKGR models, Test-Time Training-guided Distribution shift Modelling (T3DM), to adjust the model based on distribution shift and ensure the global consistency of model reasoning. In addition, we design a negative-sampling strategy to generate higher-quality negative quadruples based on adversarial training. Extensive experiments show that T3DM provides better and more robust results than the state-of-the-art baselines in most cases.
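The "random entity substitution" baseline that the abstract criticizes can be sketched as follows. This illustrates only the conventional sampling scheme, not T3DM's adversarial strategy; the function name, the (subject, relation, object, timestamp) tuple layout, and the toy facts are assumptions.

```python
import random

def corrupt_quadruple(quad, entities, known_facts, rng, max_tries=100):
    """Build a negative quadruple by replacing the subject or object at random.

    Candidates that coincide with a known fact are rejected, which is a
    common filtering step; relation and timestamp are left unchanged.
    """
    s, r, o, t = quad
    for _ in range(max_tries):
        e = rng.choice(entities)
        candidate = (e, r, o, t) if rng.random() < 0.5 else (s, r, e, t)
        if candidate not in known_facts:
            return candidate
    raise ValueError("could not sample a negative quadruple")

# Toy facts, hypothetical and for illustration only.
facts = {("A", "visits", "B", 1), ("B", "visits", "C", 2)}
negative = corrupt_quadruple(("A", "visits", "B", 1), ["A", "B", "C", "D"],
                             facts, random.Random(0))
```

Because the replacement entity is drawn uniformly, many negatives produced this way are trivially implausible, which is the low-quality sampling the abstract refers to.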

A Comprehensive Survey on Underwater Acoustic Target Positioning and Tracking: Progress, Challenges, and Perspectives

Jun 17, 2025

Abstract:Underwater target tracking technology plays a pivotal role in marine resource exploration, environmental monitoring, and national defense security. Given that acoustic waves represent an effective medium for long-distance transmission in aquatic environments, underwater acoustic target tracking has become a prominent research area of underwater communications and networking. Existing literature reviews often offer a narrow perspective or inadequately address the paradigm shifts driven by emerging technologies like deep learning and reinforcement learning. To address these gaps, this work presents a systematic survey of this field and introduces an innovative multidimensional taxonomy framework based on target scale, sensor perception modes, and sensor collaboration patterns. Within this framework, we comprehensively survey the literature (more than 180 publications) over the period 2016-2025, spanning from the theoretical foundations to diverse algorithmic approaches in underwater acoustic target tracking. Particularly, we emphasize the transformative potential and recent advancements of machine learning techniques, including deep learning and reinforcement learning, in enhancing the performance and adaptability of underwater tracking systems. Finally, this survey concludes by identifying key challenges in the field and proposing future avenues based on emerging technologies such as federated learning, blockchain, embodied intelligence, and large models.

TrojanTO: Action-Level Backdoor Attacks against Trajectory Optimization Models

Jun 15, 2025

Abstract:Recent advances in Trajectory Optimization (TO) models have achieved remarkable success in offline reinforcement learning. However, their vulnerabilities against backdoor attacks are poorly understood. We find that existing backdoor attacks in reinforcement learning are based on reward manipulation, which is largely ineffective against TO models due to their inherent sequence modeling nature. Moreover, the complexities introduced by high-dimensional action spaces further compound the challenge of action manipulation. To address these gaps, we propose TrojanTO, the first action-level backdoor attack against TO models. TrojanTO employs alternating training to enhance the connection between triggers and target actions for attack effectiveness. To improve attack stealth, it utilizes precise poisoning via trajectory filtering for normal performance and batch poisoning for trigger consistency. Extensive evaluations demonstrate that TrojanTO effectively implants backdoor attacks across diverse tasks and attack objectives with a low attack budget (0.3% of trajectories). Furthermore, TrojanTO exhibits broad applicability to DT, GDT, and DC, underscoring its scalability across diverse TO model architectures.

CityEQA: A Hierarchical LLM Agent on Embodied Question Answering Benchmark in City Space

Feb 20, 2025

Abstract:Embodied Question Answering (EQA) has primarily focused on indoor environments, leaving the complexities of urban settings - spanning environment, action, and perception - largely unexplored. To bridge this gap, we introduce CityEQA, a new task where an embodied agent answers open-vocabulary questions through active exploration in dynamic city spaces. To support this task, we present CityEQA-EC, the first benchmark dataset featuring 1,412 human-annotated tasks across six categories, grounded in a realistic 3D urban simulator. Moreover, we propose Planner-Manager-Actor (PMA), a novel agent tailored for CityEQA. PMA enables long-horizon planning and hierarchical task execution: the Planner breaks down the question answering into sub-tasks, the Manager maintains an object-centric cognitive map for spatial reasoning during the process control, and the specialized Actors handle navigation, exploration, and collection sub-tasks. Experiments demonstrate that PMA achieves 60.7% of human-level answering accuracy, significantly outperforming frontier-based baselines. While promising, the performance gap compared to humans highlights the need for enhanced visual reasoning in CityEQA. This work paves the way for future advancements in urban spatial intelligence. Dataset and code are available at https://github.com/BiluYong/CityEQA.git.

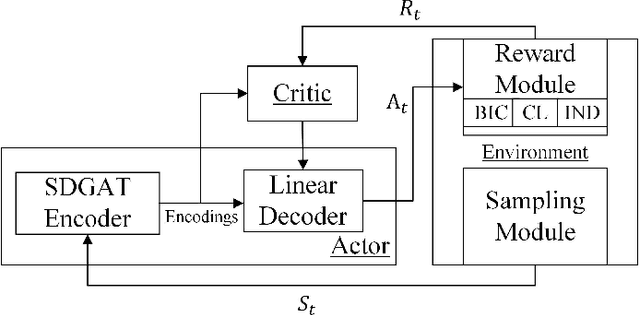

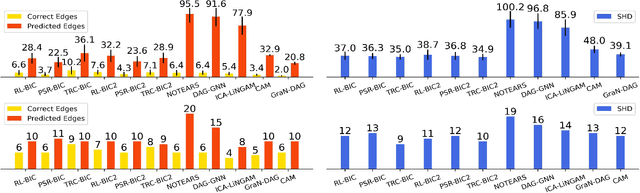

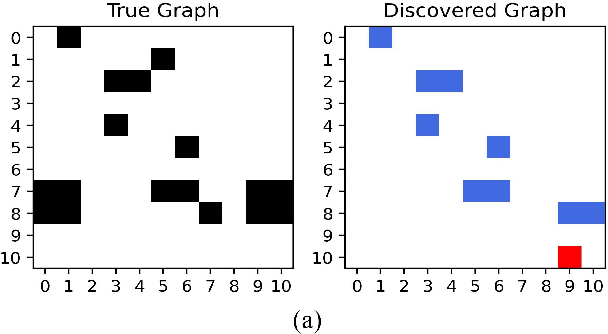

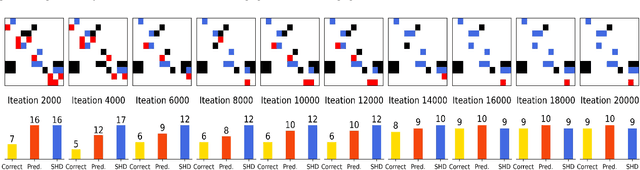

Graph-attention-based Casual Discovery with Trust Region-navigated Clipping Policy Optimization

Dec 27, 2024

Abstract:In many domains of empirical sciences, discovering the causal structure within variables remains an indispensable task. Recently, to tackle the unoriented edges or latent assumption violations suffered by conventional methods, researchers formulated a reinforcement learning (RL) procedure for causal discovery and used the REINFORCE algorithm to search for the best-rewarded directed acyclic graph. The two keys to the overall performance of the procedure are the robustness of RL methods and the efficient encoding of variables. However, on the one hand, REINFORCE is prone to local convergence and unstable performance during training. Neither trust region policy optimization, being computationally expensive, nor proximal policy optimization (PPO), suffering from aggregate constraint deviation, is a decent alternative for combinatorial optimization problems with considerable individual subactions. We propose a trust region-navigated clipping policy optimization method for causal discovery that guarantees both better search efficiency and steadiness in policy optimization, in comparison with REINFORCE, PPO and our prioritized sampling-guided REINFORCE implementation. On the other hand, to boost the efficient encoding of variables, we propose a refined graph attention encoder called SDGAT that can grasp more feature information without prior neighbourhood information. With these improvements, the proposed method outperforms the former RL method on both synthetic and benchmark datasets in terms of output results and optimization robustness.
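For context on the PPO baseline named in the abstract, the following is a minimal sketch of the standard PPO clipped surrogate objective. It shows the baseline only, not the trust region-navigated clipping the authors propose; the function name and the default `eps=0.2` are illustrative assumptions.

```python
def ppo_clip_objective(ratios, advantages, eps=0.2):
    """Average clipped surrogate objective used by standard PPO.

    `ratios` are probability ratios pi_new(a|s) / pi_old(a|s) and
    `advantages` are the matching advantage estimates.
    """
    if len(ratios) != len(advantages) or not ratios:
        raise ValueError("inputs must be non-empty and of equal length")
    terms = []
    for ratio, adv in zip(ratios, advantages):
        clipped = max(1.0 - eps, min(ratio, 1.0 + eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)

# A ratio above 1 + eps with positive advantage is capped at (1 + eps) * adv.
capped = ppo_clip_objective([1.5], [1.0])
# A ratio below 1 - eps with negative advantage is bounded at (1 - eps) * adv.
bounded = ppo_clip_objective([0.5], [-1.0])
```

When an action is composed of many subactions, the joint ratio is a product of per-subaction ratios, so a clip applied to the joint ratio does not bound each factor individually; this is one reading of the "aggregate constraint deviation" the abstract mentions.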

Heterogeneous Graph Reinforcement Learning for Dependency-aware Multi-task Allocation in Spatial Crowdsourcing

Oct 20, 2024

Abstract:Spatial Crowdsourcing (SC) is gaining traction in both academia and industry, with tasks on SC platforms becoming increasingly complex and requiring collaboration among workers with diverse skills. Recent research works address complex tasks by dividing them into subtasks with dependencies and assigning them to suitable workers. However, the dependencies among subtasks and their heterogeneous skill requirements, as well as the need for efficient utilization of workers' limited work time in the multi-task allocation mode, pose challenges in achieving an optimal task allocation scheme. Therefore, this paper formally investigates the problem of Dependency-aware Multi-task Allocation (DMA) and presents a well-designed framework to solve it, known as Heterogeneous Graph Reinforcement Learning-based Task Allocation (HGRL-TA). To address the challenges associated with representing and embedding diverse problem instances to ensure robust generalization, we propose a multi-relation graph model and a Compound-path-based Heterogeneous Graph Attention Network (CHANet) for effectively representing and capturing intricate relations among tasks and workers, as well as providing an embedding of the problem state. The task allocation decision is determined sequentially by a policy network, which is trained jointly with CHANet using the proximal policy optimization algorithm. Extensive experimental results demonstrate the effectiveness and generality of the proposed HGRL-TA in solving the DMA problem, leading to average profits that are 21.78% higher than those achieved using the metaheuristic methods.

Is Mamba Compatible with Trajectory Optimization in Offline Reinforcement Learning?

May 20, 2024

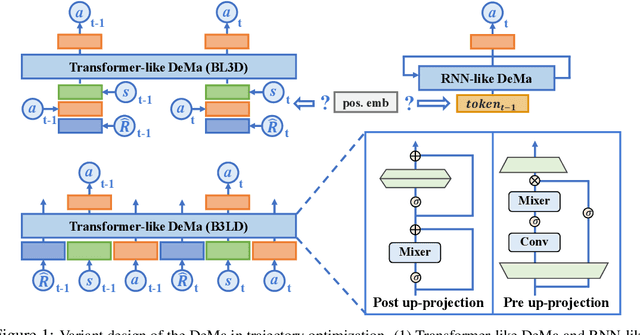

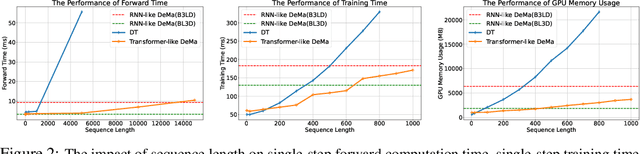

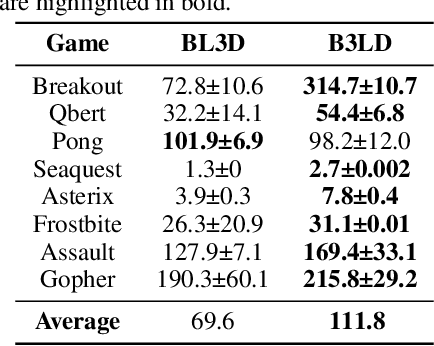

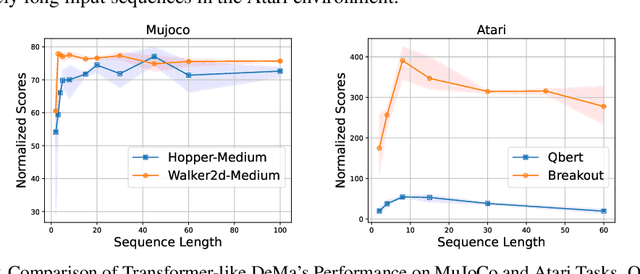

Abstract:Transformer-based trajectory optimization methods have demonstrated exceptional performance in offline Reinforcement Learning (offline RL), yet they pose challenges due to substantial parameter size and limited scalability, which is particularly critical in sequential decision-making scenarios where resources are constrained, such as in robots and drones with limited computational power. Mamba, a promising new linear-time sequence model, offers performance on par with transformers while delivering substantially fewer parameters on long sequences. As it remains unclear whether Mamba is compatible with trajectory optimization, this work aims to conduct comprehensive experiments to explore the potential of Decision Mamba in offline RL (dubbed DeMa) from the aspect of data structures and network architectures with the following insights: (1) Long sequences impose a significant computational burden without contributing to performance improvements because DeMa's focus on sequences diminishes approximately exponentially. Consequently, we introduce a Transformer-like DeMa as opposed to an RNN-like DeMa. (2) For the components of DeMa, we identify that the hidden attention mechanism is key to its success, which can also work well with other residual structures and does not require position embedding. Extensive evaluations on eight Atari games demonstrate that our specially designed DeMa is compatible with trajectory optimization and surpasses previous state-of-the-art methods, outdoing Decision Transformer (DT) by 80% with 30% fewer parameters, and exceeds DT in MuJoCo with only a quarter of the parameters.

Conversational Crowdsensing: A Parallel Intelligence Powered Novel Sensing Approach

Feb 04, 2024

Abstract:The transition from CPS-based Industry 4.0 to CPSS-based Industry 5.0 brings new requirements and opportunities to current sensing approaches, especially in light of recent progress in Chatbots and Large Language Models (LLMs). Therefore, the advancement of parallel intelligence-powered Crowdsensing Intelligence (CSI) is witnessed, which is currently advancing towards linguistic intelligence. In this paper, we propose a novel sensing paradigm, namely conversational crowdsensing, for Industry 5.0. It can alleviate the workload and professional requirements of individuals and promote the organization and operation of a diverse workforce, thereby facilitating faster response and wider popularization of crowdsensing systems. Specifically, we design the architecture of conversational crowdsensing to effectively organize three types of participants (biological, robotic, and digital) from diverse communities. Through three levels of effective conversation (i.e., inter-human, human-AI, and inter-AI), complex interactions and service functionalities of different workers can be achieved to accomplish various tasks across three sensing phases (i.e., requesting, scheduling, and executing). Moreover, we explore the foundational technologies for realizing conversational crowdsensing, encompassing LLM-based multi-agent systems, scenarios engineering and conversational human-AI cooperation. Finally, we present potential industrial applications of conversational crowdsensing and discuss its implications. We envision that conversations in natural language will become the primary communication channel during the crowdsensing process, enabling richer information exchange and cooperative problem-solving among humans, robots, and AI.
