Xiang Zhao

Panning for Gold: Expanding Domain-Specific Knowledge Graphs with General Knowledge

Jan 15, 2026

Abstract: Domain-specific knowledge graphs (DKGs) often lack coverage compared to general knowledge graphs (GKGs). To address this, we introduce Domain-specific Knowledge Graph Fusion (DKGF), a novel task that enriches DKGs by integrating relevant facts from GKGs. DKGF faces two key challenges: high ambiguity in domain relevance and misalignment in knowledge granularity across graphs. We propose ExeFuse, a simple yet effective Fact-as-Program paradigm. It treats each GKG fact as a latent semantic program, maps abstract relations to granularity-aware operators, and verifies domain relevance via program executability on the target DKG. This unified probabilistic framework jointly resolves relevance and granularity issues. We construct two benchmarks, DKGF(W-I) and DKGF(Y-I), with 21 evaluation configurations. Extensive experiments validate the task's importance and our model's effectiveness, providing the first standardized testbed for DKGF.

Prism: Towards Lowering User Cognitive Load in LLMs via Complex Intent Understanding

Jan 13, 2026

Abstract: Large Language Models are rapidly emerging as web-native interfaces to social platforms. On the social web, users frequently have ambiguous and dynamic goals, making complex intent understanding, rather than single-turn execution, the cornerstone of effective human-LLM collaboration. Existing approaches attempt to clarify user intents through sequential or parallel questioning, yet they fall short of addressing the core challenge: modeling the logical dependencies among clarification questions. Inspired by Cognitive Load Theory, we propose Prism, a novel framework for complex intent understanding that enables logically coherent and efficient intent clarification. Prism comprises four tailored modules: a complex intent decomposition module, which decomposes user intents into smaller, well-structured elements and identifies logical dependencies among them; a logical clarification generation module, which organizes clarification questions based on these dependencies to ensure coherent, low-friction interactions; an intent-aware reward module, which evaluates the quality of clarification trajectories via an intent-aware reward function and leverages Monte Carlo sampling to simulate user-LLM interactions for large-scale, high-quality training data generation; and a self-evolved intent tuning module, which iteratively refines the LLM's logical clarification capability through data-driven feedback and optimization. Prism consistently outperforms existing approaches across clarification interactions, intent execution, and cognitive load benchmarks. It achieves state-of-the-art logical consistency, reduces logical conflicts to 11.5%, increases user satisfaction by 14.4%, and decreases task completion time by 34.8%. All data and code are released.
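The idea of ordering clarification questions by their logical dependencies can be illustrated with a topological sort. This is only a minimal sketch, not Prism's actual module: the sub-intent names and the `clarification_order` helper are hypothetical, and the dependency map is hand-written rather than produced by an LLM.

```python
from graphlib import TopologicalSorter

# Hypothetical sub-intents of a trip-planning request. Each key maps to the
# set of sub-intents that should be clarified before it (illustrative only).
dependencies = {
    "destination": set(),
    "travel_dates": {"destination"},
    "budget": set(),
    "hotel": {"destination", "travel_dates", "budget"},
}


def clarification_order(deps):
    """Return an order in which every question follows its prerequisites."""
    return list(TopologicalSorter(deps).static_order())


order = clarification_order(dependencies)
print(order)
```

Any order returned this way asks about the destination before the travel dates, and about both before the hotel, which is the kind of coherence the abstract attributes to dependency-aware questioning.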

NeSTR: A Neuro-Symbolic Abductive Framework for Temporal Reasoning in Large Language Models

Dec 08, 2025

Abstract: Large Language Models (LLMs) have demonstrated remarkable performance across a wide range of natural language processing tasks. However, temporal reasoning, particularly under complex temporal constraints, remains a major challenge. To this end, existing approaches have explored symbolic methods, which encode temporal structure explicitly, and reflective mechanisms, which revise reasoning errors through multi-step inference. Nonetheless, symbolic approaches often underutilize the reasoning capabilities of LLMs, while reflective methods typically lack structured temporal representations, which can result in inconsistent or hallucinated reasoning. As a result, even when the correct temporal context is available, LLMs may still misinterpret or misapply time-related information, leading to incomplete or inaccurate answers. To address these limitations, in this work, we propose Neuro-Symbolic Temporal Reasoning (NeSTR), a novel framework that integrates structured symbolic representations with hybrid reflective reasoning to enhance the temporal sensitivity of LLM inference. NeSTR preserves explicit temporal relations through symbolic encoding, enforces logical consistency via verification, and corrects flawed inferences using abductive reflection. Extensive experiments on diverse temporal question answering benchmarks demonstrate that NeSTR achieves superior zero-shot performance and consistently improves temporal reasoning without any fine-tuning, showcasing the advantage of neuro-symbolic integration in enhancing temporal understanding in large language models.

A Question Answering Dataset for Temporal-Sensitive Retrieval-Augmented Generation

Aug 17, 2025

Abstract: We introduce ChronoQA, a large-scale benchmark dataset for Chinese question answering, specifically designed to evaluate temporal reasoning in Retrieval-Augmented Generation (RAG) systems. ChronoQA is constructed from over 300,000 news articles published between 2019 and 2024, and contains 5,176 high-quality questions covering absolute, aggregate, and relative temporal types with both explicit and implicit time expressions. The dataset supports both single- and multi-document scenarios, reflecting the real-world requirements for temporal alignment and logical consistency. ChronoQA features comprehensive structural annotations and has undergone multi-stage validation, including rule-based, LLM-based, and human evaluation, to ensure data quality. By providing a dynamic, reliable, and scalable resource, ChronoQA enables structured evaluation across a wide range of temporal tasks, and serves as a robust benchmark for advancing time-sensitive retrieval-augmented question answering systems.

HydraNet: Momentum-Driven State Space Duality for Multi-Granularity Tennis Tournaments Analysis

May 29, 2025

Abstract: In tennis tournaments, momentum, a critical yet elusive phenomenon, reflects the dynamic shifts in athletes' performance that can decisively influence match outcomes. Despite its significance, the effective modeling and multi-granularity analysis of momentum across points, games, sets, and matches in tennis tournaments remains underexplored. In this study, we define a novel Momentum Score (MS) metric to quantify a player's momentum level in multi-granularity tennis tournaments, and design HydraNet, a momentum-driven state-space duality-based framework, to model MS by integrating thirty-two heterogeneous dimensions of athletes' performance in serve, return, psychology and fatigue. HydraNet integrates a Hydra module, which builds upon a state-space duality (SSD) framework, capturing explicit momentum with a sliding-window mechanism and implicit momentum through cross-game state propagation. It also introduces a novel Versus Learning method to enhance the adversarial nature of momentum between the two athletes at a macro level, along with a Collaborative-Adversarial Attention Mechanism (CAAM) for capturing and integrating intra-player and inter-player dynamic momentum at a micro level. Additionally, we construct a million-level tennis cross-tournament dataset spanning Wimbledon 2012-2023 and the US Open 2013-2023, and validate the multi-granularity modeling capability of HydraNet for the MS metric on this dataset. Extensive experimental evaluations demonstrate that the MS metric constructed by the HydraNet framework provides actionable insights into how momentum impacts outcomes at different granularities, establishing a new foundation for momentum modeling and sports analysis. To the best of our knowledge, this is the first work to explore and effectively model momentum across multiple granularities in professional tennis tournaments.

Enhanced Multi-Tuple Extraction for Alloys: Integrating Pointer Networks and Augmented Attention

Mar 10, 2025

Abstract: Extracting high-quality structured information from scientific literature is crucial for advancing material design through data-driven methods. Despite considerable research in natural language processing for dataset extraction, effective approaches for multi-tuple extraction in scientific literature remain scarce due to the complex interrelations of tuples and contextual ambiguities. In this study, we illustrate the multi-tuple extraction of mechanical properties from multi-principal-element alloys and present a novel framework that combines an entity extraction model based on MatSciBERT with pointer networks and an allocation model utilizing inter- and intra-entity attention. Our rigorous experiments on tuple extraction demonstrate impressive F1 scores of 0.963, 0.947, 0.848, and 0.753 across datasets with 1, 2, 3, and 4 tuples, confirming the effectiveness of the model. Furthermore, an F1 score of 0.854 was achieved on a randomly curated dataset. These results highlight the model's capacity to deliver precise and structured information, offering a robust alternative to large language models and equipping researchers with essential data for fostering data-driven innovations.
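For readers unfamiliar with how an F1 score over extracted tuples is typically computed, here is a minimal sketch. It assumes exact-match scoring over sets of tuples, which the abstract does not confirm; the `tuple_f1` helper and the placeholder tuples are hypothetical and are not taken from the paper's data.

```python
def tuple_f1(predicted, gold):
    """Exact-match precision, recall, and F1 over two collections of tuples."""
    predicted, gold = set(predicted), set(gold)
    if not predicted or not gold:
        return 0.0, 0.0, 0.0
    correct = len(predicted & gold)
    precision = correct / len(predicted)
    recall = correct / len(gold)
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Placeholder (alloy, property, value) tuples, purely illustrative.
gold = [
    ("alloy_A", "yield_strength", "1200 MPa"),
    ("alloy_A", "elongation", "12 %"),
    ("alloy_B", "yield_strength", "950 MPa"),
]
predicted = [
    ("alloy_A", "yield_strength", "1200 MPa"),
    ("alloy_A", "elongation", "12 %"),
    ("alloy_B", "yield_strength", "1200 MPa"),  # value assigned to the wrong alloy
]

precision, recall, f1 = tuple_f1(predicted, gold)
print(precision, recall, f1)
```

Under exact matching, a single misallocated value makes the whole tuple count as wrong, which is one reason scores tend to fall as the number of tuples per passage grows.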

Learning Accurate, Efficient, and Interpretable MLPs on Multiplex Graphs via Node-wise Multi-View Ensemble Distillation

Feb 09, 2025

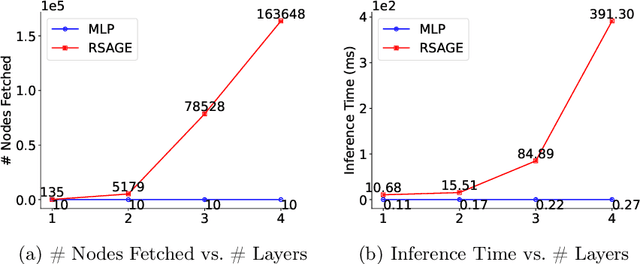

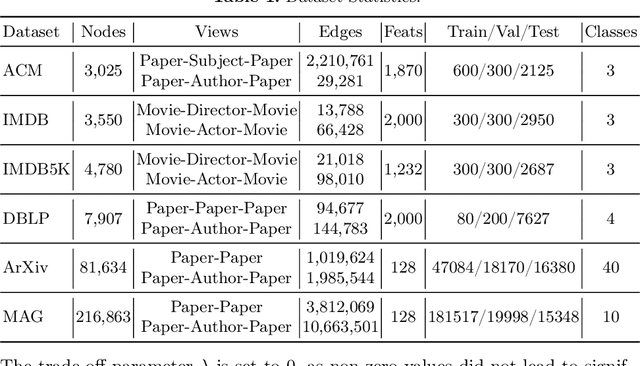

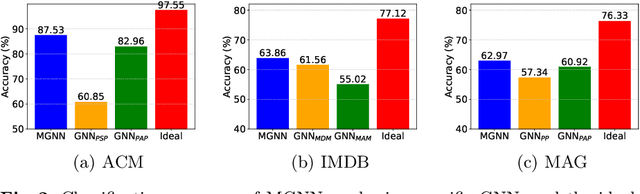

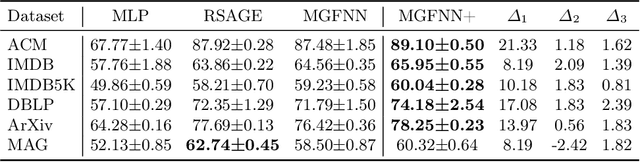

Abstract: Multiplex graphs, with multiple edge types (graph views) among common nodes, provide richer structural semantics and better modeling capabilities. Multiplex Graph Neural Networks (MGNNs), typically comprising view-specific GNNs and a multi-view integration layer, have achieved advanced performance in various downstream tasks. However, their reliance on neighborhood aggregation poses challenges for deployment in latency-sensitive applications. Motivated by recent GNN-to-MLP knowledge distillation frameworks, we propose Multiplex Graph-Free Neural Networks (MGFNN and MGFNN+) to combine MGNNs' superior performance and MLPs' efficient inference via knowledge distillation. MGFNN directly trains student MLPs with node features as input and soft labels from teacher MGNNs as targets. MGFNN+ further employs a low-rank approximation-based reparameterization to learn node-wise coefficients, enabling adaptive knowledge ensemble from each view-specific GNN. This node-wise multi-view ensemble distillation strategy allows student MLPs to learn more informative multiplex semantic knowledge for different nodes. Experiments show that MGFNNs achieve average accuracy improvements of about 10% over vanilla MLPs and perform comparably to or even better than teacher MGNNs (accurate); MGFNNs achieve a 35.40$\times$-89.14$\times$ speedup in inference over MGNNs (efficient); MGFNN+ adaptively assigns different coefficients for multi-view ensemble distillation regarding different nodes (interpretable).
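The node-wise ensemble distillation described above can be sketched for a single node as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the coefficients are given directly and normalized with a softmax (the paper's low-rank reparameterization is not reproduced), and the helper names and numbers are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_soft_labels(view_probs, node_coeff_logits):
    """Mix per-view teacher distributions for one node.

    The coefficients are softmax-normalized so the mixture stays a valid
    distribution (an assumption made for this sketch).
    """
    weights = softmax(node_coeff_logits)
    n_classes = len(view_probs[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, view_probs))
        for c in range(n_classes)
    ]


def kl_divergence(target, predicted, eps=1e-12):
    """KL(target || predicted), a common distillation loss."""
    return sum(
        t * math.log((t + eps) / (p + eps))
        for t, p in zip(target, predicted)
        if t > 0
    )


# Two view-specific teachers disagree about one node (illustrative numbers).
view_probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
target = ensemble_soft_labels(view_probs, [0.0, 0.0])  # equal weights
student = softmax([0.0, 0.0, 0.0])  # untrained student MLP output
loss = kl_divergence(target, student)
print(target, loss)
```

In training, the student MLP would see only node features and be optimized to reduce this loss, so no neighborhood aggregation is needed at inference time.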

PSSD: Making Large Language Models Self-denial via Human Psyche Structure

Feb 03, 2025

Abstract: Enhancing the accuracy of LLM reasoning results has aroused the community's interest, and pioneering studies investigate post-hoc strategies to rectify potential mistakes. Despite extensive efforts, these approaches are all stuck in a state of resource competition, demanding significant time and computing expense. The cause lies in a failure to identify the fundamental feature of solutions in this line of work, coined as the self-denial of LLMs. In other words, LLMs should confidently determine the potential existence of mistakes and carefully execute the targeted correction. Because the whole procedure is conducted within LLMs, supporting and persuasive references are hard to acquire, and specific steps towards refining hidden mistakes remain absent even when errors are acknowledged. In response to these challenges, we present PSSD, which refers to and implements the human psyche structure, in which three distinct and interconnected roles contribute to human reasoning. Specifically, PSSD leverages the recent multi-agent paradigm and is further enhanced with three innovatively conceived roles: (1) the intuition-based id role that provides initial attempts based on benign LLMs; (2) the rule-driven superego role that summarizes rules to regulate the above attempts and returns specific key points as guidance; and (3) the script-centric ego role that absorbs all procedural information to generate an executable script for the final answer prediction. Extensive experiments demonstrate that the proposed design not only enhances reasoning capabilities but also integrates seamlessly with current models, leading to superior performance.

Fusion of Millimeter-wave Radar and Pulse Oximeter Data for Low-burden Diagnosis of Obstructive Sleep Apnea-Hypopnea Syndrome

Jan 25, 2025

Abstract: Objective: The aim of the study is to develop a novel method for improved diagnosis of obstructive sleep apnea-hypopnea syndrome (OSAHS) in clinical or home settings, with a focus on achieving diagnostic performance comparable to the gold-standard polysomnography (PSG) with significantly reduced monitoring burden. Methods: We propose a method using millimeter-wave radar and a pulse oximeter for OSAHS diagnosis (ROSA). It contains a sleep apnea-hypopnea events (SAE) detection network, which directly predicts the temporal localization of SAE, and a sleep staging network, which predicts the sleep stages throughout the night, based on radar signals. It also fuses oxygen saturation (SpO2) information from the pulse oximeter to adjust the score of SAE detected by radar. Results: Experimental results on a real-world dataset (>800 hours of overnight recordings, 100 subjects) demonstrated high agreement (ICC=0.9870) on apnea-hypopnea index (AHI) between ROSA and PSG. ROSA also exhibited excellent diagnostic performance, exceeding 90% in accuracy across AHI diagnostic thresholds of 5, 15 and 30 events/h. Conclusion: ROSA improves diagnostic accuracy by fusing millimeter-wave radar and pulse oximeter data. It provides a reliable and low-burden solution for OSAHS diagnosis. Significance: ROSA addresses the limitations of high complexity and monitoring burden associated with traditional PSG. The high accuracy and low burden of ROSA show its potential to improve the accessibility of OSAHS diagnosis among the population.
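As background for the thresholds quoted above, the AHI is the number of apnea and hypopnea events per hour of sleep. The sketch below shows that arithmetic and a threshold-based grading. The severity labels and the choice to treat each threshold as an inclusive lower bound are assumptions following common clinical convention, not details stated in the abstract, and the function names are hypothetical.

```python
def apnea_hypopnea_index(event_count, sleep_hours):
    """AHI: apnea plus hypopnea events per hour of sleep."""
    if sleep_hours <= 0:
        raise ValueError("sleep_hours must be positive")
    return event_count / sleep_hours


def severity(ahi):
    """Grade an AHI value using the 5, 15, and 30 events/h thresholds.

    Labels and inclusive lower bounds are assumed for this sketch.
    """
    if ahi < 5:
        return "normal"
    if ahi < 15:
        return "mild"
    if ahi < 30:
        return "moderate"
    return "severe"


# Illustrative night: 84 detected events over 7 hours of sleep.
ahi = apnea_hypopnea_index(84, 7.0)
print(ahi, severity(ahi))
```

Because the denominator is hours of sleep rather than hours of recording, an estimate of this kind depends on sleep staging as well as event detection, which is consistent with the abstract describing both networks.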

Each Fake News is Fake in its Own Way: An Attribution Multi-Granularity Benchmark for Multimodal Fake News Detection

Dec 19, 2024

Abstract: Social platforms, while facilitating access to information, have also become saturated with a plethora of fake news, resulting in negative consequences. Automatic multimodal fake news detection is a worthwhile pursuit. Existing multimodal fake news datasets only provide binary labels of real or fake. However, real news is alike, while each fake news is fake in its own way. These datasets fail to reflect the mixed nature of various types of multimodal fake news. To bridge the gap, we construct an attribution multi-granularity multimodal fake news detection dataset \amg, revealing the inherent fake patterns. Furthermore, we propose a multi-granularity clue alignment model \our to achieve multimodal fake news detection and attribution. Experimental results demonstrate that \amg is a challenging dataset, and its attribution setting opens up new avenues for future research.
