Fuxian Li

Spatio-Temporal Graph Neural Point Process for Traffic Congestion Event Prediction

Nov 15, 2023

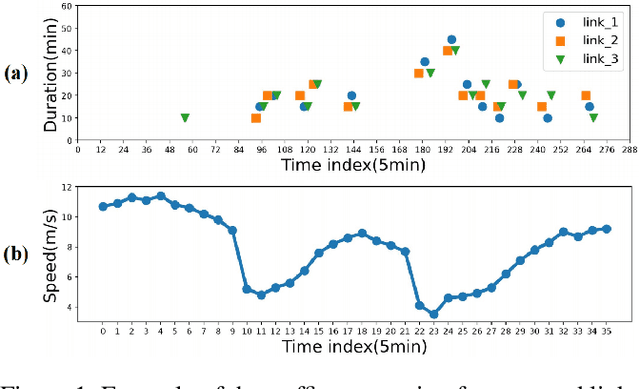

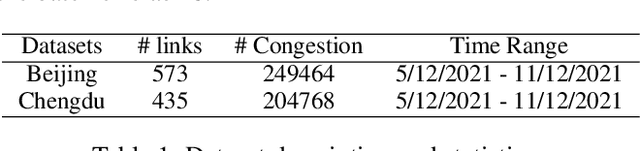

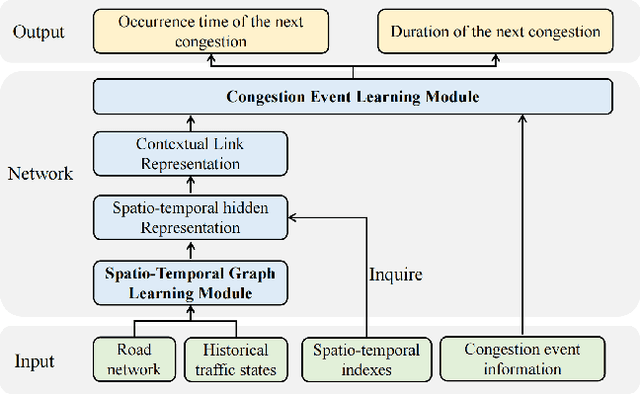

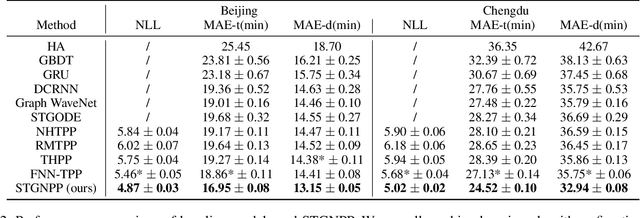

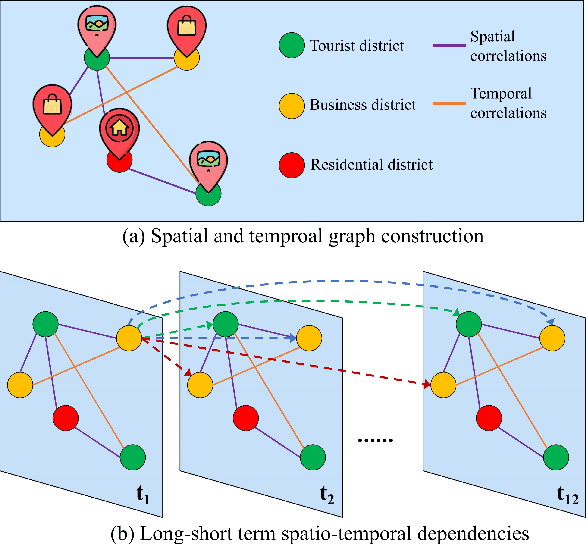

Abstract: Traffic congestion event prediction is an important yet challenging task in intelligent transportation systems. Many existing works on traffic prediction combine various temporal encoders with graph convolution networks (GCNs) into so-called spatio-temporal graph-based neural networks. These models focus on predicting dense variables such as flow, speed and demand in time snapshots, but they can hardly forecast traffic congestion events, which are sparsely distributed on the continuous time axis. In recent years, the neural point process (NPP) has emerged as an appropriate framework for event prediction in continuous-time scenarios. However, most conventional works on NPP cannot model the complex spatio-temporal dependencies and congestion evolution patterns. To address these limitations, we propose a spatio-temporal graph neural point process framework, named STGNPP, for traffic congestion event prediction. Specifically, we first design a spatio-temporal graph learning module to fully capture the long-range spatio-temporal dependencies from the historical traffic state data along with the road network. The extracted spatio-temporal hidden representation and congestion event information are then fed into a continuous gated recurrent unit to model the congestion evolution patterns. In particular, to fully exploit the periodic information, we also improve the intensity function calculation of the point process with a periodic gated mechanism. Finally, our model simultaneously predicts the occurrence time and duration of the next congestion event. Extensive experiments on two real-world datasets demonstrate that our method achieves superior performance in comparison to existing state-of-the-art approaches.

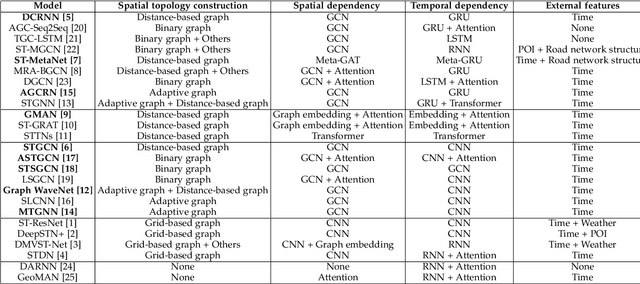

Automated Dilated Spatio-Temporal Synchronous Graph Modeling for Traffic Prediction

Jul 22, 2022

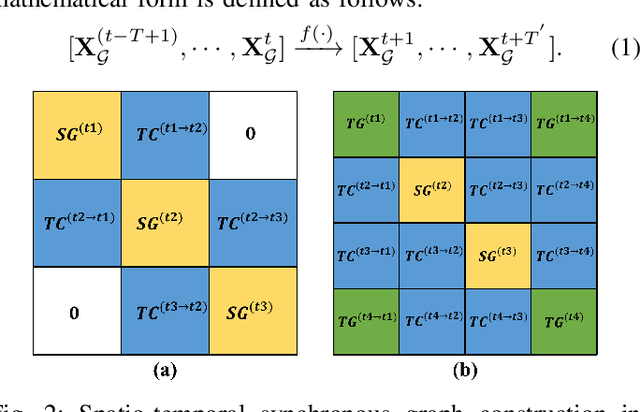

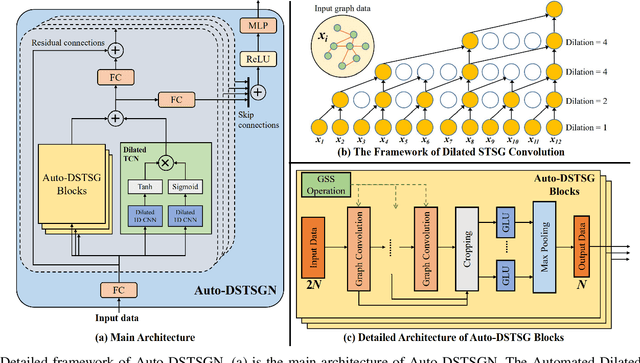

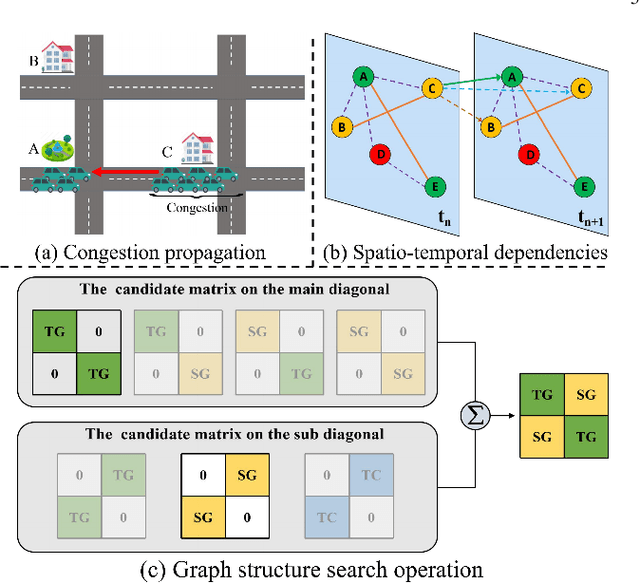

Abstract: Accurate traffic prediction is a challenging task in intelligent transportation systems because of the complex spatio-temporal dependencies in transportation networks. Many existing works combine sophisticated temporal modeling approaches with graph convolution networks (GCNs) to capture short-term and long-term spatio-temporal dependencies. However, these separate modules with complicated designs can restrict the effectiveness and efficiency of spatio-temporal representation learning. Furthermore, most previous works adopt fixed graph construction methods to characterize the global spatio-temporal relations, which limits the learning capability of the model for different time periods and even different data scenarios. To overcome these limitations, we propose an automated dilated spatio-temporal synchronous graph network, named Auto-DSTSGN, for traffic prediction. Specifically, we design an automated dilated spatio-temporal synchronous graph (Auto-DSTSG) module to capture the short-term and long-term spatio-temporal correlations by stacking deeper layers with dilation factors in increasing order. Further, we propose a graph structure search approach to automatically construct the spatio-temporal synchronous graph that can adapt to different data scenarios. Extensive experiments on four real-world datasets demonstrate that our model can achieve an improvement of about 10% compared with the state-of-the-art methods. Source code is available at https://github.com/jinguangyin/Auto-DSTSGN.
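
The idea of stacking layers with increasing dilation factors can be illustrated with a small receptive-field calculation. This is only a sketch of the general principle for dilated temporal layers, not code from the Auto-DSTSGN repository; the function name `receptive_field`, the kernel size of 2, and the dilation schedules are assumptions chosen for illustration.

```python
def receptive_field(kernel_size, dilations):
    """Number of input time steps seen by one output of a stack of dilated layers.

    Each layer with dilation d and kernel size k widens the view by (k - 1) * d steps.
    kernel_size and dilations are illustrative values, not taken from the paper.
    """
    field = 1  # a single output starts by seeing one time step
    for dilation in dilations:
        field += (kernel_size - 1) * dilation
    return field


# Three layers without dilation versus three layers with increasing dilation.
plain = receptive_field(kernel_size=2, dilations=[1, 1, 1])
dilated = receptive_field(kernel_size=2, dilations=[1, 2, 4])
print(plain, dilated)  # 4 8
```

With the same depth, the increasing schedule covers twice as many time steps in this toy setting: the lower layers handle short-term patterns while the upper layers reach long-term ones.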

Spatial-Temporal Dual Graph Neural Networks for Travel Time Estimation

May 28, 2021

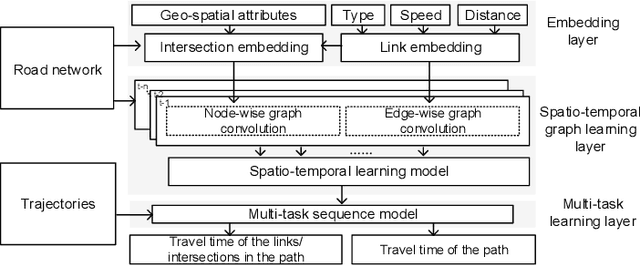

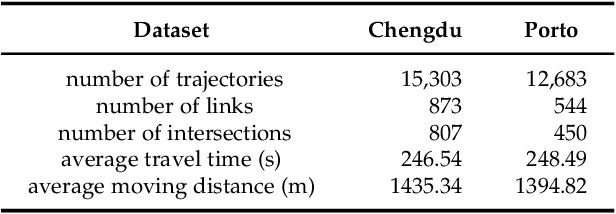

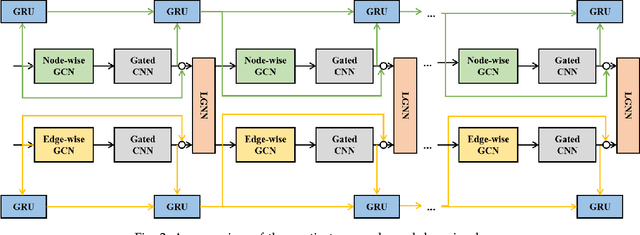

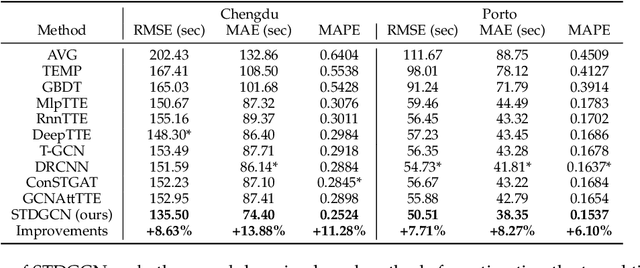

Abstract: Travel time estimation is a basic but important part of intelligent transportation systems, and it is widely applied in online map services to support navigation and route planning. Most previous works model the road segments or the intersections separately and obtain their spatial-temporal characteristics for travel time estimation. However, because road segments and intersections alternate continuously along a route, their dynamic features are expected to be coupled and interactive. Therefore, modeling only one of them limits further improvement in the accuracy of travel time estimation. To address the above problems, we propose a novel graph-based deep learning framework for travel time estimation, namely Spatial-Temporal Dual Graph Neural Networks (STDGNN). Specifically, we first establish the spatial-temporal dual graph architecture to capture the complex correlations of both intersections and road segments. The adjacency relations of intersections and of road segments are characterized by a node-wise graph and an edge-wise graph, respectively. In order to capture the joint spatial-temporal dynamics of the intersections and road segments, we adopt a spatial-temporal learning layer that incorporates multi-scale spatial-temporal graph convolution networks and dual graph interaction networks. Following the spatial-temporal learning layer, we also employ a multi-task learning layer to estimate the travel time of a given whole route and of each road segment simultaneously. We conduct extensive experiments to evaluate our proposed model on two real-world trajectory datasets, and the experimental results show that STDGNN significantly outperforms several state-of-the-art baselines.

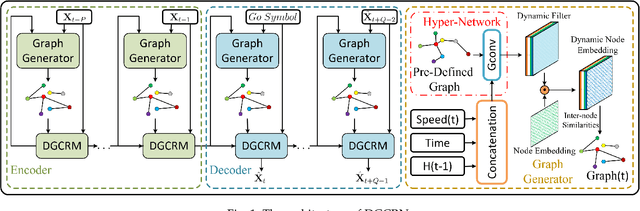

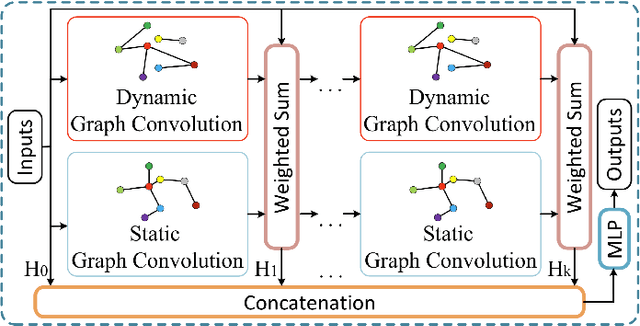

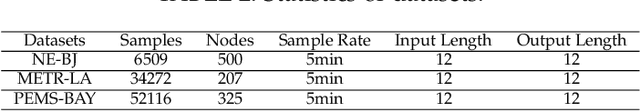

Dynamic Graph Convolutional Recurrent Network for Traffic Prediction: Benchmark and Solution

May 03, 2021

Abstract: Traffic prediction is the cornerstone of an intelligent transportation system. Accurate traffic forecasting is essential for smart city applications, e.g., intelligent traffic management and urban planning. Although various methods have been proposed for spatio-temporal modeling, they ignore the dynamic characteristics of correlations among locations on road networks. Meanwhile, most Recurrent Neural Network (RNN) based works are not efficient enough due to their recurrent operations. Additionally, there is a severe lack of fair comparison among different methods on the same datasets. To address the above challenges, in this paper, we propose a novel traffic prediction framework, named Dynamic Graph Convolutional Recurrent Network (DGCRN). In DGCRN, hyper-networks are designed to leverage and extract dynamic characteristics from node attributes, while the parameters of dynamic filters are generated at each time step. We filter the node embeddings and then use them to generate a dynamic graph, which is integrated with a pre-defined static graph. As far as we know, we are the first to employ a generation method to model the fine topology of the dynamic graph at each time step. Further, to enhance efficiency and performance, we employ a training strategy for DGCRN that restricts the number of decoder iterations during forward and backward propagation. Finally, a reproducible standardized benchmark and a brand new representative traffic dataset are released for fair comparison and further research. Extensive experiments on three datasets demonstrate that our model outperforms 15 baselines consistently.
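
The step of generating a dynamic graph from node embeddings and integrating it with a static graph can be sketched in a few lines. This is a simplified illustration under stated assumptions, not DGCRN's actual method: the hyper-network filtering is omitted, the dot-product scoring with row-wise softmax is a swapped-in, commonly used adaptive-adjacency construction, and the names `dynamic_adjacency`, `blend`, and the mixing weight `alpha` are hypothetical.

```python
import math


def softmax_rows(matrix):
    """Row-wise softmax, so each node's outgoing edge weights sum to 1."""
    result = []
    for row in matrix:
        peak = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(value - peak) for value in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def dynamic_adjacency(src_emb, dst_emb):
    """Score every node pair by embedding dot product, clip negatives, normalize rows.

    Assumption: this scoring rule stands in for the paper's generation method.
    """
    scores = [
        [max(0.0, sum(a * b for a, b in zip(src, dst))) for dst in dst_emb]
        for src in src_emb
    ]
    return softmax_rows(scores)


def blend(static_adj, dynamic_adj, alpha=0.5):
    """Convex combination of the two graphs; alpha is a hypothetical mixing weight."""
    return [
        [alpha * s + (1.0 - alpha) * d for s, d in zip(static_row, dynamic_row)]
        for static_row, dynamic_row in zip(static_adj, dynamic_adj)
    ]


# Toy example: 3 nodes with 2-dimensional embeddings at one time step.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
static_graph = [[0.0, 1.0, 0.0], [0.5, 0.0, 0.5], [0.0, 1.0, 0.0]]  # row-normalized
dynamic_graph = dynamic_adjacency(embeddings, embeddings)
combined = blend(static_graph, dynamic_graph, alpha=0.5)
```

Because both inputs are row-normalized and the blend is convex, each row of `combined` still sums to 1. Recomputing `dynamic_graph` from fresh embeddings at every time step is what makes the topology time-varying in this sketch.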

MV-C3D: A Spatial Correlated Multi-View 3D Convolutional Neural Networks

Jun 15, 2019

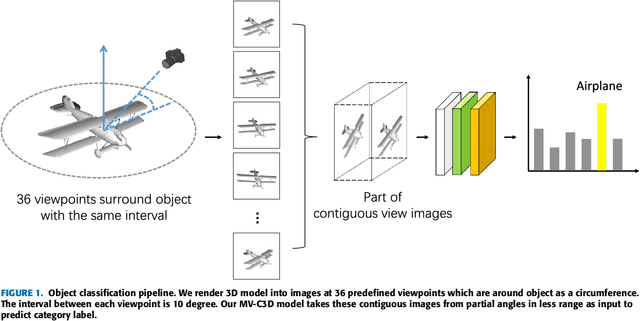

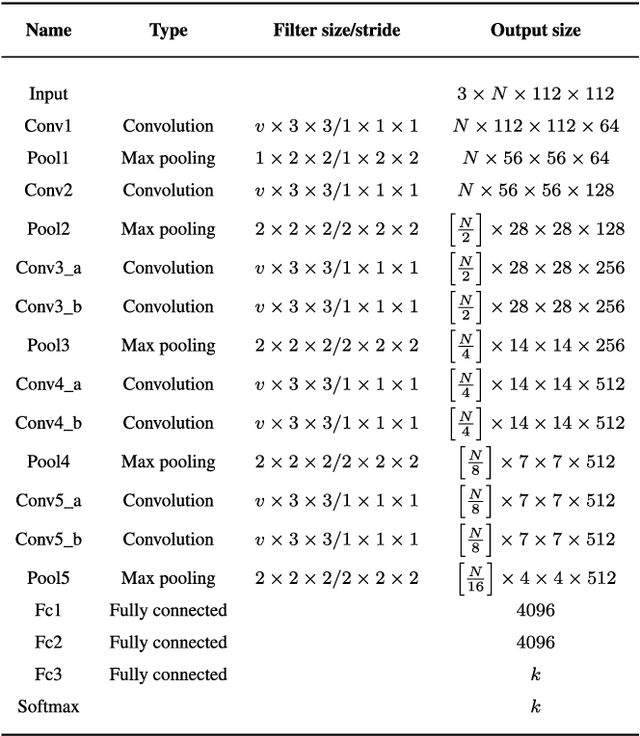

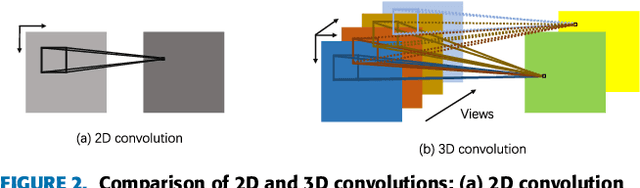

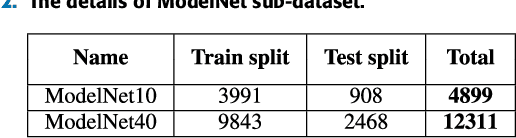

Abstract: With the development of deep neural networks, 3D object recognition is becoming increasingly popular in the computer vision community. Many multi-view based methods have been proposed to improve category recognition accuracy. These approaches mainly rely on multi-view images rendered around the whole circumference of the object. In real-world applications, however, 3D objects are mostly observed from partial viewpoints within a limited range. Therefore, we propose a multi-view based 3D convolutional neural network, which takes only part of the contiguous multi-view images as input and can still maintain high accuracy. Moreover, our model takes these view images as a joint variable to better learn spatially correlated features using 3D convolution and 3D max-pooling layers. Experimental results on the ModelNet10 and ModelNet40 datasets show that our MV-C3D technique can achieve outstanding performance with multi-view images captured from partial angles within a limited range. The results on the 3D rotated real-image dataset MIRO further demonstrate that MV-C3D is more adaptable in real-world scenarios. The classification accuracy can be further improved by increasing the number of view images.
