Qi Xuan

Improving the Convergence Rate of Ray Search Optimization for Query-Efficient Hard-Label Attacks

Dec 24, 2025

Abstract: In hard-label black-box adversarial attacks, where only the top-1 predicted label is accessible, the prohibitive query complexity poses a major obstacle to practical deployment. In this paper, we focus on optimizing a representative class of attacks that search for the optimal ray direction yielding the minimum $\ell_2$-norm perturbation required to move a benign image into the adversarial region. Inspired by Nesterov's Accelerated Gradient (NAG), we propose a momentum-based algorithm, ARS-OPT, which proactively estimates the gradient with respect to a future ray direction inferred from accumulated momentum. We provide a theoretical analysis of its convergence behavior, showing that ARS-OPT enables more accurate directional updates and achieves faster, more stable optimization. To further accelerate convergence, we incorporate surrogate-model priors into ARS-OPT's gradient estimation, resulting in PARS-OPT with enhanced performance. The superiority of our approach is supported by theoretical guarantees under standard assumptions. Extensive experiments on ImageNet and CIFAR-10 demonstrate that our method surpasses 13 state-of-the-art approaches in query efficiency.
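
The look-ahead step that the abstract borrows from Nesterov's Accelerated Gradient can be illustrated on a toy problem. The sketch below is not ARS-OPT: it uses the exact gradient of a hypothetical quadratic objective instead of a query-based estimate over ray directions, and the names `nag_minimize`, `toy_grad`, `lr`, and `mu` are assumptions made for illustration. It only shows the gradient being evaluated at the point the accumulated momentum is about to reach, rather than at the current iterate.

```python
def nag_minimize(grad, x0, lr=0.1, mu=0.9, steps=200):
    """Minimize a function with Nesterov's Accelerated Gradient (toy sketch)."""
    x = list(x0)
    v = [0.0] * len(x)  # accumulated momentum
    for _ in range(steps):
        # Look ahead: where the momentum alone would carry the iterate.
        lookahead = [xi + mu * vi for xi, vi in zip(x, v)]
        g = grad(lookahead)  # gradient at the future point, not at x
        v = [mu * vi - lr * gi for vi, gi in zip(v, g)]
        x = [xi + vi for xi, vi in zip(x, v)]
    return x


# Hypothetical objective: f(x) = (x[0] - 3)^2 + 10 * (x[1] + 1)^2
def toy_grad(x):
    return [2.0 * (x[0] - 3.0), 20.0 * (x[1] + 1.0)]


solution = nag_minimize(toy_grad, [0.0, 0.0], lr=0.05, mu=0.9)
```

With these settings the iterate approaches the minimizer `(3, -1)` of the toy objective.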

FoQuS: A Forgetting-Quality Coreset Selection Framework for Automatic Modulation Recognition

Sep 10, 2025

Abstract: Deep learning-based Automatic Modulation Recognition (AMR) models have made significant progress with the support of large-scale labeled data. However, when developing new models or performing hyperparameter tuning, the time and energy costs of repeated training on massive datasets are often prohibitive. To address these challenges, we propose FoQuS, which approximates the effect of full training by selecting a coreset from the original dataset, thereby significantly reducing training overhead. Specifically, FoQuS records the prediction trajectory of each sample during full-dataset training and constructs three importance metrics based on training dynamics. Experiments show that FoQuS can maintain high recognition accuracy and good cross-architecture generalization on multiple AMR datasets using only 1%-30% of the original data.
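
The abstract does not spell out the three importance metrics, so the sketch below uses one well-known training-dynamics statistic as a stand-in: the number of forgetting events, meaning epochs at which a sample goes from correctly classified to misclassified. The function names, the sample IDs, and the rule of keeping the most-forgotten samples are assumptions for illustration, not the FoQuS scoring rule.

```python
def count_forgetting_events(correct_by_epoch):
    """Count transitions from correctly classified to misclassified."""
    return sum(
        1
        for prev, curr in zip(correct_by_epoch, correct_by_epoch[1:])
        if prev and not curr
    )


def select_coreset(trajectories, fraction):
    """Keep the `fraction` of samples with the most forgetting events."""
    scores = {sid: count_forgetting_events(t) for sid, t in trajectories.items()}
    keep = max(1, round(fraction * len(scores)))
    # Sort by descending score; break ties by sample ID for determinism.
    ranked = sorted(scores, key=lambda sid: (-scores[sid], sid))
    return ranked[:keep]


# Hypothetical per-epoch correctness records for four samples.
trajectories = {
    "s0": [True, True, True, True],      # never forgotten
    "s1": [False, True, False, True],    # forgotten once
    "s2": [True, False, True, False],    # forgotten twice
    "s3": [False, False, False, False],  # never learned
}
coreset = select_coreset(trajectories, fraction=0.5)
```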

Boosting Ray Search Procedure of Hard-label Attacks with Transfer-based Priors

Jul 23, 2025

Abstract: One of the most practical and challenging types of black-box adversarial attacks is the hard-label attack, where only the top-1 predicted label is available. One effective approach is to search for the optimal ray direction from the benign image that minimizes the $\ell_p$-norm distance to the adversarial region. The unique advantage of this approach is that it transforms the hard-label attack into a continuous optimization problem. The objective function value is the ray's radius, which can be obtained via binary search at a high query cost. Existing methods use a "sign trick" in gradient estimation to reduce the number of queries. In this paper, we theoretically analyze the quality of this gradient estimation and propose a novel prior-guided approach to improve ray search efficiency both theoretically and empirically. Specifically, we utilize the transfer-based priors from surrogate models, and our gradient estimators appropriately integrate them by approximating the projection of the true gradient onto the subspace spanned by these priors and random directions, in a query-efficient manner. We theoretically derive the expected cosine similarities between the obtained gradient estimators and the true gradient, and demonstrate the improvement achieved by incorporating priors. Extensive experiments on the ImageNet and CIFAR-10 datasets show that our approach significantly outperforms 11 state-of-the-art methods in terms of query efficiency.
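
To make the query cost of the ray radius concrete, here is a minimal sketch of the binary search the abstract mentions, using the $\ell_2$ norm. The oracle `toy_oracle`, the search bound `upper`, and the tolerance `tol` are hypothetical, and the sketch assumes that every point on the ray beyond the decision boundary is adversarial. Each oracle call stands for one query to the hard-label model.

```python
import math


def ray_radius(x, direction, is_adversarial, upper=10.0, tol=1e-4):
    """Estimate the l2 distance along `direction` to the adversarial region.

    Returns (radius, queries); radius is math.inf if the point at
    distance `upper` is not adversarial.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]

    def point(r):
        return [xi + r * ui for xi, ui in zip(x, unit)]

    queries = 1
    if not is_adversarial(point(upper)):
        return math.inf, queries
    lo, hi = 0.0, upper
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        queries += 1
        if is_adversarial(point(mid)):
            hi = mid  # boundary is at or before mid
        else:
            lo = mid  # boundary is beyond mid
    return hi, queries


# Hypothetical hard-label oracle: the label flips once x[0] + x[1] exceeds 2.
def toy_oracle(p):
    return p[0] + p[1] > 2.0


radius, queries = ray_radius([0.0, 0.0], [1.0, 1.0], toy_oracle)
```

Evaluating the objective once already takes more than ten queries at this tolerance, which is why estimating a gradient by repeating the search for many directions is expensive.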

DUSE: A Data Expansion Framework for Low-resource Automatic Modulation Recognition based on Active Learning

Jul 16, 2025

Abstract: Although deep neural networks have made remarkable achievements in the field of automatic modulation recognition (AMR), these models often require a large amount of labeled data for training. However, in many practical scenarios, the available target-domain data is scarce and insufficient for model training. The most direct remedy is to collect data manually and have experts annotate it, but the time and labor costs are prohibitive. Another common method is data augmentation. Although it can enrich training samples to a certain extent, it does not introduce new data and therefore cannot fundamentally solve the problem of data scarcity. To address these challenges, we introduce a data expansion framework called Dynamic Uncertainty-driven Sample Expansion (DUSE). Specifically, DUSE uses an uncertainty scoring function to select useful samples from relevant AMR datasets and employs an active learning strategy to continuously refine the scorer. Extensive experiments demonstrate that DUSE consistently outperforms 8 coreset selection baselines in both class-balanced and class-imbalanced settings. In addition, DUSE exhibits strong cross-architecture generalization to unseen models.

Better Reasoning with Less Data: Enhancing VLMs Through Unified Modality Scoring

Jun 10, 2025

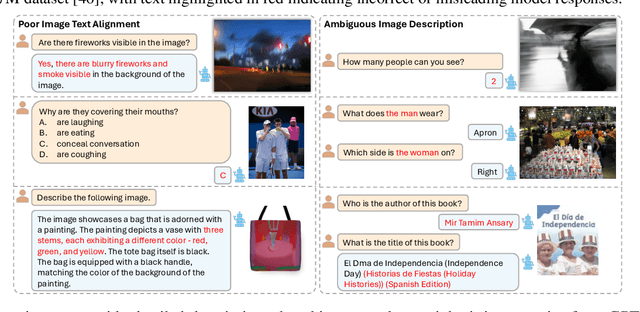

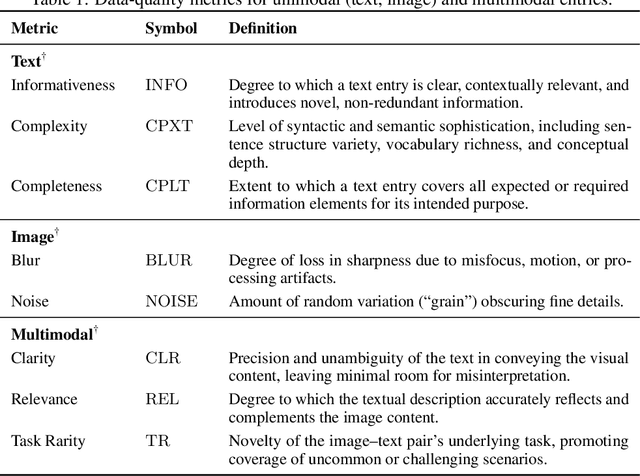

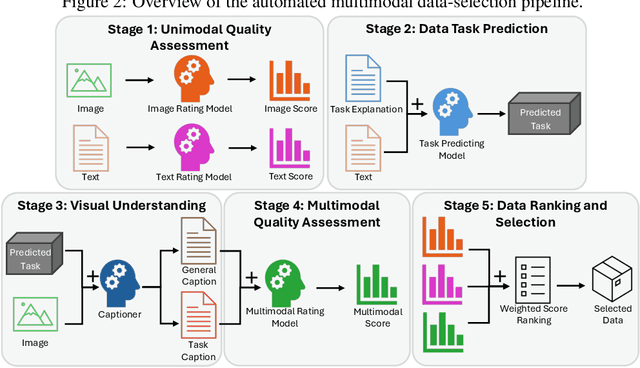

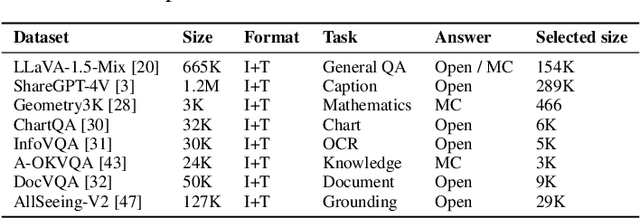

Abstract: The application of visual instruction tuning and other post-training techniques has significantly enhanced the capabilities of Large Language Models (LLMs) in visual understanding, enriching Vision-Language Models (VLMs) with more comprehensive visual language datasets. However, the effectiveness of VLMs is highly dependent on large-scale, high-quality datasets that ensure precise recognition and accurate reasoning. Two key challenges hinder progress: (1) noisy alignments between images and the corresponding text, which lead to misinterpretation, and (2) ambiguous or misleading text, which obscures visual content. To address these challenges, we propose SCALE (Single modality data quality and Cross modality Alignment Evaluation), a novel quality-driven data selection pipeline for VLM instruction tuning datasets. Specifically, SCALE integrates a cross-modality assessment framework that first assigns each data entry to its appropriate vision-language task, generates general and task-specific captions (covering scenes, objects, style, etc.), and evaluates the alignment, clarity, task rarity, text coherence, and image clarity of each entry based on the generated captions. We reveal that: (1) current unimodal quality assessment methods evaluate one modality while overlooking the rest, which can underestimate samples essential for specific tasks and discard the lower-quality instances that help build model robustness; and (2) appropriately generated image captions provide an efficient way to transfer the image-text multimodal task into a unified text modality.

ReStNet: A Reusable & Stitchable Network for Dynamic Adaptation on IoT Devices

Jun 08, 2025

Abstract: With the rapid development of deep learning, a growing number of pre-trained models have become publicly available. However, deploying these fixed models in real-world IoT applications is challenging because different devices possess heterogeneous computational and memory resources, making it impossible to deploy a single model across all platforms. Although traditional compression methods, such as pruning, quantization, and knowledge distillation, can improve efficiency, they become inflexible once applied and cannot adapt to changing resource constraints. To address these issues, we propose ReStNet, a Reusable and Stitchable Network that dynamically constructs a hybrid network by stitching two pre-trained models together. Implementing ReStNet requires addressing several key challenges, including how to select the optimal stitching points, determine the stitching order of the two pre-trained models, and choose an effective fine-tuning strategy. To systematically address these challenges and adapt to varying resource constraints, ReStNet determines the stitching point by calculating layer-wise similarity via Centered Kernel Alignment (CKA). It then constructs the hybrid model by retaining early layers from a larger-capacity model and appending deeper layers from a smaller one. To facilitate efficient deployment, only the stitching layer is fine-tuned. This design enables rapid adaptation to changing budgets while fully leveraging available resources. Moreover, ReStNet supports both homogeneous (CNN-CNN, Transformer-Transformer) and heterogeneous (CNN-Transformer) stitching, allowing different model families to be combined flexibly. Extensive experiments on multiple benchmarks demonstrate that ReStNet achieves flexible accuracy-efficiency trade-offs at runtime while significantly reducing training cost.
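
Layer-wise similarity via CKA can be sketched in a few lines. The function below computes linear CKA from centered Gram matrices; the activation values, the layer names, and the rule of picking the most similar layer pair are hypothetical, since the abstract does not state how the similarity scores are turned into a stitching point.

```python
import math


def linear_cka(X, Y):
    """Linear CKA between two activation matrices with one row per example."""

    def centered_gram(A):
        n, d = len(A), len(A[0])
        means = [sum(row[j] for row in A) / n for j in range(d)]
        C = [[row[j] - means[j] for j in range(d)] for row in A]
        # Gram matrix of the column-centered activations.
        return [[sum(a * b for a, b in zip(ri, rj)) for rj in C] for ri in C]

    def frobenius_inner(K, L):
        return sum(k * l for rk, rl in zip(K, L) for k, l in zip(rk, rl))

    K, L = centered_gram(X), centered_gram(Y)
    return frobenius_inner(K, L) / math.sqrt(
        frobenius_inner(K, K) * frobenius_inner(L, L)
    )


# Hypothetical activations (4 examples each) from layers of two models.
layers_a = {"a1": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.5]]}
layers_b = {
    "b1": [[2.0, 0.0], [0.0, 2.0], [2.0, 2.0], [4.0, 1.0]],  # a1 scaled by 2
    "b2": [[0.3], [0.9], [0.1], [0.4]],
}
scores = {
    (a, b): linear_cka(Xa, Xb)
    for a, Xa in layers_a.items()
    for b, Xb in layers_b.items()
}
best_pair = max(scores, key=scores.get)
```

Because CKA compares Gram matrices over examples, the two layers may have different widths, which is what makes it usable across model families.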

FCOS: A Two-Stage Recoverable Model Pruning Framework for Automatic Modulation Recognition

May 27, 2025

Abstract: With the rapid development of wireless communications and the growing complexity of digital modulation schemes, traditional manual modulation recognition methods struggle to extract reliable signal features and meet real-time requirements in modern scenarios. Recently, deep learning-based Automatic Modulation Recognition (AMR) approaches have greatly improved classification accuracy. However, their large model sizes and high computational demands hinder deployment on resource-constrained devices. Model pruning provides a general approach to reduce model complexity, but existing weight, channel, and layer pruning techniques each present a trade-off between compression rate, hardware acceleration, and accuracy preservation. To this end, we introduce FCOS, a novel Fine-to-COarse two-Stage pruning framework that combines channel-level pruning with layer-level collapse diagnosis to achieve extreme compression, high performance, and efficient inference. In the first stage of FCOS, hierarchical clustering and parameter fusion are applied to channel weights to achieve channel-level pruning. Then a Layer Collapse Diagnosis (LaCD) module uses linear probing to identify and remove layers that have collapsed under the high channel compression ratio. Experiments on multiple AMR benchmarks demonstrate that FCOS outperforms existing channel and layer pruning methods. Specifically, FCOS achieves 95.51% FLOPs reduction and 95.31% parameter reduction while still maintaining performance close to the original ResNet56, with only a 0.46% drop in accuracy on Sig2019-12. Code is available at https://github.com/yaolu-zjut/FCOS.

GUARD: Generation-time LLM Unlearning via Adaptive Restriction and Detection

May 19, 2025

Abstract: Large Language Models (LLMs) have demonstrated strong capabilities in memorizing vast amounts of knowledge across diverse domains. However, the ability to selectively forget specific knowledge is critical for ensuring the safety and compliance of deployed models. Existing unlearning efforts typically fine-tune the model with resources such as forget data, retain data, and a calibration model. These additional gradient steps blur the decision boundary between forget and retain knowledge, so unlearning often comes at the expense of overall performance. To avoid the negative impact of fine-tuning, it would be better to unlearn solely at inference time by safely guarding the model against generating responses related to the forget target, without destroying the fluency of text generation. In this work, we propose Generation-time Unlearning via Adaptive Restriction and Detection (GUARD), a framework that enables dynamic unlearning during LLM generation. Specifically, we first employ a prompt classifier to detect unlearning targets and extract the corresponding forbidden token. We then dynamically penalize and filter candidate tokens during generation using a combination of token matching and semantic matching, effectively preventing the model from leaking the forgotten content. Experimental results on copyright content unlearning tasks over the Harry Potter dataset and the MUSE benchmark, as well as entity unlearning tasks on the TOFU dataset, demonstrate that GUARD achieves strong forget quality across various tasks while causing almost no degradation to the LLM's general capabilities, striking an excellent trade-off between forgetting and utility.
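
The idea of penalizing and filtering candidate tokens at generation time can be sketched as follows. This covers only exact token matching on a hypothetical table of next-token scores; the semantic-matching component and the prompt classifier described in the abstract are not modelled, and the function names, the `penalty` value, and the example tokens are assumptions for illustration.

```python
def penalize_forbidden(logits, forbidden, penalty=5.0, hard_filter=False):
    """Lower or remove the scores of forbidden tokens before decoding."""
    adjusted = {}
    for token, score in logits.items():
        if token in forbidden:
            if hard_filter:
                continue  # drop the candidate entirely
            score -= penalty  # soft penalty: still possible, less likely
        adjusted[token] = score
    return adjusted


def greedy_next_token(logits):
    """Pick the highest-scoring remaining candidate."""
    return max(logits, key=logits.get)


# Hypothetical next-token scores and forbidden set.
logits = {"Hogwarts": 4.2, "school": 3.1, "castle": 2.7}
forbidden = {"Hogwarts"}
next_token = greedy_next_token(penalize_forbidden(logits, forbidden))
```

Since only the decoding step changes, the model weights stay untouched, which matches the abstract's motivation for avoiding fine-tuning.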

Adaptive Substructure-Aware Expert Model for Molecular Property Prediction

Apr 08, 2025

Abstract: Molecular property prediction is essential for applications such as drug discovery and toxicity assessment. While Graph Neural Networks (GNNs) have shown promising results by modeling molecules as molecular graphs, their reliance on data-driven learning limits their ability to generalize, particularly in the presence of data imbalance and diverse molecular substructures. Existing methods often overlook the varying contributions of different substructures to molecular properties, treating them uniformly. To address these challenges, we propose ASE-Mol, a novel GNN-based framework that leverages a Mixture-of-Experts (MoE) approach for molecular property prediction. ASE-Mol incorporates BRICS decomposition and significant substructure awareness to dynamically identify positive and negative substructures. By integrating a MoE architecture, it reduces the adverse impact of negative motifs while improving adaptability to positive motifs. Experimental results on eight benchmark datasets demonstrate that ASE-Mol achieves state-of-the-art performance, with significant improvements in both accuracy and interpretability.

Unveiling Latent Information in Transaction Hashes: Hypergraph Learning for Ethereum Ponzi Scheme Detection

Mar 27, 2025

Abstract: With the widespread adoption of Ethereum, financial frauds such as Ponzi schemes have become increasingly rampant in the blockchain ecosystem, posing significant threats to the security of account assets. Existing Ethereum fraud detection methods typically model account transactions as graphs, but this approach primarily focuses on binary transactional relationships between accounts, failing to adequately capture the complex multi-party interaction patterns inherent in Ethereum. To address this, we propose HyperDet, a hypergraph modeling method for Ponzi scheme detection in Ethereum. Specifically, we treat transaction hashes as hyperedges that connect all the relevant accounts involved in a transaction. Additionally, we design a two-step hypergraph sampling strategy to significantly reduce computational complexity. Furthermore, we introduce a dual-channel detection module, including the hypergraph detection channel and the hyper-homo graph detection channel, to be compatible with existing detection methods. Experimental results show that, compared to traditional homogeneous graph-based methods, the hyper-homo graph detection channel achieves significant performance improvements, demonstrating the superiority of hypergraphs in Ponzi scheme detection. This research offers innovations for modeling complex relationships in blockchain data.
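
Treating a transaction hash as a hyperedge amounts to grouping every account that appears under that hash. The sketch below contrasts this with the ordinary pairwise view; the record layout `(hash, from, to)`, the account names, and the function names are hypothetical, and the sampling strategy and detection channels from the abstract are not modelled.

```python
from collections import defaultdict


def build_hypergraph(transfers):
    """Group accounts by transaction hash: one hyperedge per hash."""
    hyperedges = defaultdict(set)
    for tx_hash, sender, receiver in transfers:
        hyperedges[tx_hash].update((sender, receiver))
    return dict(hyperedges)


def pairwise_edges(transfers):
    """The ordinary graph view: one binary edge per transfer."""
    return {(sender, receiver) for _, sender, receiver in transfers}


# Hypothetical records: (transaction hash, from, to). Several transfers
# can share one hash when they belong to the same transaction.
transfers = [
    ("0xaaa", "alice", "contract"),
    ("0xaaa", "contract", "bob"),
    ("0xaaa", "contract", "carol"),
    ("0xbbb", "dave", "contract"),
]
hypergraph = build_hypergraph(transfers)
edges = pairwise_edges(transfers)
```

In the pairwise view, `alice`, `bob`, and `carol` are linked only indirectly through `contract`; the hyperedge `0xaaa` records that all four accounts took part in the same transaction.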
