Jiajun Zhou

Unveiling Latent Information in Transaction Hashes: Hypergraph Learning for Ethereum Ponzi Scheme Detection

Mar 27, 2025

Abstract: With the widespread adoption of Ethereum, financial frauds such as Ponzi schemes have become increasingly rampant in the blockchain ecosystem, posing significant threats to the security of account assets. Existing Ethereum fraud detection methods typically model account transactions as graphs, but this approach focuses primarily on binary transactional relationships between accounts and fails to adequately capture the complex multi-party interaction patterns inherent in Ethereum. To address this, we propose HyperDet, a hypergraph-based method for Ponzi scheme detection on Ethereum. Specifically, we treat transaction hashes as hyperedges that connect all the accounts involved in a transaction. Additionally, we design a two-step hypergraph sampling strategy to significantly reduce computational complexity. Furthermore, we introduce a dual-channel detection module, comprising a hypergraph detection channel and a hyper-homo graph detection channel, for compatibility with existing detection methods. Experimental results show that, compared to traditional homogeneous graph-based methods, the hyper-homo graph detection channel achieves significant performance improvements, demonstrating the superiority of hypergraphs in Ponzi scheme detection. This research offers a new approach to modeling complex relationships in blockchain data.
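The idea of treating a transaction hash as a hyperedge can be illustrated with a minimal sketch. The record layout `(tx_hash, sender, receiver)`, the account names, and the helper functions below are all hypothetical and are not taken from HyperDet; the sketch only contrasts a hyperedge view with the usual pairwise-edge view.

```python
from collections import defaultdict

# Hypothetical transfer records: (tx_hash, sender, receiver).
# One hash can appear in several rows, e.g. when a contract call
# triggers transfers to multiple accounts.
transfers = [
    ("0xaa", "alice", "pool"),
    ("0xaa", "pool", "bob"),
    ("0xaa", "pool", "carol"),
    ("0xbb", "dave", "pool"),
]

def build_hyperedges(records):
    """Group accounts by transaction hash: one hyperedge per hash."""
    hyperedges = defaultdict(set)
    for tx_hash, sender, receiver in records:
        hyperedges[tx_hash].update((sender, receiver))
    return dict(hyperedges)

def pairwise_edges(records):
    """The ordinary graph view: one binary edge per transfer."""
    return {(sender, receiver) for _, sender, receiver in records}

hyperedges = build_hyperedges(transfers)
edges = pairwise_edges(transfers)
```

In this toy data the hyperedge for `0xaa` keeps all four participating accounts together, whereas the pairwise view splits the same transaction into three separate edges.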

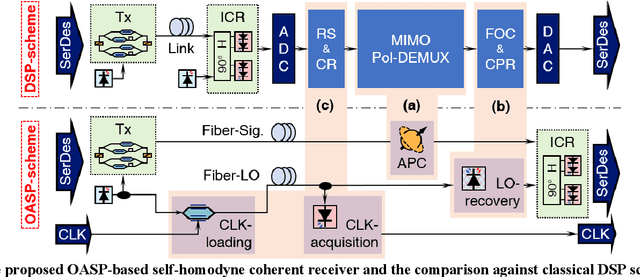

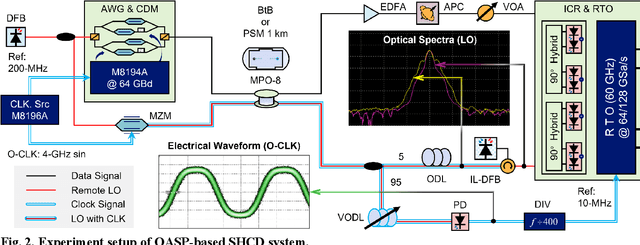

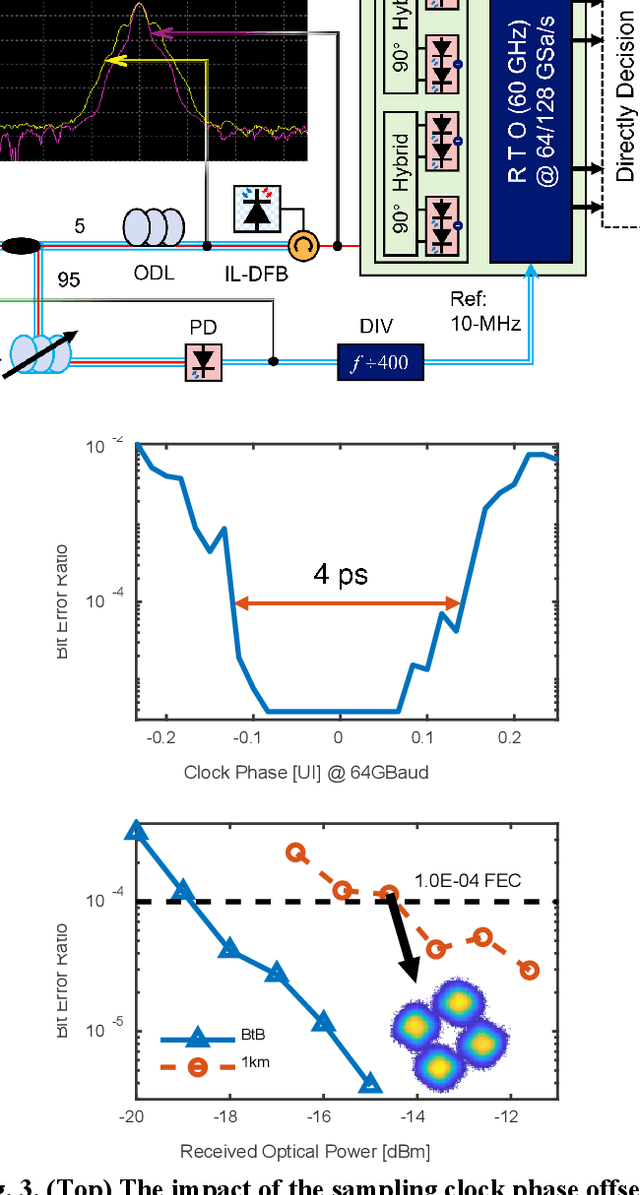

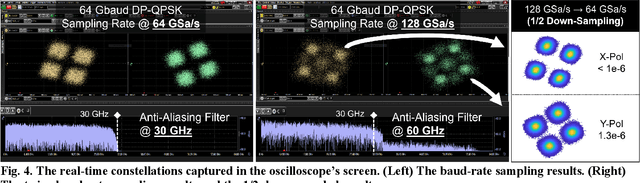

Pushing DSP-Free Coherent Interconnect to the Last Inch by Optically Analog Signal Processing

Mar 14, 2025

Abstract: To support the growing interconnect capacity of AI-related data centers, novel techniques that enable high-speed, low-cost optics are continuously emerging. When the baud rate approaches 200 GBaud per lane, the bottleneck of traditional intensity modulation direct detection (IM-DD) architectures becomes increasingly evident. Simplified coherent solutions are widely discussed and considered one of the most promising candidates. In this paper, a novel coherent architecture based on self-homodyne coherent detection and optically analog signal processing (OASP) is demonstrated. The first DSP-free baud-rate sampled 64-GBaud QPSK/16-QAM receptions are achieved experimentally, with BERs of 1e-6 and 2e-2, respectively. Even with 1-km fiber link propagation, the BER for QPSK reception remains at 3.6e-6. When an ultra-simple 1-sps SISO filter is utilized, the performance degradation of the proposed scheme is less than 1 dB compared to legacy DSP-based coherent reception. These results pave the way for ultra-high-speed coherent optical interconnections offering high power and cost efficiency.

QuZO: Quantized Zeroth-Order Fine-Tuning for Large Language Models

Feb 17, 2025

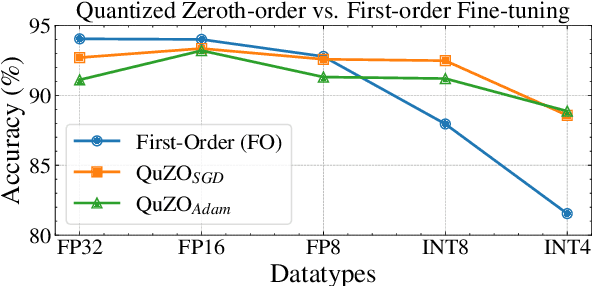

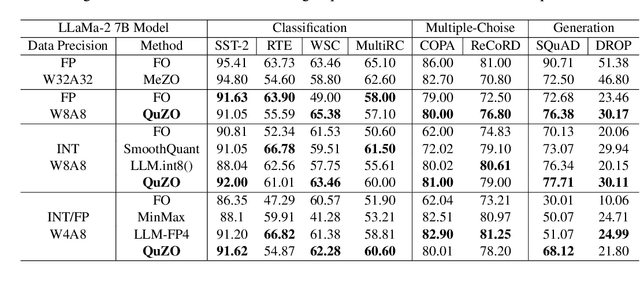

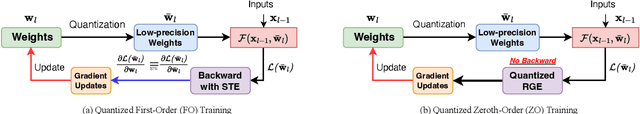

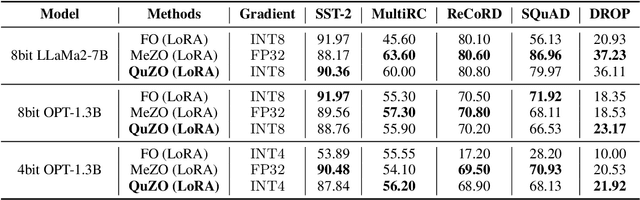

Abstract: Large Language Models (LLMs) are often quantized to lower precision to reduce memory cost and inference latency. However, quantization often degrades model performance, so fine-tuning is required for various downstream tasks. Traditional fine-tuning methods such as stochastic gradient descent and Adam optimization require backpropagation, which is error-prone in low-precision settings. To overcome these limitations, we propose the Quantized Zeroth-Order (QuZO) framework, specifically designed for fine-tuning LLMs through low-precision (e.g., 4- or 8-bit) forward passes. Our method avoids the error-prone low-precision straight-through estimator and utilizes optimized stochastic rounding to mitigate the increased bias. QuZO simplifies the training process while achieving results comparable to first-order methods in ${\rm FP}8$ and superior accuracy in ${\rm INT}8$ and ${\rm INT}4$ training. Experiments demonstrate that low-bit QuZO training achieves performance comparable to MeZO optimization on GLUE, Multi-Choice, and Generation tasks, while reducing memory cost by $2.94 \times$ in LLaMA2-7B fine-tuning compared to quantized first-order methods.
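Two ingredients named in the abstract, forward-only (zeroth-order) gradient estimation and stochastic rounding, can be sketched generically. This is not the QuZO algorithm: it is a textbook two-point estimator with a random ±1 direction, run in full precision on a toy quadratic, plus a standalone stochastic rounding helper. The function names, step size, and test objective are illustrative assumptions.

```python
import math
import random

def stochastic_round(x, rng):
    """Round x to a neighbouring integer; unbiased in expectation."""
    low = math.floor(x)
    return low + (1 if rng.random() < x - low else 0)

def zo_gradient(loss, params, eps, rng):
    """Two-point zeroth-order estimate using only forward evaluations."""
    u = [rng.choice((-1.0, 1.0)) for _ in params]  # random +/-1 direction
    plus = loss([p + eps * d for p, d in zip(params, u)])
    minus = loss([p - eps * d for p, d in zip(params, u)])
    scale = (plus - minus) / (2 * eps)  # directional derivative along u
    return [scale * d for d in u]

def quadratic(params):
    """Toy objective standing in for a model's loss."""
    return sum(p * p for p in params)

rng = random.Random(0)
params = [1.0, -2.0, 3.0, 0.5]
initial_loss = quadratic(params)
for _ in range(200):
    grad = zo_gradient(quadratic, params, eps=1e-3, rng=rng)
    params = [p - 0.05 * g for p, g in zip(params, grad)]
final_loss = quadratic(params)
```

No backward pass appears anywhere: each update costs two evaluations of the loss, which is what makes this family of methods attractive when backpropagation is unreliable or memory-hungry.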

Mixture of Decoupled Message Passing Experts with Entropy Constraint for General Node Classification

Feb 12, 2025

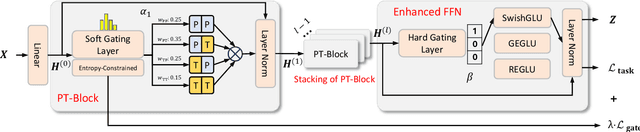

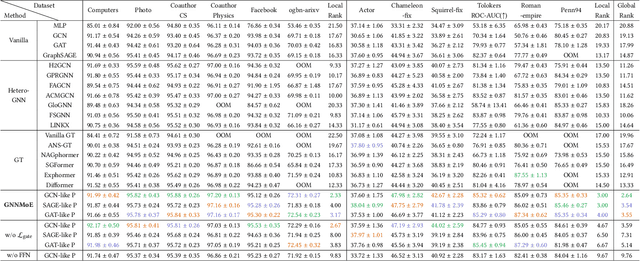

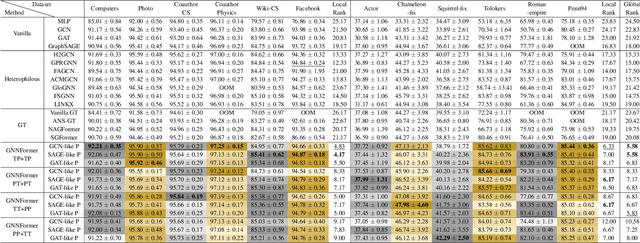

Abstract: The varying degrees of homophily and heterophily in real-world graphs persistently constrain the universality of graph neural networks (GNNs) for node classification. Adopting a data-centric perspective, this work reveals an inherent preference of different graphs for distinct message encoding schemes: homophilous graphs favor local propagation, while heterophilous graphs prefer flexible combinations of propagation and transformation. To address this, we propose GNNMoE, a universal node classification framework based on the Mixture-of-Experts (MoE) mechanism. The framework first constructs diverse message-passing experts through recombination of fine-grained encoding operators, then designs soft and hard gating layers to allocate the most suitable expert networks for each node's representation learning, thereby enhancing both model expressiveness and adaptability to diverse graphs. Furthermore, considering that soft gating might introduce encoding noise in homophilous scenarios, we introduce an entropy constraint to guide the sharpening of soft gates, integrating weighted combination with Top-K selection. Extensive experiments demonstrate that GNNMoE significantly outperforms mainstream GNNs, heterophilous GNNs, and graph transformers in both node classification performance and universality across diverse graph datasets.
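The relationship between soft gating, gate entropy, and Top-K selection can be shown with a small numeric sketch. The logits below are made-up values for four hypothetical experts, and the helpers are generic; how GNNMoE actually computes its gates and applies the entropy constraint is not specified here.

```python
import math

def softmax(logits):
    """Soft gate: normalised weights over experts."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(weights):
    """Shannon entropy of the gate weights (natural log)."""
    return -sum(w * math.log(w) for w in weights if w > 0)

def top_k(weights, k):
    """Hard gate: indices of the k largest gate weights, largest first."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return order[:k]

flat_gate = softmax([0.0, 0.0, 0.0, 0.0])   # all experts weighted equally
sharp_gate = softmax([4.0, 0.0, 0.0, 0.0])  # one expert dominates
```

A flat gate has the maximum entropy `log(4)` for four experts, while a sharp gate has low entropy and behaves almost like Top-1 selection. Penalising gate entropy during training therefore pushes a soft weighted combination toward a near-hard choice.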

Mixture of Experts Meets Decoupled Message Passing: Towards General and Adaptive Node Classification

Dec 11, 2024

Abstract: Graph neural networks excel at graph representation learning but struggle with heterophilous data and long-range dependencies. Graph transformers address these issues through self-attention, yet face scalability and noise challenges on large-scale graphs. To overcome these limitations, we propose GNNMoE, a universal model architecture for node classification. This architecture flexibly combines fine-grained message-passing operations with a mixture-of-experts mechanism to build feature encoding blocks. Furthermore, by incorporating soft and hard gating layers to assign the most suitable expert networks to each node, we enhance the model's expressive power and adaptability to different graph types. In addition, we introduce adaptive residual connections and an enhanced FFN module into GNNMoE, further improving the expressiveness of node representations. Extensive experimental results demonstrate that GNNMoE performs exceptionally well across various types of graph data, effectively alleviating the over-smoothing issue and global noise, enhancing model robustness and adaptability, while also ensuring computational efficiency on large-scale graphs.

Lateral Movement Detection via Time-aware Subgraph Classification on Authentication Logs

Nov 15, 2024

Abstract: Lateral movement is a crucial component of advanced persistent threat (APT) attacks in networks. Attackers exploit security vulnerabilities in internal networks or IoT devices, expanding their control after initial infiltration to steal sensitive data or carry out other malicious activities, posing a serious threat to system security. Existing research suggests that attackers generally employ seemingly unrelated operations to mask their malicious intentions, thereby evading existing lateral movement detection methods and hiding their intrusion traces. In this regard, we analyze host authentication log data from a graph perspective and propose a multi-scale lateral movement detection framework called LMDetect. The main workflow of this framework proceeds as follows: 1) Construct a heterogeneous multigraph from host authentication log data to strengthen the correlations among internal system entities; 2) Design a time-aware subgraph generator to extract subgraphs centered on authentication events from the heterogeneous authentication multigraph; 3) Design a multi-scale attention encoder that leverages both local and global attention to capture hidden anomalous behavior patterns in the authentication subgraphs, thereby achieving lateral movement detection. Extensive experiments on two real-world authentication log datasets demonstrate the effectiveness and superiority of our framework in detecting lateral movement behaviors.
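Step 2 of the workflow above, extracting a subgraph centered on an authentication event, might look roughly like the following sketch. The event layout `(timestamp, user, src_host, dst_host)`, the one-hop "shares an entity" rule, and the 60-second window are all assumptions made for illustration; the generator used in LMDetect may work differently.

```python
# Hypothetical authentication events: (timestamp_seconds, user, src_host, dst_host).
events = [
    (100, "u1", "h1", "h2"),
    (130, "u1", "h2", "h3"),
    (150, "u2", "h4", "h5"),
    (900, "u1", "h3", "h6"),
]

def entities(event):
    """Users and hosts touched by one authentication event."""
    _, user, src, dst = event
    return {user, src, dst}

def time_window_subgraph(all_events, center_index, window):
    """Events within `window` seconds of the center event that share
    at least one entity (user or host) with it."""
    center = all_events[center_index]
    center_entities = entities(center)
    return [
        e for e in all_events
        if abs(e[0] - center[0]) <= window and entities(e) & center_entities
    ]

subgraph = time_window_subgraph(events, center_index=0, window=60)
```

Here the event at time 150 is close in time but unrelated, and the event at time 900 is related but far away, so only the first two events end up in the subgraph around event 0.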

Rethinking Graph Transformer Architecture Design for Node Classification

Oct 15, 2024

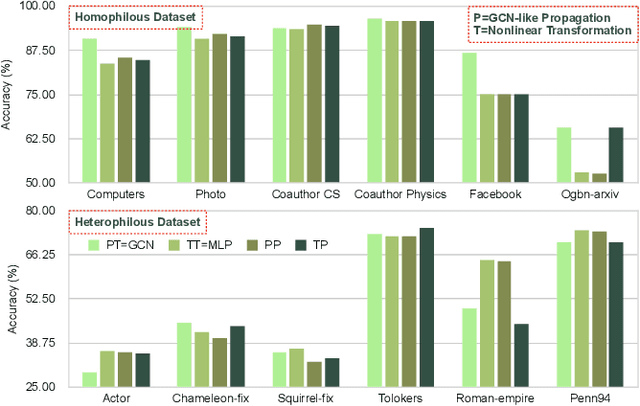

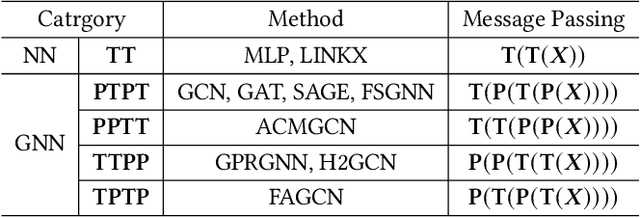

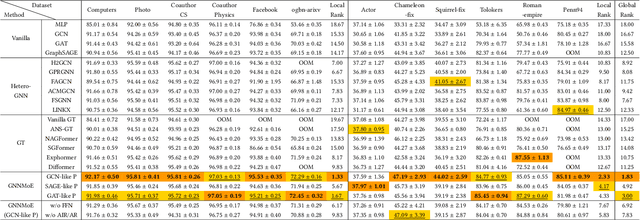

Abstract: Graph Transformer (GT), as a special type of Graph Neural Network (GNN), utilizes multi-head attention to facilitate high-order message passing. However, this also imposes several limitations in node classification applications: 1) nodes are susceptible to global noise; 2) self-attention computation cannot scale well to large graphs. In this work, we conduct extensive observational experiments to explore the adaptability of the GT architecture in node classification tasks and draw several conclusions: the current multi-head self-attention module in GT is completely replaceable, while the feed-forward neural network module proves to be valuable. Based on this, we decouple the propagation (P) and transformation (T) of GNNs and explore a powerful GT architecture, named GNNFormer, which is based on P/T combination message passing and adapted for node classification in both homophilous and heterophilous scenarios. Extensive experiments on 12 benchmark datasets demonstrate that our proposed GT architecture can effectively adapt to node classification tasks without being affected by global noise or computational efficiency limitations.
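Decoupling propagation (P) from transformation (T) means treating neighbor aggregation and per-node feature mapping as separate operators that can be composed in different orders. The sketch below uses mean aggregation with a self-loop for P and a plain linear map for T on a three-node path graph; these specific operator choices and the toy values are assumptions, not the operators defined in GNNFormer.

```python
def propagate(adj, features):
    """P: average each node's features with those of its neighbours."""
    out = []
    for node, neighbours in enumerate(adj):
        group = [node] + neighbours  # include the node itself
        dim = len(features[node])
        out.append([sum(features[j][d] for j in group) / len(group)
                    for d in range(dim)])
    return out

def transform(features, weight):
    """T: per-node linear map, i.e. features @ weight."""
    return [
        [sum(x * w for x, w in zip(row, col)) for col in zip(*weight)]
        for row in features
    ]

adj = [[1], [0, 2], [1]]                  # path graph 0 - 1 - 2
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # node features
w = [[2.0, 0.0], [0.0, 3.0]]              # weight matrix for T

p_only = propagate(adj, x)
pt = transform(p_only, w)                 # propagate, then transform
```

With a purely linear T, as here, applying T before or after P gives the same result; the orderings only become distinct once T includes a nonlinearity.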

Network Anomaly Traffic Detection via Multi-view Feature Fusion

Sep 12, 2024

Abstract: Traditional anomalous traffic detection methods are based on single-view analysis, which has obvious limitations in dealing with complex attacks and encrypted communications. In this regard, we propose a Multi-view Feature Fusion (MuFF) method for network anomaly traffic detection. MuFF models the temporal and interactive relationships of packets in network traffic from the temporal and interactive viewpoints, respectively, and learns the corresponding temporal and interactive features. These features are then fused across perspectives for anomaly traffic detection. Extensive experiments on six real traffic datasets show that MuFF performs excellently in network anomalous traffic detection, making up for the shortcomings of detection under a single perspective.

PolyCL: Contrastive Learning for Polymer Representation Learning via Explicit and Implicit Augmentations

Aug 14, 2024

Abstract: Polymers play a crucial role in a wide array of applications due to their diverse and tunable properties. Establishing the relationship between polymer representations and their properties is crucial to the computational design and screening of potential polymers via machine learning. The quality of the representation significantly influences the effectiveness of these computational methods. Here, we present a self-supervised contrastive learning paradigm, PolyCL, for learning high-quality polymer representations without the need for labels. Our model combines explicit and implicit augmentation strategies for improved learning performance. The results demonstrate that our model achieves either better or highly competitive performance on transfer learning tasks as a feature extractor, without an overcomplicated training strategy or hyperparameter optimisation. To further enhance the efficacy of our model, we conducted extensive analyses of various augmentation combinations used in contrastive learning. This led to identifying the most effective combination to maximise PolyCL's performance.
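Contrastive learning trains an encoder so that two augmented views of the same item end up closer to each other than to views of other items. The sketch below computes a generic InfoNCE-style loss for a single anchor using cosine similarity. Whether PolyCL uses exactly this loss is not stated in the abstract, and the two-dimensional embeddings and temperature are made-up values for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Contrastive loss for one anchor: small when the positive view is
    the most similar candidate, large when a negative is closer."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[0]  # -log softmax probability of the positive

anchor = [1.0, 0.0]
aligned = info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
```

The `aligned` case, where the positive view points almost the same way as the anchor, yields a much smaller loss than the `misaligned` case, where a negative sample is the closest candidate.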

Enhancing Ethereum Fraud Detection via Generative and Contrastive Self-supervision

Aug 01, 2024

Abstract: The rampant fraudulent activities on Ethereum hinder the healthy development of the blockchain ecosystem, necessitating the reinforcement of regulations. However, multiple imbalances involving account interaction frequencies and interaction types in the Ethereum transaction environment pose significant challenges to data mining-based fraud detection research. To address this, we first propose the concept of meta-interactions to refine interaction behaviors in Ethereum, and based on this, we present a dual self-supervision enhanced Ethereum fraud detection framework, named Meta-IFD. This framework first introduces a generative self-supervision mechanism to augment the interaction features of accounts, then a contrastive self-supervision mechanism to differentiate various behavior patterns, and finally characterizes the behavioral representations of accounts and mines potential fraud risks through multi-view interaction feature learning. Extensive experiments on real Ethereum datasets demonstrate the effectiveness and superiority of our framework in detecting common Ethereum fraud behaviors such as Ponzi schemes and phishing scams. Additionally, the generative module can effectively alleviate the interaction distribution imbalance in Ethereum data, while the contrastive module significantly enhances the framework's ability to distinguish different behavior patterns. The source code will be released on GitHub soon.
