Huan Yan

Breaking Coordinate Overfitting: Geometry-Aware WiFi Sensing for Cross-Layout 3D Pose Estimation

Jan 18, 2026

Abstract: WiFi-based 3D human pose estimation offers a low-cost and privacy-preserving alternative to vision-based systems for smart interaction. However, existing approaches rely on visual 3D poses as supervision and directly regress CSI to a camera-based coordinate system. We find that this practice leads to coordinate overfitting: models memorize deployment-specific WiFi transceiver layouts rather than only learning activity-relevant representations, resulting in severe generalization failures. To address this challenge, we present PerceptAlign, the first geometry-conditioned framework for WiFi-based cross-layout pose estimation. PerceptAlign introduces a lightweight coordinate unification procedure that aligns WiFi and vision measurements in a shared 3D space using only two checkerboards and a few photos. Within this unified space, it encodes calibrated transceiver positions into high-dimensional embeddings and fuses them with CSI features, making the model explicitly aware of device geometry as a conditional variable. This design forces the network to disentangle human motion from deployment layouts, enabling robust and, for the first time, layout-invariant WiFi pose estimation. To support systematic evaluation, we construct the largest cross-domain 3D WiFi pose estimation dataset to date, comprising 21 subjects, 5 scenes, 18 actions, and 7 device layouts. Experiments show that PerceptAlign reduces in-domain error by 12.3% and cross-domain error by more than 60% compared to state-of-the-art baselines. These results establish geometry-conditioned learning as a viable path toward scalable and practical WiFi sensing.
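The idea of conditioning on device geometry can be illustrated with a minimal sketch. The abstract does not say how PerceptAlign builds its embeddings or performs fusion, so everything below is an assumption for illustration: the sinusoidal encoding, the plain concatenation, and the hypothetical names `encode_position` and `fuse`.

```python
import math


def encode_position(xyz, num_freqs=4):
    """Map a 3D position to sin/cos features at doubling frequencies.

    Assumption: a sinusoidal (Fourier-feature) encoding stands in for the
    learned high-dimensional embedding mentioned in the abstract.
    """
    features = []
    for coord in xyz:
        for k in range(num_freqs):
            freq = (2.0 ** k) * math.pi
            features.append(math.sin(freq * coord))
            features.append(math.cos(freq * coord))
    return features


def fuse(csi_features, tx_xyz, rx_xyz, num_freqs=4):
    """Concatenate CSI features with transmitter and receiver embeddings.

    Assumption: simple concatenation; the actual fusion may differ.
    """
    return (
        list(csi_features)
        + encode_position(tx_xyz, num_freqs)
        + encode_position(rx_xyz, num_freqs)
    )


# Toy values: three CSI features plus calibrated positions in metres.
csi_features = [0.12, -0.40, 0.87]
fused = fuse(csi_features, tx_xyz=(0.0, 1.5, 1.0), rx_xyz=(3.0, 1.5, 1.0))
print(len(fused))
```

Because the positions enter as explicit inputs, the same CSI features paired with a different layout produce a different fused vector, which is what lets a downstream model treat geometry as a conditional variable.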

Beyond Physical Labels: Redefining Domains for Robust WiFi-based Gesture Recognition

Jan 08, 2026

Abstract: In this paper, we propose GesFi, a novel WiFi-based gesture recognition system that introduces WiFi latent domain mining to redefine domains directly from the data itself. GesFi first processes raw sensing data collected from WiFi receivers using CSI-ratio denoising, Short-Time Fast Fourier Transform, and visualization techniques to generate standardized input representations. It then employs class-wise adversarial learning to suppress gesture semantics and leverages unsupervised clustering to automatically uncover latent domain factors responsible for distributional shifts. These latent domains are then aligned through adversarial learning to support robust cross-domain generalization. Finally, the system is applied to the target environment for robust gesture inference. We deployed GesFi under both single-pair and multi-pair settings using commodity WiFi transceivers, and evaluated it across multiple public datasets and real-world environments. Compared to state-of-the-art baselines, GesFi achieves up to 78% and 50% performance improvements over existing adversarial methods, and consistently outperforms prior generalization approaches across most cross-domain tasks.

Wi-CBR: WiFi-based Cross-domain Behavior Recognition via Multimodal Collaborative Awareness

Jun 13, 2025

Abstract: WiFi-based human behavior recognition aims to recognize gestures and activities by analyzing wireless signal variations. However, existing methods typically focus on a single type of data, neglecting the interaction and fusion of multiple features. To this end, we propose a novel multimodal collaborative awareness method. By leveraging phase data reflecting changes in dynamic path length and Doppler frequency shift (DFS) data corresponding to frequency changes related to the speed of gesture movement, we enable efficient interaction and fusion of these features to improve recognition accuracy. Specifically, we first introduce a dual-branch self-attention module to capture spatial-temporal cues within each modality. Then, a group attention mechanism is applied to the concatenated phase and DFS features to mine key group features critical for behavior recognition. Through a gating mechanism, the combined features are further divided into PD-strengthen and PD-weaken branches, optimizing information entropy and promoting cross-modal collaborative awareness. Extensive in-domain and cross-domain experiments on two large publicly available datasets, Widar3.0 and XRF55, demonstrate the superior performance of our method.

MRGRP: Empowering Courier Route Prediction in Food Delivery Service with Multi-Relational Graph

May 17, 2025

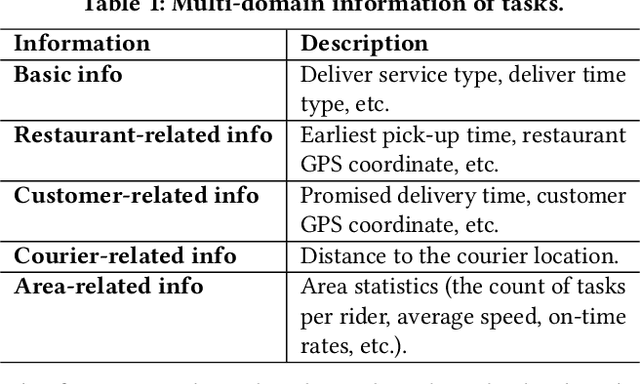

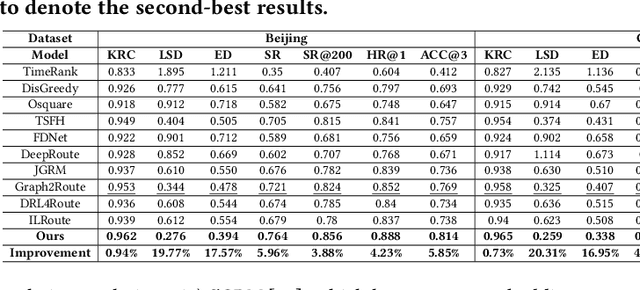

Abstract: Instant food delivery has become one of the most popular web services worldwide due to its convenience in daily life. A fundamental challenge is accurately predicting courier routes to optimize task dispatch and improve delivery efficiency. This enhances satisfaction for couriers and users and increases platform profitability. The current heuristic prediction method uses only limited human-selected task features and ignores couriers' preferences, causing suboptimal results. Additionally, existing learning-based methods do not fully capture the diverse factors influencing courier decisions or the complex relationships among them. To address this, we propose a Multi-Relational Graph-based Route Prediction (MRGRP) method that models fine-grained correlations among tasks affecting courier decisions for accurate prediction. We encode spatial and temporal proximity, along with pickup-delivery relationships, into a multi-relational graph and design a GraphFormer architecture to capture these complex connections. We also introduce a route decoder that leverages courier information and dynamic distance and time contexts for prediction, using existing route solutions as references to improve outcomes. Experiments show our model achieves state-of-the-art route prediction on offline data from cities of various sizes. Deployed on the Meituan Turing platform, it surpasses the current heuristic algorithm, reaching a high route prediction accuracy of 0.819, essential for courier and user satisfaction in instant food delivery.

kFuse: A novel density based agglomerative clustering

May 09, 2025

Abstract: Agglomerative clustering has emerged as a vital tool in data analysis due to its intuitive and flexible characteristics. However, existing agglomerative clustering methods often involve additional parameters for sub-cluster partitioning and inter-cluster similarity assessment. This necessitates different parameter settings across various datasets, which is undoubtedly challenging in the absence of prior knowledge. Moreover, existing agglomerative clustering techniques are constrained by the calculation method of connection distance, leading to unstable clustering results. To address these issues, this paper introduces a novel density-based agglomerative clustering method, termed kFuse. kFuse comprises four key components: (1) sub-cluster partitioning based on natural neighbors; (2) determination of boundary connectivity between sub-clusters through the computation of adjacent samples and shortest distances; (3) assessment of density similarity between sub-clusters via the calculation of mean density and variance; and (4) establishment of merging rules between sub-clusters based on boundary connectivity and density similarity. kFuse requires the specification of the number of clusters only at the final merging stage. Additionally, by comprehensively considering adjacent samples, distances, and densities among different sub-clusters, kFuse significantly enhances accuracy during the merging phase, thereby greatly improving its identification capability. Experimental results on both synthetic and real-world datasets validate the effectiveness of kFuse.

DeepSTA: A Spatial-Temporal Attention Network for Logistics Delivery Timely Rate Prediction in Anomaly Conditions

May 01, 2025

Abstract: Prediction of couriers' delivery timely rates in advance is essential to the logistics industry, enabling companies to take preemptive measures to ensure the normal operation of delivery services. This becomes even more critical during anomaly conditions like the epidemic outbreak, during which couriers' delivery timely rate declines markedly and fluctuates significantly. Existing studies pay less attention to the logistics scenario. Moreover, many works focusing on prediction tasks in anomaly scenarios fail to explicitly model abnormal events, e.g., treating external factors equally with other features, resulting in great information loss. Further, since some anomalous events occur infrequently, traditional data-driven methods perform poorly in these scenarios. To deal with them, we propose a deep spatial-temporal attention model, named DeepSTA. To be specific, to avoid information loss, we design an anomaly spatio-temporal learning module that employs a recurrent neural network to model incident information. Additionally, we utilize Node2vec to model correlations between road districts, and adopt graph neural networks and long short-term memory to capture the spatial-temporal dependencies of couriers. To tackle the issue of insufficient training data in abnormal circumstances, we propose an anomaly pattern attention module that adopts a memory network for couriers' anomaly feature patterns storage via attention mechanisms. The experiments on real-world logistics datasets during the COVID-19 outbreak in 2022 show the model outperforms the best baselines by 12.11% in MAE and 13.71% in MSE, demonstrating its superior performance over multiple competitive baselines.
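For readers unfamiliar with how figures such as "12.11% in MAE" are usually derived, the following sketch computes MAE, MSE, and the relative reduction in error against a baseline. The toy timely-rate values and the function names are hypothetical, and the abstract does not state that the authors computed their percentages exactly this way.

```python
def mae(y_true, y_pred):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def relative_improvement(baseline_error, model_error):
    """Percentage reduction in error relative to the baseline."""
    return (baseline_error - model_error) / baseline_error * 100.0


# Hypothetical timely rates for four districts (not data from the paper).
observed = [0.90, 0.80, 0.60, 0.70]
baseline_pred = [0.80, 0.90, 0.80, 0.60]
model_pred = [0.85, 0.85, 0.70, 0.65]

for name, metric in (("MAE", mae), ("MSE", mse)):
    base = metric(observed, baseline_pred)
    ours = metric(observed, model_pred)
    print(f"{name}: baseline={base:.4f} model={ours:.4f} "
          f"improvement={relative_improvement(base, ours):.2f}%")
```

MSE penalizes large misses more heavily than MAE, so the two percentages generally differ even for the same pair of models.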

Learning to Estimate Package Delivery Time in Mixed Imbalanced Delivery and Pickup Logistics Services

May 01, 2025

Abstract: Accurately estimating package delivery time is essential to the logistics industry, which enables reasonable work allocation and on-time service guarantee. This becomes even more necessary in mixed logistics scenarios where couriers handle a high volume of deliveries and a smaller number of pickups simultaneously. However, most of the related works treat the pickup and delivery patterns on couriers' decision behavior equally, neglecting that the pickup has a greater impact on couriers' decision-making compared to the delivery due to its tighter time constraints. In this context, we have three main challenges: 1) multiple spatiotemporal factors are intricately interconnected, significantly affecting couriers' delivery behavior; 2) pickups have stricter time requirements but are limited in number, making it challenging to model their effects on couriers' delivery process; 3) couriers' spatial mobility patterns are critical determinants of their delivery behavior, but have been insufficiently explored. To deal with these, we propose TransPDT, a Transformer-based multi-task package delivery time prediction model. We first employ the Transformer encoder architecture to capture the spatio-temporal dependencies of couriers' historical travel routes and pending package sets. Then we design the pattern memory to learn the patterns of pickup in the imbalanced dataset via attention mechanism. We also set the route prediction as an auxiliary task of delivery time prediction, and incorporate the prior courier spatial movement regularities in prediction. Extensive experiments on real industry-scale datasets demonstrate the superiority of our method. A system based on TransPDT is deployed internally in JD Logistics to track more than 2000 couriers handling hundreds of thousands of packages per day in Beijing.

Mix-Domain Contrastive Learning for Unpaired H&E-to-IHC Stain Translation

Jun 17, 2024

Abstract: H&E-to-IHC stain translation techniques offer a promising solution for precise cancer diagnosis, especially in low-resource regions where there is a shortage of health professionals and limited access to expensive equipment. Considering the pixel-level misalignment of H&E-IHC image pairs, current research explores the pathological consistency between patches from the same positions of the image pair. However, most of them overemphasize the correspondence between domains or patches, overlooking the side information provided by the non-corresponding objects. In this paper, we propose a Mix-Domain Contrastive Learning (MDCL) method to leverage the supervision information in unpaired H&E-to-IHC stain translation. Specifically, the proposed MDCL method aggregates the inter-domain and intra-domain pathology information by estimating the correlation between the anchor patch and all the patches from the matching images, encouraging the network to learn additional contrastive knowledge from mixed domains. With the mix-domain pathology information aggregation, MDCL enhances the pathological consistency between the corresponding patches and the component discrepancy of the patches from the different positions of the generated IHC image. Extensive experiments on two H&E-to-IHC stain translation datasets, namely MIST and BCI, demonstrate that the proposed method achieves state-of-the-art performance across multiple metrics.

WiOpen: A Robust Wi-Fi-based Open-set Gesture Recognition Framework

Feb 01, 2024

Abstract: Recent years have witnessed a growing interest in Wi-Fi-based gesture recognition. However, existing works have predominantly focused on closed-set paradigms, where all testing gestures are predefined during training. This poses a significant challenge in real-world applications, as unseen gestures might be misclassified as known classes during testing. To address this issue, we propose WiOpen, a robust Wi-Fi-based Open-Set Gesture Recognition (OSGR) framework. Implementing OSGR requires addressing challenges caused by the unique uncertainty in Wi-Fi sensing. This uncertainty, resulting from noise and domains, leads to widely scattered and irregular data distributions in collected Wi-Fi sensing data. Consequently, data ambiguity between classes and challenges in defining appropriate decision boundaries to identify unknowns arise. To tackle these challenges, WiOpen adopts a two-fold approach to eliminate uncertainty and define precise decision boundaries. Initially, it addresses uncertainty induced by noise during data preprocessing by utilizing the CSI ratio. Next, it designs the OSGR network based on an uncertainty quantification method. Throughout the learning process, this network effectively mitigates uncertainty stemming from domains. Ultimately, the network leverages relationships among samples' neighbors to dynamically define open-set decision boundaries, successfully realizing OSGR. Comprehensive experiments on publicly accessible datasets confirm WiOpen's effectiveness. Notably, WiOpen also demonstrates superiority in cross-domain tasks when compared to state-of-the-art approaches.
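The CSI ratio mentioned above is commonly computed as the element-wise quotient of the complex CSI from two antennas on the same receiver. Because both antennas share one oscillator, a per-packet phase offset multiplies both streams equally and cancels in the quotient. The sketch below demonstrates that cancellation on synthetic samples; it is not WiOpen's preprocessing pipeline, the sample values are made up, and the helper names are hypothetical.

```python
import cmath
import math
import random


def csi_ratio(csi_a, csi_b):
    """Element-wise quotient of complex CSI samples from two antennas.

    Assumes no sample in csi_b is zero; real pipelines need a guard here.
    """
    return [a / b for a, b in zip(csi_a, csi_b)]


def add_common_phase_offset(csi_a, csi_b, rng):
    """Rotate both antenna streams by the same random phase per packet."""
    noisy_a, noisy_b = [], []
    for a, b in zip(csi_a, csi_b):
        offset = cmath.exp(1j * rng.uniform(-math.pi, math.pi))
        noisy_a.append(a * offset)
        noisy_b.append(b * offset)
    return noisy_a, noisy_b


# Synthetic clean CSI for two antennas over four packets (made-up values).
clean_a = [1.0 + 0.5j, 0.9 + 0.6j, 0.8 + 0.7j, 0.7 + 0.8j]
clean_b = [0.5 - 0.2j, 0.5 - 0.1j, 0.6 + 0.0j, 0.6 + 0.1j]

rng = random.Random(0)
noisy_a, noisy_b = add_common_phase_offset(clean_a, clean_b, rng)

clean_ratio = csi_ratio(clean_a, clean_b)
noisy_ratio = csi_ratio(noisy_a, noisy_b)
max_diff = max(abs(c - n) for c, n in zip(clean_ratio, noisy_ratio))
print(f"largest difference between clean and noisy ratios: {max_diff:.2e}")
```

The raw phase of `noisy_a` is scrambled from packet to packet, yet the ratio matches the clean one up to floating-point error, which is why the quotient is a popular denoising step on commodity hardware.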

A Survey of Generative AI for Intelligent Transportation Systems

Dec 13, 2023

Abstract: Intelligent transportation systems play a crucial role in modern traffic management and optimization, greatly improving traffic efficiency and safety. With the rapid development of generative artificial intelligence (Generative AI) technologies in the fields of image generation and natural language processing, generative AI has also played a crucial role in addressing key issues in intelligent transportation systems, such as data sparsity, difficulty in observing abnormal scenarios, and in modeling data uncertainty. In this review, we systematically investigate the relevant literature on generative AI techniques in addressing key issues in different types of tasks in intelligent transportation systems. First, we introduce the principles of different generative AI techniques, and their potential applications. Then, we classify tasks in intelligent transportation systems into four types: traffic perception, traffic prediction, traffic simulation, and traffic decision-making. We systematically illustrate how generative AI techniques address key issues in these four different types of tasks. Finally, we summarize the challenges faced in applying generative AI to intelligent transportation systems, and discuss future research directions based on different application scenarios.
