Guanghui Wang

Department of Computer Science, Ryerson University, Toronto, ON, Canada M5B 2K3

Privacy-Concealing Cooperative Perception for BEV Scene Segmentation

Feb 14, 2026

Abstract:Cooperative perception systems for autonomous driving aim to overcome the limited perception range of a single vehicle by communicating with adjacent agents to share sensing information. While this improves perception performance, these systems also face a significant privacy-leakage issue, as sensitive visual content can potentially be reconstructed from the shared data. In this paper, we propose a novel Privacy-Concealing Cooperation (PCC) framework for Bird's Eye View (BEV) semantic segmentation. Based on commonly shared BEV features, we design a hiding network to prevent an image reconstruction network from recovering the input images from the shared features. An adversarial learning mechanism is employed to train the network, where the hiding network works to conceal the visual clues in the BEV features while the reconstruction network attempts to uncover these clues. To maintain segmentation performance, the perception network is integrated with the hiding network and optimized end-to-end. The experimental results demonstrate that the proposed PCC framework effectively degrades the quality of the reconstructed images with minimal impact on segmentation performance, providing privacy protection for cooperating vehicles. The source code will be made publicly available upon publication.
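
The adversarial mechanism described in this abstract can be read as two opposing objectives. The formulation below is only one plausible sketch, not the authors' stated loss: the symbols $H$ (hiding network), $R$ (reconstruction network), $P$ (perception network), $f$ (shared BEV features), $x$ (input images), $y$ (segmentation labels), and the trade-off weight $\lambda$ are all assumptions introduced here for illustration.

```latex
\begin{aligned}
\min_{\theta_R}\; & \mathcal{L}_{\mathrm{rec}}\bigl(R_{\theta_R}(H_{\theta_H}(f)),\, x\bigr), \\
\min_{\theta_H,\,\theta_P}\; & \mathcal{L}_{\mathrm{seg}}\bigl(P_{\theta_P}(H_{\theta_H}(f)),\, y\bigr)
  \;-\; \lambda\, \mathcal{L}_{\mathrm{rec}}\bigl(R_{\theta_R}(H_{\theta_H}(f)),\, x\bigr).
\end{aligned}
```

Under this reading, the reconstruction network minimizes its reconstruction error while the hiding and perception networks jointly keep the segmentation loss low and push the reconstruction error up.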

Faster Rates For Federated Variational Inequalities

Feb 09, 2026

Abstract:In this paper, we study federated optimization for solving stochastic variational inequalities (VIs), a problem that has attracted growing attention in recent years. Despite substantial progress, a significant gap remains between existing convergence rates and the state-of-the-art bounds known for federated convex optimization. In this work, we address this limitation by establishing a series of improved convergence rates. First, we show that, for general smooth and monotone variational inequalities, the classical Local Extra SGD algorithm admits tighter guarantees under a refined analysis. Next, we identify an inherent limitation of Local Extra SGD, which can lead to excessive client drift. Motivated by this observation, we propose a new algorithm, the Local Inexact Proximal Point Algorithm with Extra Step (LIPPAX), and show that it mitigates client drift and achieves improved guarantees in several regimes, including bounded Hessian, bounded operator, and low-variance settings. Finally, we extend our results to federated composite variational inequalities and establish improved convergence guarantees.
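
For readers unfamiliar with Local Extra SGD, the following is a minimal sketch of the general idea on a toy problem, not the paper's algorithm or analysis. It assumes each client holds a bilinear saddle problem $\min_x \max_y a\,xy$ with its own coefficient $a$, whose monotone operator is $F(x, y) = (a y, -a x)$; the function names, the toy problem, and the `noise` parameter are all hypothetical. LIPPAX is not shown.

```python
import random


def bilinear_operator(a, z):
    """Operator F(x, y) = (a*y, -a*x) of the saddle problem min_x max_y a*x*y."""
    x, y = z
    return (a * y, -a * x)


def local_extra_sgd(coeffs, z0, step, local_steps, rounds, noise=0.0, seed=0):
    """Clients run extragradient steps locally; the server averages their iterates."""
    rng = random.Random(seed)

    def oracle(a, z):
        # Stochastic oracle: exact operator plus optional Gaussian noise.
        gx, gy = bilinear_operator(a, z)
        return (gx + rng.gauss(0.0, noise), gy + rng.gauss(0.0, noise))

    z_server = z0
    for _ in range(rounds):
        local_iterates = []
        for a in coeffs:  # one coefficient per (hypothetical) client
            z = z_server
            for _ in range(local_steps):
                g = oracle(a, z)
                # Extrapolation step: look ahead using the current operator value.
                z_half = (z[0] - step * g[0], z[1] - step * g[1])
                g_half = oracle(a, z_half)
                # Update step: move from z using the operator at the look-ahead point.
                z = (z[0] - step * g_half[0], z[1] - step * g_half[1])
            local_iterates.append(z)
        n = len(local_iterates)
        # Communication round: average the client iterates.
        z_server = (sum(it[0] for it in local_iterates) / n,
                    sum(it[1] for it in local_iterates) / n)
    return z_server
```

With `noise=0.0`, calling `local_extra_sgd([0.5, 1.0, 1.5], (1.0, 1.0), step=0.5, local_steps=5, rounds=50)` drives the iterate toward the solution at the origin, whereas plain simultaneous gradient descent-ascent is known to spiral outward on this kind of bilinear problem.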

T-CACE: A Time-Conditioned Autoregressive Contrast Enhancement Multi-Task Framework for Contrast-Free Liver MRI Synthesis, Segmentation, and Diagnosis

Aug 13, 2025

Abstract:Magnetic resonance imaging (MRI) is a leading modality for the diagnosis of liver cancer, significantly improving lesion classification and patient outcomes. However, traditional MRI faces challenges including risks from contrast agent (CA) administration, time-consuming manual assessment, and limited annotated datasets. To address these limitations, we propose a Time-Conditioned Autoregressive Contrast Enhancement (T-CACE) framework for synthesizing multi-phase contrast-enhanced MRI (CEMRI) directly from non-contrast MRI (NCMRI). T-CACE introduces three core innovations: a conditional token encoding (CTE) mechanism that unifies anatomical priors and temporal phase information into latent representations; a dynamic time-aware attention mask (DTAM) that adaptively modulates inter-phase information flow using a Gaussian-decayed attention mechanism, ensuring smooth and physiologically plausible transitions across phases; and a temporal classification consistency (TCC) constraint that aligns the lesion classification output with the evolution of the physiological signal, further enhancing diagnostic reliability. Extensive experiments on two independent liver MRI datasets demonstrate that T-CACE outperforms state-of-the-art methods in image synthesis, segmentation, and lesion classification. This framework offers a clinically relevant and efficient alternative to traditional contrast-enhanced imaging, improving safety, diagnostic efficiency, and reliability for the assessment of liver lesions. The implementation of T-CACE is publicly available at: https://github.com/xiaojiao929/T-CACE.
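
To make the idea of a Gaussian-decayed attention mask concrete, here is a minimal sketch assuming a fixed bandwidth `sigma` and scalar phase times. The abstract describes DTAM as adaptive, so the released implementation very likely differs; the function name and parameters below are hypothetical.

```python
import math


def gaussian_phase_mask(phase_times, sigma):
    """Return weights exp(-(t_i - t_j)^2 / (2 * sigma^2)) between every pair of phases.

    Phases that are close in time get weights near 1; distant phases decay toward 0.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return [
        [math.exp(-((ti - tj) ** 2) / (2.0 * sigma ** 2)) for tj in phase_times]
        for ti in phase_times
    ]
```

Such a mask could multiply (or be added in log-space to) the attention scores between tokens of different phases, so that adjacent phases exchange more information than distant ones.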

Pyramid Hierarchical Masked Diffusion Model for Imaging Synthesis

Jul 22, 2025

Abstract:Medical image synthesis plays a crucial role in clinical workflows, addressing the common issue of missing imaging modalities due to factors such as extended scan times, scan corruption, artifacts, patient motion, and intolerance to contrast agents. This paper presents a novel image synthesis network, the Pyramid Hierarchical Masked Diffusion Model (PHMDiff), which employs a multi-scale hierarchical approach for more detailed control over synthesizing high-quality images across different resolutions and layers. Specifically, this model utilizes random multi-scale high-proportion masks to speed up diffusion model training and balances detail fidelity and overall structure. The Transformer-based diffusion process incorporates cross-granularity regularization, modeling the mutual information consistency across each granularity's latent spaces, thereby enhancing pixel-level perceptual accuracy. Comprehensive experiments on two challenging datasets demonstrate that PHMDiff achieves superior performance in both the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM), highlighting its capability to produce high-quality synthesized images with excellent structural integrity. Ablation studies further confirm the contributions of each component. Furthermore, the PHMDiff model, a multi-scale image synthesis framework across and within medical imaging modalities, shows significant advantages over other methods. The source code is available at https://github.com/xiaojiao929/PHMDiff
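
Since the abstract reports results in PSNR, a short sketch of the standard definition, $\mathrm{PSNR} = 10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, may help. The function below assumes images are given as flattened lists of pixel intensities with a peak value of `max_value`; it is a generic illustration and is not taken from the PHMDiff code.

```python
import math


def psnr(reference, synthesized, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equally sized pixel lists."""
    if not reference or len(reference) != len(synthesized):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - s) ** 2 for r, s in zip(reference, synthesized)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Higher values indicate a synthesized image closer to the reference; identical images give an infinite PSNR.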

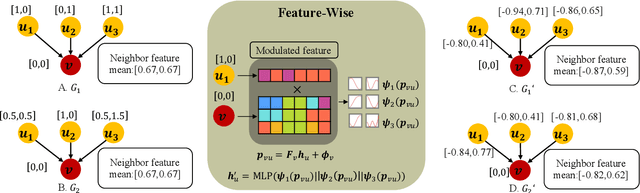

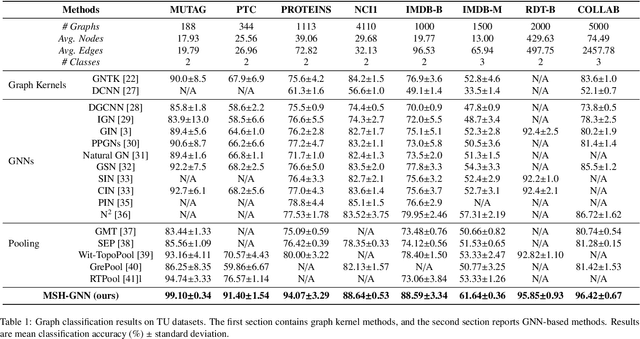

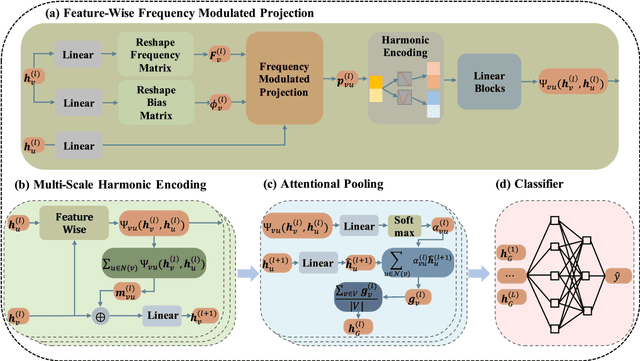

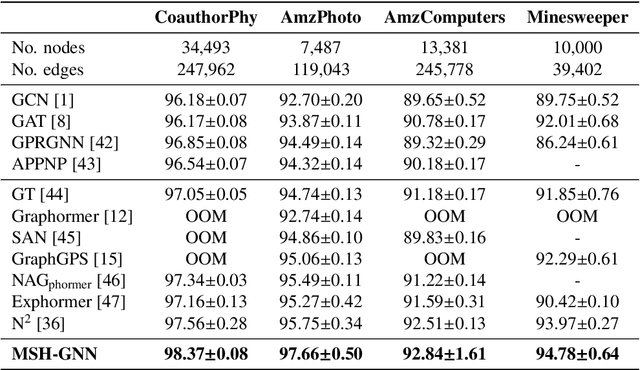

Beyond Node Attention: Multi-Scale Harmonic Encoding for Feature-Wise Graph Message Passing

May 21, 2025

Abstract:Conventional Graph Neural Networks (GNNs) aggregate neighbor embeddings as holistic vectors, lacking the ability to identify fine-grained, direction-specific feature relevance. We propose MSH-GNN (Multi-Scale Harmonic Graph Neural Network), a novel architecture that performs feature-wise adaptive message passing through node-specific harmonic projections. For each node, MSH-GNN dynamically projects neighbor features onto frequency-sensitive directions determined by the target node's own representation. These projections are further modulated using learnable sinusoidal encodings at multiple frequencies, enabling the model to capture both smooth and oscillatory structural patterns across scales. A frequency-aware attention pooling mechanism is introduced to emphasize spectrally and structurally salient nodes during readout. Theoretically, we prove that MSH-GNN approximates shift-invariant kernels and matches the expressive power of the 1-Weisfeiler-Lehman (1-WL) test. Empirically, MSH-GNN consistently outperforms state-of-the-art models on a wide range of graph and node classification tasks. Furthermore, in challenging classification settings involving joint variations in graph topology and spectral frequency, MSH-GNN excels at capturing structural asymmetries and high-frequency modulations, enabling more accurate graph discrimination.

Keypoints as Dynamic Centroids for Unified Human Pose and Segmentation

May 17, 2025

Abstract:The dynamic movement of the human body presents a fundamental challenge for human pose estimation and body segmentation. State-of-the-art approaches primarily rely on combining keypoint heatmaps with segmentation masks but often struggle in scenarios involving overlapping joints or rapidly changing poses during instance-level segmentation. To address these limitations, we propose Keypoints as Dynamic Centroids (KDC), a new centroid-based representation for unified human pose estimation and instance-level segmentation. KDC adopts a bottom-up paradigm to generate keypoint heatmaps for both easily distinguishable and complex keypoints and improves keypoint detection and confidence scores by introducing KeyCentroids using a keypoint disk. It leverages high-confidence keypoints as dynamic centroids in the embedding space to generate MaskCentroids, allowing for swift clustering of pixels to specific human instances during rapid body movements in live environments. Our experimental evaluations on the CrowdPose, OCHuman, and COCO benchmarks demonstrate KDC's effectiveness and generalizability in challenging scenarios in terms of both accuracy and runtime performance. The implementation is available at: https://sites.google.com/view/niazahmad/projects/kdc.
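
The clustering of pixels around centroids can be illustrated with a plain nearest-centroid assignment. This is a simplified sketch under the assumption that each pixel and each centroid is a point in a shared embedding space and that squared Euclidean distance is the matching criterion; KDC's actual MaskCentroid procedure may differ, and the function name is hypothetical.

```python
def assign_pixels_to_instances(pixel_embeddings, centroids):
    """Label each pixel embedding with the index of its nearest centroid."""
    if not centroids:
        raise ValueError("at least one centroid is required")
    labels = []
    for emb in pixel_embeddings:
        # Squared Euclidean distance from this pixel to every instance centroid.
        dists = [sum((e - c) ** 2 for e, c in zip(emb, cen)) for cen in centroids]
        labels.append(min(range(len(centroids)), key=dists.__getitem__))
    return labels
```

Because the assignment is a single pass over pixels with no iterative refinement, it stays cheap even when centroids move from frame to frame.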

Autoencoder-Based Hybrid Replay for Class-Incremental Learning

May 09, 2025

Abstract:In class-incremental learning (CIL), effective incremental learning strategies are essential to mitigate task confusion and catastrophic forgetting, especially as the number of tasks $t$ increases. Current exemplar replay strategies impose $\mathcal{O}(t)$ memory/compute complexities. We propose an autoencoder-based hybrid replay (AHR) strategy that leverages our new hybrid autoencoder (HAE) to function as a compressor to alleviate the requirement for large memory, achieving $\mathcal{O}(0.1 t)$ memory complexity in the worst case with a compute complexity of $\mathcal{O}(t)$ while accomplishing state-of-the-art performance. The decoder later recovers the exemplar data stored in the latent space, rather than in raw format. Additionally, HAE is designed for both discriminative and generative modeling, enabling classification and replay capabilities, respectively. HAE adopts the charged particle system energy minimization equations and repulsive force algorithm for the incremental embedding and distribution of new class centroids in its latent space. Our results demonstrate that AHR consistently outperforms recent baselines across multiple benchmarks while operating with the same memory/compute budgets. The source code is included in the supplementary material and will be open-sourced upon publication.

ABKD: Pursuing a Proper Allocation of the Probability Mass in Knowledge Distillation via $α$-$β$-Divergence

May 07, 2025

Abstract:Knowledge Distillation (KD) transfers knowledge from a large teacher model to a smaller student model by minimizing the divergence between their output distributions, typically using forward Kullback-Leibler divergence (FKLD) or reverse KLD (RKLD). It has become an effective training paradigm due to the broader supervision information provided by the teacher distribution compared to one-hot labels. We identify that the core challenge in KD lies in balancing two mode-concentration effects: the Hardness-Concentration effect, which refers to focusing on modes with large errors, and the Confidence-Concentration effect, which refers to focusing on modes with high student confidence. Through an analysis of how probabilities are reassigned during gradient updates, we observe that these two effects are entangled in FKLD and RKLD, but in extreme forms. Specifically, both are too weak in FKLD, causing the student to fail to concentrate on the target class. In contrast, both are too strong in RKLD, causing the student to overly emphasize the target class while ignoring the broader distributional information from the teacher. To address this imbalance, we propose ABKD, a generic framework with $\alpha$-$\beta$-divergence. Our theoretical results show that ABKD offers a smooth interpolation between FKLD and RKLD, achieving an effective trade-off between these effects. Extensive experiments on 17 language/vision datasets with 12 teacher-student settings confirm its efficacy. The code is available at https://github.com/ghwang-s/abkd.
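
The interpolation between forward and reverse KL can be checked numerically. The sketch below uses the standard $\alpha$-$\beta$-divergence from the divergence literature, $D_{AB}^{(\alpha,\beta)}(p \,\|\, q) = -\frac{1}{\alpha\beta} \sum_k \bigl( p_k^{\alpha} q_k^{\beta} - \frac{\alpha}{\alpha+\beta} p_k^{\alpha+\beta} - \frac{\beta}{\alpha+\beta} q_k^{\alpha+\beta} \bigr)$ for $\alpha, \beta, \alpha+\beta \neq 0$; whether ABKD uses exactly this parameterization is an assumption, and the function names are hypothetical. Here `p` plays the role of the teacher distribution and `q` the student.

```python
import math


def ab_divergence(p, q, alpha, beta):
    """Alpha-beta divergence between strictly positive distributions p and q."""
    if alpha == 0 or beta == 0 or alpha + beta == 0:
        raise ValueError("alpha, beta and alpha + beta must be non-zero")
    s = alpha + beta
    total = sum(
        pi ** alpha * qi ** beta - (alpha / s) * pi ** s - (beta / s) * qi ** s
        for pi, qi in zip(p, q)
    )
    return -total / (alpha * beta)


def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) for strictly positive distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
```

Taking `alpha=1` with `beta` close to zero approaches the forward KL, `kl_divergence(p, q)`, while `alpha` close to zero with `beta=1` approaches the reverse KL, `kl_divergence(q, p)`; intermediate settings trade off between the two.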

VISUALCENT: Visual Human Analysis using Dynamic Centroid Representation

Apr 26, 2025

Abstract:We introduce VISUALCENT, a unified human pose and instance segmentation framework that addresses the generalizability and scalability limitations of multi-person visual human analysis. VISUALCENT leverages a centroid-based, bottom-up keypoint detection paradigm and uses a Keypoint Heatmap incorporating Disk Representation and KeyCentroid to identify the optimal keypoint coordinates. For the unified segmentation task, an explicit keypoint is defined as a dynamic centroid called MaskCentroid to swiftly cluster pixels to a specific human instance during rapid changes in human body movement or in significantly occluded environments. Experimental results on the COCO and OCHuman datasets demonstrate VISUALCENT's accuracy and real-time performance advantages, outperforming existing methods in mAP scores and execution frame rate per second. The implementation is available on the project page.

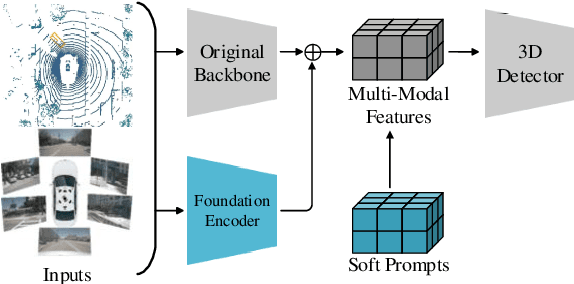

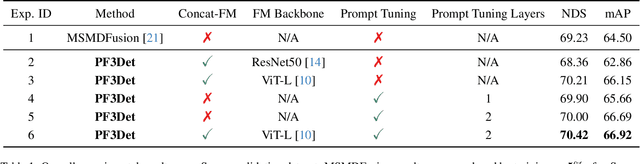

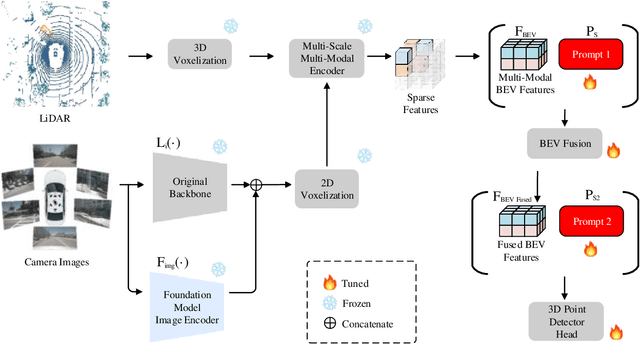

PF3Det: A Prompted Foundation Feature Assisted Visual LiDAR 3D Detector

Apr 04, 2025

Abstract:3D object detection is crucial for autonomous driving, leveraging both LiDAR point clouds for precise depth information and camera images for rich semantic information. Therefore, multi-modal methods that combine both modalities offer more robust detection results. However, efficiently fusing LiDAR points and images remains challenging due to the domain gaps. In addition, the performance of many models is limited by the amount of high-quality labeled data, which is expensive to create. Recent advances in foundation models, which use large-scale pre-training on different modalities, enable better multi-modal fusion. Combining prompt engineering techniques for efficient training, we propose the Prompted Foundational 3D Detector (PF3Det), which integrates foundation model encoders and soft prompts to enhance LiDAR-camera feature fusion. PF3Det achieves state-of-the-art results under limited training data, improving NDS by 1.19% and mAP by 2.42% on the nuScenes dataset, demonstrating its efficiency in 3D detection.
