Jay Patrikar

Co-Me: Confidence-Guided Token Merging for Visual Geometric Transformers

Nov 18, 2025

Abstract:We propose Confidence-Guided Token Merging (Co-Me), an acceleration mechanism for visual geometric transformers without retraining or finetuning the base model. Co-Me distills a light-weight confidence predictor to rank tokens by uncertainty and selectively merge low-confidence ones, effectively reducing computation while maintaining spatial coverage. Compared to similarity-based merging or pruning, the confidence signal in Co-Me reliably indicates regions emphasized by the transformer, enabling substantial acceleration without degrading performance. Co-Me applies seamlessly to various multi-view and streaming visual geometric transformers, achieving speedups that scale with sequence length. When applied to VGGT and MapAnything, Co-Me achieves up to $11.3\times$ and $7.2\times$ speedup, respectively, making visual geometric transformers practical for real-time 3D perception and reconstruction.
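As a rough illustration of the idea of ranking tokens by confidence and merging the low-confidence ones, the sketch below keeps the most confident tokens untouched and averages the rest in small groups. It is not the Co-Me implementation: the function name `merge_low_confidence`, the `keep_ratio` and `group` parameters, and the choice of plain averaging are all assumptions made for this example, and the confidence scores are simply supplied as inputs rather than produced by a distilled predictor.

```python
def merge_low_confidence(tokens, confidence, keep_ratio=0.5, group=2):
    """Keep high-confidence tokens; average low-confidence ones in groups.

    tokens:     list of equal-length feature vectors (lists of floats)
    confidence: one score per token (higher = more confident)
    keep_ratio: hypothetical fraction of tokens left unmerged
    group:      hypothetical number of low-confidence tokens per merged token
    """
    n = len(tokens)
    n_keep = max(1, int(n * keep_ratio))

    # Rank token indices from most to least confident.
    order = sorted(range(n), key=lambda i: confidence[i], reverse=True)
    keep = sorted(order[:n_keep])   # restore original (spatial) order
    low = sorted(order[n_keep:])    # low-confidence tokens, in original order

    merged = [list(tokens[i]) for i in keep]
    dim = len(tokens[0])
    for start in range(0, len(low), group):
        chunk = low[start:start + group]
        # Replace each chunk of low-confidence tokens with its mean vector,
        # so every region still contributes something to the sequence.
        merged.append([sum(tokens[i][d] for i in chunk) / len(chunk)
                       for d in range(dim)])
    return merged


tokens = [[0.0], [2.0], [4.0], [6.0]]
confidence = [0.9, 0.1, 0.8, 0.2]
shorter = merge_low_confidence(tokens, confidence)
print(len(tokens), "->", len(shorter))
```

Because attention cost grows with sequence length, shortening the sequence in this way is one plausible reason a speedup would scale with the number of input tokens.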

AutoODD: Agentic Audits via Bayesian Red Teaming in Black-Box Models

Sep 10, 2025

Abstract:Specialized machine learning models, regardless of architecture and training, are susceptible to failures in deployment. With their increasing use in high-risk situations, the ability to audit these models by determining their operational design domain (ODD) is crucial in ensuring safety and compliance. However, given the high-dimensional input spaces, this process often requires significant human resources and domain expertise. To alleviate this, we introduce AutoODD, an LLM-Agent centric framework for automated generation of semantically relevant test cases to search for failure modes in specialized black-box models. By leveraging LLM-Agents as tool orchestrators, we aim to fit an uncertainty-aware failure distribution model on a learned text-embedding manifold by projecting the high-dimensional input space onto a low-dimensional text-embedding latent space. The LLM-Agent is tasked with iteratively building the failure landscape by leveraging tools for generating test cases to probe the model-under-test (MUT) and recording the response. The agent also guides the search using tools to probe uncertainty estimates on the low-dimensional manifold. We demonstrate this process in a simple case using models trained with missing digits on the MNIST dataset and in the real-world setting of vision-based intruder detection for aerial vehicles.

Demonstrating ViSafe: Vision-enabled Safety for High-speed Detect and Avoid

May 08, 2025

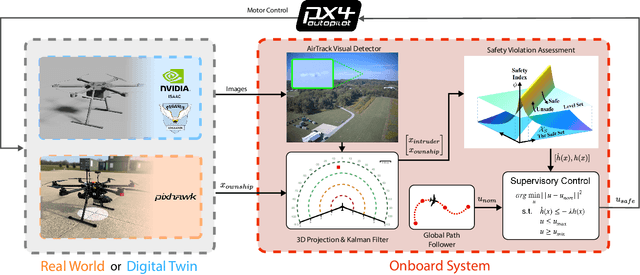

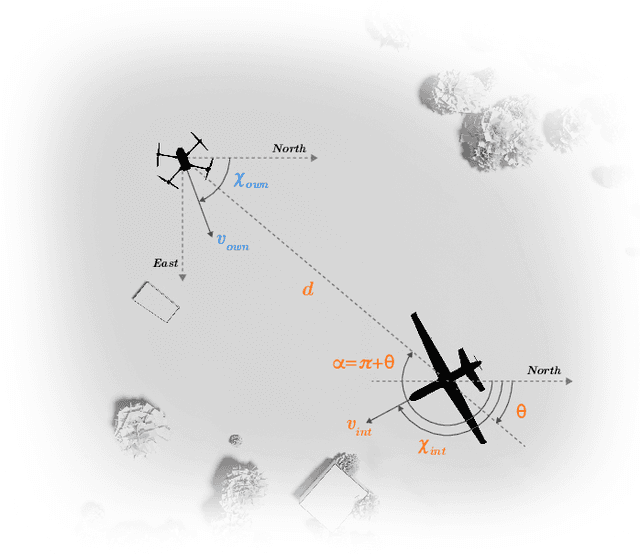

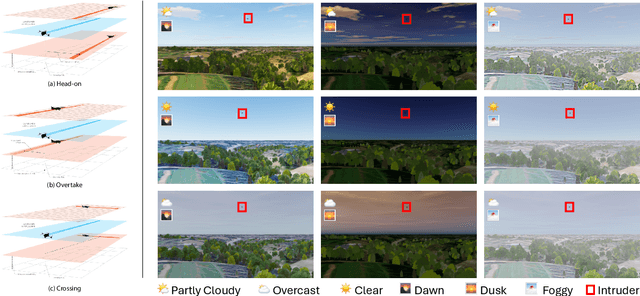

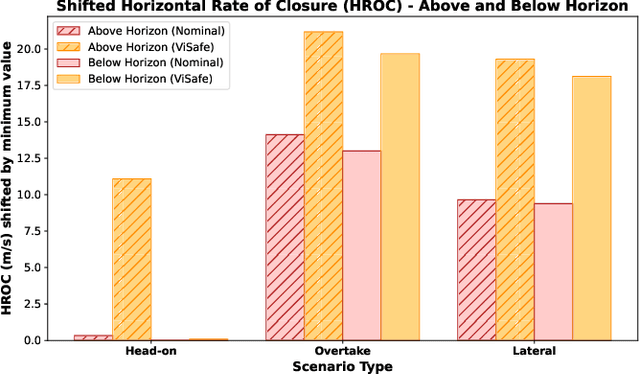

Abstract:Assured safe-separation is essential for achieving seamless high-density operation of airborne vehicles in a shared airspace. To equip resource-constrained aerial systems with this safety-critical capability, we present ViSafe, a high-speed vision-only airborne collision avoidance system. ViSafe offers a full-stack solution to the Detect and Avoid (DAA) problem by tightly integrating a learning-based edge-AI framework with a custom multi-camera hardware prototype designed under SWaP-C constraints. By leveraging perceptual input-focused control barrier functions (CBF) to design, encode, and enforce safety thresholds, ViSafe can provide provably safe runtime guarantees for self-separation in high-speed aerial operations. We evaluate ViSafe's performance through an extensive test campaign involving both simulated digital twins and real-world flight scenarios. By independently varying agent types, closure rates, interaction geometries, and environmental conditions (e.g., weather and lighting), we demonstrate that ViSafe consistently ensures self-separation across diverse scenarios. In first-of-its-kind real-world high-speed collision avoidance tests with closure rates reaching 144 km/h, ViSafe sets a new benchmark for vision-only autonomous collision avoidance, establishing a new standard for safety in high-speed aerial navigation.
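To make the role of a control barrier function concrete, here is a minimal one-dimensional sketch. It is not ViSafe's controller: it assumes a toy model in which the separation `d` to an intruder changes at a commanded rate `u`, a barrier `h(d) = d - d_min`, and the standard CBF condition `dh/dt >= -alpha * h`. The values of `d_min`, `alpha`, and the starting separation are hypothetical; the nominal closure rate of 40 m/s corresponds to the 144 km/h figure quoted in the abstract.

```python
def cbf_filter(u_nom, d, d_min=50.0, alpha=0.5):
    """Minimally modify the nominal command so the barrier stays non-negative.

    Toy model (assumption): d_dot = u, barrier h = d - d_min.
    The CBF condition h_dot >= -alpha * h becomes u >= -alpha * (d - d_min),
    and the closest command to u_nom that satisfies it is a simple max().
    """
    h = d - d_min
    return max(u_nom, -alpha * h)


def simulate(d0=200.0, u_nom=-40.0, dt=0.1, steps=600, d_min=50.0, alpha=0.5):
    """Euler-integrate the filtered system and return the closest approach."""
    d = d0
    closest = d
    for _ in range(steps):
        u = cbf_filter(u_nom, d, d_min, alpha)
        d += dt * u
        closest = min(closest, d)
    return closest


# Nominal behavior closes at 40 m/s (144 km/h); the filter slows the approach
# as the separation nears d_min so the threshold is never crossed.
print(simulate() >= 50.0)
```

Far from the threshold the filter leaves the nominal command untouched; it only intervenes once the nominal closure rate would violate the barrier condition.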

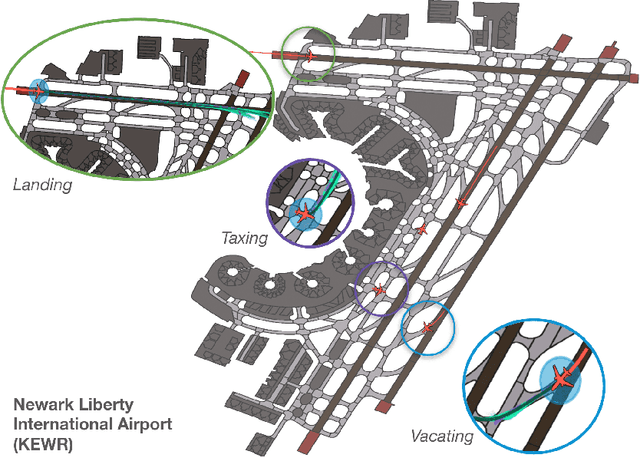

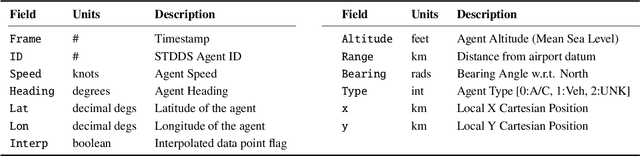

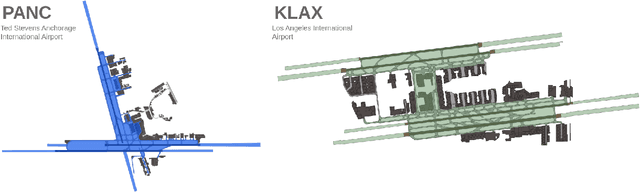

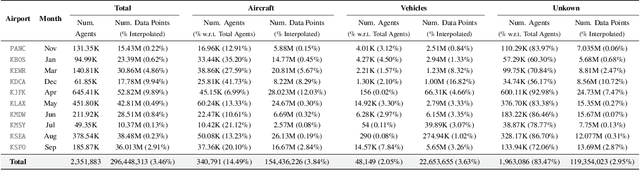

Amelia: A Large Model and Dataset for Airport Surface Movement Forecasting

Jul 30, 2024

Abstract:The growing demand for air travel requires technological advancements in air traffic management as well as mechanisms for monitoring and ensuring safe and efficient operations. In terminal airspaces, predictive models of future movements and traffic flows can help with proactive planning and efficient coordination; however, varying airport topologies, and interactions with other agents, among other factors, make accurate predictions challenging. Data-driven predictive models have shown promise for handling numerous variables to enable various downstream tasks, including collision risk assessment, taxi-out time prediction, departure metering, and emission estimations. While data-driven methods have shown improvements in these tasks, prior works lack large-scale curated surface movement datasets within the public domain and the development of generalizable trajectory forecasting models. In response to this, we propose two contributions: (1) Amelia-48, a large surface movement dataset collected using the System Wide Information Management (SWIM) Surface Movement Event Service (SMES). With data collection beginning in Dec 2022, the dataset provides more than a year's worth of SMES data (~30TB) and covers 48 airports within the US National Airspace System. In addition to releasing this data in the public domain, we also provide post-processing scripts and associated airport maps to enable research in the forecasting domain and beyond. (2) Amelia-TF model, a transformer-based next-token-prediction large multi-agent multi-airport trajectory forecasting model trained on 292 days or 9.4 billion tokens of position data encompassing 10 different airports with varying topology. The open-sourced model is validated on unseen airports with experiments showcasing the different prediction horizon lengths, ego-agent selection strategies, and training recipes to demonstrate the generalization capabilities.

RuleFuser: Injecting Rules in Evidential Networks for Robust Out-of-Distribution Trajectory Prediction

May 18, 2024

Abstract:Modern neural trajectory predictors in autonomous driving are developed using imitation learning (IL) from driving logs. Although IL benefits from its ability to glean nuanced and multi-modal human driving behaviors from large datasets, the resulting predictors often struggle with out-of-distribution (OOD) scenarios and with traffic rule compliance. On the other hand, classical rule-based predictors, by design, can predict traffic-rule-satisfying behaviors while being robust to OOD scenarios, but these predictors fail to capture nuances in agent-to-agent interactions and human drivers' intent. In this paper, we present RuleFuser, a posterior-net inspired evidential framework that combines neural predictors with classical rule-based predictors to draw on the complementary benefits of both, thereby striking a balance between performance and traffic rule compliance. The efficacy of our approach is demonstrated on the real-world nuPlan dataset where RuleFuser leverages the higher performance of the neural predictor in in-distribution (ID) scenarios and the higher safety offered by the rule-based predictor in OOD scenarios.
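One way to picture an evidential combination of a rule-based prior with a neural predictor is a Dirichlet update over a fixed set of candidate behaviors, sketched below. This is an assumption-laden illustration, not the RuleFuser architecture: the function `fuse`, the use of pseudo-counts for the rule-based prior, and the scalar `evidence` (standing in for how in-distribution the scene looks to the neural model) are all hypothetical.

```python
def fuse(rule_prior, neural_probs, evidence):
    """Dirichlet-style fusion of a rule-based prior with neural predictions.

    rule_prior:   pseudo-counts per candidate behavior from the rule-based
                  predictor (assumed representation)
    neural_probs: probabilities per candidate from the neural predictor
    evidence:     non-negative scalar; large for in-distribution scenes,
                  near zero for out-of-distribution scenes (assumption)
    Returns the mean of the resulting Dirichlet distribution.
    """
    alpha = [r + evidence * p for r, p in zip(rule_prior, neural_probs)]
    total = sum(alpha)
    return [a / total for a in alpha]


rule_prior = [2.0, 1.0, 1.0]     # rules favor candidate 0
neural_probs = [0.1, 0.8, 0.1]   # neural model favors candidate 1

ood = fuse(rule_prior, neural_probs, evidence=0.0)
in_dist = fuse(rule_prior, neural_probs, evidence=100.0)
print(ood.index(max(ood)), in_dist.index(max(in_dist)))
```

With little evidence the output falls back to the rule-based prior, and with ample evidence the neural prediction dominates, which mirrors the ID/OOD behavior described in the abstract.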

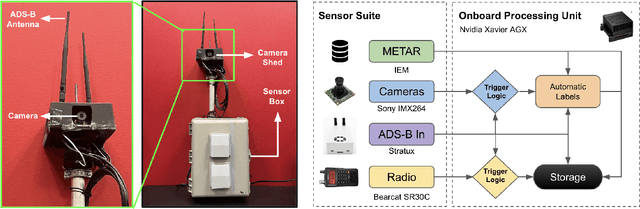

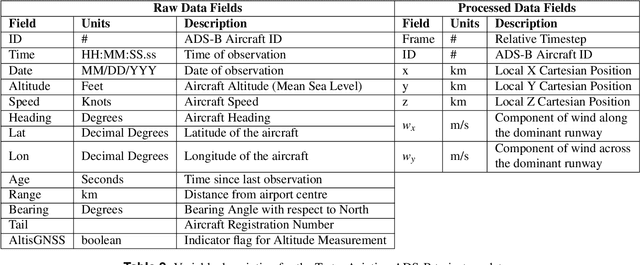

TartanAviation: Image, Speech, and ADS-B Trajectory Datasets for Terminal Airspace Operations

Mar 05, 2024

Abstract:We introduce TartanAviation, an open-source multi-modal dataset focused on terminal-area airspace operations. TartanAviation provides a holistic view of the airport environment by concurrently collecting image, speech, and ADS-B trajectory data using setups installed inside airport boundaries. The datasets were collected at both towered and non-towered airfields across multiple months to capture diversity in aircraft operations, seasons, aircraft types, and weather conditions. In total, TartanAviation provides 3.1M images, 3374 hours of Air Traffic Control speech data, and 661 days of ADS-B trajectory data. The data was filtered, processed, and validated to create a curated dataset. In addition to the dataset, we also open-source the code-base used to collect and pre-process the dataset, further enhancing accessibility and usability. We believe this dataset has many potential use cases and would be particularly vital in allowing AI and machine learning technologies to be integrated into air traffic control systems and advance the adoption of autonomous aircraft in the airspace.

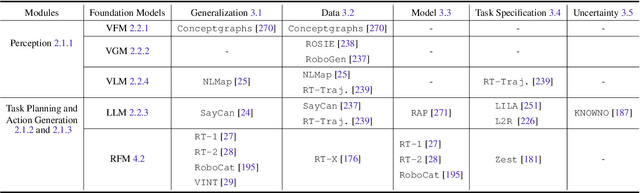

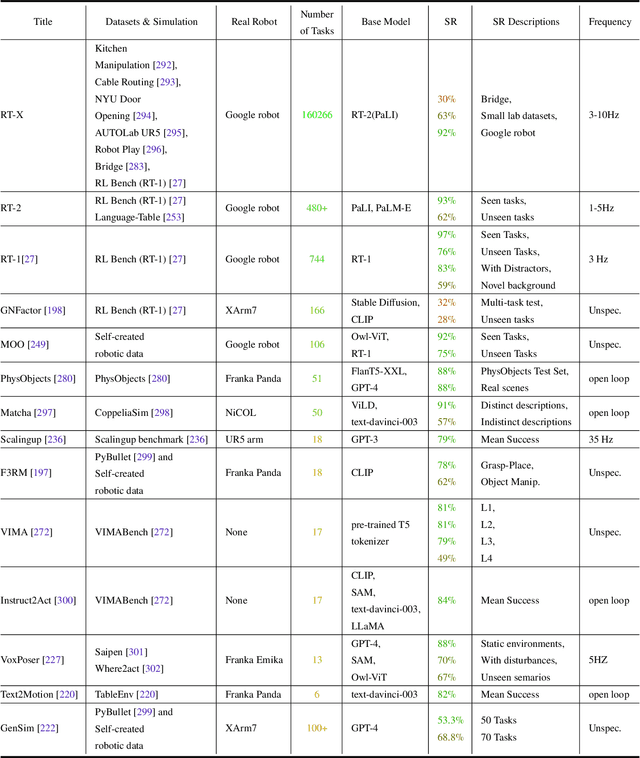

Toward General-Purpose Robots via Foundation Models: A Survey and Meta-Analysis

Dec 15, 2023

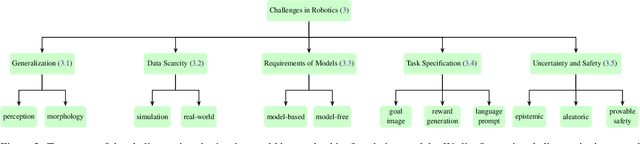

Abstract:Building general-purpose robots that can operate seamlessly in any environment, with any object, and using various skills to complete diverse tasks has been a long-standing goal in Artificial Intelligence. However, most existing robotic systems have been constrained, having been designed for specific tasks, trained on specific datasets, and deployed within specific environments. These systems usually require extensively labeled data, rely on task-specific models, have numerous generalization issues when deployed in real-world scenarios, and struggle to remain robust to distribution shifts. Motivated by the impressive open-set performance and content generation capabilities of web-scale, large-capacity pre-trained models (i.e., foundation models) in research fields such as Natural Language Processing (NLP) and Computer Vision (CV), we devote this survey to exploring (i) how these existing foundation models from NLP and CV can be applied to the field of robotics, and (ii) what a robotics-specific foundation model would look like. We begin by providing an overview of what constitutes a conventional robotic system and the fundamental barriers to making it universally applicable. Next, we establish a taxonomy to discuss current work exploring ways to leverage existing foundation models for robotics and develop ones catered to robotics. Finally, we discuss key challenges and promising future directions in using foundation models for enabling general-purpose robotic systems. We encourage readers to view our living GitHub repository of resources, including papers reviewed in this survey as well as related projects and repositories for developing foundation models for robotics.

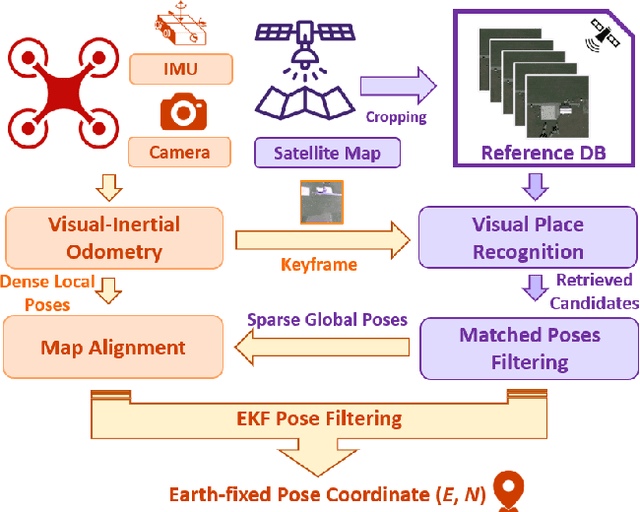

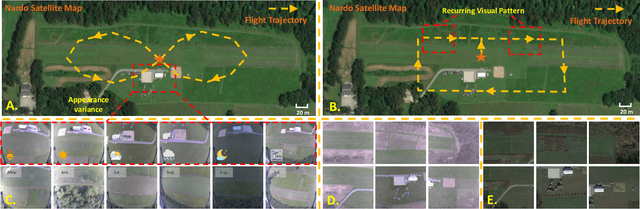

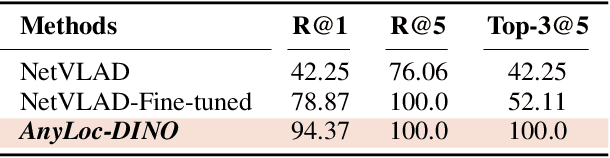

FoundLoc: Vision-based Onboard Aerial Localization in the Wild

Oct 25, 2023

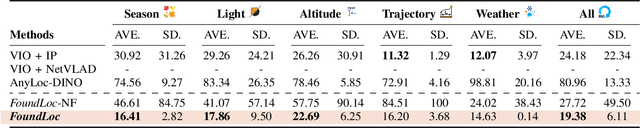

Abstract:Robust and accurate localization for Unmanned Aerial Vehicles (UAVs) is an essential capability to achieve autonomous, long-range flights. Current methods either rely heavily on GNSS, face limitations in vision-based localization due to appearance variances and stylistic dissimilarities between camera and reference imagery, or operate under the assumption of a known initial pose. In this paper, we develop a GNSS-denied localization approach for UAVs that harnesses both Visual-Inertial Odometry (VIO) and Visual Place Recognition (VPR) using a foundation model. This paper presents a novel vision-based pipeline that works exclusively with a nadir-facing camera, an Inertial Measurement Unit (IMU), and pre-existing satellite imagery for robust, accurate localization in varied environments and conditions. Our system demonstrates average localization accuracy within a $20$-meter range, with a minimum error below $1$ meter, under real-world conditions marked by drastic changes in environmental appearance and with no assumption of the vehicle's initial pose. The method is shown to be effective and robust, addressing the crucial need for reliable UAV localization in GNSS-denied environments, while also being computationally efficient enough to be deployed on resource-constrained platforms.

SoRTS: Learned Tree Search for Long Horizon Social Robot Navigation

Sep 22, 2023

Abstract:The fast-growing demand for fully autonomous robots in shared spaces calls for the development of trustworthy agents that can safely and seamlessly navigate in crowded environments. Recent models for motion prediction show promise in characterizing social interactions in such environments. Still, adapting them for navigation is challenging as they often suffer from generalization failures. Prompted by this, we propose Social Robot Tree Search (SoRTS), an algorithm for safe robot navigation in social domains. SoRTS aims to augment existing socially aware motion prediction models for long-horizon navigation using Monte Carlo Tree Search. We use social navigation in general aviation as a case study to evaluate our approach and further the research in full-scale aerial autonomy. In doing so, we introduce XPlaneROS, a high-fidelity aerial simulator that enables human-robot interaction. We use XPlaneROS to conduct a first-of-its-kind user study where 26 FAA-certified pilots interact with a human pilot, our algorithm, and its ablation. Our results, supported by statistical evidence, show that SoRTS exhibits a comparable performance to competent human pilots, significantly outperforming its ablation. Finally, we complement these results with a broad set of self-play experiments to showcase our algorithm's performance in scenarios with increasing complexity.
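For readers unfamiliar with Monte Carlo Tree Search, the selection step is commonly implemented with the UCB1 rule, sketched below. This is the generic textbook rule rather than SoRTS itself: the dictionary layout of a child node, the exploration constant `c`, and the use of the children's summed visit counts as the parent count are assumptions for this example, and the sketch does not show how a learned motion prediction model would guide the search.

```python
import math


def uct_select(children, c=1.4):
    """Return the index of the child that maximizes the UCB1 score.

    children: list of dicts with 'visits' (int) and 'value' (summed reward);
              this node layout is a hypothetical one chosen for the example.
    c:        exploration constant (assumed value)
    """
    parent_visits = sum(ch["visits"] for ch in children)

    def score(ch):
        if ch["visits"] == 0:
            return math.inf  # always try unvisited actions first
        exploit = ch["value"] / ch["visits"]
        explore = c * math.sqrt(math.log(parent_visits) / ch["visits"])
        return exploit + explore

    return max(range(len(children)), key=lambda i: score(children[i]))


children = [
    {"visits": 10, "value": 9.0},  # well explored, high average reward
    {"visits": 10, "value": 2.0},  # well explored, low average reward
]
print(uct_select(children))
```

The exploration term shrinks as an action is visited more often, so the search gradually concentrates on actions with high average return while still sampling alternatives.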

Pegasus Simulator: An Isaac Sim Framework for Multiple Aerial Vehicles Simulation

Jul 11, 2023

Abstract:Developing and testing novel control and motion planning algorithms for aerial vehicles can be a challenging task, with the robotics community relying more than ever on 3D simulation technologies to evaluate the performance of new algorithms in a variety of conditions and environments. In this work, we introduce the Pegasus Simulator, a modular framework implemented as an NVIDIA Isaac Sim extension that enables real-time simulation of multiple multirotor vehicles in photo-realistic environments, while providing out-of-the-box integration with the widely adopted PX4-Autopilot and ROS2 through its modular implementation and intuitive graphical user interface. To demonstrate some of its capabilities, a nonlinear controller was implemented and simulation results for two drones performing aggressive flight maneuvers are presented. Code and documentation for this framework are also provided as supplementary material.
