Sourish Ghosh

TartanAviation: Image, Speech, and ADS-B Trajectory Datasets for Terminal Airspace Operations

Mar 05, 2024

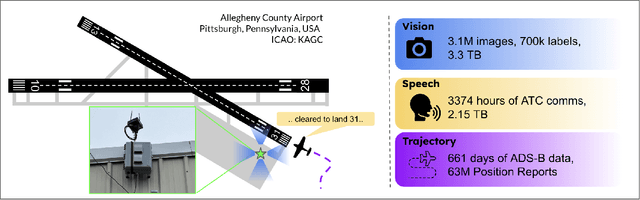

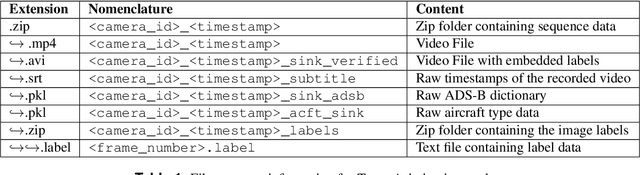

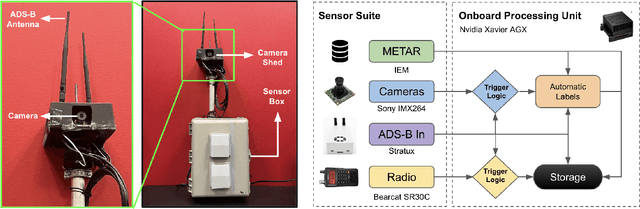

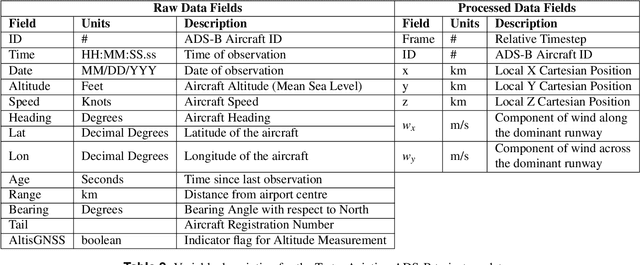

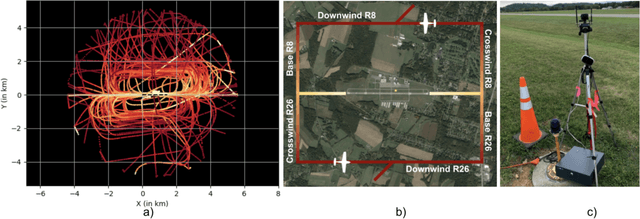

Abstract: We introduce TartanAviation, an open-source multi-modal dataset focused on terminal-area airspace operations. TartanAviation provides a holistic view of the airport environment by concurrently collecting image, speech, and ADS-B trajectory data using setups installed inside airport boundaries. The datasets were collected at both towered and non-towered airfields across multiple months to capture diversity in aircraft operations, seasons, aircraft types, and weather conditions. In total, TartanAviation provides 3.1M images, 3374 hours of Air Traffic Control speech data, and 661 days of ADS-B trajectory data. The data was filtered, processed, and validated to create a curated dataset. In addition to the dataset, we also open-source the code-base used to collect and pre-process the dataset, further enhancing accessibility and usability. We believe this dataset has many potential use cases and would be particularly vital in allowing AI and machine learning technologies to be integrated into air traffic control systems and advance the adoption of autonomous aircraft in the airspace.

Challenges in Close-Proximity Safe and Seamless Operation of Manned and Unmanned Aircraft in Shared Airspace

Nov 13, 2022

Abstract: We propose developing an integrated system that keeps autonomous unmanned aircraft safely separated and behaving as expected in conjunction with manned traffic. The main goal is to achieve safe manned-unmanned vehicle teaming to improve system performance, have the robot and human teammates learn from each other in various aircraft operations, and reduce the manning needs of manned aircraft. The proposed system anticipates and reacts to other aircraft using natural language instructions and can serve as a co-pilot or operate entirely autonomously. We point out the main technical challenges where improvements on the current state of the art are needed to enable Visual Flight Rules to fully autonomous aerial operations, bringing insights to these critical areas. Furthermore, we present an interactive demonstration in a prototypical scenario with one AI pilot and one human pilot sharing the same terminal airspace, interacting with each other using language, and landing safely on the same runway. We also show a demonstration of a vision-only aircraft detection system.

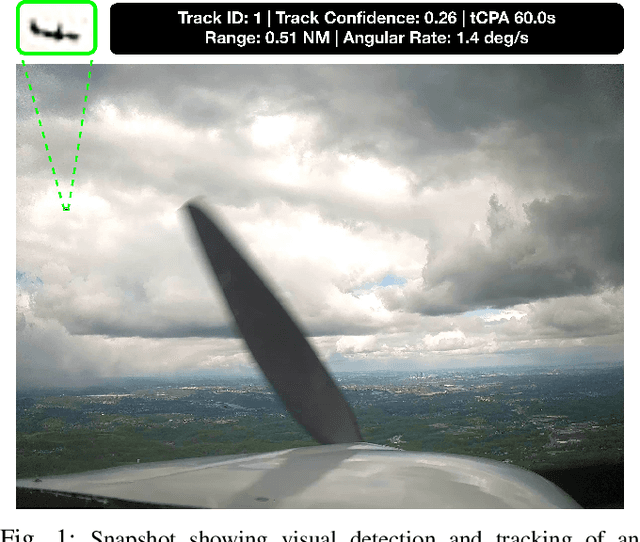

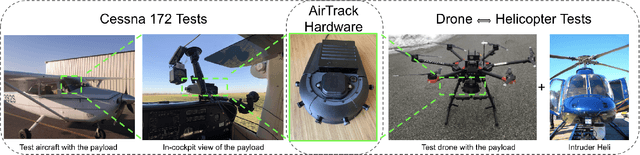

AirTrack: Onboard Deep Learning Framework for Long-Range Aircraft Detection and Tracking

Sep 26, 2022

Abstract: Detect-and-Avoid (DAA) capabilities are critical for safe operations of unmanned aircraft systems (UAS). This paper introduces AirTrack, a real-time vision-only detection and tracking framework that respects the size, weight, and power (SWaP) constraints of sUAS. Given the low signal-to-noise ratio (SNR) of distant aircraft, we propose using full-resolution images in a deep learning framework that aligns successive images to remove ego-motion. The aligned images are then used downstream in cascaded primary and secondary classifiers to improve detection and tracking performance on multiple metrics. We show that AirTrack outperforms state-of-the-art baselines on the Amazon Airborne Object Tracking (AOT) Dataset. Multiple real-world flight tests with a Cessna 172 interacting with general aviation traffic and additional near-collision flight tests with a Bell helicopter flying towards a UAS in a controlled setting showcase that the proposed approach satisfies the newly introduced ASTM F3442/F3442M standard for DAA. Empirical evaluations show that our system has a probability of track of more than 95% up to a range of 700 m. Video available at https://youtu.be/H3lL_Wjxjpw .

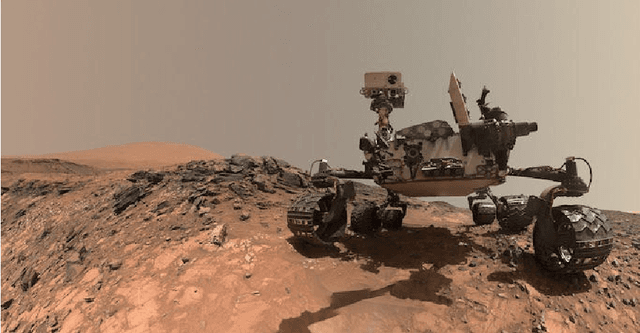

Fast Approximate Clearance Evaluation for Kinematically Constrained Articulated Suspension Systems

Jul 31, 2018

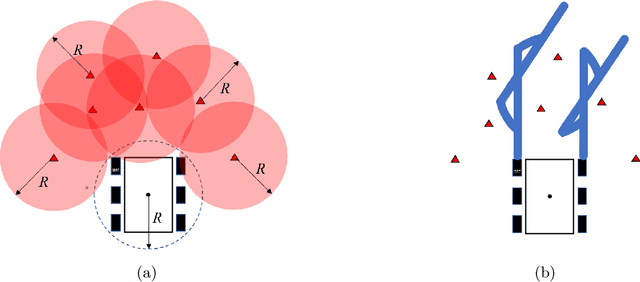

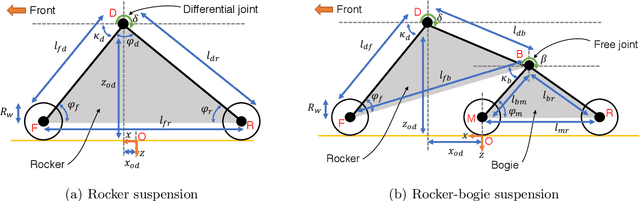

Abstract: In this paper, we present a lightweight collision detection algorithm for motion planning of planetary rovers with articulated suspension systems. Extraterrestrial path planning is challenging due to the combination of terrain roughness and severe limitations in computational resources. Path planning on cluttered and/or uneven terrains requires repeated collision detection on all the candidate paths at small intervals. Solving the exact collision detection problem for articulated suspension systems requires simulating the vehicle settling on the terrain, which involves an inverse-kinematics problem with iterative nonlinear optimization under geometric constraints. However, such expensive computation is intractable for slow spacecraft computers, such as the RAD750 that is used by the Curiosity Mars rover and the upcoming Mars 2020 rover. We propose the Approximate Clearance Evaluation (ACE) algorithm, which obtains conservative bounds on vehicle clearance, attitude, and suspension angles without iterative computation. It obtains those bounds by estimating the lowest and highest heights that each wheel may reach given the underlying terrain, and calculating the worst-case vehicle configuration associated with those extreme wheel heights. The bounds are guaranteed to be conservative, hence ensuring vehicle safety during autonomous navigation. ACE is planned to be used as part of the new onboard path planner of the Mars 2020 rover. This paper describes the algorithm in detail and validates our claim of conservatism and fast computation through experiments.
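
The idea of bounding each wheel's height and then taking the worst-case configuration can be illustrated with a toy example. The sketch below is not the ACE algorithm from the paper: it assumes a hypothetical planar two-wheel vehicle with a rigid body, a 1-D terrain height profile, and a belly whose height is the midpoint of the wheel heights plus a fixed offset. The names `height_range`, `clearance_bounds`, `wheelbase`, and `belly_height` are invented for illustration.

```python
import math

def height_range(terrain, lo, hi):
    """Lowest and highest terrain height inside a wheel footprint [lo, hi)."""
    cells = terrain[lo:hi]
    return min(cells), max(cells)

def clearance_bounds(terrain, rear, front, wheelbase, belly_height):
    """Non-iterative bounds for a hypothetical planar two-wheel vehicle.

    terrain      : list of heights along the path (1-D profile, assumption)
    rear, front  : (lo, hi) index ranges of the wheel footprints
    wheelbase    : distance between the wheels
    belly_height : vertical offset of the belly above the wheel midpoint
    Returns (pitch_min, pitch_max, clearance_lower_bound).
    """
    r_min, r_max = height_range(terrain, *rear)
    f_min, f_max = height_range(terrain, *front)

    def pitch(dz):
        # Clamp so asin stays defined on very rough terrain.
        return math.asin(max(-1.0, min(1.0, dz / wheelbase)))

    # Extreme pitch: one wheel at its lowest, the other at its highest.
    pitch_min = pitch(f_min - r_max)
    pitch_max = pitch(f_max - r_min)

    # Worst case for clearance: both wheels at their lowest heights
    # while the ground between the wheels is at its highest.
    belly_lowest = 0.5 * (r_min + f_min) + belly_height
    highest_ground = max(terrain[rear[1]:front[0]])
    return pitch_min, pitch_max, belly_lowest - highest_ground

terrain = [0.0, 0.1, 0.0, 0.3, 0.0, 0.2, 0.1]
p_min, p_max, clearance = clearance_bounds(
    terrain, rear=(0, 2), front=(5, 7), wheelbase=1.0, belly_height=0.5
)
```

Because every quantity comes from a minimum or maximum over a footprint, a single pass over the terrain cells is enough and no settling simulation is run. The result is pessimistic for this toy model, which is the trade-off the abstract describes.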

A Fuzzy Logic System to Analyze a Student's Lifestyle

Nov 08, 2016

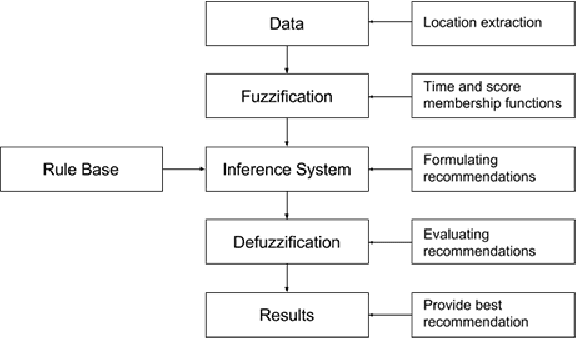

Abstract: A college student's life can be primarily categorized into domains such as education, health, social, and other activities, which may include daily chores and travelling time. Time management is crucial for every student. An awareness of one's daily time expenditure in these domains is therefore essential to maximize one's effective output. This paper presents how a mobile application using Fuzzy Logic and the Global Positioning System (GPS) analyzes a student's lifestyle and provides recommendations and suggestions based on the results.
