Hongbo Fu

StructLayoutFormer: Conditional Structured Layout Generation via Structure Serialization and Disentanglement

Oct 30, 2025

Abstract:Structured layouts are preferable in many 2D visual contents (e.g., GUIs, webpages) since the structural information allows convenient layout editing. Computational frameworks can help create structured layouts but require heavy labor input. Existing data-driven approaches are effective in automatically generating fixed layouts but fail to produce layout structures. We present StructLayoutFormer, a novel Transformer-based approach for conditional structured layout generation. We use a structure serialization scheme to represent structured layouts as sequences. To better control the structures of generated layouts, we disentangle the structural information from the element placements. Our approach is the first data-driven approach that achieves conditional structured layout generation and produces realistic layout structures explicitly. We compare our approach with existing data-driven layout generation approaches by including post-processing for structure extraction. Extensive experiments have shown that our approach exceeds these baselines in conditional structured layout generation. We also demonstrate that our approach is effective in extracting and transferring layout structures. The code is publicly available at https://github.com/Teagrus/StructLayoutFormer.

PartHOI: Part-based Hand-Object Interaction Transfer via Generalized Cylinders

Apr 29, 2025

Abstract:Learning-based methods to understand and model hand-object interactions (HOI) require a large amount of high-quality HOI data. One way to create HOI data is to transfer hand poses from a source object to another based on the objects' geometry. However, current methods for transferring hand poses between objects rely on shape matching, limiting the ability to transfer poses across different categories due to differences in their shapes and sizes. We observe that HOI often involves specific semantic parts of objects, which often have more consistent shapes across categories. In addition, constructing size-invariant correspondences between these parts is important for cross-category transfer. Based on these insights, we introduce a novel method PartHOI for part-based HOI transfer. Using a generalized cylinder representation to parameterize an object part's geometry, PartHOI establishes a robust geometric correspondence between object parts, and enables the transfer of contact points. Given the transferred points, we optimize a hand pose to fit the target object well. Qualitative and quantitative results demonstrate that our method can generalize HOI transfers well even for cross-category objects, and produce high-fidelity results that are superior to the existing methods.

SketchVideo: Sketch-based Video Generation and Editing

Mar 30, 2025

Abstract:Video generation and editing conditioned on text prompts or images have undergone significant advancements. However, challenges remain in accurately controlling global layout and geometry details solely by texts, and supporting motion control and local modification through images. In this paper, we aim to achieve sketch-based spatial and motion control for video generation and support fine-grained editing of real or synthetic videos. Based on the DiT video generation model, we propose a memory-efficient control structure with sketch control blocks that predict residual features of skipped DiT blocks. Sketches are drawn on one or two keyframes (at arbitrary time points) for easy interaction. To propagate such temporally sparse sketch conditions across all frames, we propose an inter-frame attention mechanism to analyze the relationship between the keyframes and each video frame. For sketch-based video editing, we design an additional video insertion module that maintains consistency between the newly edited content and the original video's spatial feature and dynamic motion. During inference, we use latent fusion for the accurate preservation of unedited regions. Extensive experiments demonstrate that our SketchVideo achieves superior performance in controllable video generation and editing.

GCRayDiffusion: Pose-Free Surface Reconstruction via Geometric Consistent Ray Diffusion

Mar 28, 2025

Abstract:Accurate surface reconstruction from unposed images is crucial for efficient 3D object or scene creation. However, it remains challenging, particularly for the joint camera pose estimation. Previous approaches have achieved impressive pose-free surface reconstruction results in dense-view settings, but could easily fail for sparse-view scenarios without sufficient visual overlap. In this paper, we propose a new technique for pose-free surface reconstruction, which follows triplane-based signed distance field (SDF) learning but regularizes the learning by explicit points sampled from ray-based diffusion of camera pose estimation. Our key contribution is a novel Geometric Consistent Ray Diffusion model (GCRayDiffusion), where we represent camera poses as neural bundle rays and regress the distribution of noisy rays via a diffusion model. More importantly, we further condition the denoising process of GCRayDiffusion using the triplane-based SDF of the entire scene, which provides effective 3D consistent regularization to achieve multi-view consistent camera pose estimation. Finally, we incorporate GCRayDiffusion into the triplane-based SDF learning by introducing on-surface geometric regularization from the sampling points of the neural bundle rays, which leads to highly accurate pose-free surface reconstruction results even for sparse-view inputs. Extensive evaluations on public datasets show that our GCRayDiffusion achieves more accurate camera pose estimation than previous approaches, with geometrically more consistent surface reconstruction results, especially given sparse-view inputs.

Real-time 3D-aware Portrait Video Relighting

Oct 24, 2024

Abstract:Synthesizing realistic videos of talking faces under custom lighting conditions and viewing angles benefits various downstream applications like video conferencing. However, most existing relighting methods are either time-consuming or unable to adjust the viewpoints. In this paper, we present the first real-time 3D-aware method for relighting in-the-wild videos of talking faces based on Neural Radiance Fields (NeRF). Given an input portrait video, our method can synthesize talking faces under both novel views and novel lighting conditions with a photo-realistic and disentangled 3D representation. Specifically, we infer an albedo tri-plane, as well as a shading tri-plane based on a desired lighting condition for each video frame with fast dual-encoders. We also leverage a temporal consistency network to ensure smooth transitions and reduce flickering artifacts. Our method runs at 32.98 fps on consumer-level hardware and achieves state-of-the-art results in terms of reconstruction quality, lighting error, lighting instability, temporal consistency and inference speed. We demonstrate the effectiveness and interactivity of our method on various portrait videos with diverse lighting and viewing conditions.

Sketch2Human: Deep Human Generation with Disentangled Geometry and Appearance Control

Apr 24, 2024

Abstract:Geometry- and appearance-controlled full-body human image generation is an interesting but challenging task. Existing solutions are either unconditional or dependent on coarse conditions (e.g., pose, text), thus lacking explicit geometry and appearance control of body and garment. Sketching offers such editing ability and has been adopted in various sketch-based face generation and editing solutions. However, directly adapting sketch-based face generation to full-body generation often fails to produce high-fidelity and diverse results due to the high complexity and diversity in the pose, body shape, and garment shape and texture. Recent geometrically controllable diffusion-based methods mainly rely on prompts to generate appearance and it is hard to balance the realism and the faithfulness of their results to the sketch when the input is coarse. This work presents Sketch2Human, the first system for controllable full-body human image generation guided by a semantic sketch (for geometry control) and a reference image (for appearance control). Our solution is based on the latent space of StyleGAN-Human with inverted geometry and appearance latent codes as input. Specifically, we present a sketch encoder trained with a large synthetic dataset sampled from StyleGAN-Human's latent space and directly supervised by sketches rather than real images. Considering the entangled information of partial geometry and texture in StyleGAN-Human and the absence of disentangled datasets, we design a novel training scheme that creates geometry-preserved and appearance-transferred training data to tune a generator to achieve disentangled geometry and appearance control. Although our method is trained with synthetic data, it can handle hand-drawn sketches as well. Qualitative and quantitative evaluations demonstrate the superior performance of our method to state-of-the-art methods.

MonoHair: High-Fidelity Hair Modeling from a Monocular Video

Mar 27, 2024

Abstract:Undoubtedly, high-fidelity 3D hair is crucial for achieving realism, artistic expression, and immersion in computer graphics. While existing 3D hair modeling methods have achieved impressive performance, the challenge of achieving high-quality hair reconstruction persists: they either require strict capture conditions, making practical applications difficult, or heavily rely on learned prior data, obscuring fine-grained details in images. To address these challenges, we propose MonoHair, a generic framework to achieve high-fidelity hair reconstruction from a monocular video, without specific requirements for environments. Our approach bifurcates the hair modeling process into two main stages: precise exterior reconstruction and interior structure inference. The exterior is meticulously crafted using our Patch-based Multi-View Optimization (PMVO). This method strategically collects and integrates hair information from multiple views, independent of prior data, to produce a high-fidelity exterior 3D line map. This map not only captures intricate details but also facilitates the inference of the hair's inner structure. For the interior, we employ a data-driven, multi-view 3D hair reconstruction method. This method utilizes 2D structural renderings derived from the reconstructed exterior, mirroring the synthetic 2D inputs used during training. This alignment effectively bridges the domain gap between our training data and real-world data, thereby enhancing the accuracy and reliability of our interior structure inference. Lastly, we generate a strand model and resolve the directional ambiguity by our hair growth algorithm. Our experiments demonstrate that our method exhibits robustness across diverse hairstyles and achieves state-of-the-art performance. For more results, please refer to our project page https://keyuwu-cs.github.io/MonoHair/.

CustomSketching: Sketch Concept Extraction for Sketch-based Image Synthesis and Editing

Feb 27, 2024

Abstract:Personalization techniques for large text-to-image (T2I) models allow users to incorporate new concepts from reference images. However, existing methods primarily rely on textual descriptions, leading to limited control over customized images and failing to support fine-grained and local editing (e.g., shape, pose, and details). In this paper, we identify sketches as an intuitive and versatile representation that can facilitate such control, e.g., contour lines capturing shape information and flow lines representing texture. This motivates us to explore a novel task of sketch concept extraction: given one or more sketch-image pairs, we aim to extract a special sketch concept that bridges the correspondence between the images and sketches, thus enabling sketch-based image synthesis and editing at a fine-grained level. To accomplish this, we introduce CustomSketching, a two-stage framework for extracting novel sketch concepts. Considering that an object can often be depicted by a contour for general shapes and additional strokes for internal details, we introduce a dual-sketch representation to reduce the inherent ambiguity in sketch depiction. We employ a shape loss and a regularization loss to balance fidelity and editability during optimization. Through extensive experiments, a user study, and several applications, we show our method is effective and superior to the adapted baselines.

Mesh-based Gaussian Splatting for Real-time Large-scale Deformation

Feb 07, 2024

Abstract:Neural implicit representations, including Neural Distance Fields and Neural Radiance Fields, have demonstrated significant capabilities for reconstructing surfaces with complicated geometry and topology, and generating novel views of a scene. Nevertheless, it is challenging for users to directly deform or manipulate these implicit representations with large deformations in real time. Gaussian Splatting (GS) has recently become a promising method with explicit geometry for representing static scenes and facilitating high-quality and real-time synthesis of novel views. However, it cannot be easily deformed due to the use of discrete Gaussians and lack of explicit topology. To address this, we develop a novel GS-based method that enables interactive deformation. Our key idea is to design an innovative mesh-based GS representation, which is integrated into Gaussian learning and manipulation. 3D Gaussians are defined over an explicit mesh, and they are bound with each other: the rendering of 3D Gaussians guides the mesh face split for adaptive refinement, and the mesh face split directs the splitting of 3D Gaussians. Moreover, the explicit mesh constraints help regularize the Gaussian distribution, suppressing poor-quality Gaussians (e.g., misaligned Gaussians, long-narrow shaped Gaussians), thus enhancing visual quality and avoiding artifacts during deformation. Based on this representation, we further introduce a large-scale Gaussian deformation technique to enable deformable GS, which alters the parameters of 3D Gaussians according to the manipulation of the associated mesh. Our method benefits from existing mesh deformation datasets for more realistic data-driven Gaussian deformation. Extensive experiments show that our approach achieves high-quality reconstruction and effective deformation, while maintaining the promising rendering results at a high frame rate (65 FPS on average).

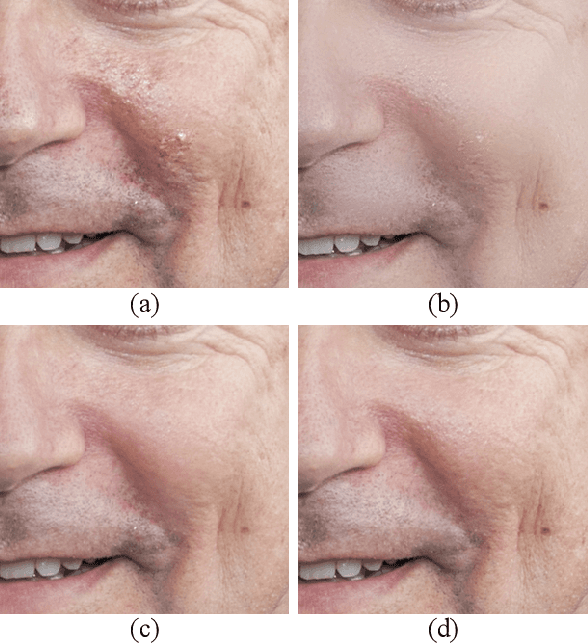

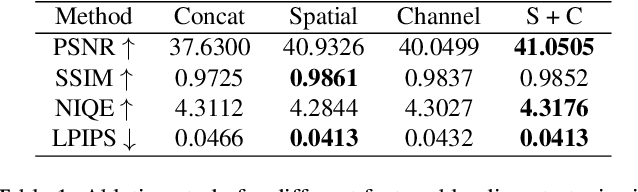

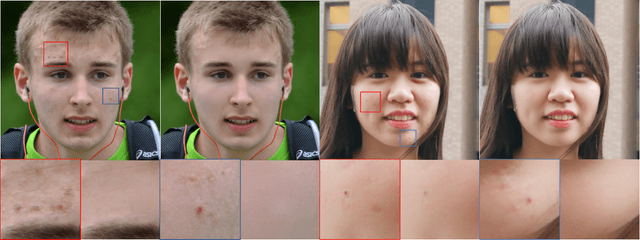

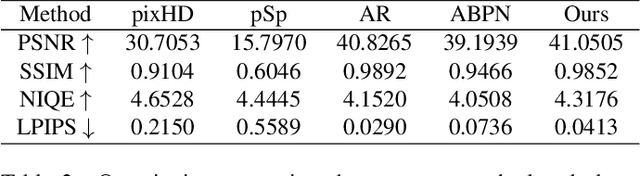

StyleRetoucher: Generalized Portrait Image Retouching with GAN Priors

Dec 22, 2023

Abstract:Creating fine-retouched portrait images is tedious and time-consuming even for professional artists. There exist automatic retouching methods, but they either suffer from over-smoothing artifacts or lack generalization ability. To address such issues, we present StyleRetoucher, a novel automatic portrait image retouching framework, leveraging StyleGAN's generation and generalization ability to improve an input portrait image's skin condition while preserving its facial details. Harnessing the priors of pretrained StyleGAN, our method shows superior robustness: (a) performing stably with fewer training samples and (b) generalizing well on out-of-domain data. Moreover, by blending the spatial features of the input image and intermediate features of the StyleGAN layers, our method preserves the input characteristics to the largest extent. We further propose a novel blemish-aware feature selection mechanism to effectively identify and remove the skin blemishes, improving the image skin condition. Qualitative and quantitative evaluations validate the great generalization capability of our method. Further experiments show StyleRetoucher's superior performance to the alternative solutions in the image retouching task. We also conduct a user perceptive study to confirm the superior retouching performance of our method over the existing state-of-the-art alternatives.
