Chanyoung Park

Self-EvolveRec: Self-Evolving Recommender Systems with LLM-based Directional Feedback

Feb 13, 2026

Abstract:Traditional methods for automating recommender system design, such as Neural Architecture Search (NAS), are often constrained by a fixed search space defined by human priors, limiting innovation to pre-defined operators. While recent LLM-driven code evolution frameworks shift the target from a fixed search space to open-ended program spaces, they primarily rely on scalar metrics (e.g., NDCG, Hit Ratio) that fail to provide qualitative insights into model failures or directional guidance for improvement. To address this, we propose Self-EvolveRec, a novel framework that establishes a directional feedback loop by integrating a User Simulator for qualitative critiques and a Model Diagnosis Tool for quantitative internal verification. Furthermore, we introduce a Diagnosis Tool - Model Co-Evolution strategy to ensure that evaluation criteria dynamically adapt as the recommendation architecture evolves. Extensive experiments demonstrate that Self-EvolveRec significantly outperforms state-of-the-art NAS and LLM-driven code evolution baselines in both recommendation performance and user satisfaction. Our code is available at https://github.com/Sein-Kim/self_evolverec.

PathCRF: Ball-Free Soccer Event Detection via Possession Path Inference from Player Trajectories

Feb 12, 2026

Abstract:Despite recent advances in AI, event data collection in soccer still relies heavily on labor-intensive manual annotation. Although prior work has explored automatic event detection using player and ball trajectories, ball tracking also remains difficult to scale due to high infrastructural and operational costs. As a result, comprehensive data collection in soccer is largely confined to top-tier competitions, limiting the broader adoption of data-driven analysis in this domain. To address this challenge, this paper proposes PathCRF, a framework for detecting on-ball soccer events using only player tracking data. We model player trajectories as a fully connected dynamic graph and formulate event detection as the problem of selecting exactly one edge corresponding to the current possession state at each time step. To ensure logical consistency of the resulting edge sequence, we employ a Conditional Random Field (CRF) that forbids impossible transitions between consecutive edges. Both emission and transition scores are dynamically computed from edge embeddings produced by a Set Attention-based backbone architecture. During inference, the most probable edge sequence is obtained via Viterbi decoding, and events such as ball controls or passes are detected whenever the selected edge changes between adjacent time steps. Experiments show that PathCRF produces accurate, logically consistent possession paths, enabling reliable downstream analyses while substantially reducing the need for manual event annotation. The source code is available at https://github.com/hyunsungkim-ds/pathcrf.git.
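The decoding step described in the PathCRF abstract can be illustrated with a minimal sketch of generic Viterbi decoding under forbidden transitions. This is not the authors' implementation: the function names `viterbi` and `change_points`, the three integer states standing in for graph edges, and the hand-written score tables are all assumptions made for illustration. In the paper, emission and transition scores come from learned edge embeddings, whereas here they are fixed numbers.

```python
import math

NEG_INF = -math.inf  # score used to mark a forbidden transition


def viterbi(emissions, transitions):
    """Return the highest-scoring state sequence.

    emissions[t][s]   : score of state s at time step t
    transitions[p][s] : score of moving from state p to state s
                        (NEG_INF means the move is forbidden)
    """
    n_states = len(emissions[0])
    score = list(emissions[0])  # best score of any path ending in each state
    backptr = []                # backptr[t-1][s] = best predecessor of s at step t
    for t in range(1, len(emissions)):
        new_score, ptr = [], []
        for s in range(n_states):
            best_prev = max(range(n_states),
                            key=lambda p: score[p] + transitions[p][s])
            new_score.append(score[best_prev] + transitions[best_prev][s]
                             + emissions[t][s])
            ptr.append(best_prev)
        score = new_score
        backptr.append(ptr)
    # Backtrack from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for ptr in reversed(backptr):
        state = ptr[state]
        path.append(state)
    path.reverse()
    return path


def change_points(path):
    """Time steps at which the selected state differs from the previous one."""
    return [t for t in range(1, len(path)) if path[t] != path[t - 1]]


# Hypothetical toy input: 3 states, 3 time steps, transition 0 -> 2 forbidden.
emissions = [[2, 0, 0], [0, 0, 3], [0, 0, 3]]
transitions = [[0, 0, NEG_INF], [0, 0, 0], [0, 0, 0]]
path = viterbi(emissions, transitions)
events = change_points(path)
```

In this toy input, picking the best state independently at each step would give the sequence 0, 2, 2, which uses the forbidden move from state 0 to state 2. The decoder instead returns a sequence that avoids it, and `change_points` marks the step where the selected state changes, which corresponds to how the abstract describes events being detected.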

Progressive Multi-Agent Reasoning for Biological Perturbation Prediction

Feb 07, 2026

Abstract:Predicting gene regulation responses to biological perturbations requires reasoning about underlying biological causalities. While large language models (LLMs) show promise for such tasks, they are often overwhelmed by the entangled nature of high-dimensional perturbation results. Moreover, recent works have primarily focused on genetic perturbations in single-cell experiments, leaving bulk-cell chemical perturbations, which are central to drug discovery, largely unexplored. Motivated by this, we present LINCSQA, a novel benchmark for predicting target gene regulation under complex chemical perturbations in bulk-cell environments. We further propose PBio-Agent, a multi-agent framework that integrates difficulty-aware task sequencing with iterative knowledge refinement. Our key insight is that genes affected by the same perturbation share causal structure, allowing confidently predicted genes to contextualize more challenging cases. The framework employs specialized agents enriched with biological knowledge graphs, while a synthesis agent integrates outputs and specialized judges ensure logical coherence. PBio-Agent outperforms existing baselines on both LINCSQA and PerturbQA, enabling even smaller models to predict and explain complex biological processes without additional training.

MSP-LLM: A Unified Large Language Model Framework for Complete Material Synthesis Planning

Feb 07, 2026

Abstract:Material synthesis planning (MSP) remains a fundamental and underexplored bottleneck in AI-driven materials discovery, as it requires not only identifying suitable precursor materials but also designing coherent sequences of synthesis operations to realize a target material. Although several AI-based approaches have been proposed to address isolated subtasks of MSP, a unified methodology for solving the entire MSP task has yet to be established. We propose MSP-LLM, a unified LLM-based framework that formulates MSP as a structured process composed of two constituent subproblems: precursor prediction (PP) and synthesis operation prediction (SOP). Our approach introduces a discrete material class as an intermediate decision variable that organizes both tasks into a chemically consistent decision chain. For SOP, we further incorporate hierarchical precursor types as synthesis-relevant inductive biases and employ an explicit conditioning strategy that preserves precursor-related information in the autoregressive decoding state. Extensive experiments show that MSP-LLM consistently outperforms existing methods on both PP and SOP, as well as on the complete MSP task, demonstrating an effective and scalable framework for MSP that can accelerate real-world materials discovery.

Electron-Informed Coarse-Graining Molecular Representation Learning for Real-World Molecular Physics

Feb 06, 2026

Abstract:Various representation learning methods for molecular structures have been devised to accelerate data-driven chemistry. However, the representation capabilities of existing methods are essentially limited to atom-level information, which is not sufficient to describe real-world molecular physics. Although electron-level information can provide fundamental knowledge about chemical compounds beyond the atom-level information, obtaining the electron-level information in real-world molecules is computationally impractical and sometimes infeasible. We propose a method for learning electron-informed molecular representations without additional computation costs by transferring readily accessible electron-level information about small molecules to large molecules of our interest. The proposed method achieved state-of-the-art prediction accuracy on extensive benchmark datasets containing experimentally observed molecular physics. The source code for HEDMoL is available at https://github.com/ngs00/HEDMoL.

PULSE: Socially-Aware User Representation Modeling Toward Parameter-Efficient Graph Collaborative Filtering

Jan 21, 2026

Abstract:Graph-based social recommendation (SocialRec) has emerged as a powerful extension of graph collaborative filtering (GCF), which leverages graph neural networks (GNNs) to capture multi-hop collaborative signals from user-item interactions. These methods enrich user representations by incorporating social network information into GCF, thereby integrating additional collaborative signals from social relations. However, existing GCF and graph-based SocialRec approaches face significant challenges: they incur high computational costs and suffer from limited scalability due to the large number of parameters required to assign explicit embeddings to all users and items. In this work, we propose PULSE (Parameter-efficient User representation Learning with Social Knowledge), a framework that addresses this limitation by constructing user representations from socially meaningful signals without creating an explicit learnable embedding for each user. PULSE reduces the parameter size by up to 50% compared to the most lightweight GCF baseline. Beyond parameter efficiency, our method achieves state-of-the-art performance, outperforming 13 GCF and graph-based social recommendation baselines across varying levels of interaction sparsity, from cold-start to highly active users, through a time- and memory-efficient modeling process.

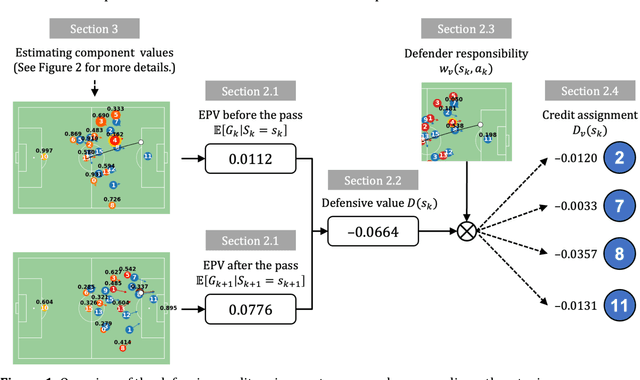

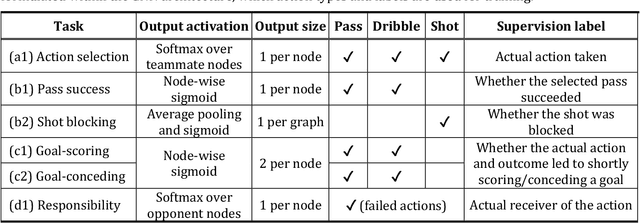

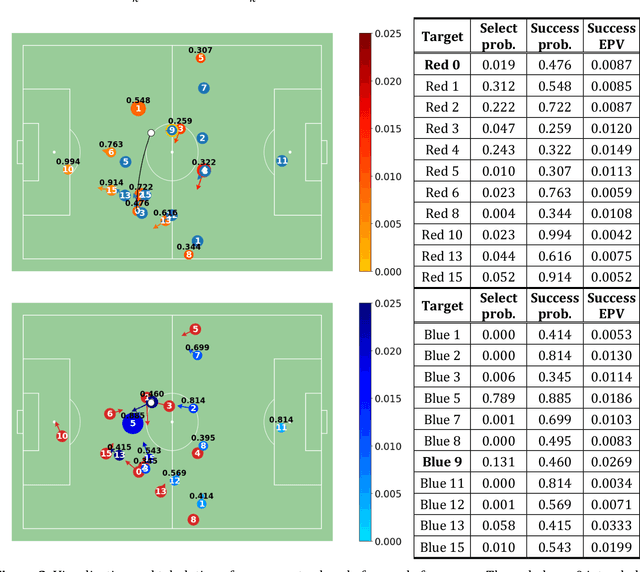

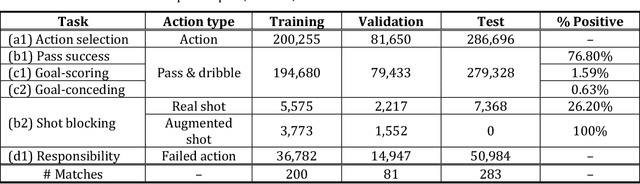

Better Prevent than Tackle: Valuing Defense in Soccer Based on Graph Neural Networks

Dec 11, 2025

Abstract:Evaluating defensive performance in soccer remains challenging, as effective defending is often expressed not through visible on-ball actions such as interceptions and tackles, but through preventing dangerous opportunities before they arise. Existing approaches have largely focused on valuing on-ball actions, leaving much of defenders' true impact unmeasured. To address this gap, we propose DEFCON (DEFensive CONtribution evaluator), a comprehensive framework that quantifies player-level defensive contributions for every attacking situation in soccer. Leveraging Graph Attention Networks, DEFCON estimates the success probability and expected value of each attacking option, along with each defender's responsibility for stopping it. These components yield an Expected Possession Value (EPV) for the attacking team before and after each action, and DEFCON assigns positive or negative credits to defenders according to whether they reduced or increased the opponent's EPV. Trained on 2023-24 and evaluated on 2024-25 Eredivisie event and tracking data, DEFCON's aggregated player credits exhibit strong positive correlations with market valuations. Finally, we showcase several practical applications, including in-game timelines of defensive contributions, spatial analyses across pitch zones, and pairwise summaries of attacker-defender interactions.

Adaptive Graph Rewiring to Mitigate Over-Squashing in Mesh-Based GNNs for Fluid Dynamics Simulations

Nov 16, 2025

Abstract:Mesh-based simulation using Graph Neural Networks (GNNs) has been recognized as a promising approach for modeling fluid dynamics. However, mesh refinement techniques, which allocate finer resolution to regions with steep gradients, can induce the over-squashing problem in mesh-based GNNs, which prevents the capture of long-range physical interactions. Conventional graph rewiring methods attempt to alleviate this issue by adding new edges, but they typically complete all rewiring operations before applying them to the GNN. These approaches are physically unrealistic, as they assume instantaneous interactions between distant nodes and disregard the distance information between particles. To address these limitations, we propose a novel framework, called Adaptive Graph Rewiring in Mesh-Based Graph Neural Networks (AdaMeshNet), that introduces an adaptive rewiring process into the message-passing procedure to model the gradual propagation of physical interactions. Our method computes a rewiring delay score for bottleneck nodes in the mesh graph, based on the shortest-path distance and the velocity difference. Using this score, it dynamically selects the message-passing layer at which new edges are rewired, which can lead to adaptive rewiring in a mesh graph. Extensive experiments on mesh-based fluid simulations demonstrate that AdaMeshNet outperforms conventional rewiring methods, effectively modeling the sequential nature of physical interactions and enabling more accurate predictions.

R1-ACT: Efficient Reasoning Model Safety Alignment by Activating Safety Knowledge

Aug 01, 2025

Abstract:Although large reasoning models (LRMs) have demonstrated impressive capabilities on complex tasks, recent studies reveal that these models frequently fulfill harmful user instructions, raising significant safety concerns. In this paper, we investigate the underlying cause of LRM safety risks and find that models already possess sufficient safety knowledge but fail to activate it during reasoning. Based on this insight, we propose R1-Act, a simple and efficient post-training method that explicitly triggers safety knowledge through a structured reasoning process. R1-Act achieves strong safety improvements while preserving reasoning performance, outperforming prior alignment methods. Notably, it requires only 1,000 training examples and 90 minutes of training on a single RTX A6000 GPU. Extensive experiments across multiple LRM backbones and sizes demonstrate the robustness, scalability, and practical efficiency of our approach.

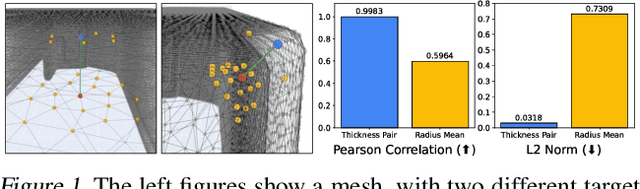

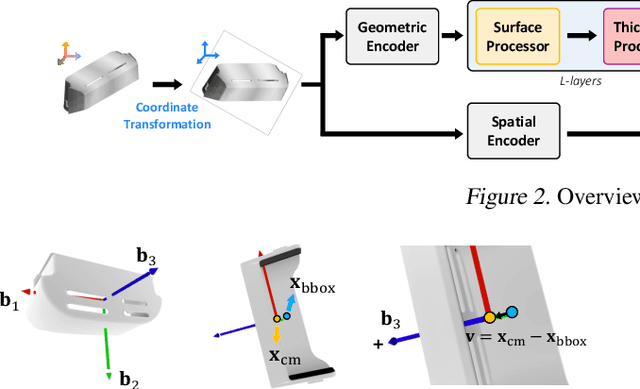

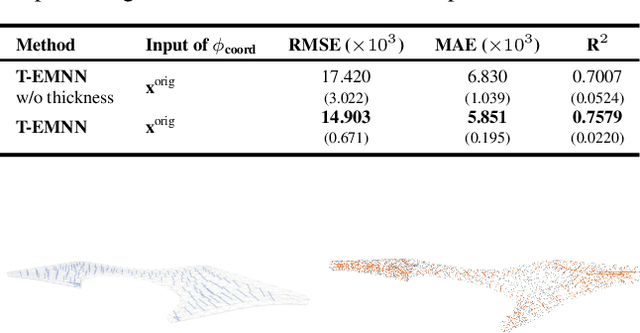

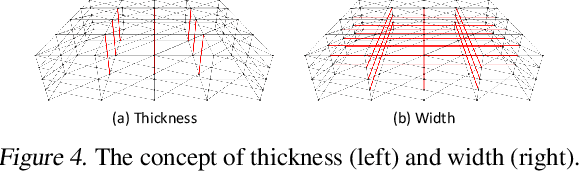

Thickness-aware E(3)-Equivariant 3D Mesh Neural Networks

May 27, 2025

Abstract:Mesh-based 3D static analysis methods have recently emerged as efficient alternatives to traditional computational numerical solvers, significantly reducing computational costs and runtime for various physics-based analyses. However, these methods primarily focus on surface topology and geometry, often overlooking the inherent thickness of real-world 3D objects, which exhibits high correlations and similar behavior between opposing surfaces. This limitation arises from the disconnected nature of these surfaces and the absence of internal edge connections within the mesh. In this work, we propose a novel framework, the Thickness-aware E(3)-Equivariant 3D Mesh Neural Network (T-EMNN), that effectively integrates the thickness of 3D objects while maintaining the computational efficiency of surface meshes. Additionally, we introduce data-driven coordinates that encode spatial information while preserving E(3)-equivariance or invariance properties, ensuring consistent and robust analysis. Evaluations on a real-world industrial dataset demonstrate the superior performance of T-EMNN in accurately predicting node-level 3D deformations, effectively capturing thickness effects while maintaining computational efficiency.
