Bhakti Baheti

AI for Mycetoma Diagnosis in Histopathological Images: The MICCAI 2024 Challenge

Dec 25, 2025

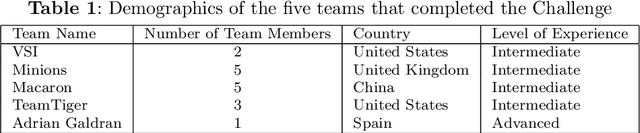

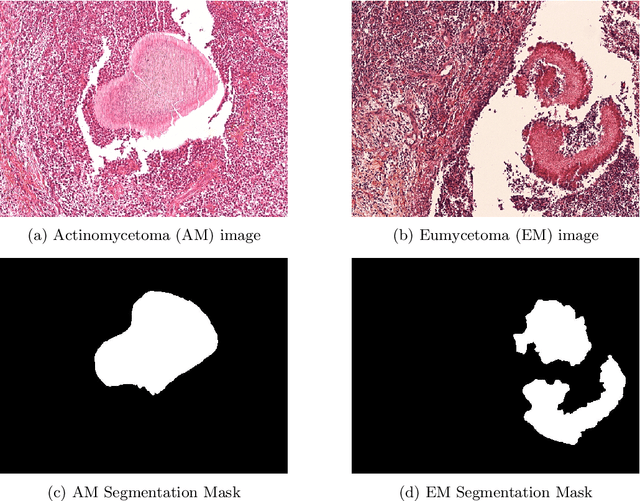

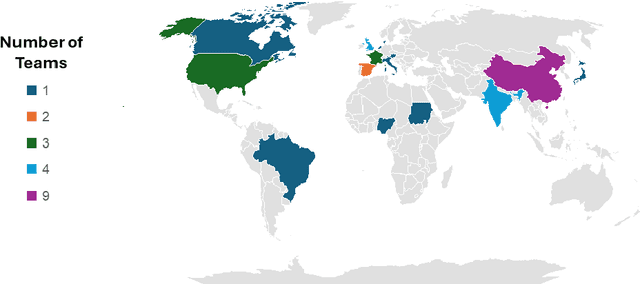

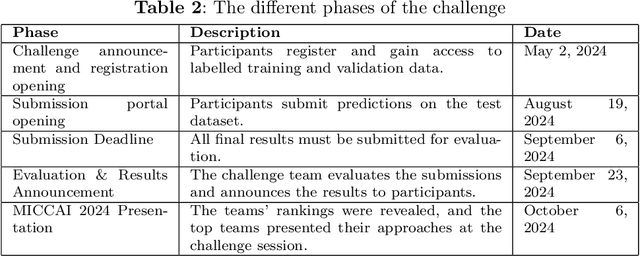

Abstract: Mycetoma is a neglected tropical disease caused by fungi or bacteria that leads to severe tissue damage and disability. It affects poor and rural communities and imposes medical challenges and socioeconomic burdens on patients and healthcare systems in endemic regions worldwide. Diagnosis is a major challenge in mycetoma management, particularly in low-resource settings where expert pathologists are scarce. To address this challenge, this paper presents an overview of the Mycetoma MicroImage: Detect and Classify Challenge (mAIcetoma), which was organized to advance mycetoma diagnosis through AI solutions. mAIcetoma focused on developing automated models for segmenting mycetoma grains and classifying mycetoma types from histopathological images. The challenge attracted teams from around the world, and five finalist teams fulfilled the challenge objectives, proposing a range of deep learning architectures. The Mycetoma database (MyData) was provided to participants as a standardized dataset on which to run their models, and the submitted models were then scored using the challenge's evaluation metrics. Results showed that all the models achieved high segmentation accuracy, emphasizing the necessity of grain detection as a critical step in mycetoma diagnosis. In addition, the top-performing models showed strong performance in classifying mycetoma types.

Biochemical Prostate Cancer Recurrence Prediction: Thinking Fast & Slow

Sep 03, 2024

Abstract: Time to biochemical recurrence in prostate cancer is essential for prognostic monitoring of patients' progression after prostatectomy and for assessing the efficacy of the surgery. In this work, we propose to leverage multiple instance learning through a two-stage "thinking fast & slow" strategy for time to recurrence (TTR) prediction. The first ("thinking fast") stage finds the whole slide image (WSI) area most relevant to biochemical recurrence, and the second ("thinking slow") stage leverages higher-resolution patches to predict TTR. Our approach achieves a mean C-index ($Ci$) of 0.733 ($\theta=0.059$) on our internal validation and $Ci=0.603$ on the LEOPARD challenge validation set. Post hoc attention visualization shows that the most attended area contributes to the TTR prediction.
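The C-index quoted above measures how well predicted risk scores order patients by their time to recurrence. As a minimal sketch, assuming Harrell's standard formulation for right-censored data (the abstract does not state how ties or censoring are handled, and the LEOPARD evaluation code may differ), it can be computed as follows; `harrell_c_index` is a hypothetical helper name.

```python
from itertools import combinations


def harrell_c_index(times, risks, events):
    """Harrell's concordance index for right-censored survival data.

    times  : observed time to recurrence or to censoring
    risks  : predicted risk scores (higher = earlier expected recurrence)
    events : 1 if recurrence was observed, 0 if the patient was censored
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        # Order the pair so that i has the shorter observed time.
        if times[j] < times[i]:
            i, j = j, i
        # Comparable only if the earlier time is an observed recurrence;
        # pairs with tied times are skipped in this sketch.
        if not events[i] or times[i] == times[j]:
            continue
        comparable += 1
        if risks[i] > risks[j]:
            concordant += 1.0   # earlier recurrence got the higher risk
        elif risks[i] == risks[j]:
            concordant += 0.5   # tied predictions count as half
    return concordant / comparable if comparable else float("nan")
```

For example, with `times=[2, 4, 6, 8]`, `events=[1, 1, 0, 1]`, and risks that decrease with time, every comparable pair is concordant and the function returns 1.0; a C-index of 0.5 corresponds to random ordering.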

BraTS-Path Challenge: Assessing Heterogeneous Histopathologic Brain Tumor Sub-regions

May 17, 2024

Abstract: Glioblastoma is the most common primary adult brain tumor, with a grim prognosis: median survival of 12-18 months following treatment and 4 months otherwise. Glioblastoma is widely infiltrative in the cerebral hemispheres and characterized by heterogeneous molecular and micro-environmental histopathologic profiles, which pose a major obstacle to treatment. Correctly diagnosing these tumors and assessing their heterogeneity is crucial for choosing the appropriate treatment and potentially improving patient survival rates. In the gold-standard histopathology-based approach to tumor diagnosis, detecting various morpho-pathological features of distinct histology throughout digitized tissue sections is crucial. Such "features" include the presence of cellular tumor, geographic necrosis, pseudopalisading necrosis, areas abundant in microvascular proliferation, infiltration into the cortex, wide extension in subcortical white matter, leptomeningeal infiltration, regions dense with macrophages, and the presence of perivascular or scattered lymphocytes. With these features in mind, and building upon the main aim of the BraTS Cluster of Challenges (https://www.synapse.org/brats2024), the goal of the BraTS-Path challenge is to provide a systematically prepared, comprehensive dataset and a benchmarking environment to develop and fairly compare deep learning models capable of identifying tumor sub-regions of distinct histologic profiles. These models aim to further our understanding of the disease and to assist in consistent diagnosis and grading.

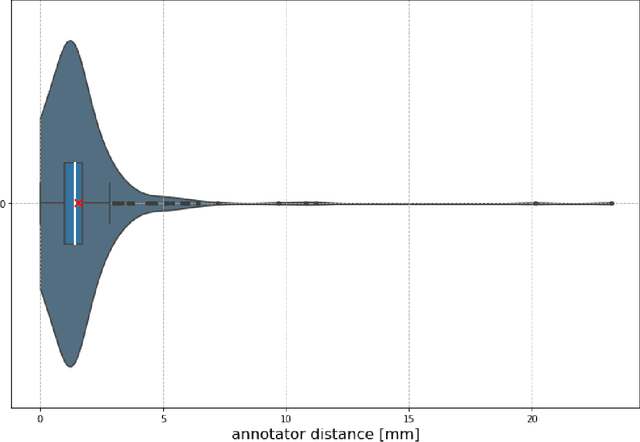

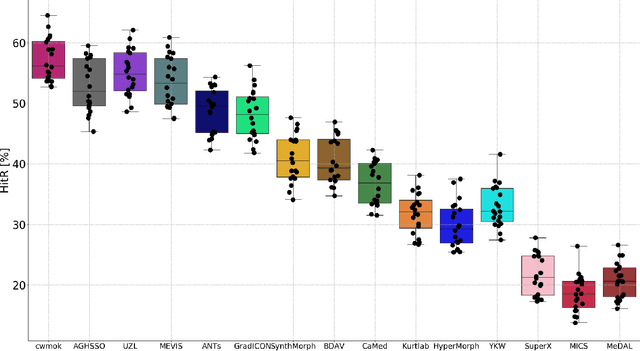

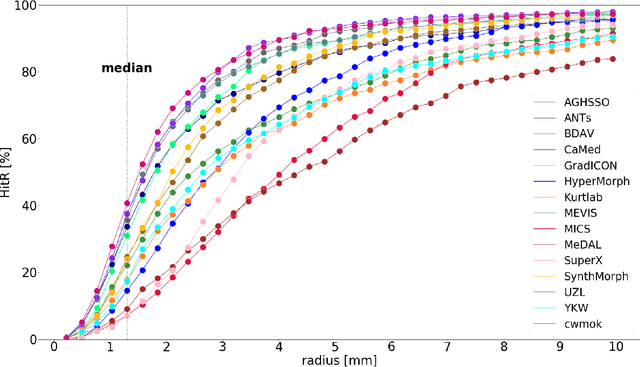

Framing image registration as a landmark detection problem for better representation of clinical relevance

Jul 31, 2023

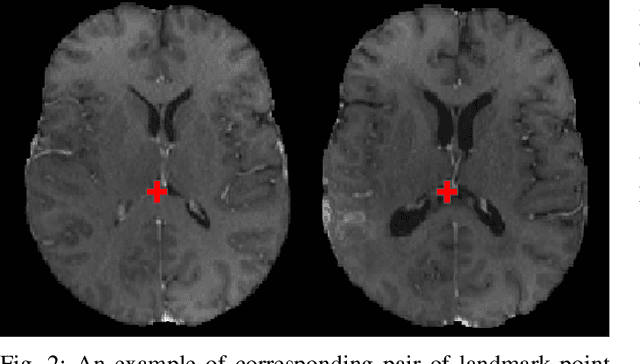

Abstract: Nowadays, registration methods are typically evaluated based on sub-resolution differences in tracking error. In an effort to reinfuse this evaluation process with clinical relevance, we propose to reframe image registration as a landmark detection problem. Ideally, landmark-specific detection thresholds are derived from an inter-rater analysis. To approximate this costly process, we propose to compute hit rate curves based on the distribution of errors from an inter-rater analysis of a sub-sample. Specifically, we suggest deriving thresholds from the error distribution using the formula $\tau = \mathrm{median} + \delta \cdot \mathrm{MAD}$, where MAD is the median absolute deviation. The method promises to differentiate previously indistinguishable registration algorithms and further enables the assessment of clinical significance during algorithm development.
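The thresholding rule (median plus delta times the median absolute deviation) and the resulting hit rate curve can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' code: it pools all landmarks into a single inter-rater error sample instead of deriving landmark-specific thresholds, it counts an error exactly at the threshold as a hit, and the function names are hypothetical.

```python
import numpy as np


def mad_threshold(inter_rater_errors, delta):
    """Detection threshold: median + delta * median absolute deviation (MAD)."""
    errors = np.asarray(inter_rater_errors, dtype=float)
    median = np.median(errors)
    mad = np.median(np.abs(errors - median))
    return float(median + delta * mad)


def hit_rate(registration_errors, threshold):
    """Fraction of landmarks 'detected', i.e. with error at or below the threshold."""
    errors = np.asarray(registration_errors, dtype=float)
    return float(np.mean(errors <= threshold))


def hit_rate_curve(inter_rater_errors, registration_errors, deltas):
    """Hit rate of one algorithm as delta (and hence the threshold) is swept."""
    return [hit_rate(registration_errors, mad_threshold(inter_rater_errors, d))
            for d in deltas]
```

With inter-rater errors `[1, 2, 2, 3, 10]` (median 2, MAD 1) and `delta=2`, the threshold is 4.0, so registration errors `[1.5, 3.9, 4.0, 5.0]` give a hit rate of 0.75. Because the median and MAD are robust statistics, a single outlying inter-rater error (the 10 here) barely moves the threshold.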

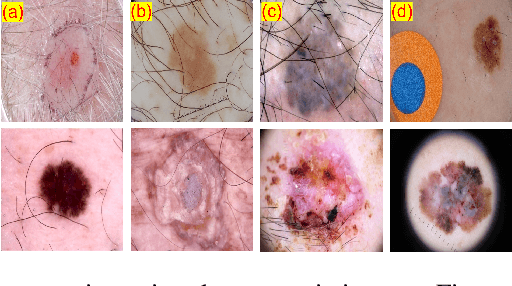

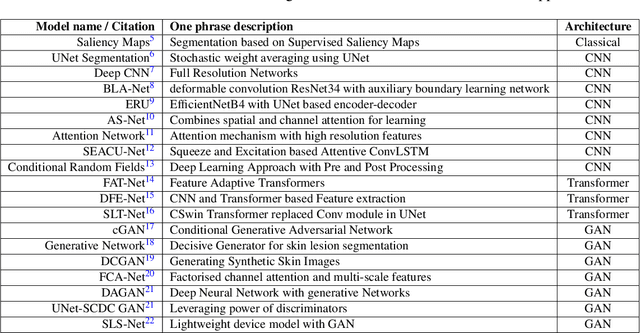

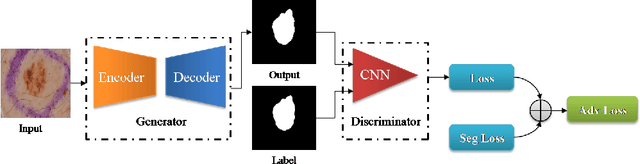

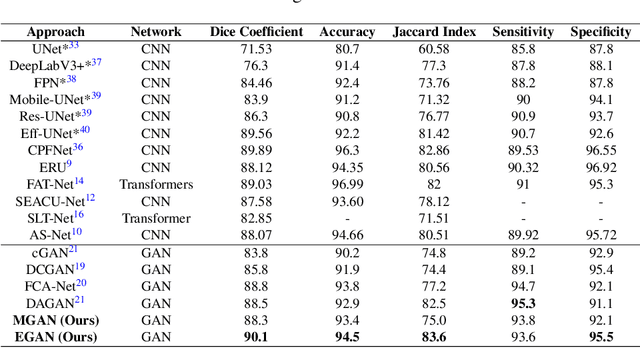

Generative Adversarial Networks based Skin Lesion Segmentation

May 29, 2023

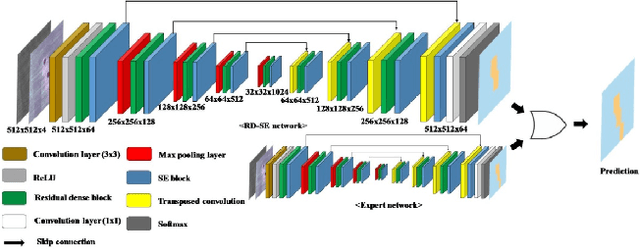

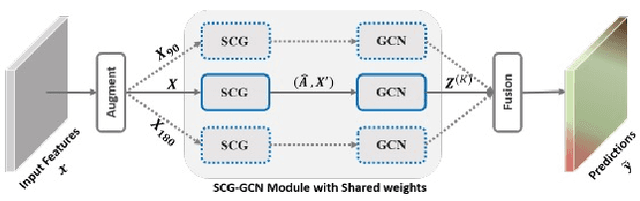

Abstract: Skin cancer is a serious condition that requires accurate identification and treatment. One way to assist clinicians in this task is by using computer-aided diagnosis (CAD) tools that can automatically segment skin lesions from dermoscopic images. To this end, a new adversarial learning-based framework called EGAN has been developed. This framework uses an unsupervised generative network to generate accurate lesion masks. It consists of a generator module with a top-down squeeze excitation-based compound scaled path and an asymmetric lateral connection-based bottom-up path, and a discriminator module that distinguishes between original and synthetic masks. Additionally, a morphology-based smoothing loss is implemented to encourage the network to create smooth semantic boundaries of lesions. The framework is evaluated on the International Skin Imaging Collaboration (ISIC) Lesion Dataset 2018 and outperforms current state-of-the-art skin lesion segmentation approaches, achieving a Dice coefficient, Jaccard index, and accuracy of 90.1%, 83.6%, and 94.5%, respectively. This represents an increase of 2% in Dice coefficient, 1% in Jaccard index, and 1% in accuracy.
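For reference, the three reported metrics can be computed from the pixel-level confusion counts of a predicted and a ground-truth binary mask. This is a minimal sketch; the abstract does not say whether scores are averaged per image or pooled over the whole dataset, which can change the reported numbers, and `segmentation_scores` is a hypothetical helper name.

```python
import numpy as np


def segmentation_scores(pred, target):
    """Return (Dice, Jaccard, pixel accuracy) for a pair of binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.count_nonzero(pred & target)    # lesion pixels found
    fp = np.count_nonzero(pred & ~target)   # background labelled as lesion
    fn = np.count_nonzero(~pred & target)   # lesion pixels missed
    tn = np.count_nonzero(~pred & ~target)  # background correctly rejected
    if tp + fp + fn == 0:
        # Both masks empty: treat as perfect overlap to avoid 0/0.
        return 1.0, 1.0, 1.0
    dice = 2 * tp / (2 * tp + fp + fn)
    jaccard = tp / (tp + fp + fn)
    accuracy = (tp + tn) / pred.size
    return dice, jaccard, accuracy
```

For a single mask pair the two overlap scores are linked by J = D / (2 - D); averages taken over many images need not satisfy this identity exactly, which is one reason both are usually reported.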

Detecting Histologic Glioblastoma Regions of Prognostic Relevance

Feb 01, 2023

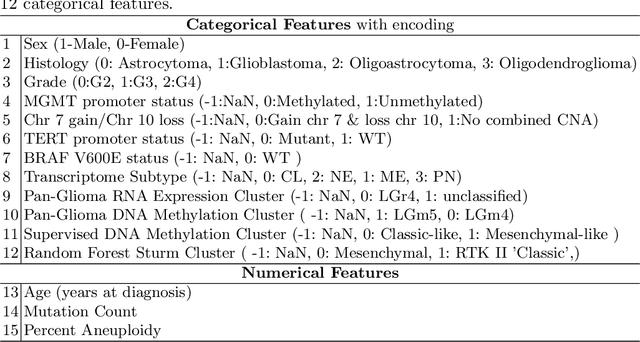

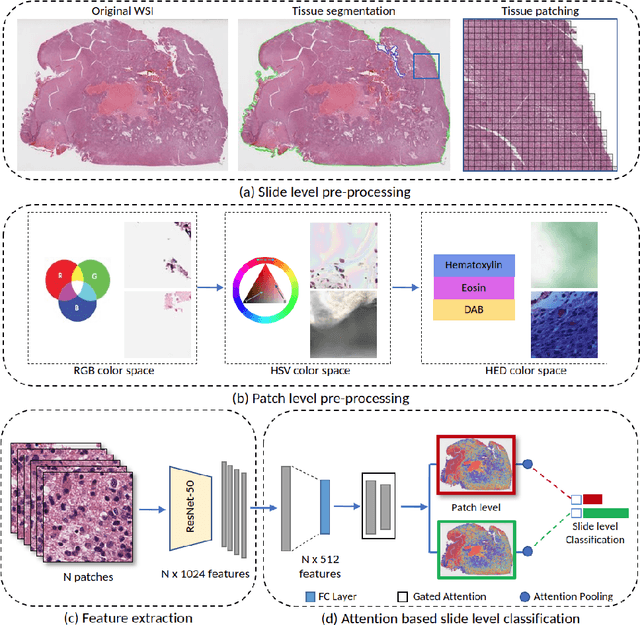

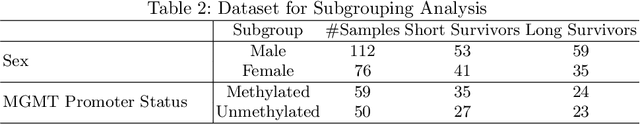

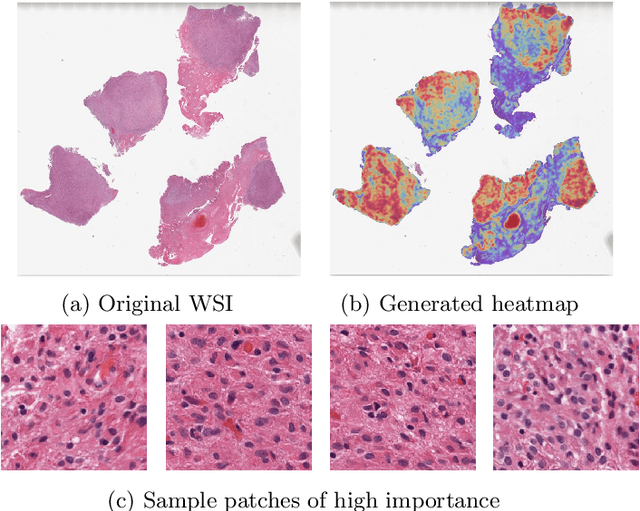

Abstract: Glioblastoma is the most common and aggressive malignant adult tumor of the central nervous system, with a grim prognosis and heterogeneous morphologic and molecular profiles. In the 18 years since the adoption of the current standard-of-care treatment, no substantial prognostic improvements have been observed. Accurate prediction of patient overall survival (OS) from clinical histopathology whole slide images (WSI) using advanced computational methods could contribute to the optimization of clinical decision making and patient management. Here, we focus on identifying prognostically relevant glioblastoma morphologic patterns on H&E-stained WSI. Our approach capitalizes on comprehensive curation of apparent artifactual content in the WSI and on an interpretability mechanism provided by a weakly supervised, attention-based multiple instance learning algorithm that further uses clustering to constrain the search space. The automatically identified patterns of high diagnostic value are used to classify each WSI as representative of a short or a long survivor. Identifying tumor morphologic patterns associated with short and long OS will allow the clinical neuropathologist to provide the treating team with additional prognostic information gleaned during microscopic assessment, as well as suggest avenues of biological investigation for understanding and potentially treating glioblastoma.
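The interpretability mechanism rests on attention-based multiple instance learning: each slide is a bag of patch embeddings, and a learned attention weight per patch decides how much it contributes to the slide-level representation. The sketch below assumes the non-gated attention pooling of Ilse et al. (2018); the abstract does not specify the exact attention variant, the clustering step that constrains the search space is omitted, and `V` and `w` are random stand-ins for learned parameters.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def attention_mil_pool(instances, V, w):
    """Attention-based MIL pooling over the patches of one slide.

    instances : (n_patches, d) patch embeddings
    V         : (h, d) attention projection (learned in practice)
    w         : (h,)   attention vector (learned in practice)
    Returns (slide embedding of shape (d,), attention weights of shape (n_patches,)).
    """
    scores = np.tanh(instances @ V.T) @ w  # one unnormalised score per patch
    attn = softmax(scores)                 # non-negative, sums to 1
    return attn @ instances, attn


# Usage with random stand-ins for learned weights and patch features.
rng = np.random.default_rng(0)
patches = rng.normal(size=(6, 8))
slide_emb, attn = attention_mil_pool(patches, rng.normal(size=(4, 8)), rng.normal(size=4))
top_patches = np.argsort(attn)[::-1][:2]  # most-attended patches, candidates for review
```

In a full model, `slide_emb` would feed a classifier (here, short vs. long survivor), and the highest-attention patches are the regions a neuropathologist would inspect. If all attention scores are equal, the pooling reduces to a plain mean over patches.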

Deep Learning based Novel Cascaded Approach for Skin Lesion Analysis

Jan 16, 2023

Abstract: Automatic lesion analysis is critical in skin cancer diagnosis and helps ensure effective treatment. Computer-aided diagnosis of skin cancer in dermoscopic images can significantly reduce clinicians' workload and help improve diagnostic accuracy. Although researchers are working extensively to address this problem, early detection and accurate identification of skin lesions remain challenging. This research focuses on a two-step framework for lesion analysis: skin lesion segmentation followed by classification. We explored the effectiveness of deep convolutional neural network (CNN)-based architectures by designing an encoder-decoder architecture for skin lesion segmentation and a CNN-based classification network. The proposed approaches are evaluated quantitatively in terms of accuracy, mean Intersection over Union (mIoU), and Dice Similarity Coefficient. Our cascaded, end-to-end deep learning approach is the first of its kind, and prior segmentation significantly improves the lesion classification accuracy.
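One common way for a segmentation stage to help a downstream classifier is to crop the image to the predicted lesion before classification, so the classifier sees less background. The abstract does not say how the segmentation output is passed to the classification network, so the sketch below is an assumption rather than the authors' method; `crop_to_lesion` and its `margin` default are hypothetical.

```python
import numpy as np


def crop_to_lesion(image, mask, margin=10):
    """Crop an image to the bounding box of a predicted lesion mask.

    image  : (H, W, C) dermoscopic image
    mask   : (H, W) binary output of the segmentation network
    margin : extra pixels kept around the lesion (hypothetical default)
    Returns the full image unchanged if the mask is empty.
    """
    mask = np.asarray(mask, dtype=bool)
    rows = np.any(mask, axis=1)
    cols = np.any(mask, axis=0)
    if not rows.any():
        return image  # nothing segmented: fall back to the whole image
    r0, r1 = np.where(rows)[0][[0, -1]]
    c0, c1 = np.where(cols)[0][[0, -1]]
    h, w = mask.shape
    # Expand the box by the margin, clipped to the image borders.
    r0, c0 = max(r0 - margin, 0), max(c0 - margin, 0)
    r1, c1 = min(r1 + margin + 1, h), min(c1 + margin + 1, w)
    return image[r0:r1, c0:c1]
```

The cropped patch would then be resized to the classifier's input size; keeping a small margin preserves the lesion border, which is often diagnostically informative.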

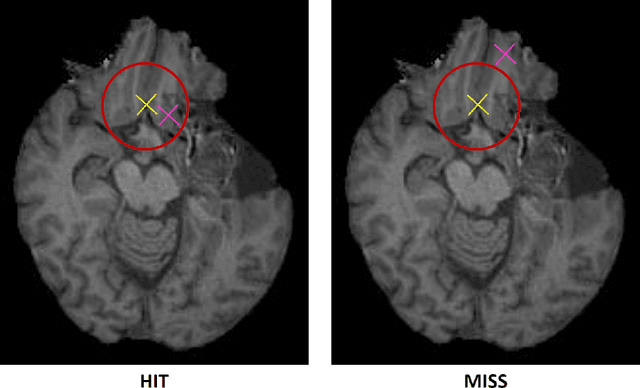

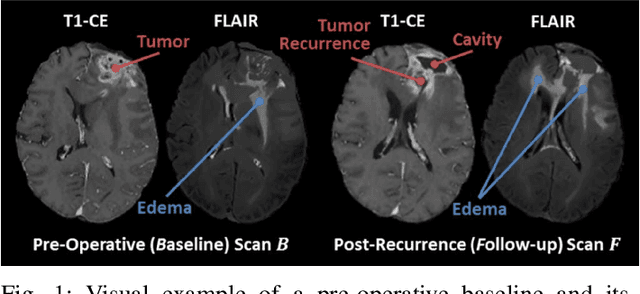

The Brain Tumor Sequence Registration Challenge: Establishing Correspondence between Pre-Operative and Follow-up MRI scans of diffuse glioma patients

Dec 13, 2021

Abstract: Registration of longitudinal brain Magnetic Resonance Imaging (MRI) scans containing pathologies is challenging due to changes in tissue appearance, and it remains an unsolved problem. This paper describes the first Brain Tumor Sequence Registration (BraTS-Reg) challenge, focusing on estimating correspondences between pre-operative and follow-up scans of the same patient diagnosed with a diffuse brain glioma. The BraTS-Reg challenge intends to establish a public benchmark environment for deformable registration algorithms. The associated dataset comprises de-identified multi-institutional multi-parametric MRI (mpMRI) data, curated for each scan's size and resolution according to a common anatomical template. Clinical experts have generated extensive annotations of landmark points within the scans, descriptive of distinct anatomical locations across the temporal domain. The training data along with these ground truth annotations will be released to participants to design and develop their registration algorithms, whereas the annotations for the validation and testing data will be withheld by the organizers and used to evaluate the participants' containerized algorithms. Each submitted algorithm will be quantitatively evaluated using several metrics, such as the Median Absolute Error (MAE), Robustness, and the Jacobian determinant.
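The landmark-based metrics can be illustrated as follows. This is a sketch under assumptions, not the challenge's evaluation code: errors are Euclidean distances between reference landmarks and landmarks mapped through the estimated deformation, scaled by a voxel spacing in mm; Robustness is taken to be the fraction of landmarks whose error decreases after registration; the Jacobian determinant check on the deformation field is omitted; all function names are hypothetical.

```python
import numpy as np


def landmark_errors(reference_pts, mapped_pts, spacing=(1.0, 1.0, 1.0)):
    """Euclidean distance (in mm) between corresponding landmarks.

    reference_pts, mapped_pts : (n_landmarks, 3) voxel coordinates
    spacing                   : voxel size in mm along each axis
    """
    diff = (np.asarray(reference_pts, dtype=float)
            - np.asarray(mapped_pts, dtype=float)) * np.asarray(spacing, dtype=float)
    return np.linalg.norm(diff, axis=1)


def median_absolute_error(errors):
    """Median of the per-landmark absolute errors."""
    return float(np.median(errors))


def robustness(errors_before, errors_after):
    """Fraction of landmarks whose error decreased after registration."""
    return float(np.mean(np.asarray(errors_after) < np.asarray(errors_before)))
```

Using the median rather than the mean keeps a few badly annotated or badly registered landmarks from dominating the score, while Robustness captures how consistently an algorithm improves on the unregistered baseline.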

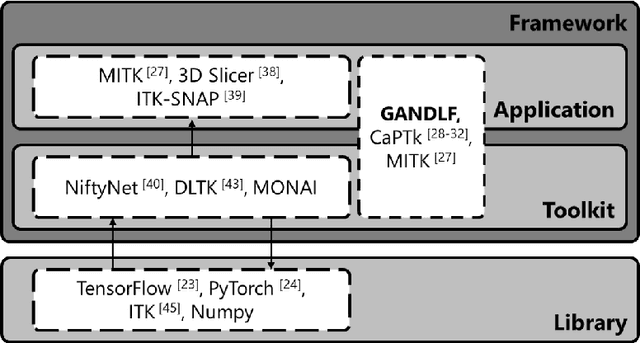

GaNDLF: A Generally Nuanced Deep Learning Framework for Scalable End-to-End Clinical Workflows in Medical Imaging

Feb 26, 2021

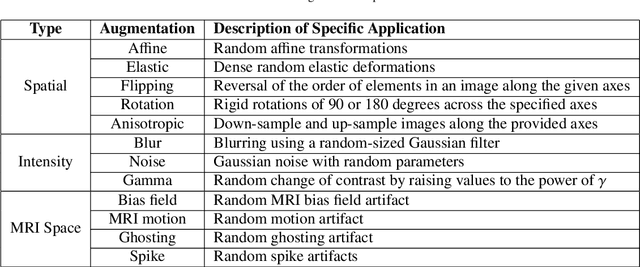

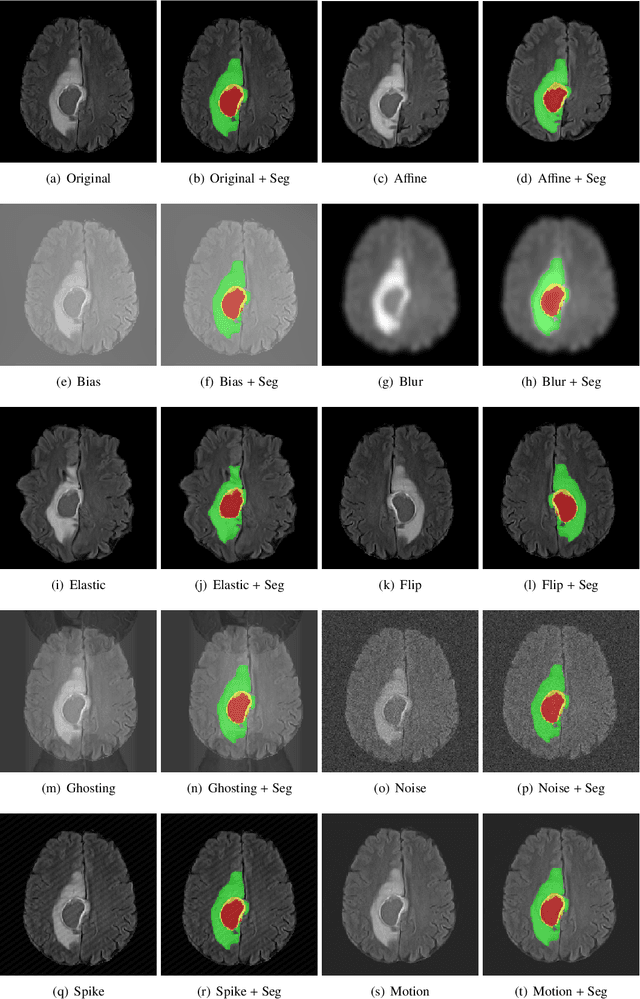

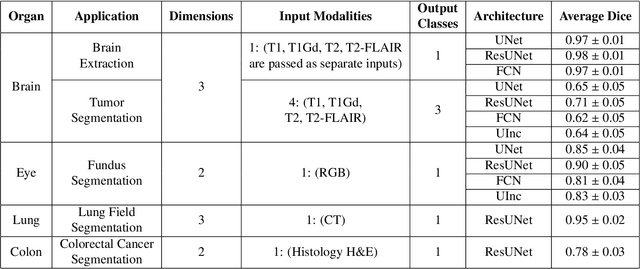

Abstract: Deep Learning (DL) has greatly highlighted the potential impact of optimized machine learning in both the scientific and clinical communities. The advent of open-source DL libraries from major industrial entities, such as TensorFlow (Google), PyTorch (Facebook), and MXNet (Apache), further contributes to DL's promise of democratizing computational analytics. However, developing DL algorithms requires an increasingly technical and specialized background, and the variability of implementation details hinders their reproducibility. To lower this barrier and make DL development, training, and inference more stable, reproducible, and scalable without requiring an extensive technical background, this manuscript proposes the Generally Nuanced Deep Learning Framework (GaNDLF). With built-in support for $k$-fold cross-validation, data augmentation, multiple modalities and output classes, and multi-GPU training, as well as the ability to work with both radiographic and histologic imaging, GaNDLF aims to provide an end-to-end solution for all DL-related tasks, to tackle problems in medical imaging, and to provide a robust application framework for deployment in clinical workflows.
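To make the $k$-fold cross-validation feature concrete, the sketch below shows what such a split does. It is a generic NumPy illustration, not GaNDLF's API or configuration format; `k_fold_splits` is a hypothetical name.

```python
import numpy as np


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) index arrays for k-fold cross-validation.

    Samples are shuffled once, divided into k nearly equal folds, and each
    fold serves as the validation set exactly once.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    folds = np.array_split(indices, k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx
```

In medical imaging the indices would normally refer to patients rather than individual images, so that scans from the same subject never appear in both the training and validation folds.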

The 1st Agriculture-Vision Challenge: Methods and Results

Apr 23, 2020

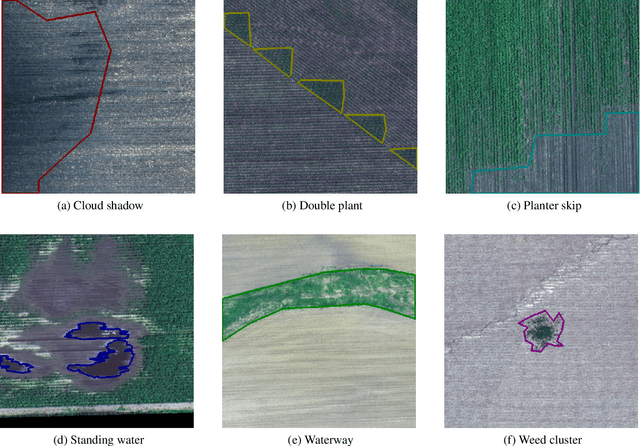

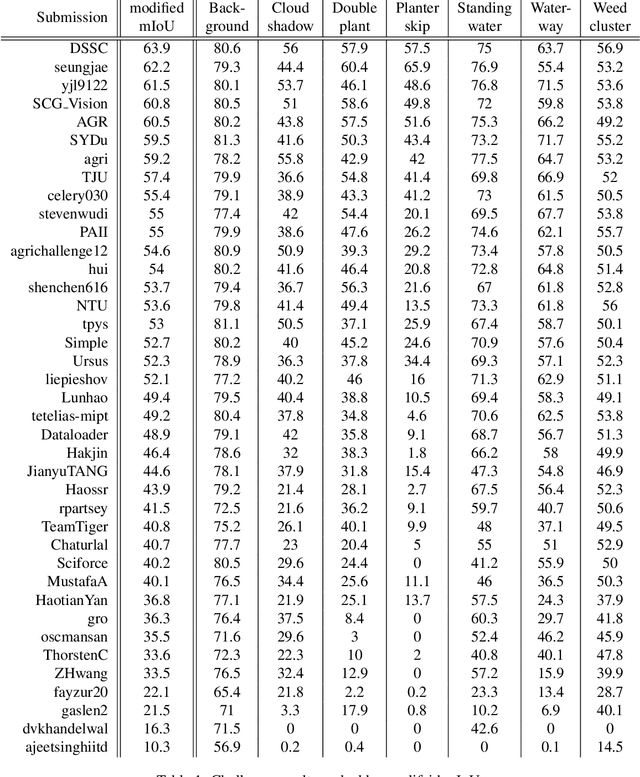

Abstract: The first Agriculture-Vision Challenge aims to encourage research in developing novel and effective algorithms for agricultural pattern recognition from aerial images, especially for the semantic segmentation task associated with our challenge dataset. Around 57 teams from various countries competed to achieve state-of-the-art results in aerial agricultural semantic segmentation. The challenge used the Agriculture-Vision Challenge Dataset, which comprises 21,061 aerial and multi-spectral farmland images. This paper provides a summary of notable methods and results in the challenge. Our submission server and leaderboard will remain open for researchers interested in this challenge dataset and task; the link can be found here.
