Ivan Ezhov

for the ALFA study

Addressing Annotation Scarcity in Hyperspectral Brain Image Segmentation with Unsupervised Domain Adaptation

Aug 23, 2025

Abstract:This work presents a novel deep learning framework for segmenting cerebral vasculature in hyperspectral brain images. We address the critical challenge of severe label scarcity, which impedes conventional supervised training. Our approach utilizes a novel unsupervised domain adaptation methodology, using a small, expert-annotated ground truth alongside unlabeled data. Quantitative and qualitative evaluations confirm that our method significantly outperforms existing state-of-the-art approaches, demonstrating the efficacy of domain adaptation for label-scarce biomedical imaging tasks.

Analysis of the MICCAI Brain Tumor Segmentation -- Metastases (BraTS-METS) 2025 Lighthouse Challenge: Brain Metastasis Segmentation on Pre- and Post-treatment MRI

Apr 16, 2025

Abstract:Despite continuous advancements in cancer treatment, brain metastatic disease remains a significant complication of primary cancer and is associated with an unfavorable prognosis. One approach for improving diagnosis, management, and outcomes is to implement algorithms based on artificial intelligence for the automated segmentation of both pre- and post-treatment MRI brain images. Such algorithms rely on volumetric criteria for lesion identification and treatment response assessment, which are still not available in clinical practice. Therefore, it is critical to establish tools for rapid volumetric segmentation methods that can be translated to clinical practice and that are trained on high-quality annotated data. The BraTS-METS 2025 Lighthouse Challenge aims to address this critical need by establishing inter-rater and intra-rater variability in dataset annotation by generating high-quality annotated datasets from four individual instances of segmentation by neuroradiologists while being recorded on video (two instances doing "from scratch" and two instances after AI pre-segmentation). This high-quality annotated dataset will be used for the testing phase of the 2025 Lighthouse challenge and will be publicly released at the completion of the challenge. The 2025 Lighthouse challenge will also release the 2023 and 2024 segmented datasets that were annotated using an established pipeline of pre-segmentation, student annotation, two neuroradiologists checking, and one neuroradiologist finalizing the process. It builds upon its previous edition by including post-treatment cases in the dataset. Using these high-quality annotated datasets, the 2025 Lighthouse challenge plans to test benchmark algorithms for automated segmentation of pre- and post-treatment brain metastases (BM), trained on diverse and multi-institutional datasets of MRI images obtained from patients with brain metastases.

Efficient Deep Learning-based Forward Solvers for Brain Tumor Growth Models

Jan 14, 2025

Abstract:Glioblastoma, a highly aggressive brain tumor, poses major challenges due to its poor prognosis and high morbidity rates. Partial differential equation-based models offer promising potential to enhance therapeutic outcomes by simulating patient-specific tumor behavior for improved radiotherapy planning. However, model calibration remains a bottleneck due to the high computational demands of optimization methods like Monte Carlo sampling and evolutionary algorithms. To address this, we recently introduced an approach leveraging a neural forward solver with gradient-based optimization to significantly reduce calibration time. This approach requires a highly accurate and fully differentiable forward model. We investigate multiple architectures, including (i) an enhanced TumorSurrogate, (ii) a modified nnU-Net, and (iii) a 3D Vision Transformer (ViT). The optimized TumorSurrogate achieved the best overall results, excelling in both tumor outline matching and voxel-level prediction of tumor cell concentration. It halved the MSE relative to the baseline model and achieved the highest Dice score across all tumor cell concentration thresholds. Our study demonstrates significant enhancement in forward solver performance and outlines important future research directions.

Spatial Brain Tumor Concentration Estimation for Individualized Radiotherapy Planning

Dec 18, 2024

Abstract:Biophysical modeling of brain tumors has emerged as a promising strategy for personalizing radiotherapy planning by estimating the otherwise hidden distribution of tumor cells within the brain. However, many existing state-of-the-art methods are computationally intensive, limiting their widespread translation into clinical practice. In this work, we propose an efficient and direct method that utilizes soft physical constraints to estimate the tumor cell concentration from preoperative MRI of brain tumor patients. Our approach optimizes a 3D tumor concentration field by simultaneously minimizing the difference between the observed MRI and a physically informed loss function. Compared to existing state-of-the-art techniques, our method significantly improves the prediction of tumor recurrence on two public datasets with a total of 192 patients while maintaining a clinically viable runtime of under one minute, a substantial reduction from the 30 minutes required by the current best approach. Furthermore, we showcase the generalizability of our framework by incorporating additional imaging information and physical constraints, highlighting its potential to translate to various medical diffusion phenomena with imperfect data.

FedPID: An Aggregation Method for Federated Learning

Nov 04, 2024

Abstract:This paper presents FedPID, our submission to the Federated Tumor Segmentation Challenge 2024 (FETS24). Inspired by FedCostWAvg and FedPIDAvg, our winning contributions to FETS21 and FETS2022, we propose an improved aggregation strategy for federated and collaborative learning. FedCostWAvg is a method that averages results by considering both the number of training samples in each group and how much the cost function decreased in the last round of training. This is similar to how the derivative part of a PID controller works. In FedPIDAvg, we also included the integral part that was missing. Another challenge we faced was the vastly differing dataset sizes at each center. We solved this by assuming the sizes follow a Poisson distribution and adjusting the training iterations for each center accordingly. Essentially, this part of the method ensures that outliers requiring too much training time are used less frequently. Building on these contributions, we have now adapted FedPIDAvg by changing how the integral part is computed. Instead of integrating the loss function, we measure the global drop in cost since the first round.
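
The weighting idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the mixing coefficients, and the choice to track the cost drop since the first round separately for each center are all assumptions made for illustration. Each center's weight combines its share of training samples, its cost drop in the last round, and its cost drop since the first round.

```python
from typing import List, Sequence


def normalize(values: Sequence[float]) -> List[float]:
    """Scale non-negative values to sum to 1; fall back to uniform if all are zero."""
    total = sum(values)
    if total <= 0:
        return [1.0 / len(values)] * len(values)
    return [v / total for v in values]


def pid_style_weights(
    num_samples: Sequence[float],
    cost_prev: Sequence[float],
    cost_curr: Sequence[float],
    cost_first: Sequence[float],
    coeffs: Sequence[float] = (0.45, 0.45, 0.10),  # hypothetical mixing coefficients
) -> List[float]:
    """Combine three normalized terms into one aggregation weight per center."""
    a, b, c = coeffs
    # Share of all training samples held by each center.
    size_term = normalize(num_samples)
    # Derivative-like term: cost decrease during the last round (clipped at zero).
    last_drop = normalize([max(p - q, 0.0) for p, q in zip(cost_prev, cost_curr)])
    # Integral-like term: cost decrease since the first round (clipped at zero).
    total_drop = normalize([max(f - q, 0.0) for f, q in zip(cost_first, cost_curr)])
    return [a * s + b * d + c * i for s, d, i in zip(size_term, last_drop, total_drop)]


def aggregate(models: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted average of flat parameter vectors, one vector per center."""
    return [sum(w * m[k] for w, m in zip(weights, models)) for k in range(len(models[0]))]


# Two hypothetical centers with different dataset sizes and cost histories.
weights = pid_style_weights(
    num_samples=[100, 300],
    cost_prev=[1.0, 1.0],
    cost_curr=[0.8, 0.9],
    cost_first=[2.0, 2.0],
)
merged = aggregate([[0.0, 1.0], [1.0, 3.0]], weights)
```

Because each term is normalized and the assumed coefficients sum to 1, the resulting weights also sum to 1, so the merged model is a convex combination of the local models.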

MultiOrg: A Multi-rater Organoid-detection Dataset

Oct 18, 2024

Abstract:High-throughput image analysis in the biomedical domain has gained significant attention in recent years, driving advancements in drug discovery, disease prediction, and personalized medicine. Organoids, specifically, are an active area of research, providing excellent models for human organs and their functions. Automating the quantification of organoids in microscopy images would provide an effective solution to overcome substantial manual quantification bottlenecks, particularly in high-throughput image analysis. However, there is a notable lack of open biomedical datasets, in contrast to other domains, such as autonomous driving, and, notably, only a few of them have attempted to quantify annotation uncertainty. In this work, we present MultiOrg, a comprehensive organoid dataset tailored for object detection tasks with uncertainty quantification. This dataset comprises over 400 high-resolution 2D microscopy images and curated annotations of more than 60,000 organoids. Most importantly, it includes three label sets for the test data, independently annotated by two experts at distinct time points. We additionally provide a benchmark for organoid detection, and make the best model available through an easily installable, interactive plugin for the popular image visualization tool Napari, to perform organoid quantification.

Physics-Regularized Multi-Modal Image Assimilation for Brain Tumor Localization

Sep 30, 2024

Abstract:Physical models in the form of partial differential equations represent an important prior for many under-constrained problems. One example is tumor treatment planning, which heavily depends on accurate estimates of the spatial distribution of tumor cells in a patient's anatomy. Medical imaging scans can identify the bulk of the tumor, but they cannot reveal its full spatial distribution. Tumor cells at low concentrations remain undetectable, for example, in the most frequent type of primary brain tumors, glioblastoma. Deep-learning-based approaches fail to estimate the complete tumor cell distribution due to a lack of reliable training data. Most existing works therefore rely on physics-based simulations to match observed tumors, providing anatomically and physiologically plausible estimations. However, these approaches struggle with complex and unknown initial conditions and are limited by overly rigid physical models. In this work, we present a novel method that balances data-driven and physics-based cost functions. In particular, we propose a unique discretization scheme that quantifies the adherence of our learned spatiotemporal tumor and brain tissue distributions to their corresponding growth and elasticity equations. This quantification, serving as a regularization term rather than a hard constraint, enables greater flexibility and proficiency in assimilating patient data than existing models. We demonstrate improved coverage of tumor recurrence areas compared to existing techniques on real-world data from a cohort of patients. The method holds the potential to enhance clinical adoption of model-driven treatment planning for glioblastoma.

Don't You (Project Around Discs)? Neural Network Surrogate and Projected Gradient Descent for Calibrating an Intervertebral Disc Finite Element Model

Aug 12, 2024

Abstract:Accurate calibration of finite element (FE) models of human intervertebral discs (IVDs) is essential for their reliability and application in diagnosing and planning treatments for spinal conditions. Traditional calibration methods are computationally intensive, requiring iterative, derivative-free optimization algorithms that often take hours or days to converge. This study addresses these challenges by introducing a novel, efficient, and effective calibration method for an L4-L5 IVD FE model using a neural network (NN) surrogate. The NN surrogate predicts simulation outcomes with high accuracy, outperforming other machine learning models, and significantly reduces the computational cost associated with traditional FE simulations. Next, a Projected Gradient Descent (PGD) approach guided by gradients of the NN surrogate is proposed to efficiently calibrate FE models. Our method explicitly enforces feasibility with a projection step, thus maintaining material bounds throughout the optimization process. The proposed method is evaluated against state-of-the-art Genetic Algorithm (GA) and inverse model baselines on synthetic and in vitro experimental datasets. Our approach demonstrates superior performance on synthetic data, achieving a Mean Absolute Error (MAE) of 0.06 compared to the baselines' MAE of 0.18 and 0.54, respectively. On experimental specimens, our method outperforms the baseline in 5 out of 6 cases. Most importantly, our approach reduces calibration time to under three seconds, compared to up to 8 days per sample required by traditional calibration. Such efficiency paves the way for applying more complex FE models, enabling accurate patient-specific simulations and advancing spinal treatment planning.
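
The projection step mentioned in this abstract can be sketched on a toy problem. This is only an illustration under stated assumptions: the linear `surrogate` below is a hypothetical stand-in for the trained NN surrogate, and the parameter bounds, step size, and target values are invented. Each iteration takes a gradient step on the squared mismatch and then clips the parameters back into their box bounds.

```python
from typing import List, Sequence


def project(theta: Sequence[float], lower: Sequence[float], upper: Sequence[float]) -> List[float]:
    """Clip each parameter into its [lower, upper] interval (box projection)."""
    return [min(max(t, lo), hi) for t, lo, hi in zip(theta, lower, upper)]


def surrogate(theta: Sequence[float]) -> List[float]:
    """Hypothetical differentiable surrogate: two parameters mapped to two outputs."""
    a, b = theta
    return [2.0 * a + b, a - b]


def loss_grad(theta: Sequence[float], target: Sequence[float]) -> List[float]:
    """Analytic gradient of the squared error between surrogate output and target."""
    out = surrogate(theta)
    r0, r1 = out[0] - target[0], out[1] - target[1]
    # Gradient is 2 * J^T r with Jacobian J = [[2, 1], [1, -1]].
    return [2.0 * (2.0 * r0 + r1), 2.0 * (r0 - r1)]


def calibrate(target, lower, upper, start, lr=0.05, steps=500) -> List[float]:
    """Projected gradient descent: gradient step, then projection onto the bounds."""
    theta = project(start, lower, upper)
    for _ in range(steps):
        grad = loss_grad(theta, target)
        theta = project([t - lr * g for t, g in zip(theta, grad)], lower, upper)
    return theta


# Target generated from known parameters (1.0, 0.5), so the fit can be checked.
target = surrogate([1.0, 0.5])
free_fit = calibrate(target, lower=[0.0, 0.0], upper=[2.0, 2.0], start=[2.0, 2.0])
# A tighter upper bound on the first parameter makes the constraint active.
bounded_fit = calibrate(target, lower=[0.0, 0.0], upper=[0.8, 2.0], start=[2.0, 2.0])
```

When the true parameters lie inside the bounds, the iterates recover them; when a bound is tighter than the true value, the estimate stays on that bound instead of leaving the feasible region.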

BraTS-PEDs: Results of the Multi-Consortium International Pediatric Brain Tumor Segmentation Challenge 2023

Jul 11, 2024

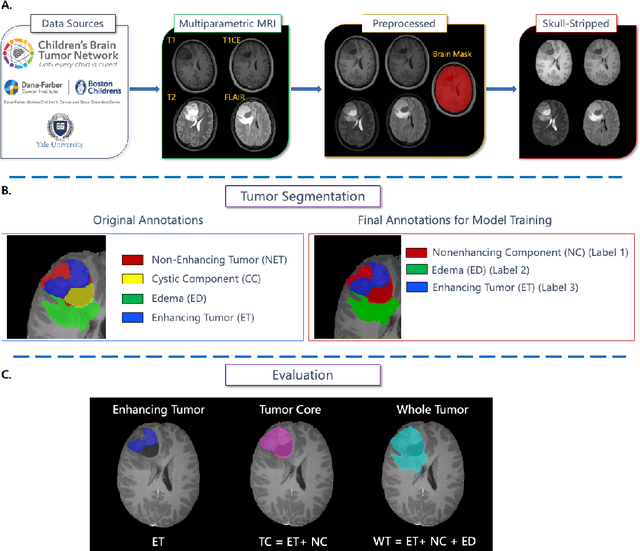

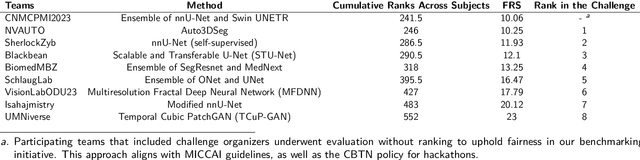

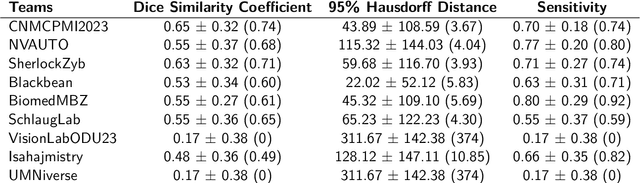

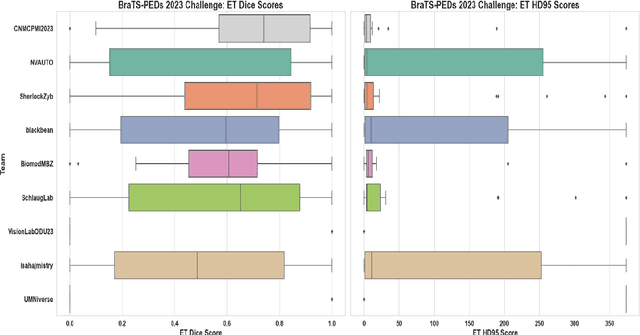

Abstract:Pediatric central nervous system tumors are the leading cause of cancer-related deaths in children. The five-year survival rate for high-grade glioma in children is less than 20%. The development of new treatments is dependent upon multi-institutional collaborative clinical trials requiring reproducible and accurate centralized response assessment. We present the results of the BraTS-PEDs 2023 challenge, the first Brain Tumor Segmentation (BraTS) challenge focused on pediatric brain tumors. This challenge utilized data acquired from multiple international consortia dedicated to pediatric neuro-oncology and clinical trials. BraTS-PEDs 2023 aimed to evaluate volumetric segmentation algorithms for pediatric brain gliomas from magnetic resonance imaging using standardized quantitative performance evaluation metrics employed across the BraTS 2023 challenges. The top-performing AI approaches for pediatric tumor analysis included ensembles of nnU-Net and Swin UNETR, Auto3DSeg, or nnU-Net with a self-supervised framework. The BraTS-PEDs 2023 challenge fostered collaboration between clinicians (neuro-oncologists, neuroradiologists) and AI/imaging scientists, promoting faster data sharing and the development of automated volumetric analysis techniques. These advancements could significantly benefit clinical trials and improve the care of children with brain tumors.

Analysis of the BraTS 2023 Intracranial Meningioma Segmentation Challenge

May 16, 2024

Abstract:We describe the design and results from the BraTS 2023 Intracranial Meningioma Segmentation Challenge. The BraTS Meningioma Challenge differed from prior BraTS Glioma challenges in that it focused on meningiomas, which are typically benign extra-axial tumors with diverse radiologic and anatomical presentation and a propensity for multiplicity. Nine participating teams each developed deep-learning automated segmentation models using image data from the largest multi-institutional systematically expert annotated multilabel multi-sequence meningioma MRI dataset to date, which included 1000 training set cases, 141 validation set cases, and 283 hidden test set cases. Each case included T2, T2/FLAIR, T1, and T1Gd brain MRI sequences with associated tumor compartment labels delineating enhancing tumor, non-enhancing tumor, and surrounding non-enhancing T2/FLAIR hyperintensity. Participant automated segmentation models were evaluated and ranked based on a scoring system evaluating lesion-wise metrics including Dice similarity coefficient (DSC) and 95% Hausdorff Distance. The top-ranked team had a lesion-wise median DSC of 0.976, 0.976, and 0.964 for enhancing tumor, tumor core, and whole tumor, respectively, and a corresponding average DSC of 0.899, 0.904, and 0.871, respectively. These results serve as state-of-the-art benchmarks for future pre-operative meningioma automated segmentation algorithms. Additionally, we found that 1286 of 1424 cases (90.3%) had at least 1 compartment voxel abutting the edge of the skull-stripped image, which requires further investigation into optimal pre-processing face anonymization steps.
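
For readers unfamiliar with the Dice similarity coefficient reported above, the following minimal sketch computes the plain voxel-wise form on flattened binary masks. It is not the challenge's lesion-wise scoring, which evaluates each lesion separately; the function name and the convention of returning 1.0 for two empty masks are assumptions for illustration.

```python
from typing import Sequence


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Voxel-wise Dice: 2 * |pred AND truth| / (|pred| + |truth|) on binary masks."""
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    size = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if size == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * overlap / size


# Flattened toy masks: the prediction marks two voxels, the reference marks one.
score = dice([1, 1, 0, 0], [1, 0, 0, 0])
```

In this toy case one voxel overlaps out of three marked voxels in total, so the score is 2/3.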
