Adam J. Stewart

Earth Embeddings as Products: Taxonomy, Ecosystem, and Standardized Access

Jan 19, 2026

Abstract:Geospatial Foundation Models (GFMs) provide powerful representations, but high compute costs hinder their widespread use. Pre-computed embedding data products offer a practical "frozen" alternative, yet they currently exist in a fragmented ecosystem of incompatible formats and resolutions. This lack of standardization creates an engineering bottleneck that prevents meaningful model comparison and reproducibility. We formalize this landscape through a three-layer taxonomy: Data, Tools, and Value. We survey existing products to identify interoperability barriers. To bridge this gap, we extend TorchGeo with a unified API that standardizes the loading and querying of diverse embedding products. By treating embeddings as first-class geospatial datasets, we decouple downstream analysis from model-specific engineering, providing a roadmap for more transparent and accessible Earth observation workflows.

Towards a Unified Copernicus Foundation Model for Earth Vision

Mar 14, 2025

Abstract:Advances in Earth observation (EO) foundation models have unlocked the potential of big satellite data to learn generic representations from space, benefiting a wide range of downstream applications crucial to our planet. However, most existing efforts remain limited to fixed spectral sensors, focus solely on the Earth's surface, and overlook valuable metadata beyond imagery. In this work, we take a step towards next-generation EO foundation models with three key components: 1) Copernicus-Pretrain, a massive-scale pretraining dataset that integrates 18.7M aligned images from all major Copernicus Sentinel missions, spanning from the Earth's surface to its atmosphere; 2) Copernicus-FM, a unified foundation model capable of processing any spectral or non-spectral sensor modality using extended dynamic hypernetworks and flexible metadata encoding; and 3) Copernicus-Bench, a systematic evaluation benchmark with 15 hierarchical downstream tasks ranging from preprocessing to specialized applications for each Sentinel mission. Our dataset, model, and benchmark greatly improve the scalability, versatility, and multimodal adaptability of EO foundation models, while also creating new opportunities to connect EO, weather, and climate research. Code, datasets, and models are available at https://github.com/zhu-xlab/Copernicus-FM.

Panopticon: Advancing Any-Sensor Foundation Models for Earth Observation

Mar 13, 2025

Abstract:Earth observation (EO) data features diverse sensing platforms with varying spectral bands, spatial resolutions, and sensing modalities. While most prior work has constrained inputs to fixed sensors, a new class of any-sensor foundation models able to process arbitrary sensors has recently emerged. Contributing to this line of work, we propose Panopticon, an any-sensor foundation model built on the DINOv2 framework. We extend DINOv2 by (1) treating images of the same geolocation across sensors as natural augmentations, (2) subsampling channels to diversify spectral input, and (3) adding a cross attention over channels as a flexible patch embedding mechanism. By encoding the wavelength and modes of optical and synthetic aperture radar sensors, respectively, Panopticon can effectively process any combination of arbitrary channels. In extensive evaluations, we achieve state-of-the-art performance on GEO-Bench, especially on the widely used Sentinel-1 and Sentinel-2 sensors, while out-competing other any-sensor models, as well as domain-adapted fixed-sensor models, on unique sensor configurations. Panopticon enables immediate generalization to both existing and future satellite platforms, advancing sensor-agnostic EO.

Lightning UQ Box: A Comprehensive Framework for Uncertainty Quantification in Deep Learning

Oct 04, 2024

Abstract:Uncertainty quantification (UQ) is an essential tool for applying deep neural networks (DNNs) to real-world tasks, as it attaches a degree of confidence to DNN outputs. However, despite its benefits, UQ is often left out of the standard DNN workflow due to the additional technical knowledge required to apply and evaluate existing UQ procedures. Hence, there is a need for a comprehensive toolbox that allows users to integrate UQ into their modelling workflow without significant overhead. We introduce Lightning UQ Box: a unified interface for applying and evaluating various approaches to UQ. In this paper, we provide a theoretical and quantitative comparison of the wide range of state-of-the-art UQ methods implemented in our toolbox. We focus on two challenging vision tasks: (i) estimating tropical cyclone wind speeds from infrared satellite imagery and (ii) estimating the power output of solar panels from RGB images of the sky. By highlighting the differences between methods, our results demonstrate the need for a broad and approachable experimental framework for UQ that can be used for benchmarking UQ methods. The toolbox, example implementations, and further information are available at: https://github.com/lightning-uq-box/lightning-uq-box

On the Generalizability of Foundation Models for Crop Type Mapping

Sep 14, 2024

Abstract:Foundation models pre-trained using self-supervised and weakly-supervised learning have shown powerful transfer learning capabilities on various downstream tasks, including language understanding, text generation, and image recognition. Recently, the Earth observation (EO) field has produced several foundation models pre-trained directly on multispectral satellite imagery (e.g., Sentinel-2) for applications like precision agriculture, wildfire and drought monitoring, and natural disaster response. However, few studies have investigated the ability of these models to generalize to new geographic locations, and potential concerns of geospatial bias (models trained on data-rich developed countries not transferring well to data-scarce developing countries) remain. We investigate the ability of popular EO foundation models to transfer to new geographic regions in the agricultural domain, where differences in farming practices and class imbalance make transfer learning particularly challenging. We first select six crop classification datasets across five continents, normalizing for dataset size and harmonizing classes to focus on four major cereal grains: maize, soybean, rice, and wheat. We then compare three popular foundation models, pre-trained on SSL4EO-S12, SatlasPretrain, and ImageNet, using in-distribution (ID) and out-of-distribution (OOD) evaluation. Experiments show that pre-trained weights designed explicitly for Sentinel-2, such as SSL4EO-S12, outperform general pre-trained weights like ImageNet. Furthermore, the benefits of pre-training on OOD data are the most significant when only 10 to 100 ID training samples are used. Transfer learning and pre-training with OOD and limited ID data show promising applications, as many developing regions have scarce crop type labels. All harmonized datasets and experimental code are open-source and available for download.

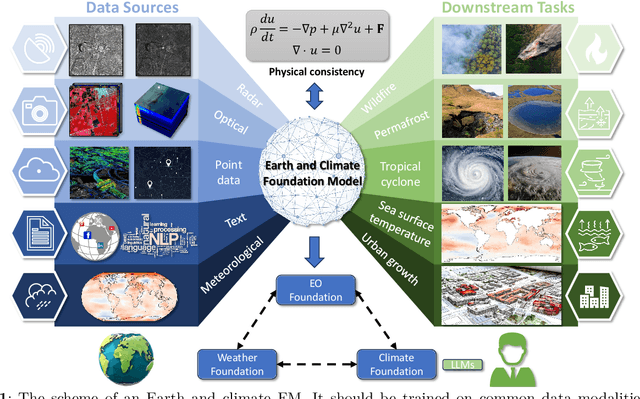

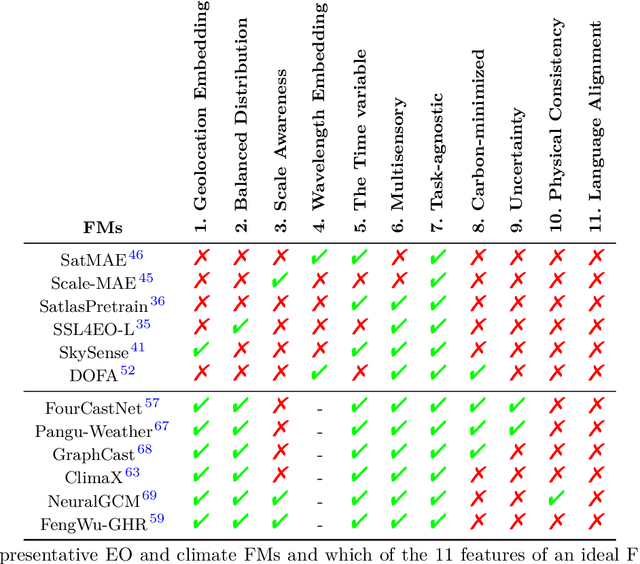

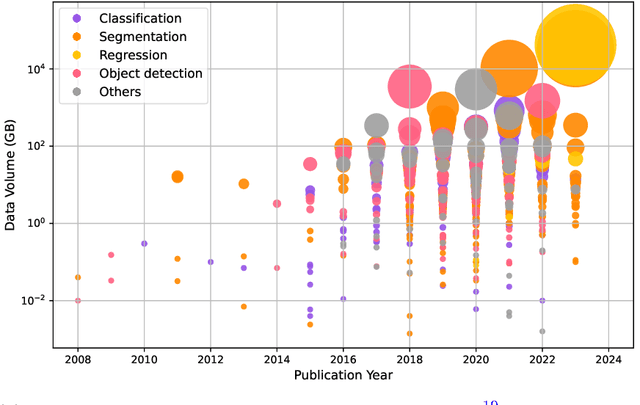

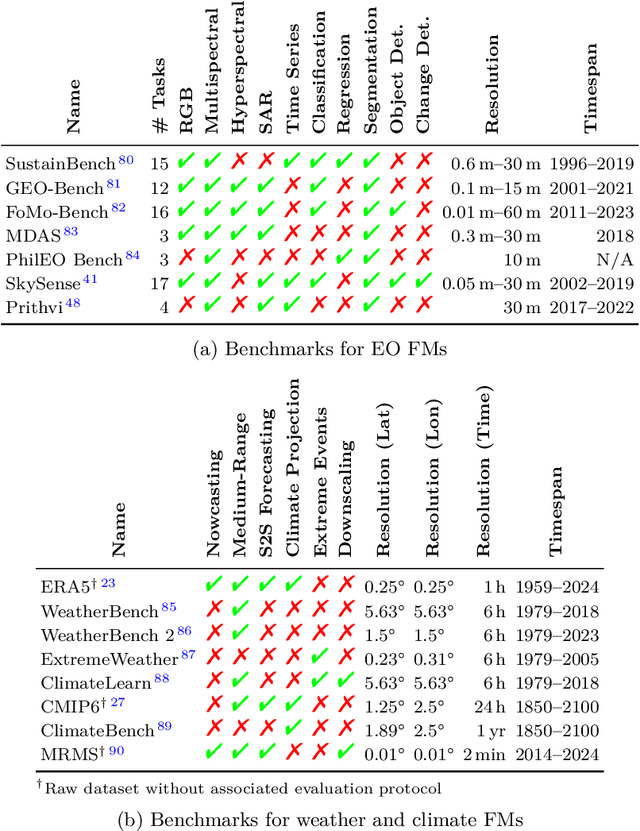

On the Foundations of Earth and Climate Foundation Models

May 07, 2024

Abstract:Foundation models have enormous potential in advancing Earth and climate sciences; however, current approaches may not be optimal, as they focus on a few basic features of a desirable Earth and climate foundation model. Crafting the ideal Earth foundation model, we define eleven features which would allow such a foundation model to be beneficial for any geoscientific downstream application in an environmental- and human-centric manner. We further shed light on the way forward to achieve the ideal model and to evaluate Earth foundation models. What comes after foundation models? Energy-efficient adaptation, adversarial defenses, and interpretability are among the emerging directions.

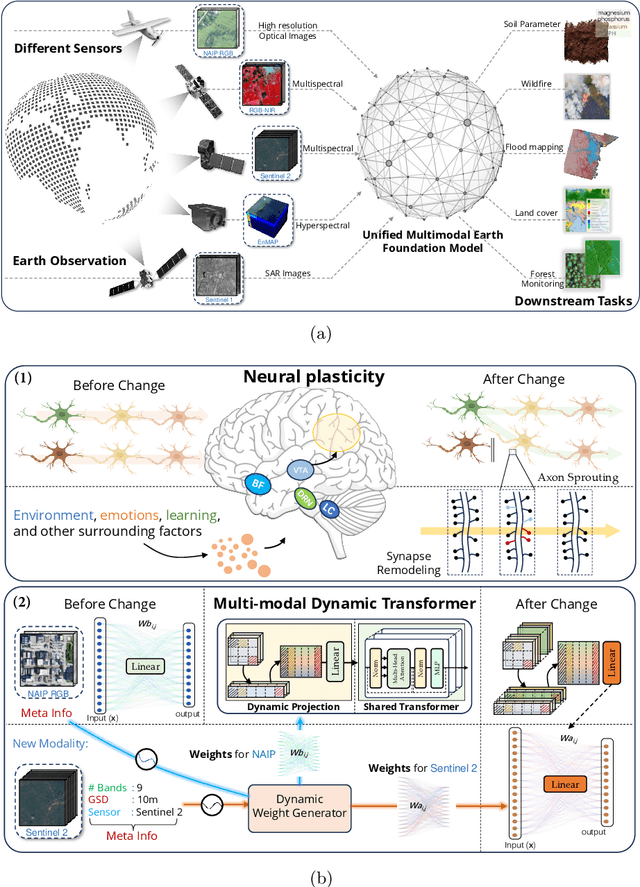

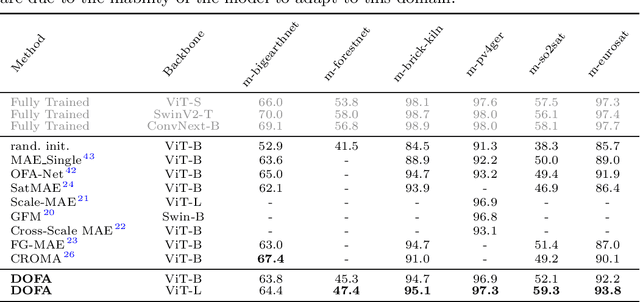

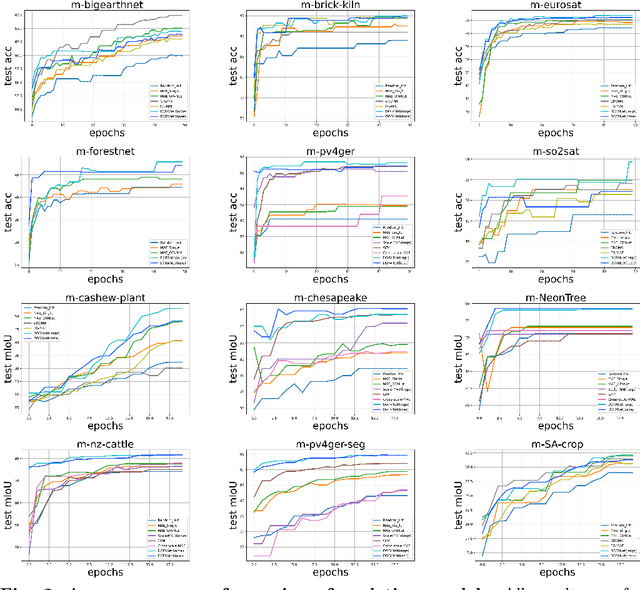

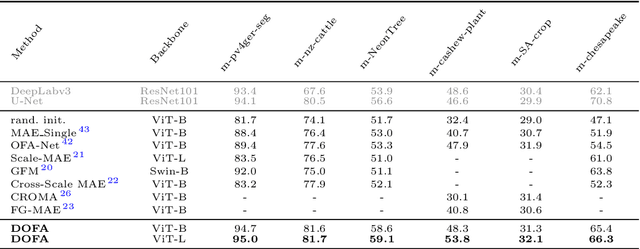

Neural Plasticity-Inspired Foundation Model for Observing the Earth Crossing Modalities

Mar 22, 2024

Abstract:The development of foundation models has revolutionized our ability to interpret the Earth's surface using satellite observational data. Traditional models have been siloed, tailored to specific sensors or data types like optical, radar, and hyperspectral, each with its own unique characteristics. This specialization hinders the potential for a holistic analysis that could benefit from the combined strengths of these diverse data sources. Our novel approach introduces the Dynamic One-For-All (DOFA) model, leveraging the concept of neural plasticity in brain science to integrate various data modalities into a single framework adaptively. This dynamic hypernetwork, adjusting to different wavelengths, enables a single versatile Transformer jointly trained on data from five sensors to excel across 12 distinct Earth observation tasks, including sensors never seen during pretraining. DOFA's innovative design offers a promising leap towards more accurate, efficient, and unified Earth observation analysis, showcasing remarkable adaptability and performance in harnessing the potential of multimodal Earth observation data.

SSL4EO-L: Datasets and Foundation Models for Landsat Imagery

Jun 15, 2023

Abstract:The Landsat program is the longest-running Earth observation program in history, with 50+ years of data acquisition by 8 satellites. The multispectral imagery captured by sensors onboard these satellites is critical for a wide range of scientific fields. Despite the increasing popularity of deep learning and remote sensing, the majority of researchers still use decision trees and random forests for Landsat image analysis due to the prevalence of small labeled datasets and lack of foundation models. In this paper, we introduce SSL4EO-L, the first ever dataset designed for Self-Supervised Learning for Earth Observation for the Landsat family of satellites (including 3 sensors and 2 product levels) and the largest Landsat dataset in history (5M image patches). Additionally, we modernize and re-release the L7 Irish and L8 Biome cloud detection datasets, and introduce the first ML benchmark datasets for Landsats 4-5 TM and Landsat 7 ETM+ SR. Finally, we pre-train the first foundation models for Landsat imagery using SSL4EO-L and evaluate their performance on multiple semantic segmentation tasks. All datasets and model weights are available via the TorchGeo (https://github.com/microsoft/torchgeo) library, making reproducibility and experimentation easy, and enabling scientific advancements in the burgeoning field of remote sensing for a myriad of downstream applications.

TorchGeo: deep learning with geospatial data

Nov 17, 2021

Abstract:Remotely sensed geospatial data are critical for applications including precision agriculture, urban planning, disaster monitoring and response, and climate change research, among others. Deep learning methods are particularly promising for modeling many remote sensing tasks given the success of deep neural networks in similar computer vision tasks and the sheer volume of remotely sensed imagery available. However, the variance in data collection methods and handling of geospatial metadata make the application of deep learning methodology to remotely sensed data nontrivial. For example, satellite imagery often includes additional spectral bands beyond red, green, and blue and must be joined to other geospatial data sources that can have differing coordinate systems, bounds, and resolutions. To help realize the potential of deep learning for remote sensing applications, we introduce TorchGeo, a Python library for integrating geospatial data into the PyTorch deep learning ecosystem. TorchGeo provides data loaders for a variety of benchmark datasets, composable datasets for generic geospatial data sources, samplers for geospatial data, and transforms that work with multispectral imagery. TorchGeo is also the first library to provide pre-trained models for multispectral satellite imagery (e.g. models that use all bands from the Sentinel 2 satellites), allowing for advances in transfer learning on downstream remote sensing tasks with limited labeled data. We use TorchGeo to create reproducible benchmark results on existing datasets and benchmark our proposed method for preprocessing geospatial imagery on-the-fly. TorchGeo is open-source and available on GitHub: https://github.com/microsoft/torchgeo.
