Ioannis Papoutsis

Magnifying change: Rapid burn scar mapping with multi-resolution, multi-source satellite imagery

Jan 14, 2026

Abstract: Delineating wildfire-affected areas using satellite imagery remains challenging due to irregular and spatially heterogeneous spectral changes across the electromagnetic spectrum. While recent deep learning approaches achieve high accuracy when high-resolution multispectral data are available, their applicability in operational settings, where a quick delineation of the burn scar shortly after a wildfire incident is required, is limited by the trade-off between spatial resolution and temporal revisit frequency of current satellite systems. To address this limitation, we propose a novel deep learning model, namely BAM-MRCD, which employs multi-resolution, multi-source satellite imagery (MODIS and Sentinel-2) for the timely production of detailed burnt area maps with high spatial and temporal resolution. Our model detects even small-scale wildfires with high accuracy, surpassing similar change detection models as well as solid baselines. All data and code are available in the GitHub repository: https://github.com/Orion-AI-Lab/BAM-MRCD.

Wildfire spread forecasting with Deep Learning

May 23, 2025

Abstract: Accurate prediction of wildfire spread is crucial for effective risk management, emergency response, and strategic resource allocation. In this study, we present a deep learning (DL)-based framework for forecasting the final extent of burned areas, using data available at the time of ignition. We leverage a spatio-temporal dataset that covers the Mediterranean region from 2006 to 2022, incorporating remote sensing data, meteorological observations, vegetation maps, land cover classifications, anthropogenic factors, topography data, and thermal anomalies. To evaluate the influence of temporal context, we conduct an ablation study examining how the inclusion of pre- and post-ignition data affects model performance, benchmarking the temporal-aware DL models against a baseline trained exclusively on ignition-day inputs. Our results indicate that multi-day observational data substantially improve predictive accuracy. In particular, the best-performing model, which incorporates a temporal window of four days before to five days after ignition, improves both the F1 score and the Intersection over Union by almost 5% compared with the baseline on the test dataset. We publicly release our dataset and models to enhance research into data-driven approaches for wildfire modeling and response.
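For readers unfamiliar with the two metrics named in the abstract above, the following is a minimal sketch of how the F1 score and Intersection over Union (IoU) can be computed for binary burned-area masks. It is not the authors' evaluation code: the function name `f1_and_iou`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def f1_and_iou(pred, truth):
    """Return (F1, IoU) for two equally long sequences of 0/1 labels.

    Hypothetical helper for illustration; 1 marks a burned pixel.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p and t)        # burned in both
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)    # predicted only
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)    # missed

    # Assumed convention: two empty masks count as a perfect match.
    f1 = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    return f1, iou


# Toy flattened masks (made-up values, not data from the paper).
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 1, 0]
f1, iou = f1_and_iou(pred, truth)
print(f"F1 = {f1:.3f}, IoU = {iou:.3f}")
```

Both metrics ignore true negatives, which is why they are common for burned-area mapping, where unburned pixels usually dominate. For a single binary mask they are related by F1 = 2·IoU / (1 + IoU).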

Hephaestus Minicubes: A Global, Multi-Modal Dataset for Volcanic Unrest Monitoring

May 23, 2025

Abstract: Ground deformation is regarded in volcanology as a key precursor signal preceding volcanic eruptions. Satellite-based Interferometric Synthetic Aperture Radar (InSAR) enables consistent, global-scale deformation tracking; however, deep learning methods remain largely unexplored in this domain, mainly due to the lack of a curated machine learning dataset. In this work, we build on the existing Hephaestus dataset and introduce Hephaestus Minicubes, a global collection of 38 spatiotemporal datacubes offering high-resolution, multi-source and multi-temporal information, covering 44 of the world's most active volcanoes over a 7-year period. Each spatiotemporal datacube integrates InSAR products, topographic data, and atmospheric variables, which are known to introduce signal delays that can mimic ground deformation in InSAR imagery. Furthermore, we provide expert annotations detailing the type, intensity and spatial extent of deformation events, along with rich text descriptions of the observed scenes. Finally, we present a comprehensive benchmark, demonstrating Hephaestus Minicubes' ability to support volcanic unrest monitoring as a multi-modal, multi-temporal classification and semantic segmentation task, establishing strong baselines with state-of-the-art architectures. This work aims to advance machine learning research in volcanic monitoring, contributing to the growing integration of data-driven methods within Earth science applications.

Probabilistic Machine Learning for Noisy Labels in Earth Observation

Apr 04, 2025

Abstract: Label noise poses a significant challenge in Earth Observation (EO), often degrading the performance and reliability of supervised Machine Learning (ML) models. Yet, given the critical nature of several EO applications, developing robust and trustworthy ML solutions is essential. In this study, we take a step in this direction by leveraging probabilistic ML to model input-dependent label noise and quantify data uncertainty in EO tasks, accounting for the unique noise sources inherent in the domain. We train uncertainty-aware probabilistic models across a broad range of high-impact EO applications (spanning diverse noise sources, input modalities, and ML configurations) and introduce a dedicated pipeline to assess their accuracy and reliability. Our experimental results show that the uncertainty-aware models consistently outperform the standard deterministic approaches across most datasets and evaluation metrics. Moreover, through rigorous uncertainty evaluation, we validate the reliability of the predicted uncertainty estimates, enhancing the interpretability of model predictions. Our findings emphasize the importance of modeling label noise and incorporating uncertainty quantification in EO, paving the way for more accurate, reliable, and trustworthy ML solutions in the field.

Towards a Unified Copernicus Foundation Model for Earth Vision

Mar 14, 2025

Abstract: Advances in Earth observation (EO) foundation models have unlocked the potential of big satellite data to learn generic representations from space, benefiting a wide range of downstream applications crucial to our planet. However, most existing efforts remain limited to fixed spectral sensors, focus solely on the Earth's surface, and overlook valuable metadata beyond imagery. In this work, we take a step towards next-generation EO foundation models with three key components: 1) Copernicus-Pretrain, a massive-scale pretraining dataset that integrates 18.7M aligned images from all major Copernicus Sentinel missions, spanning from the Earth's surface to its atmosphere; 2) Copernicus-FM, a unified foundation model capable of processing any spectral or non-spectral sensor modality using extended dynamic hypernetworks and flexible metadata encoding; and 3) Copernicus-Bench, a systematic evaluation benchmark with 15 hierarchical downstream tasks ranging from preprocessing to specialized applications for each Sentinel mission. Our dataset, model, and benchmark greatly improve the scalability, versatility, and multimodal adaptability of EO foundation models, while also creating new opportunities to connect EO, weather, and climate research. Code, datasets, and models are available at https://github.com/zhu-xlab/Copernicus-FM.

On the Generalization of Representation Uncertainty in Earth Observation

Mar 10, 2025

Abstract: Recent advances in Computer Vision have introduced the concept of pretrained representation uncertainty, enabling zero-shot uncertainty estimation. This holds significant potential for Earth Observation (EO), where trustworthiness is critical, yet the complexity of EO data poses challenges to uncertainty-aware methods. In this work, we investigate the generalization of representation uncertainty in EO, considering the domain's unique semantic characteristics. We pretrain uncertainties on large EO datasets and propose an evaluation framework to assess their zero-shot performance in multi-label classification and segmentation EO tasks. Our findings reveal that, unlike uncertainties pretrained on natural images, EO-pretraining exhibits strong generalization across unseen EO domains, geographic locations, and target granularities, while maintaining sensitivity to variations in ground sampling distance. We demonstrate the practical utility of pretrained uncertainties, showcasing their alignment with task-specific uncertainties in downstream tasks, their sensitivity to real-world EO image noise, and their ability to generate spatial uncertainty estimates out-of-the-box. Initiating the discussion on representation uncertainty in EO, our study provides insights into its strengths and limitations, paving the way for future research in the field. Code and weights are available at: https://github.com/Orion-AI-Lab/EOUncertaintyGeneralization.

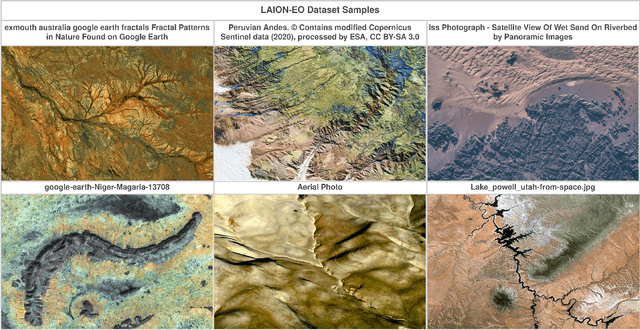

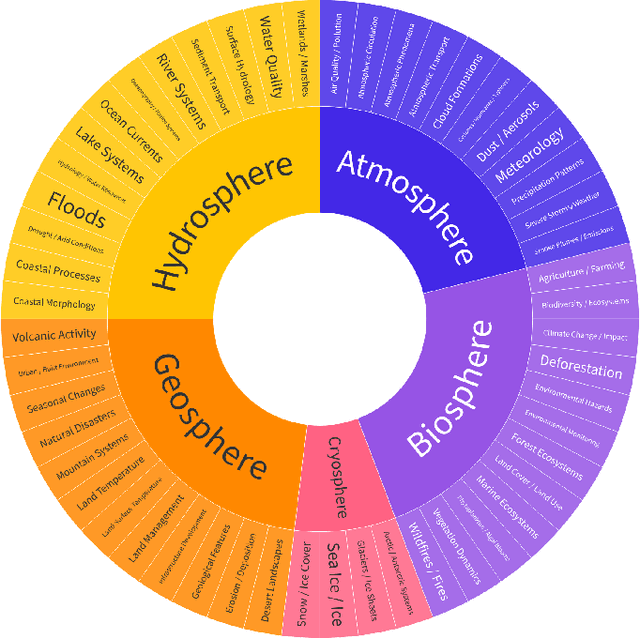

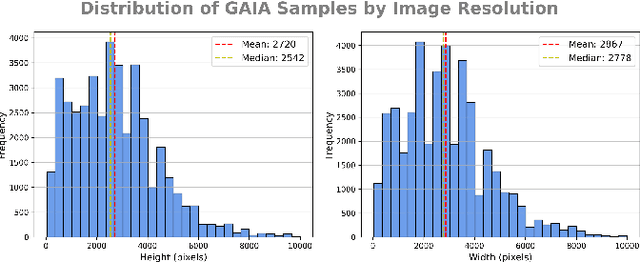

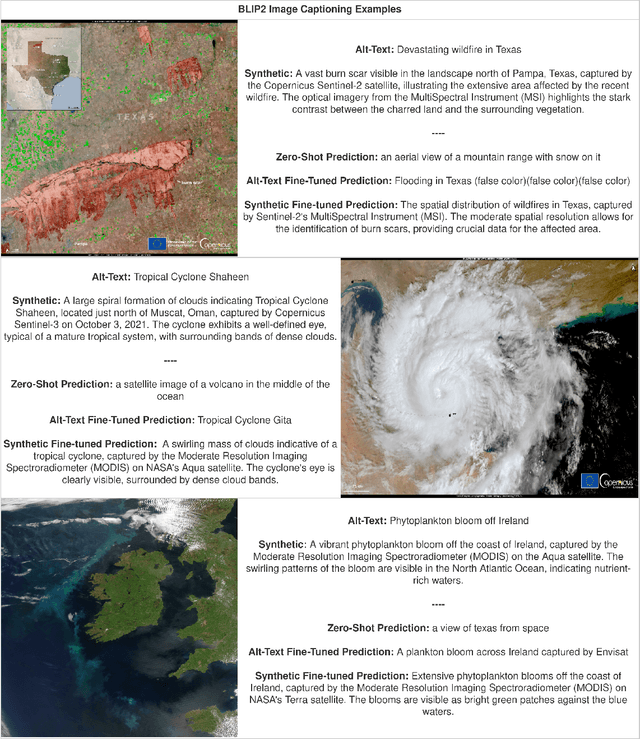

GAIA: A Global, Multi-modal, Multi-scale Vision-Language Dataset for Remote Sensing Image Analysis

Feb 13, 2025

Abstract: The continuous operation of Earth-orbiting satellites generates vast and ever-growing archives of Remote Sensing (RS) images. Natural language presents an intuitive interface for accessing, querying, and interpreting the data from such archives. However, existing Vision-Language Models (VLMs) are predominantly trained on web-scraped, noisy image-text data, exhibiting limited exposure to the specialized domain of RS. This deficiency results in poor performance on RS-specific tasks, as commonly used datasets often lack detailed, scientifically accurate textual descriptions and instead focus solely on attributes like date and location. To bridge this critical gap, we introduce GAIA, a novel dataset designed for multi-scale, multi-sensor, and multi-modal RS image analysis. GAIA comprises 205,150 meticulously curated RS image-text pairs, representing a diverse range of RS modalities associated with different spatial resolutions. Unlike existing vision-language datasets in RS, GAIA specifically focuses on capturing a diverse range of RS applications, providing unique information about environmental changes, natural disasters, and various other dynamic phenomena. The dataset is spatially and temporally balanced, spanning the globe and covering the last 25 years. GAIA's construction involved a two-stage process: (1) targeted web-scraping of images and accompanying text from reputable RS-related sources, and (2) generation of five high-quality, scientifically grounded synthetic captions for each image using carefully crafted prompts that leverage the advanced vision-language capabilities of GPT-4o. Our extensive experiments, including fine-tuning of CLIP and BLIP2 models, demonstrate that GAIA significantly improves performance on RS image classification, cross-modal retrieval and image captioning tasks.

FireCastNet: Earth-as-a-Graph for Seasonal Fire Prediction

Feb 03, 2025

Abstract: With climate change expected to exacerbate fire weather conditions, the accurate and timely anticipation of wildfires becomes increasingly crucial for disaster mitigation. In this study, we utilize SeasFire, a comprehensive global wildfire dataset with climate, vegetation, oceanic indices, and human-related variables, to enable seasonal wildfire forecasting with machine learning. For the predictive analysis, we present FireCastNet, a novel architecture which combines a 3D convolutional encoder with GraphCast, originally developed for global short-term weather forecasting using graph neural networks. FireCastNet is trained to capture the context leading to wildfires at different spatial and temporal scales. Our investigation focuses on assessing the effectiveness of our model in predicting the presence of burned areas at varying forecasting time horizons globally, extending up to six months into the future, and on how different spatial and/or temporal contexts affect the performance. Our findings demonstrate the potential of deep learning models in seasonal fire forecasting; longer input time series lead to more robust predictions, while integrating spatial information to capture wildfire spatio-temporal dynamics boosts performance. Finally, our results hint that in order to enhance performance at longer forecasting horizons, a larger spatial receptive field needs to be considered.

AI for Extreme Event Modeling and Understanding: Methodologies and Challenges

Jun 28, 2024

Abstract: In recent years, artificial intelligence (AI) has deeply impacted various fields, including Earth system sciences. Here, AI improved weather forecasting, model emulation, parameter estimation, and the prediction of extreme events. However, the latter comes with specific challenges, such as developing accurate predictors from noisy, heterogeneous and limited annotated data. This paper reviews how AI is being used to analyze extreme events (like floods, droughts, wildfires and heatwaves), highlighting the importance of creating accurate, transparent, and reliable AI models. We discuss the hurdles of dealing with limited data, integrating information in real-time, deploying models, and making them understandable, all crucial for gaining the trust of stakeholders and meeting regulatory needs. We provide an overview of how AI can help identify and explain extreme events more effectively, improving disaster response and communication. We emphasize the need for collaboration across different fields to create AI solutions that are practical, understandable, and trustworthy for analyzing and predicting extreme events. Such collaborative efforts aim to enhance disaster readiness and disaster risk reduction.

Seasonal Fire Prediction using Spatio-Temporal Deep Neural Networks

Apr 09, 2024

Abstract: With climate change expected to exacerbate fire weather conditions, the accurate anticipation of wildfires on a global scale becomes increasingly crucial for disaster mitigation. In this study, we utilize SeasFire, a comprehensive global wildfire dataset with climate, vegetation, oceanic indices, and human-related variables, to enable seasonal wildfire forecasting with machine learning. For the predictive analysis, we train deep learning models with different architectures that capture the spatio-temporal context leading to wildfires. Our investigation focuses on assessing the effectiveness of these models in predicting the presence of burned areas at varying forecasting time horizons globally, extending up to six months into the future, and on how different spatial and/or temporal contexts affect the performance of the models. Our findings demonstrate the great potential of deep learning models in seasonal fire forecasting; longer input time series lead to more robust predictions across varying forecasting horizons, while integrating spatial information to capture wildfire spatio-temporal dynamics boosts performance. Finally, our results hint that in order to enhance performance at longer forecasting horizons, a larger spatial receptive field needs to be considered.
