Adrian Höhl

AI for Extreme Event Modeling and Understanding: Methodologies and Challenges

Jun 28, 2024

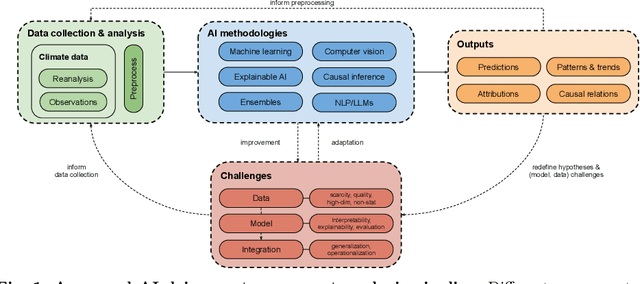

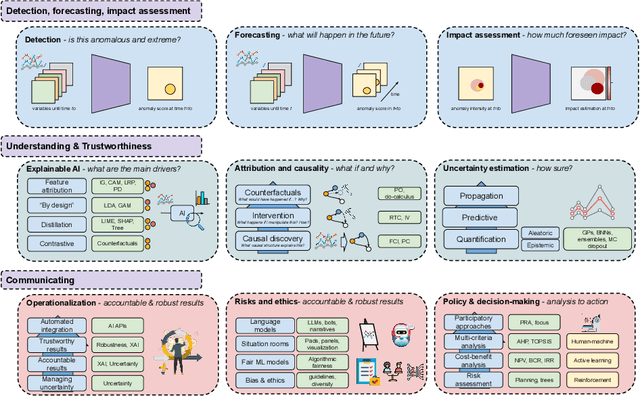

Abstract: In recent years, artificial intelligence (AI) has deeply impacted various fields, including Earth system sciences. Here, AI has improved weather forecasting, model emulation, parameter estimation, and the prediction of extreme events. However, the latter comes with specific challenges, such as developing accurate predictors from noisy, heterogeneous, and sparsely annotated data. This paper reviews how AI is being used to analyze extreme events (like floods, droughts, wildfires, and heatwaves), highlighting the importance of creating accurate, transparent, and reliable AI models. We discuss the hurdles of dealing with limited data, integrating information in real time, deploying models, and making them understandable, all of which are crucial for gaining the trust of stakeholders and meeting regulatory needs. We provide an overview of how AI can help identify and explain extreme events more effectively, improving disaster response and communication. We emphasize the need for collaboration across different fields to create AI solutions that are practical, understandable, and trustworthy for analyzing and predicting extreme events. Such collaborative efforts aim to enhance disaster readiness and disaster risk reduction.

Opening the Black-Box: A Systematic Review on Explainable AI in Remote Sensing

Feb 21, 2024

Abstract: In recent years, black-box machine learning approaches have become a dominant modeling paradigm for knowledge extraction in Remote Sensing. Despite the potential benefits of uncovering the inner workings of these models with explainable AI, a comprehensive overview summarizing the explainable AI methods used and their objectives, findings, and challenges in Remote Sensing applications is still missing. In this paper, we address this gap by performing a systematic review to identify the key trends in how explainable AI is used in Remote Sensing and to shed light on novel explainable AI approaches and emerging directions that tackle specific Remote Sensing challenges. We also reveal the common patterns of explanation interpretation, discuss the extracted scientific insights in Remote Sensing, and reflect on the approaches used to evaluate explainable AI methods. Our review provides a complete summary of the state of the art in the field. Further, we give a detailed outlook on the challenges and promising research directions, representing a basis for novel methodological development and a useful starting point for new researchers in the field of explainable AI in Remote Sensing.