Ivica Obadic

MedGNN: Capturing the Links Between Urban Characteristics and Medical Prescriptions

Apr 07, 2025

Abstract: Understanding how urban socio-demographic and environmental factors relate to health is essential for public health and urban planning. However, traditional statistical methods struggle with nonlinear effects, while machine learning models often fail to capture geographical (nearby areas being more similar) and topological (unequal connectivity between places) effects in an interpretable way. To address this, we propose MedGNN, a spatio-topologically explicit framework that constructs a 2-hop spatial graph, integrating positional and locational node embeddings with urban characteristics in a graph neural network. Applied to MEDSAT, a comprehensive dataset covering over 150 environmental and socio-demographic factors and six prescription outcomes (depression, anxiety, diabetes, hypertension, asthma, and opioids) across 4,835 Greater London neighborhoods, MedGNN improved predictions by over 25% on average compared to baseline methods. Using depression prescriptions as a case study, we analyzed graph embeddings via geographical principal component analysis, identifying findings that: align with prior research (e.g., higher antidepressant prescriptions among older and White populations), contribute to ongoing debates (e.g., greenery linked to higher and NO2 to lower prescriptions), and warrant further study (e.g., canopy evaporation correlated with fewer prescriptions). These results demonstrate the potential of MedGNN, and more broadly of carefully applied machine learning, to advance transdisciplinary public health research.

i-WiViG: Interpretable Window Vision GNN

Mar 11, 2025

Abstract: Deep learning models based on graph neural networks have emerged as a popular approach for solving computer vision problems. They encode the image into a graph structure and can be beneficial for efficiently capturing the long-range dependencies typically present in remote sensing imagery. However, an important drawback of these methods is their black-box nature, which may hamper their wider usage in critical applications. In this work, we tackle the self-interpretability of graph-based vision models by proposing our Interpretable Window Vision GNN (i-WiViG) approach, which provides explanations by automatically identifying the relevant subgraphs for the model prediction. This is achieved with window-based image graph processing that constrains the node receptive field to a local image region and by using a self-interpretable graph bottleneck that ranks the importance of the long-range relations between the image regions. We evaluate our approach on remote sensing classification and regression tasks, showing it achieves competitive performance while providing inherent and faithful explanations through the identified relations. Further, the quantitative evaluation reveals that our model reduces the infidelity of post-hoc explanations compared to other Vision GNN models, without sacrificing explanation sparsity.

Contrastive Pretraining for Visual Concept Explanations of Socioeconomic Outcomes

Apr 15, 2024

Abstract: Predicting socioeconomic indicators from satellite imagery with deep learning has become an increasingly popular research direction. Post-hoc concept-based explanations can be an important step towards broader adoption of these models in policy-making as they enable the interpretation of socioeconomic outcomes based on visual concepts that are intuitive to humans. In this paper, we study the interplay between representation learning using an additional task-specific contrastive loss and post-hoc concept explainability for socioeconomic studies. Our results on two different geographical locations and tasks indicate that the task-specific pretraining imposes a continuous ordering of the latent space embeddings according to the socioeconomic outcomes. This improves the model's interpretability as it enables the latent space of the model to associate urban concepts with continuous intervals of socioeconomic outcomes. Further, we illustrate how analyzing the model's conceptual sensitivity for the intervals of socioeconomic outcomes can yield new insights for urban studies.

Opening the Black-Box: A Systematic Review on Explainable AI in Remote Sensing

Feb 21, 2024

Abstract: In recent years, black-box machine learning approaches have become a dominant modeling paradigm for knowledge extraction in Remote Sensing. Despite the potential benefits of uncovering the inner workings of these models with explainable AI, a comprehensive overview summarizing the explainable AI methods used and their objectives, findings, and challenges in Remote Sensing applications is still missing. In this paper, we address this issue by performing a systematic review to identify the key trends of how explainable AI is used in Remote Sensing and shed light on novel explainable AI approaches and emerging directions that tackle specific Remote Sensing challenges. We also reveal the common patterns of explanation interpretation, discuss the extracted scientific insights in Remote Sensing, and reflect on the approaches used for evaluating explainable AI methods. Our review provides a complete summary of the state-of-the-art in the field. Further, we give a detailed outlook on the challenges and promising research directions, representing a basis for novel methodological development and a useful starting point for new researchers in the field of explainable AI in Remote Sensing.

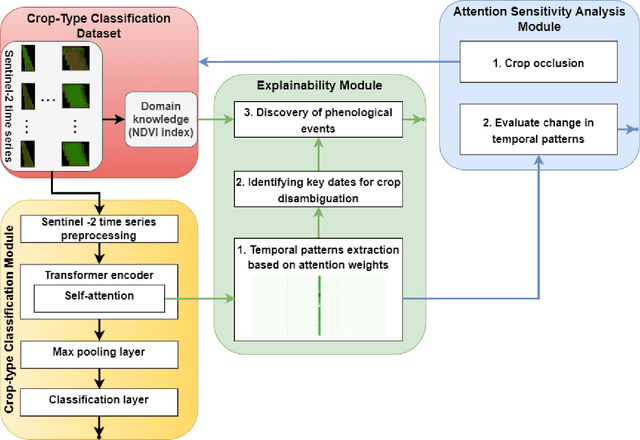

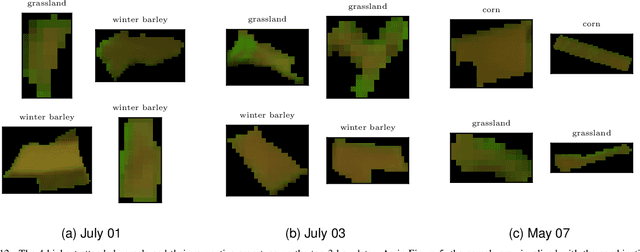

Exploring Self-Attention for Crop-type Classification Explainability

Oct 24, 2022

Abstract: Automated crop-type classification using Sentinel-2 satellite time series is essential to support agriculture monitoring. Recently, deep learning models based on transformer encoders have become a promising approach for crop-type classification. Using explainable machine learning to reveal the inner workings of these models is an important step towards improving stakeholders' trust and efficient agriculture monitoring. In this paper, we introduce a novel explainability framework that aims to shed light on the essential crop disambiguation patterns learned by a state-of-the-art transformer encoder model. More specifically, we process the attention weights of a trained transformer encoder to reveal the critical dates for crop disambiguation and use domain knowledge to uncover the phenological events that support the model performance. We also present a sensitivity analysis approach to better understand the capability of attention to reveal crop-specific phenological events. We report compelling results showing that attention patterns strongly relate to key dates, and consequently, to the critical phenological events for crop-type classification. These findings might be relevant for improving stakeholder trust and optimizing agriculture monitoring processes. Additionally, our sensitivity analysis demonstrates the limitation of attention weights for identifying the important events in the crop phenology, as we empirically show that the unveiled phenological events depend on the other crops in the data considered during training.
