Andreas Karavias

Hephaestus Minicubes: A Global, Multi-Modal Dataset for Volcanic Unrest Monitoring

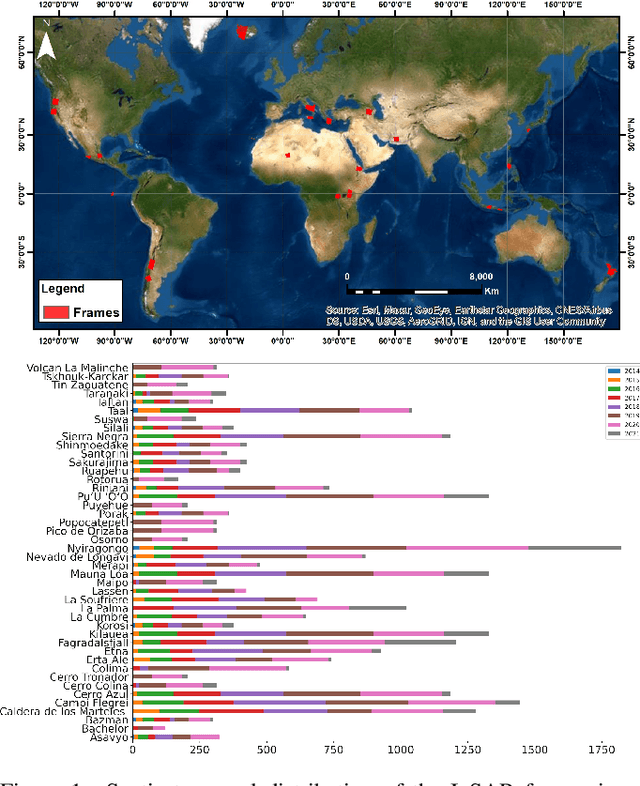

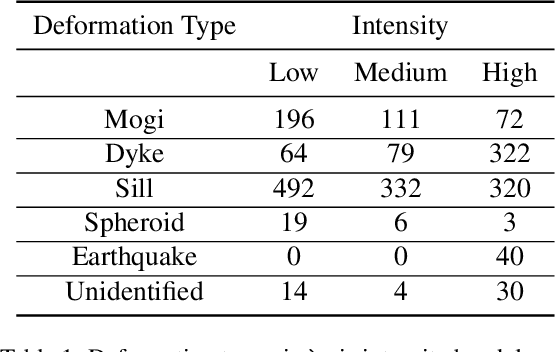

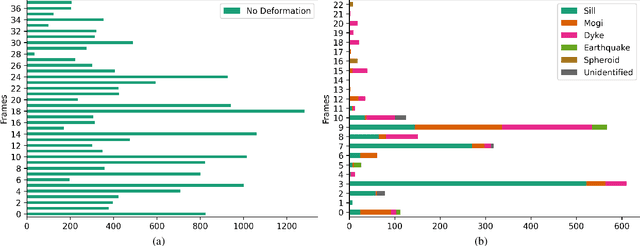

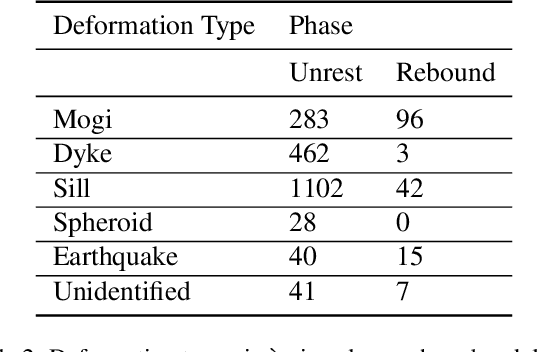

May 23, 2025

Abstract: Ground deformation is regarded in volcanology as a key precursor signal preceding volcanic eruptions. Satellite-based Interferometric Synthetic Aperture Radar (InSAR) enables consistent, global-scale deformation tracking; however, deep learning methods remain largely unexplored in this domain, mainly due to the lack of a curated machine learning dataset. In this work, we build on the existing Hephaestus dataset, and introduce Hephaestus Minicubes, a global collection of 38 spatiotemporal datacubes offering high resolution, multi-source and multi-temporal information, covering 44 of the world's most active volcanoes over a 7-year period. Each spatiotemporal datacube integrates InSAR products, topographic data, as well as atmospheric variables which are known to introduce signal delays that can mimic ground deformation in InSAR imagery. Furthermore, we provide expert annotations detailing the type, intensity and spatial extent of deformation events, along with rich text descriptions of the observed scenes. Finally, we present a comprehensive benchmark, demonstrating Hephaestus Minicubes' ability to support volcanic unrest monitoring as a multi-modal, multi-temporal classification and semantic segmentation task, establishing strong baselines with state-of-the-art architectures. This work aims to advance machine learning research in volcanic monitoring, contributing to the growing integration of data-driven methods within Earth science applications.

Tiered approach for rapid damage characterisation of infrastructure enabled by remote sensing and deep learning technologies

Feb 01, 2024

Abstract: Critical infrastructure such as bridges are systematically targeted during wars and conflicts. This is because critical infrastructure is vital for enabling connectivity and transportation of people and goods, and hence, underpinning the national and international defence planning and economic growth. Mass destruction of bridges, along with minimal or no accessibility to these assets during natural and anthropogenic disasters, prevents us from delivering rapid recovery. As a result, systemic resilience is drastically reduced. A solution to this challenge is to use technology for stand-off observations. Yet, no method exists to characterise damage at different scales, i.e. regional, asset, and structural (component), and more so there is little or no systematic correlation between assessments at scale. We propose an integrated three-level tiered approach to fill this capability gap, and we demonstrate the methods for damage characterisation enabled by fit-for-purpose digital technologies. Next, this method is applied and validated to a case study in Ukraine that includes 17 bridges. From macro to micro, we deploy technology at scale, from Sentinel-1 SAR images, crowdsourced information, and high-resolution images to deep learning for damaged infrastructure. For the first time, the interferometric coherence difference and semantic segmentation of images were deployed to improve the reliability of damage characterisations from regional to infrastructure component level, when enhanced assessment accuracy is required. This integrated method improves the speed of decision-making, and thus, enhances resilience. Keywords: critical infrastructure, damage characterisation, targeted attacks, restoration

Kuro Siwo: 12.1 billion $m^2$ under the water. A global multi-temporal satellite dataset for rapid flood mapping

Nov 18, 2023

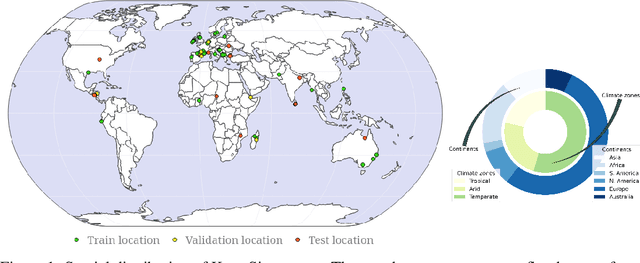

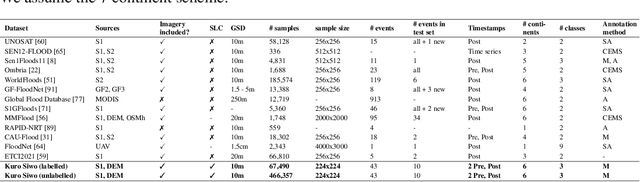

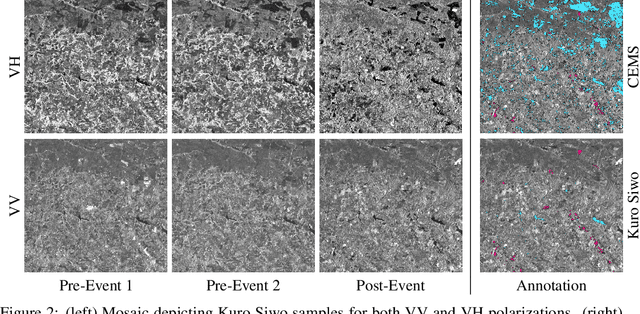

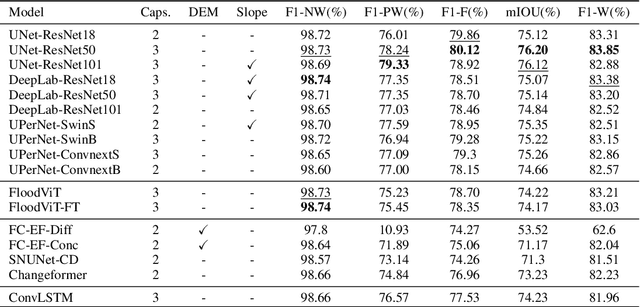

Abstract: Global floods, exacerbated by climate change, pose severe threats to human life, infrastructure, and the environment. This urgency is highlighted by recent catastrophic events in Pakistan and New Zealand, underlining the critical need for precise flood mapping for guiding restoration efforts, understanding vulnerabilities, and preparing for future events. While Synthetic Aperture Radar (SAR) offers day-and-night, all-weather imaging capabilities, harnessing it for deep learning is hindered by the absence of a large annotated dataset. To bridge this gap, we introduce Kuro Siwo, a meticulously curated multi-temporal dataset, spanning 32 flood events globally. Our dataset maps more than 63 billion $m^2$ of land, with 12.1 billion of them being either a flooded area or a permanent water body. Kuro Siwo stands out for its unparalleled annotation quality to facilitate rapid flood mapping in a supervised setting. We also augment learning by including a large unlabeled set of SAR samples, aimed at self-supervised pretraining. We provide an extensive benchmark and strong baselines for a diverse set of flood events from Europe, America, Africa and Australia. Our benchmark demonstrates the quality of Kuro Siwo annotations, training models that can achieve $\approx$ 85% and $\approx$ 87% in F1-score for flooded areas and general water detection respectively. This work calls on the deep learning community to develop solution-driven algorithms for rapid flood mapping, with the potential to aid civil protection and humanitarian agencies amid climate change challenges. Our code and data will be made available at https://github.com/Orion-AI-Lab/KuroSiwo

Hephaestus: A large scale multitask dataset towards InSAR understanding

Apr 20, 2022

Abstract: Synthetic Aperture Radar (SAR) data and Interferometric SAR (InSAR) products in particular, are one of the largest sources of Earth Observation data. InSAR provides unique information on diverse geophysical processes and geology, and on the geotechnical properties of man-made structures. However, there are only a limited number of applications that exploit the abundance of InSAR data and deep learning methods to extract such knowledge. The main barrier has been the lack of a large curated and annotated InSAR dataset, which would be costly to create and would require an interdisciplinary team of experts experienced on InSAR data interpretation. In this work, we put the effort to create and make available the first of its kind, manually annotated dataset that consists of 19,919 individual Sentinel-1 interferograms acquired over 44 different volcanoes globally, which are split into 216,106 InSAR patches. The annotated dataset is designed to address different computer vision problems, including volcano state classification, semantic segmentation of ground deformation, detection and classification of atmospheric signals in InSAR imagery, interferogram captioning, text to InSAR generation, and InSAR image quality assessment.
