Nassim Ait Ali Braham

HyBiomass: Global Hyperspectral Imagery Benchmark Dataset for Evaluating Geospatial Foundation Models in Forest Aboveground Biomass Estimation

Jun 12, 2025

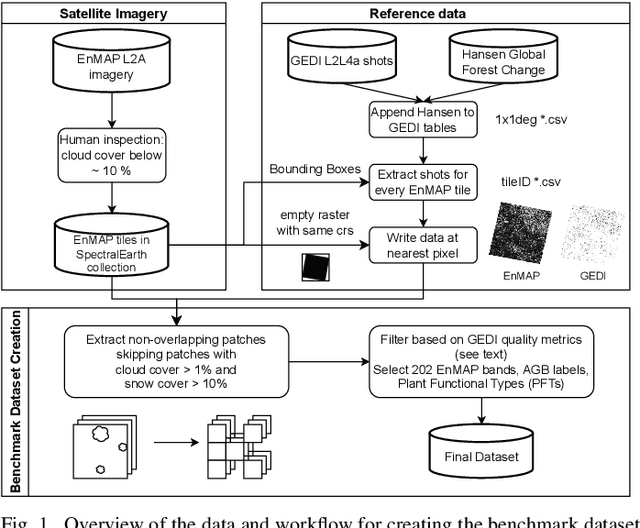

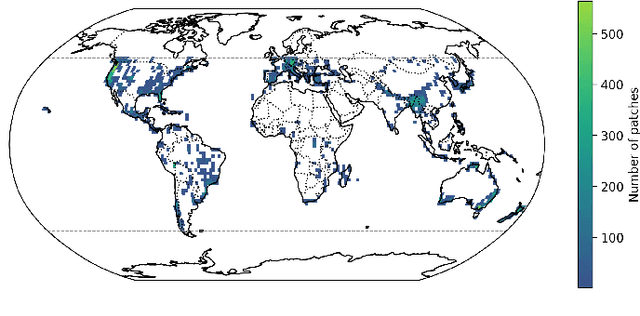

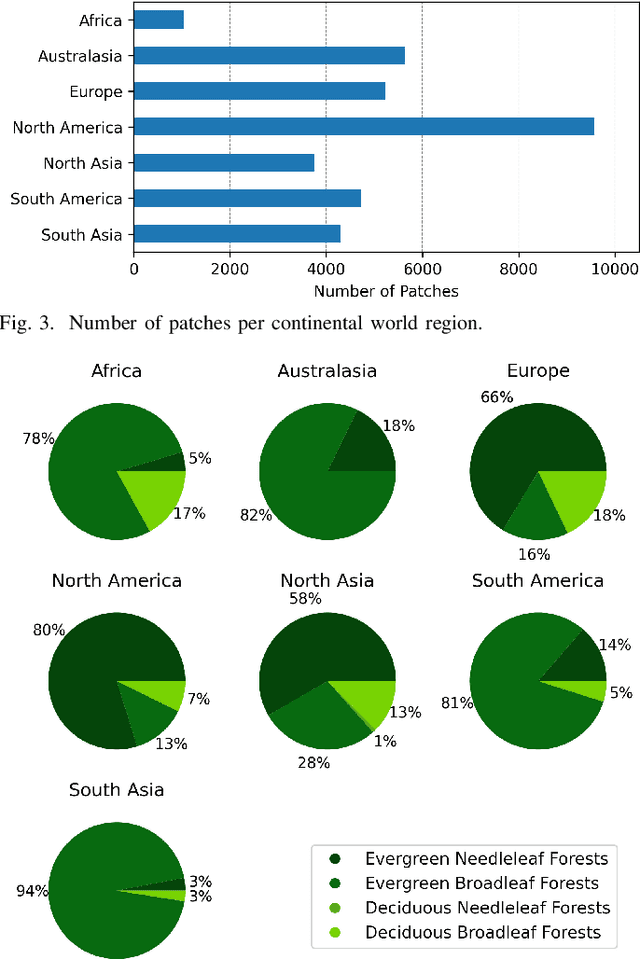

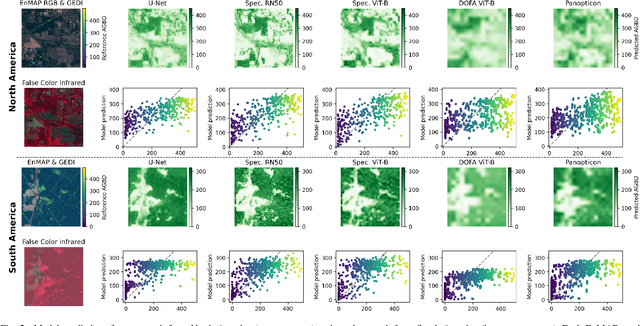

Abstract: Comprehensive evaluation of geospatial foundation models (Geo-FMs) requires benchmarking across diverse tasks, sensors, and geographic regions. However, most existing benchmark datasets are limited to segmentation or classification tasks, and focus on specific geographic areas. To address this gap, we introduce a globally distributed dataset for forest aboveground biomass (AGB) estimation, a pixel-wise regression task. This benchmark dataset combines co-located hyperspectral imagery (HSI) from the Environmental Mapping and Analysis Program (EnMAP) satellite and predictions of AGB density estimates derived from the Global Ecosystem Dynamics Investigation lidars, covering seven continental regions. Our experimental results on this dataset demonstrate that the evaluated Geo-FMs can match or, in some cases, surpass the performance of a baseline U-Net, especially when fine-tuning the encoder. We also find that the performance difference between the U-Net and Geo-FMs depends on the dataset size for each region and highlight the importance of the token patch size in the Vision Transformer backbone for accurate predictions in pixel-wise regression tasks. By releasing this globally distributed hyperspectral benchmark dataset, we aim to facilitate the development and evaluation of Geo-FMs for HSI applications. Leveraging this dataset additionally enables research into geographic bias and generalization capacity of Geo-FMs. The dataset and source code will be made publicly available.

Hyperspectral Vision Transformers for Greenhouse Gas Estimations from Space

Apr 23, 2025

Abstract: Hyperspectral imaging provides detailed spectral information and holds significant potential for monitoring of greenhouse gases (GHGs). However, its application is constrained by limited spatial coverage and infrequent revisit times. In contrast, multispectral imaging offers broader spatial and temporal coverage but often lacks the spectral detail that can enhance GHG detection. To address these challenges, this study proposes a spectral transformer model that synthesizes hyperspectral data from multispectral inputs. The model is pre-trained via a band-wise masked autoencoder and subsequently fine-tuned on spatio-temporally aligned multispectral-hyperspectral image pairs. The resulting synthetic hyperspectral data retain the spatial and temporal benefits of multispectral imagery and improve GHG prediction accuracy relative to using multispectral data alone. This approach effectively bridges the trade-off between spectral resolution and coverage, highlighting its potential to advance atmospheric monitoring by combining the strengths of hyperspectral and multispectral systems with self-supervised deep learning.

Prospects for Mitigating Spectral Variability in Tropical Species Classification Using Self-Supervised Learning

Mar 17, 2025

Abstract: Airborne hyperspectral imaging is a promising method for identifying tropical species, but spectral variability between acquisitions hinders consistent results. This paper proposes using Self-Supervised Learning (SSL) to encode spectral features that are robust to abiotic variability and relevant for species identification. By employing the state-of-the-art Barlow-Twins approach on repeated spectral acquisitions, we demonstrate the ability to develop stable features. For the classification of 40 tropical species, experiments show that these features can outperform typical reflectance products in terms of robustness to spectral variability by 10 points of accuracy across dates.

* 5 pages, 3 figures, published in the proceedings of the "2024 14th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS)"

Multispectral to Hyperspectral using Pretrained Foundational model

Feb 26, 2025

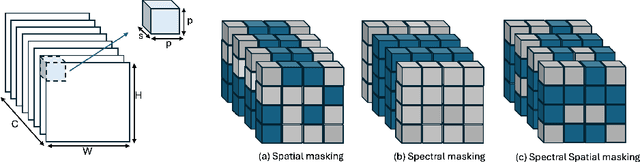

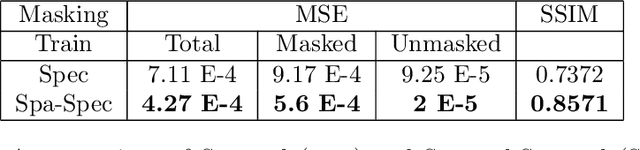

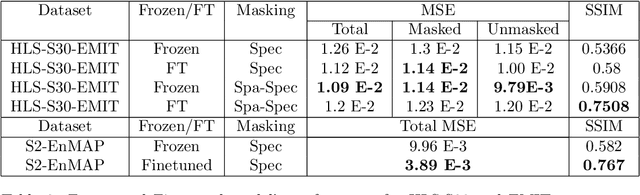

Abstract: Hyperspectral imaging provides detailed spectral information, offering significant potential for monitoring greenhouse gases like CH4 and NO2. However, its application is constrained by limited spatial coverage and infrequent revisit times. In contrast, multispectral imaging delivers broader spatial and temporal coverage but lacks the spectral granularity required for precise GHG detection. To address these challenges, this study proposes Spectral and Spatial-Spectral transformer models that reconstruct hyperspectral data from multispectral inputs. The models in this paper are pretrained on EnMAP and EMIT datasets and fine-tuned on spatio-temporally aligned (Sentinel-2, EnMAP) and (HLS-S30, EMIT) image pairs respectively. Our model has the potential to enhance atmospheric monitoring by combining the strengths of hyperspectral and multispectral imaging systems.

SpectralEarth: Training Hyperspectral Foundation Models at Scale

Aug 15, 2024

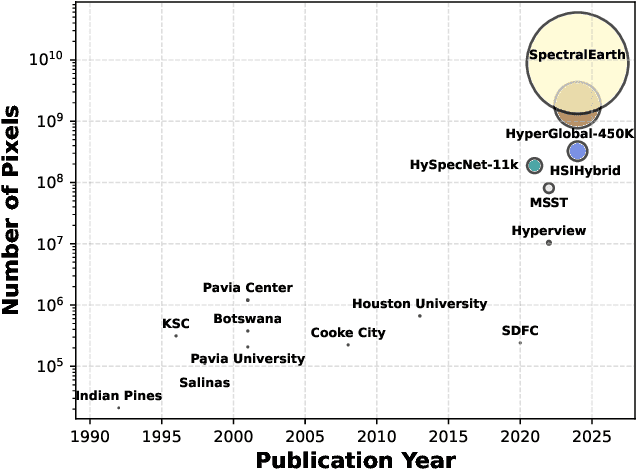

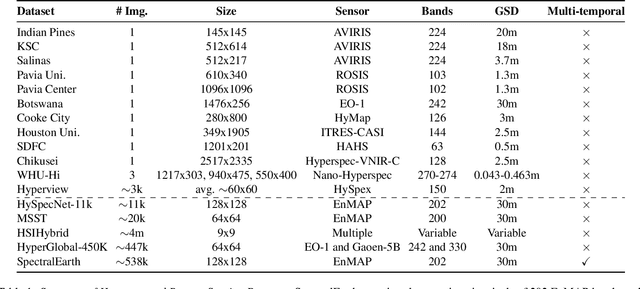

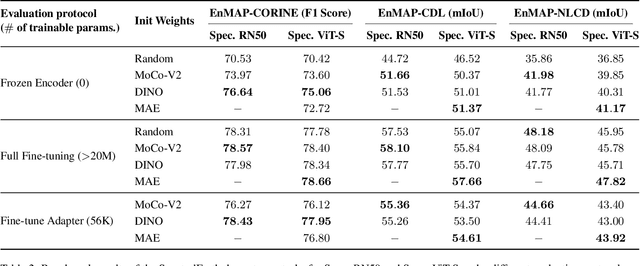

Abstract: Foundation models have triggered a paradigm shift in computer vision and are increasingly being adopted in remote sensing, particularly for multispectral imagery. Yet, their potential in hyperspectral imaging (HSI) remains untapped due to the absence of comprehensive and globally representative hyperspectral datasets. To close this gap, we introduce SpectralEarth, a large-scale multi-temporal dataset designed to pretrain hyperspectral foundation models leveraging data from the Environmental Mapping and Analysis Program (EnMAP). SpectralEarth comprises 538,974 image patches covering 415,153 unique locations from more than 11,636 globally distributed EnMAP scenes spanning two years of archive. Additionally, 17.5% of these locations include multiple timestamps, enabling multi-temporal HSI analysis. Utilizing state-of-the-art self-supervised learning (SSL) algorithms, we pretrain a series of foundation models on SpectralEarth. We integrate a spectral adapter into classical vision backbones to accommodate the unique characteristics of HSI. In tandem, we construct four downstream datasets for land-cover and crop-type mapping, providing benchmarks for model evaluation. Experimental results support the versatility of our models, showcasing their generalizability across different tasks and sensors. We also highlight computational efficiency during model fine-tuning. The dataset, models, and source code will be made publicly available.

DeCUR: decoupling common & unique representations for multimodal self-supervision

Sep 15, 2023

Abstract: The increasing availability of multi-sensor data sparks interest in multimodal self-supervised learning. However, most existing approaches learn only common representations across modalities while ignoring intra-modal training and modality-unique representations. We propose Decoupling Common and Unique Representations (DeCUR), a simple yet effective method for multimodal self-supervised learning. By distinguishing inter- and intra-modal embeddings, DeCUR is trained to integrate complementary information across different modalities. We evaluate DeCUR in three common multimodal scenarios (radar-optical, RGB-elevation, and RGB-depth), and demonstrate its consistent benefits on scene classification and semantic segmentation downstream tasks. Notably, we get straightforward improvements by transferring our pretrained backbones to state-of-the-art supervised multimodal methods without any hyperparameter tuning. Furthermore, we conduct a comprehensive explainability analysis to shed light on the interpretation of common and unique features in our multimodal approach. Code is available at https://github.com/zhu-xlab/DeCUR.

Semi-Supervised Learning for hyperspectral images by non parametrically predicting view assignment

Jun 19, 2023

Abstract: Hyperspectral image (HSI) classification is gaining momentum because of the rich spectral information inherent in the images. However, these images suffer from the curse of dimensionality and usually require a large number of samples for tasks such as classification, especially in the supervised setting. Recently, to train deep learning models effectively with minimal labelled samples, unlabeled samples are also being leveraged in self-supervised and semi-supervised settings. In this work, we leverage the idea of semi-supervised learning to assist the discriminative self-supervised pretraining of the models. The proposed method takes different augmented views of the unlabeled samples as input and assigns them the same pseudo-label corresponding to the labelled sample from the downstream task. We train our model on two HSI datasets, namely the Houston dataset (from the 2013 Data Fusion Contest) and the Pavia University dataset, and show that the proposed approach performs better than both the self-supervised approach and supervised training.

SSL4EO-L: Datasets and Foundation Models for Landsat Imagery

Jun 15, 2023

Abstract: The Landsat program is the longest-running Earth observation program in history, with 50+ years of data acquisition by 8 satellites. The multispectral imagery captured by sensors onboard these satellites is critical for a wide range of scientific fields. Despite the increasing popularity of deep learning and remote sensing, the majority of researchers still use decision trees and random forests for Landsat image analysis due to the prevalence of small labeled datasets and lack of foundation models. In this paper, we introduce SSL4EO-L, the first ever dataset designed for Self-Supervised Learning for Earth Observation for the Landsat family of satellites (including 3 sensors and 2 product levels) and the largest Landsat dataset in history (5M image patches). Additionally, we modernize and re-release the L7 Irish and L8 Biome cloud detection datasets, and introduce the first ML benchmark datasets for Landsats 4-5 TM and Landsat 7 ETM+ SR. Finally, we pre-train the first foundation models for Landsat imagery using SSL4EO-L and evaluate their performance on multiple semantic segmentation tasks. All datasets and model weights are available via the TorchGeo (https://github.com/microsoft/torchgeo) library, making reproducibility and experimentation easy, and enabling scientific advancements in the burgeoning field of remote sensing for a myriad of downstream applications.

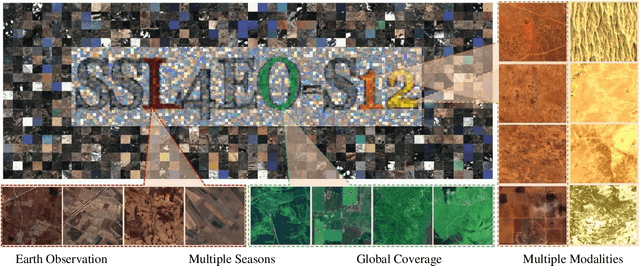

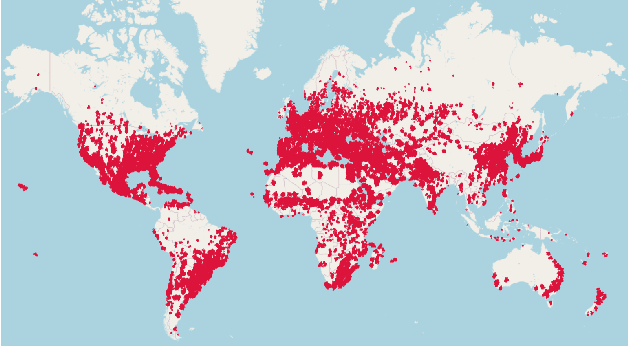

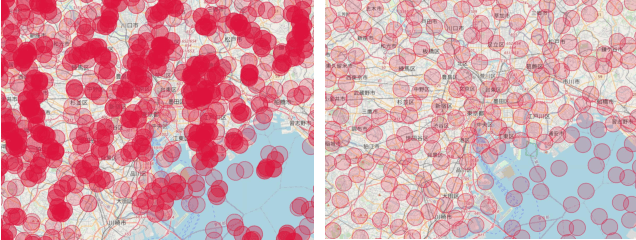

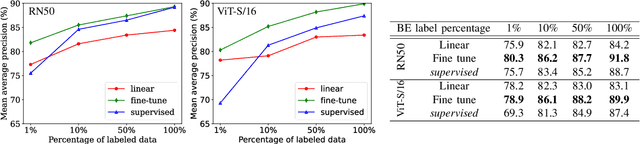

SSL4EO-S12: A Large-Scale Multi-Modal, Multi-Temporal Dataset for Self-Supervised Learning in Earth Observation

Nov 13, 2022

Abstract: Self-supervised pre-training bears potential to generate expressive representations without human annotation. Most pre-training in Earth observation (EO) is based on ImageNet or medium-size, labeled remote sensing (RS) datasets. We share SSL4EO-S12 (Self-Supervised Learning for Earth Observation - Sentinel-1/2), an unlabeled RS dataset that assembles a large-scale, global, multimodal, and multi-seasonal corpus of satellite imagery from the ESA Sentinel-1 & -2 satellite missions. For EO applications, we demonstrate that SSL4EO-S12 succeeds in self-supervised pre-training for a set of methods: MoCo-v2, DINO, MAE, and data2vec. The resulting models yield downstream performance close to, or surpassing, the accuracy of supervised learning. In addition, pre-training on SSL4EO-S12 excels compared to existing datasets. We make the dataset, related source code, and pre-trained models openly available at https://github.com/zhu-xlab/SSL4EO-S12.

Self-supervised Learning in Remote Sensing: A Review

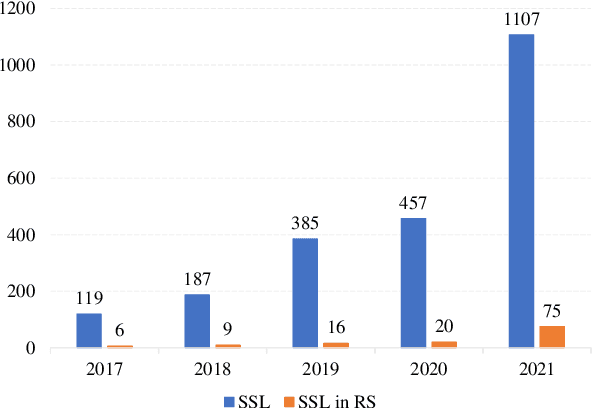

Jun 27, 2022

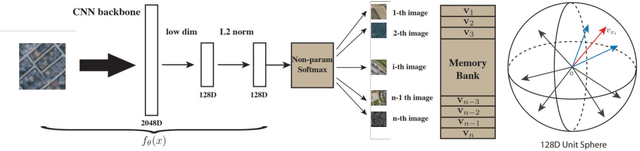

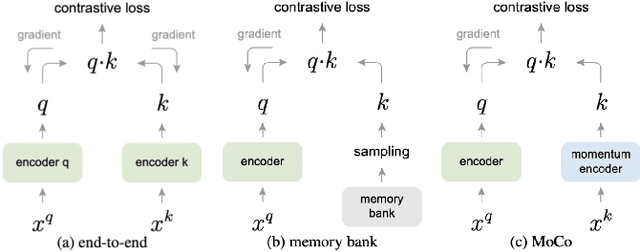

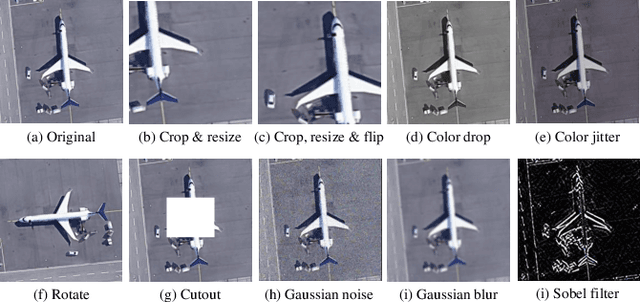

Abstract: In deep learning research, self-supervised learning (SSL) has received great attention triggering interest within both the computer vision and remote sensing communities. While there has been a big success in computer vision, most of the potential of SSL in the domain of earth observation remains locked. In this paper, we provide an introduction to, and a review of the concepts and latest developments in SSL for computer vision in the context of remote sensing. Further, we provide a preliminary benchmark of modern SSL algorithms on popular remote sensing datasets, verifying the potential of SSL in remote sensing and providing an extended study on data augmentations. Finally, we identify a list of promising directions of future research in SSL for earth observation (SSL4EO) to pave the way for fruitful interaction of both domains.
