Ziyan Zhang

ROG: Retrieval-Augmented LLM Reasoning for Complex First-Order Queries over Knowledge Graphs

Feb 02, 2026

Abstract: Answering first-order logic (FOL) queries over incomplete knowledge graphs (KGs) is difficult, especially for complex query structures that compose projection, intersection, union, and negation. We propose ROG, a retrieval-augmented framework that combines query-aware neighborhood retrieval with large language model (LLM) chain-of-thought reasoning. ROG decomposes a multi-operator query into a sequence of single-operator sub-queries and grounds each step in compact, query-relevant neighborhood evidence. Intermediate answer sets are cached and reused across steps, improving consistency on deep reasoning chains. This design reduces compounding errors and yields more robust inference on complex and negation-heavy queries. Overall, ROG provides a practical alternative to embedding-based logical reasoning by replacing learned operators with retrieval-grounded, step-wise inference. Experiments on standard KG reasoning benchmarks show consistent gains over strong embedding-based baselines, with the largest improvements on high-complexity and negation-heavy query types.
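The decomposition described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the toy triples, the relation names, the plan format, and the helpers `retrieve`, `answer_step`, and `run` are all hypothetical, and exact set operations stand in for the LLM reasoning step. It shows only the control flow: one operator per step, retrieval restricted to the relevant neighborhood, and intermediate answer sets cached for reuse.

```python
# Toy KG as (head, relation, tail) triples. All names are hypothetical.
TRIPLES = {
    ("acme", "employs", "alice"),
    ("acme", "employs", "bob"),
    ("globex", "employs", "carol"),
    ("paris", "has_resident", "alice"),
    ("paris", "has_resident", "carol"),
    ("berlin", "has_resident", "bob"),
}
ENTITIES = {e for h, _, t in TRIPLES for e in (h, t)}


def retrieve(sources, relation):
    """Return only the triples relevant to a single projection step."""
    return [(h, r, t) for (h, r, t) in TRIPLES if h in sources and r == relation]


def answer_step(op, args, cache):
    """Answer one single-operator sub-query.

    Assumption: exact set operations replace the LLM call that a
    retrieval-augmented system would make at this point.
    """
    if op == "project":
        src, relation = args
        # The source is either a cached intermediate set or an anchor entity.
        sources = cache[src] if src in cache else {src}
        return {t for _, _, t in retrieve(sources, relation)}
    inputs = [cache[name] for name in args]
    if op == "intersect":
        return set.intersection(*inputs)
    if op == "union":
        return set.union(*inputs)
    if op == "negate":
        return ENTITIES - inputs[0]
    raise ValueError(f"unknown operator: {op}")


def run(plan):
    """Execute the steps in order, caching every intermediate answer set."""
    cache = {}
    for name, op, args in plan:
        cache[name] = answer_step(op, args, cache)
    return cache


# "Who is employed by acme and does NOT live in paris?"
PLAN = [
    ("s1", "project", ("acme", "employs")),
    ("s2", "project", ("paris", "has_resident")),
    ("s3", "negate", ("s2",)),
    ("s4", "intersect", ("s1", "s3")),
]
result = run(PLAN)
print(result["s4"])
```

Because each step reads its inputs from the cache by name, a later step can reuse an earlier answer set without recomputing it.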

Robust Graph Fine-Tuning with Adversarial Graph Prompting

Jan 01, 2026

Abstract: Parameter-Efficient Fine-Tuning (PEFT) has emerged as a dominant paradigm for adapting pre-trained GNN models to downstream tasks. However, existing PEFT methods are usually highly vulnerable to various kinds of noise and attacks on graph topology and node attributes/features. To address this issue, for the first time, we propose integrating adversarial learning into graph prompting and develop a novel Adversarial Graph Prompting (AGP) framework to achieve robust graph fine-tuning. Our AGP has two key aspects. First, we formulate AGP as a general min-max optimization problem and develop an alternating optimization scheme to solve it. For inner maximization, we propose the Joint Projected Gradient Descent (JointPGD) algorithm to generate strong adversarial noise. For outer minimization, we employ a simple yet effective module to learn the optimal node prompts to counteract the adversarial noise. Second, we demonstrate theoretically that the proposed AGP can address both graph topology noise and node noise. This confirms the versatility and robustness of our AGP fine-tuning method across various types of graph noise. Note that the proposed AGP is a general method that can be integrated with various pre-trained GNN models to enhance their robustness on downstream tasks. Extensive experiments on multiple benchmark tasks validate the robustness and effectiveness of the AGP method compared to state-of-the-art methods.
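One plausible way to write the min-max problem sketched in this abstract is given below. The notation is assumed and does not come from the abstract: $f_\theta$ is the frozen pre-trained GNN, $A$ and $X$ are the adjacency and node feature matrices, $P$ is the learnable node prompt, $\delta_A$ and $\delta_X$ are perturbations restricted to budget sets $\Delta_A$ and $\Delta_X$, and $\mathcal{L}$ is the downstream loss. The second line is the generic projected gradient ascent update for an $\ell_\infty$-style budget, shown only as a reference point. The abstract does not specify the details of JointPGD.

```latex
% Assumed notation; a sketch of the general shape, not the paper's exact objective.
\min_{P} \; \max_{\delta_A \in \Delta_A,\; \delta_X \in \Delta_X}
  \mathcal{L}\bigl( f_\theta(A + \delta_A,\; X + \delta_X + P),\; y \bigr)

% Generic projected gradient ascent step for the inner problem
% (\Pi_{\Delta} projects back onto the budget set, \alpha is a step size):
\delta^{(t+1)} = \Pi_{\Delta}\Bigl( \delta^{(t)}
  + \alpha \, \operatorname{sign}\bigl( \nabla_{\delta} \mathcal{L} \bigr) \Bigr)
```

Alternating optimization would then interleave a few inner ascent steps on the perturbations with an outer descent step on $P$.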

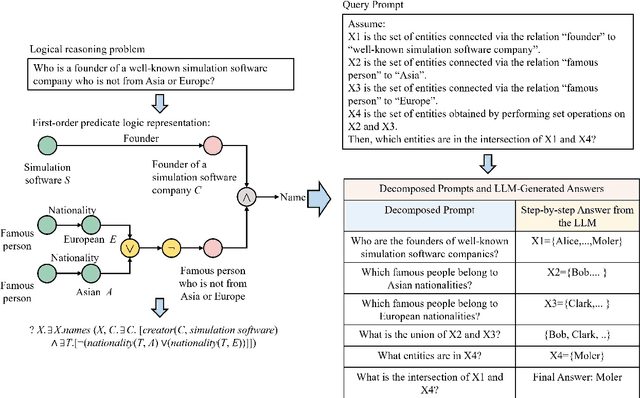

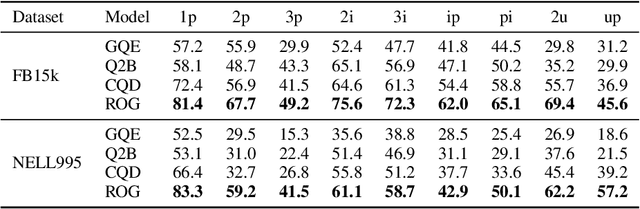

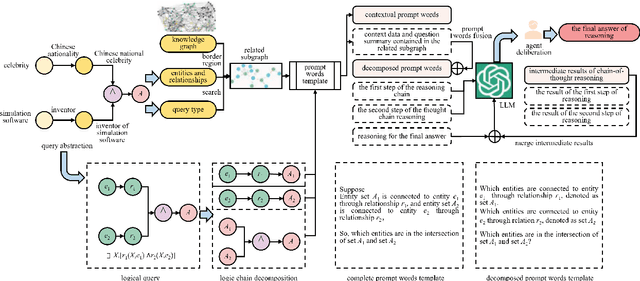

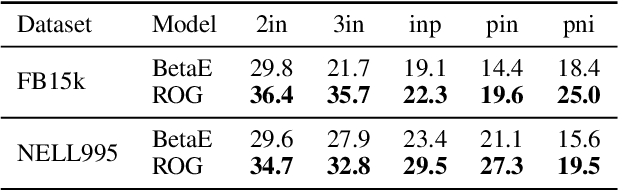

A Large Language Model Based Method for Complex Logical Reasoning over Knowledge Graphs

Dec 22, 2025

Abstract: Reasoning over knowledge graphs (KGs) with first-order logic (FOL) queries is challenging due to the inherent incompleteness of real-world KGs and the compositional complexity of logical query structures. Most existing methods rely on embedding entities and relations into continuous geometric spaces and answer queries via differentiable set operations. While effective for simple query patterns, these approaches often struggle to generalize to complex queries involving multiple operators, deeper reasoning chains, or heterogeneous KG schemas. We propose ROG (Reasoning Over knowledge Graphs with large language models), an ensemble-style framework that combines query-aware KG neighborhood retrieval with large language model (LLM)-based chain-of-thought reasoning. ROG decomposes complex FOL queries into sequences of simpler sub-queries, retrieves compact, query-relevant subgraphs as contextual evidence, and performs step-by-step logical inference using an LLM, avoiding the need for task-specific embedding optimization. Experiments on standard KG reasoning benchmarks demonstrate that ROG consistently outperforms strong embedding-based baselines in terms of mean reciprocal rank (MRR), with particularly notable gains on high-complexity query types. These results suggest that integrating structured KG retrieval with LLM-driven logical reasoning offers a robust and effective alternative for complex KG reasoning tasks.
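For readers unfamiliar with the metric named above, here is a minimal sketch of mean reciprocal rank. It uses the simple first-hit variant: each query contributes the reciprocal of the rank of the first correct answer, or 0 if none is ranked. The function name and the toy data are hypothetical, and KG query-answering benchmarks often use a filtered, per-answer variant that this sketch does not implement.

```python
def mean_reciprocal_rank(ranked_lists, gold_answers):
    """Average of 1/rank of the first correct candidate per query.

    ranked_lists: one ranked candidate list per query (best first).
    gold_answers: one set of correct answers per query.
    """
    total = 0.0
    for ranking, gold in zip(ranked_lists, gold_answers):
        reciprocal_rank = 0.0  # stays 0 when no correct answer is ranked
        for position, candidate in enumerate(ranking, start=1):
            if candidate in gold:
                reciprocal_rank = 1.0 / position
                break
        total += reciprocal_rank
    return total / len(ranked_lists)


rankings = [["b", "a", "c"], ["x", "y", "z"], ["q", "r"]]
gold = [{"a"}, {"x"}, {"missing"}]
mrr = mean_reciprocal_rank(rankings, gold)
print(mrr)  # (1/2 + 1/1 + 0) / 3
```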

Self-Correction Makes LLMs Better Parsers

Apr 19, 2025

Abstract: Large language models (LLMs) have achieved remarkable success across various natural language processing (NLP) tasks. However, recent studies suggest that they still face challenges in performing fundamental NLP tasks essential for deep language understanding, particularly syntactic parsing. In this paper, we conduct an in-depth analysis of LLM parsing capabilities, delving into the specific shortcomings of their parsing results. We find that these shortcomings may stem from LLMs' limited ability to fully leverage grammar rules in existing treebanks, which restricts their capability to generate valid syntactic structures. To help LLMs acquire knowledge without additional training, we propose a self-correction method that leverages grammar rules from existing treebanks to guide LLMs in correcting previous errors. Specifically, we automatically detect potential errors and dynamically search for relevant rules, offering hints and examples to guide LLMs in making corrections themselves. Experimental results on three datasets with various LLMs demonstrate that our method significantly improves performance in both in-domain and cross-domain settings on the English and Chinese datasets.

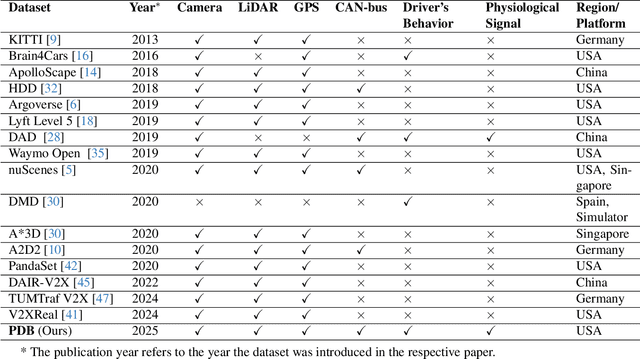

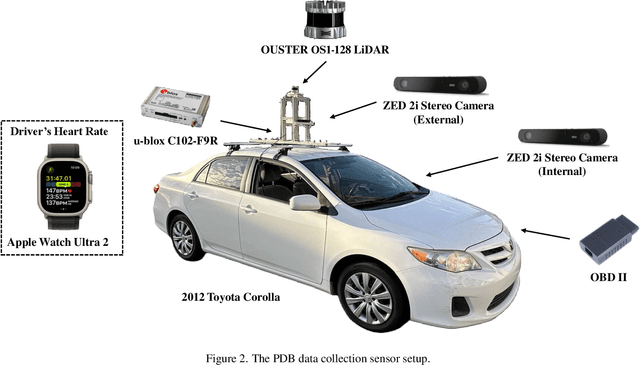

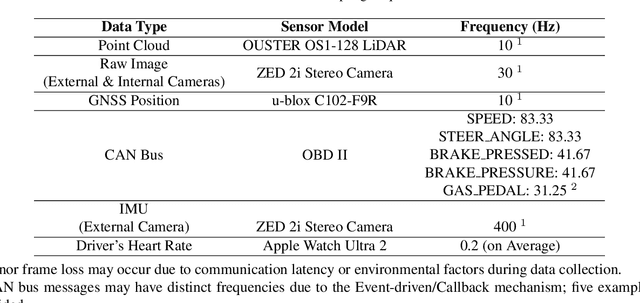

PDB: Not All Drivers Are the Same -- A Personalized Dataset for Understanding Driving Behavior

Mar 09, 2025

Abstract: Driving behavior is inherently personal, influenced by individual habits, decision-making styles, and physiological states. However, most existing datasets treat all drivers as homogeneous, overlooking driver-specific variability. To address this gap, we introduce the Personalized Driving Behavior (PDB) dataset, a multi-modal dataset designed to capture personalization in driving behavior under naturalistic driving conditions. Unlike conventional datasets, PDB minimizes external influences by maintaining consistent routes, vehicles, and lighting conditions across sessions. It includes data from a 128-line LiDAR, front-facing camera video, GNSS, a 9-axis IMU, the CAN bus (throttle, brake, steering angle), and driver-specific signals such as facial video and heart rate. The dataset features 12 participants, approximately 270,000 LiDAR frames, 1.6 million images, and 6.6 TB of raw sensor data. The processed trajectory dataset consists of 1,669 segments, each spanning 10 seconds with a 0.2-second interval. By explicitly capturing drivers' behavior, PDB serves as a unique resource for human factor analysis, driver identification, and personalized mobility applications, contributing to the development of human-centric intelligent transportation systems.

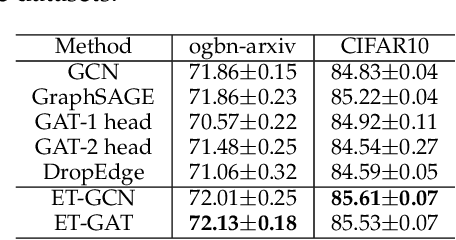

Graph Edge Representation via Tensor Product Graph Convolutional Representation

Jun 21, 2024

Abstract: Graph Convolutional Networks (GCNs) have been widely studied. The core of GCNs is the definition of convolution operators on graphs. However, existing Graph Convolution (GC) operators are mainly defined on the adjacency matrix and node features and generally focus on obtaining effective node embeddings, so they cannot handle graphs with (high-dimensional) edge features. To address this problem, by leveraging tensor contraction representation and tensor product graph diffusion theories, this paper analogously defines an effective convolution operator on graphs with edge features, named Tensor Product Graph Convolution (TPGC). The proposed TPGC aims to obtain effective edge embeddings. It provides a complementary model to traditional graph convolutions (GCs) for more general graph data analysis with both node and edge features. Experimental results on several graph learning tasks demonstrate the effectiveness of the proposed TPGC.

A Unified Graph Selective Prompt Learning for Graph Neural Networks

Jun 15, 2024

Abstract: In recent years, graph prompt learning/tuning has garnered increasing attention in adapting pre-trained models for graph representation learning. As a kind of universal graph prompt learning method, Graph Prompt Feature (GPF) has achieved remarkable success in adapting pre-trained models for Graph Neural Networks (GNNs). By fixing the parameters of a pre-trained GNN model, GPF aims to modify the input graph data by adding (learnable) prompt vectors to the graph node features so that they better align with downstream tasks on smaller datasets. However, existing GPFs generally suffer from two main limitations. First, GPFs generally focus on node prompt learning and ignore prompting for graph edges. Second, existing GPFs generally conduct prompt learning on all nodes equally, which fails to capture the importance of different nodes and may make them sensitive to noisy nodes when aligning with the downstream tasks. To address these issues, in this paper, we propose a new unified Graph Selective Prompt Feature learning (GSPF) method for GNN fine-tuning. The proposed GSPF integrates prompt learning on both graph nodes and edges, and thus provides a unified prompt model for graph data. Moreover, it conducts prompt learning selectively by concentrating on the important nodes and edges, which makes the model more reliable and compact. Experimental results on many benchmark datasets demonstrate the effectiveness and advantages of the proposed GSPF method.
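The core idea of feature prompting, adding a prompt vector to node features while the pre-trained model stays frozen, can be sketched as follows. This is an illustration under assumptions, not the GPF or GSPF implementation: the function `add_node_prompt` and the per-node `weights` are hypothetical, the weights are a hand-set stand-in for a learned selection mechanism, and edge prompts are omitted.

```python
def add_node_prompt(features, prompt, weights=None):
    """Return prompted features x_i' = x_i + w_i * p for every node i.

    features: list of per-node feature vectors.
    prompt:   a single prompt vector p shared by all nodes.
    weights:  optional per-node weights w_i; None means uniform prompting
              (w_i = 1 for all nodes). Assumption: in a selective scheme
              these would be learned rather than hand-set.
    """
    if weights is None:
        weights = [1.0] * len(features)
    return [
        [x + w * p for x, p in zip(row, prompt)]
        for row, w in zip(features, weights)
    ]


features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
prompt = [0.5, -1.0]

uniform = add_node_prompt(features, prompt)
selective = add_node_prompt(features, prompt, weights=[1.0, 0.0, 1.0])
print(uniform)
print(selective)
```

With uniform weights every node receives the same shift. With a zero weight the second node is left untouched, which is one simple way to picture prompting that concentrates on selected nodes.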

Power Transformer Fault Prediction Based on Knowledge Graphs

Feb 11, 2024

Abstract: In this paper, we address the challenge of learning with limited fault data for power transformers. Traditional operation and maintenance tools lack effective predictive capabilities for potential faults. The scarcity of extensive fault data makes it difficult to apply machine learning techniques effectively. To solve this problem, we propose a novel approach that leverages knowledge graph (KG) technology in combination with gradient boosting decision trees (GBDT). This method is designed to efficiently learn from a small set of high-dimensional data, integrating various factors influencing transformer faults and historical operational data. Our approach enables accurate safe-state assessments and fault analyses of power transformers despite the limited fault characteristic data. Experimental results demonstrate that this method outperforms other learning approaches, such as artificial neural networks (ANN) and logistic regression (LR), in prediction accuracy. Furthermore, it offers significant improvements in progressiveness, practicality, and potential for widespread application.

Unifying Graph Contrastive Learning via Graph Message Augmentation

Jan 08, 2024

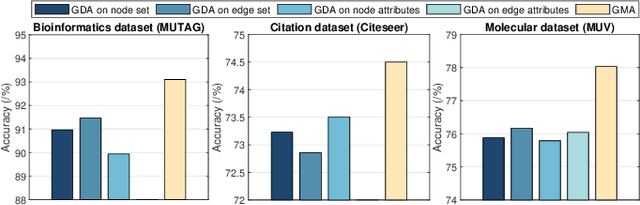

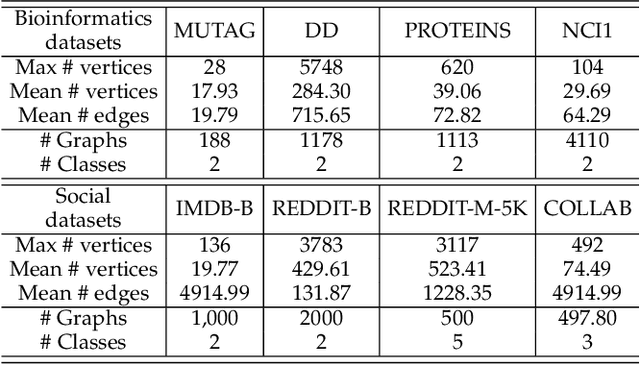

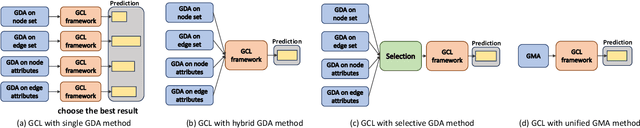

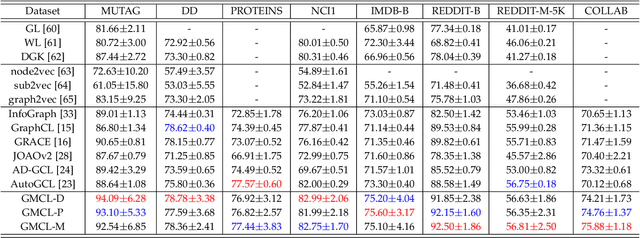

Abstract: Graph contrastive learning is usually performed by first conducting Graph Data Augmentation (GDA) and then employing a contrastive learning pipeline to train GNNs. GDA is known to be an important issue for graph contrastive learning. Various GDAs have been developed recently, which mainly involve dropping or perturbing edges, nodes, node attributes and edge attributes. However, to our knowledge, there is still no universal and effective augmentor that is suitable for different types of graph data. To address this issue, in this paper, we first introduce the graph message representation of graph data. Based on it, we then propose a novel Graph Message Augmentation (GMA), a universal scheme for reformulating many existing GDAs. The proposed unified GMA not only gives a new perspective for understanding many existing GDAs but also provides a universal and more effective graph data augmentation for graph self-supervised learning tasks. Moreover, GMA introduces an easy way to implement the mixup augmentor, which is natural for images but usually challenging for graphs. Based on the proposed GMA, we then propose a unified graph contrastive learning method, termed Graph Message Contrastive Learning (GMCL), that employs attribution-guided universal GMA for graph contrastive learning. Experiments on many graph learning tasks demonstrate the effectiveness and benefits of the proposed GMA and GMCL approaches.

VcT: Visual change Transformer for Remote Sensing Image Change Detection

Oct 17, 2023

Abstract: Existing visual change detectors usually adopt CNNs or Transformers for feature representation learning and focus on learning effective representations for the changed regions between images. Although good performance can be obtained by enhancing the features of the changed regions, these works are still limited, mainly because they neglect to mine the unchanged background context information. It is known that one main challenge for change detection is how to obtain consistent representations for two images involving different variations, such as spatial variation, sunlight intensity, etc. In this work, we demonstrate that carefully mining the common background information provides an important cue for learning consistent representations for the two images, which clearly facilitates visual change detection. Based on this observation, we propose a novel Visual change Transformer (VcT) model for the visual change detection problem. To be specific, a shared backbone network is first used to extract the feature maps for the given image pair. Then, each pixel of the feature map is regarded as a graph node and a graph neural network is used to model the structured information for coarse change map prediction. Top-K reliable tokens can be mined from the map and refined using a clustering algorithm. Then, these reliable tokens are enhanced by first utilizing self/cross-attention schemes and then interacting with the original features via an anchor-primary attention learning module. Finally, a prediction head is used to obtain a more accurate change map. Extensive experiments on multiple benchmark datasets validate the effectiveness of our proposed VcT model.
