Beibei Wang

Recovering 3D Shapes from Ultra-Fast Motion-Blurred Images

Feb 08, 2026

Abstract:We consider the problem of 3D shape recovery from ultra-fast motion-blurred images. While 3D reconstruction from static images has been extensively studied, recovering geometry from extreme motion-blurred images remains challenging. Such scenarios frequently occur in both natural and industrial settings, such as fast-moving objects in sports (e.g., balls) or rotating machinery, where rapid motion distorts object appearance and makes traditional 3D reconstruction techniques like Multi-View Stereo (MVS) ineffective. In this paper, we propose a novel inverse rendering approach for shape recovery from ultra-fast motion-blurred images. While conventional rendering techniques typically synthesize blur by averaging across multiple frames, we identify a major computational bottleneck in the repeated computation of barycentric weights. To address this, we propose a fast barycentric coordinate solver, which significantly reduces computational overhead and achieves a speedup of up to 4.57x, enabling efficient and photorealistic simulation of high-speed motion. Crucially, our method is fully differentiable, allowing gradients to propagate from rendered images to the underlying 3D shape, thereby facilitating shape recovery through inverse rendering. We validate our approach on two representative motion types: rapid translation and rotation. Experimental results demonstrate that our method enables efficient and realistic modeling of ultra-fast moving objects in the forward simulation. Moreover, it successfully recovers 3D shapes from 2D imagery of objects undergoing extreme translational and rotational motion, advancing the boundaries of vision-based 3D reconstruction. Project page: https://maxmilite.github.io/rec-from-ultrafast-blur/
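To make the bottleneck named in the abstract concrete, the sketch below shows the conventional baseline only: blur is approximated by averaging a pixel's coverage over several time samples, and the barycentric weights are recomputed from scratch at every sample. It is not the paper's fast solver, which the abstract does not specify. The function names, the 2D setting, and the constant-velocity translation are all illustrative assumptions.

```python
def barycentric_weights(p, a, b, c):
    """Barycentric weights (w_a, w_b, w_c) of 2D point p in triangle (a, b, c)."""
    # Twice the signed area of the triangle; assumed non-zero (non-degenerate).
    area = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])
    w_b = ((p[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (p[1] - a[1])) / area
    w_c = ((b[0] - a[0]) * (p[1] - a[1]) - (p[0] - a[0]) * (b[1] - a[1])) / area
    return 1.0 - w_b - w_c, w_b, w_c


def blurred_coverage(pixel, triangle, velocity, num_samples):
    """Fraction of the exposure [0, 1] during which `pixel` lies inside a
    triangle translating at constant `velocity` (hypothetical motion model)."""
    hits = 0
    for k in range(num_samples):
        t = (k + 0.5) / num_samples  # midpoint of the k-th time slice
        moved = [(x + velocity[0] * t, y + velocity[1] * t) for x, y in triangle]
        # This per-sample recomputation is the repeated work the abstract refers to.
        weights = barycentric_weights(pixel, *moved)
        if all(w >= 0.0 for w in weights):
            hits += 1
    return hits / num_samples


triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
print(blurred_coverage((0.3, 0.2), triangle, (2.0, 0.0), 4))  # prints 0.25
```

With four time samples and a velocity of two units per exposure, the pixel is covered only in the first sample, so its averaged coverage is 0.25; a static triangle would give 1.0.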

Language as Prior, Vision as Calibration: Metric Scale Recovery for Monocular Depth Estimation

Jan 07, 2026

Abstract:Relative-depth foundation models transfer well, yet monocular metric depth remains ill-posed due to unidentifiable global scale and heightened domain-shift sensitivity. Under a frozen-backbone calibration setting, we recover metric depth via an image-specific affine transform in inverse depth and train only lightweight calibration heads while keeping the relative-depth backbone and the CLIP text encoder fixed. Since captions provide coarse but noisy scale cues that vary with phrasing and missing objects, we use language to predict an uncertainty-aware envelope that bounds feasible calibration parameters in an unconstrained space, rather than committing to a text-only point estimate. We then use pooled multi-scale frozen visual features to select an image-specific calibration within this envelope. During training, a closed-form least-squares oracle in inverse depth provides per-image supervision for learning the envelope and the selected calibration. Experiments on NYUv2 and KITTI improve in-domain accuracy, while zero-shot transfer to SUN-RGBD and DDAD demonstrates improved robustness over strong language-only baselines.
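The closed-form least-squares oracle mentioned above can be illustrated with ordinary linear regression in inverse depth. The sketch below is a minimal version under assumptions the abstract does not confirm: flat lists of valid pixels, no masking or robust weighting, and hypothetical function names. It fits a scale and shift so that `scale * rel_inv_depth + shift` approximates `1 / metric_depth`.

```python
def fit_affine_inverse_depth(rel_inv_depth, metric_depth):
    """Closed-form least-squares (scale, shift) in inverse depth.

    Minimizes sum_i (scale * r_i + shift - 1 / d_i)^2 over valid pixels.
    Assumes r is not constant and all metric depths are positive.
    """
    targets = [1.0 / d for d in metric_depth]
    n = len(rel_inv_depth)
    mean_r = sum(rel_inv_depth) / n
    mean_y = sum(targets) / n
    var_r = sum((r - mean_r) ** 2 for r in rel_inv_depth)
    cov_ry = sum((r - mean_r) * (y - mean_y)
                 for r, y in zip(rel_inv_depth, targets))
    scale = cov_ry / var_r
    shift = mean_y - scale * mean_r
    return scale, shift


def apply_calibration(rel_inv_depth, scale, shift):
    """Map relative inverse depth to metric depth with the fitted transform."""
    return [1.0 / (scale * r + shift) for r in rel_inv_depth]


# Toy data generated with scale 0.5 and shift 0.05 (illustrative values).
rel = [0.1, 0.5, 0.9]
metric = [1.0 / (0.5 * r + 0.05) for r in rel]
scale, shift = fit_affine_inverse_depth(rel, metric)
print(round(scale, 6), round(shift, 6))
```

In this reading, the oracle's per-image `(scale, shift)` would serve as the training target for the calibration heads; how the envelope is parameterized is not stated in the abstract and is not modeled here.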

When Prompting Meets Spiking: Graph Sparse Prompting via Spiking Graph Prompt Learning

Jan 06, 2026

Abstract:Graph Prompt Feature (GPF) learning has been widely used to adapt pre-trained GNN models to downstream tasks. GPFs first introduce a set of prompt atoms and then learn the optimal prompt vector for each graph node as a linear combination of these atoms. However, existing GPFs generally apply prompting over all of a node's feature dimensions, which is redundant and also sensitive to node feature noise. To overcome this issue, this paper proposes, for the first time, learning sparse graph prompts by leveraging the spiking neuron mechanism, termed Spiking Graph Prompt Feature (SpikingGPF). Our approach is motivated by the observation that spiking neurons can perform inexpensive information processing and produce sparse outputs, which naturally fits the task of sparse graph prompting. Specifically, SpikingGPF has two main aspects. First, it learns a sparse prompt vector for each node by exploiting a spiking neuron architecture, enabling prompting on selective node features. This yields a more compact and lightweight prompting design while also improving robustness against node noise. Second, SpikingGPF introduces a novel prompt representation learning model based on sparse representation theory, i.e., it represents each node prompt as a sparse combination of prompt atoms. This encourages a more compact representation and also facilitates efficient computation. Extensive experiments on several benchmarks demonstrate the effectiveness and robustness of SpikingGPF.

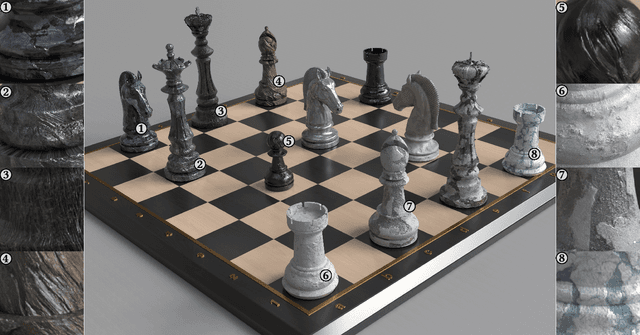

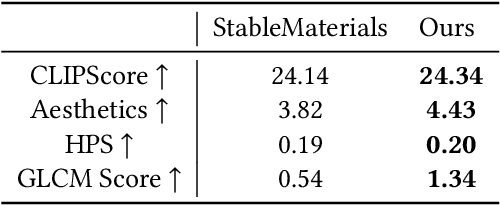

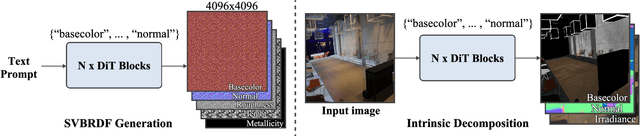

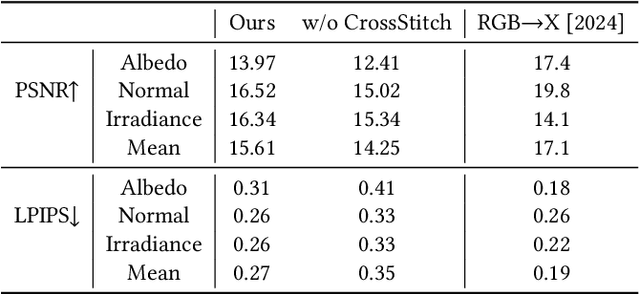

HiMat: DiT-based Ultra-High Resolution SVBRDF Generation

Aug 12, 2025

Abstract:Creating highly detailed SVBRDFs is essential for 3D content creation. The rise of high-resolution text-to-image generative models, based on diffusion transformers (DiT), suggests an opportunity to finetune them for this task. However, retargeting the models to produce multiple aligned SVBRDF maps instead of just RGB images, while achieving high efficiency and ensuring consistency across different maps, remains a challenge. In this paper, we introduce HiMat: a memory- and computation-efficient diffusion-based framework capable of generating native 4K-resolution SVBRDFs. A key challenge we address is maintaining consistency across different maps in a lightweight manner, without relying on training new VAEs or significantly altering the DiT backbone (which would damage its prior capabilities). To tackle this, we introduce the CrossStitch module, a lightweight convolutional module that captures inter-map dependencies through localized operations. Its weights are initialized such that the DiT backbone operation is unchanged before finetuning starts. HiMat enables generation with strong structural coherence and high-frequency details. Results with a large set of text prompts demonstrate the effectiveness of our approach for 4K SVBRDF generation. Further experiments suggest generalization to tasks such as intrinsic decomposition.

WeatherDiffusion: Weather-Guided Diffusion Model for Forward and Inverse Rendering

Aug 09, 2025

Abstract:Forward and inverse rendering have emerged as key techniques for enabling understanding and reconstruction in the context of autonomous driving (AD). However, complex weather and illumination pose great challenges to this task. The emergence of large diffusion models has shown promise in achieving reasonable results through learning from 2D priors, but these models are difficult to control and lack robustness. In this paper, we introduce WeatherDiffusion, a diffusion-based framework for forward and inverse rendering on AD scenes with various weather and lighting conditions. Our method enables authentic estimation of material properties, scene geometry, and lighting, and further supports controllable weather and illumination editing through the use of predicted intrinsic maps guided by text descriptions. We observe that different intrinsic maps should correspond to different regions of the original image. Based on this observation, we propose Intrinsic map-aware attention (MAA) to enable high-quality inverse rendering. Additionally, we introduce a synthetic dataset (i.e., WeatherSynthetic) and a real-world dataset (i.e., WeatherReal) for forward and inverse rendering on AD scenes with diverse weather and lighting. Extensive experiments show that our WeatherDiffusion outperforms state-of-the-art methods on several benchmarks. Moreover, our method demonstrates significant value in downstream tasks for AD, enhancing the robustness of object detection and image segmentation in challenging weather scenarios.

Experience Paper: Scaling WiFi Sensing to Millions of Commodity Devices for Ubiquitous Home Monitoring

Jun 04, 2025

Abstract:WiFi-based home monitoring has emerged as a compelling alternative to traditional camera- and sensor-based solutions, offering wide coverage with minimal intrusion by leveraging existing wireless infrastructure. This paper presents key insights and lessons learned from developing and deploying a large-scale WiFi sensing solution, currently operational across over 10 million commodity off-the-shelf routers and 100 million smart bulbs worldwide. Through this extensive deployment, we identify four real-world challenges that hinder the practical adoption of prior research: 1) Non-human movements (e.g., pets) frequently trigger false positives; 2) Low-cost WiFi chipsets and heterogeneous hardware introduce inconsistencies in channel state information (CSI) measurements; 3) Motion interference in multi-user environments complicates occupant differentiation; 4) Computational constraints on edge devices and limited cloud transmission impede real-time processing. To address these challenges, we present a practical and scalable system, validated through comprehensive two-year evaluations involving 280 edge devices, across 16 scenarios, and over 4 million motion samples. Our solutions achieve an accuracy of 92.61% in diverse real-world homes while reducing false alarms due to non-human movements from 63.1% to 8.4% and lowering CSI transmission overhead by 99.72%. Notably, our system integrates sensing and communication, supporting simultaneous WiFi sensing and data transmission over home WiFi networks. While focused on home monitoring, our findings and strategies generalize to various WiFi sensing applications. By bridging the gaps between theoretical research and commercial deployment, this work offers practical insights for scaling WiFi sensing in real-world environments.

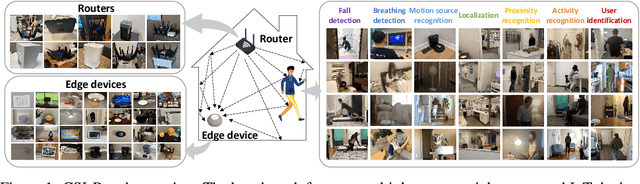

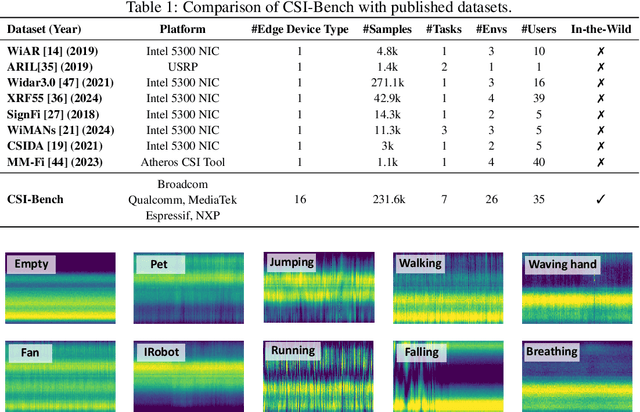

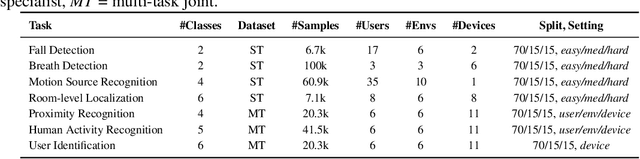

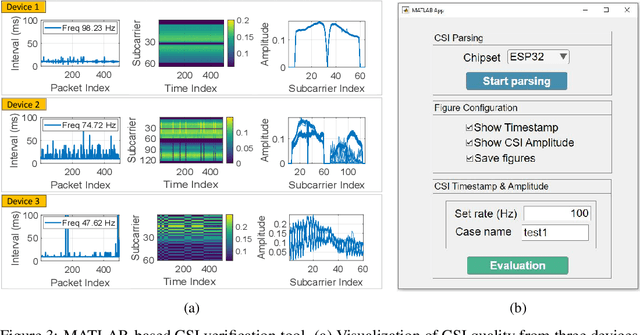

CSI-Bench: A Large-Scale In-the-Wild Dataset for Multi-task WiFi Sensing

May 28, 2025

Abstract:WiFi sensing has emerged as a compelling contactless modality for human activity monitoring by capturing fine-grained variations in Channel State Information (CSI). Its ability to operate continuously and non-intrusively while preserving user privacy makes it particularly suitable for health monitoring. However, existing WiFi sensing systems struggle to generalize in real-world settings, largely due to datasets collected in controlled environments with homogeneous hardware and fragmented, session-based recordings that fail to reflect continuous daily activity. We present CSI-Bench, a large-scale, in-the-wild benchmark dataset collected using commercial WiFi edge devices across 26 diverse indoor environments with 35 real users. Spanning over 461 hours of effective data, CSI-Bench captures realistic signal variability under natural conditions. It includes task-specific datasets for fall detection, breathing monitoring, localization, and motion source recognition, as well as a co-labeled multitask dataset with joint annotations for user identity, activity, and proximity. To support the development of robust and generalizable models, CSI-Bench provides standardized evaluation splits and baseline results for both single-task and multi-task learning. CSI-Bench offers a foundation for scalable, privacy-preserving WiFi sensing systems in health and broader human-centric applications.

DeepCPD: Deep Learning Based In-Car Child Presence Detection Using WiFi

May 13, 2025

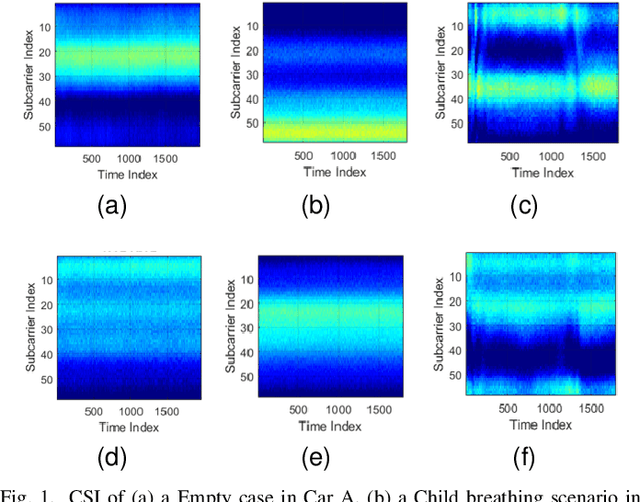

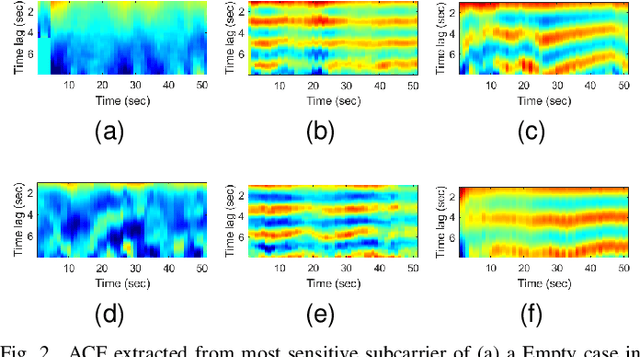

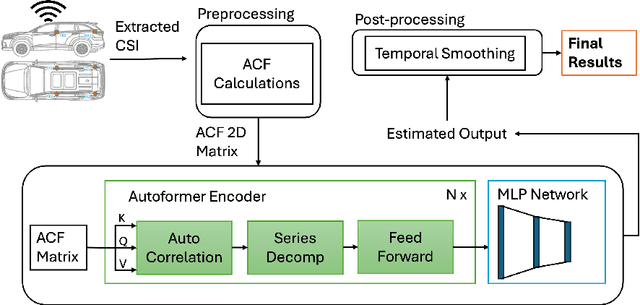

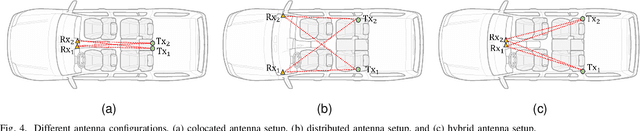

Abstract:Child presence detection (CPD) is a vital technology for vehicles to prevent heat-related fatalities or injuries by detecting the presence of a child left unattended. Regulatory agencies around the world are planning to mandate CPD systems in the near future. However, existing solutions have limitations in terms of accuracy, coverage, and additional device requirements. While WiFi-based solutions can overcome these limitations, existing approaches struggle to reliably distinguish between adult and child presence, leading to frequent false alarms, and are often sensitive to environmental variations. In this paper, we present DeepCPD, a novel deep learning framework designed for accurate child presence detection in smart vehicles. DeepCPD utilizes an environment-independent feature, the auto-correlation function (ACF) derived from WiFi channel state information (CSI), to capture human-related signatures while mitigating environmental distortions. A Transformer-based architecture, followed by a multilayer perceptron (MLP), is employed to differentiate adults from children by modeling motion patterns and subtle body size differences. To address the limited availability of in-vehicle child and adult data, we introduce a two-stage learning strategy that significantly enhances model generalization. Extensive experiments conducted across more than 25 car models and over 500 hours of data collection demonstrate that DeepCPD achieves an overall accuracy of 92.86%, substantially outperforming a CNN baseline (79.55%). Additionally, the model attains a 91.45% detection rate for children while maintaining a low false alarm rate of 6.14%.
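For readers unfamiliar with the ACF, the sketch below computes a normalized sample auto-correlation of a scalar time series. It is a generic textbook estimator, not DeepCPD's feature pipeline: the abstract does not say which CSI quantity is used or how subcarriers are combined, so the input here is a hypothetical one-dimensional stand-in (for example, the power of a single subcarrier over time).

```python
def autocorrelation(signal, max_lag):
    """Normalized sample ACF for lags 0..max_lag.

    acf[lag] = sum_t (x_t - mean)(x_{t+lag} - mean) / sum_t (x_t - mean)^2
    Assumes the signal is not constant (non-zero energy).
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    energy = sum(v * v for v in centered)
    acf = []
    for lag in range(max_lag + 1):
        s = sum(centered[t] * centered[t + lag] for t in range(n - lag))
        acf.append(s / energy)
    return acf


# Hypothetical periodic motion signature with a period of 4 samples.
series = [1.0, 0.0, -1.0, 0.0] * 8
acf = autocorrelation(series, 4)
print(acf[2], acf[4])  # prints -0.9375 0.875
```

Because the estimator subtracts the mean and divides by the signal energy, adding a constant offset or multiplying by a constant gain leaves the result unchanged. That kind of invariance is one plausible reason a normalized ACF is less sensitive to static environmental factors, although the abstract does not spell out the argument.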

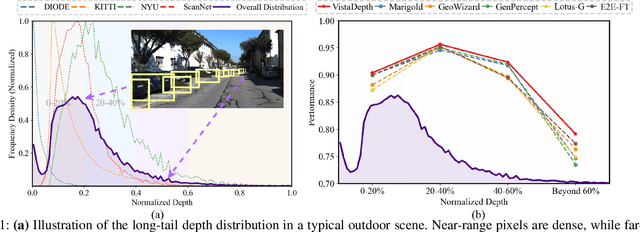

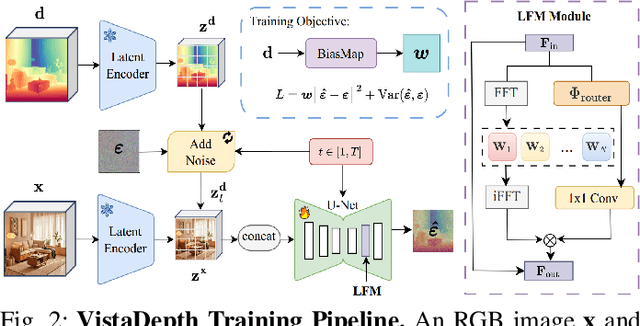

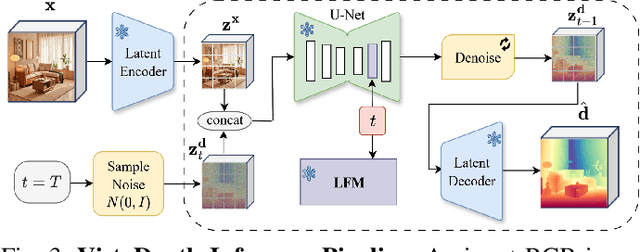

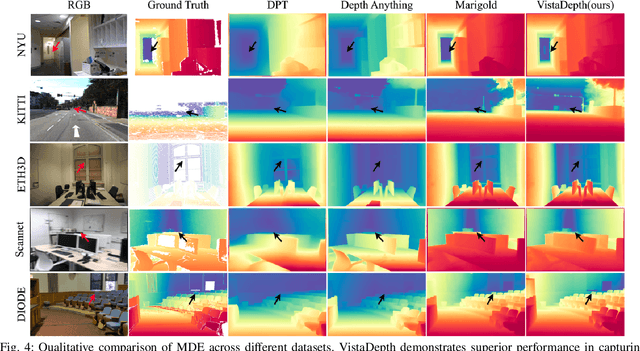

VistaDepth: Frequency Modulation With Bias Reweighting For Enhanced Long-Range Depth Estimation

Apr 22, 2025

Abstract:Monocular depth estimation (MDE) aims to predict per-pixel depth values from a single RGB image. Recent advancements have positioned diffusion models as effective MDE tools by framing the challenge as a conditional image generation task. Despite their progress, these methods often struggle to reconstruct distant depths accurately, largely because of the imbalanced distribution of depth values and an over-reliance on spatial-domain features. To overcome these limitations, we introduce VistaDepth, a novel framework that integrates adaptive frequency-domain feature enhancements with an adaptive weight-balancing mechanism into the diffusion process. Central to our approach is the Latent Frequency Modulation (LFM) module, which dynamically refines spectral responses in the latent feature space, thereby improving the preservation of structural details and reducing noisy artifacts. Furthermore, we implement an adaptive weighting strategy that modulates the diffusion loss in real time, enhancing the model's sensitivity towards distant depth reconstruction. These innovations collectively result in superior depth perception performance across both distance and detail. Experimental evaluations confirm that VistaDepth achieves state-of-the-art performance among diffusion-based MDE techniques, particularly excelling in the accurate reconstruction of distant regions.

SVG-IR: Spatially-Varying Gaussian Splatting for Inverse Rendering

Apr 09, 2025

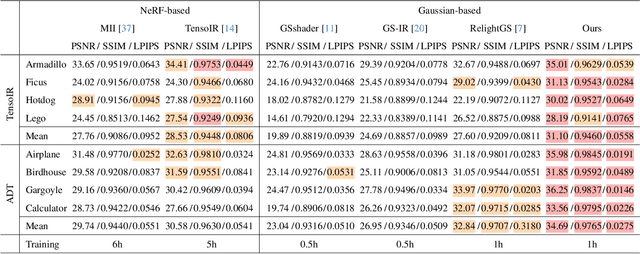

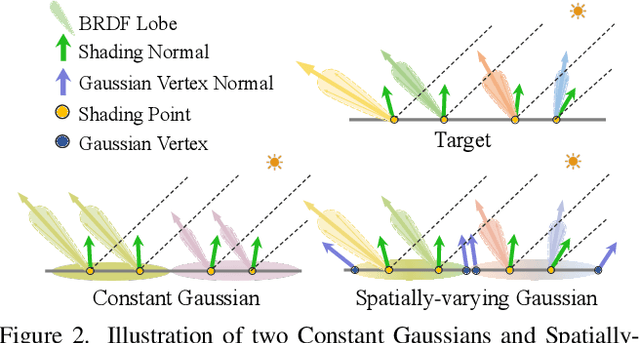

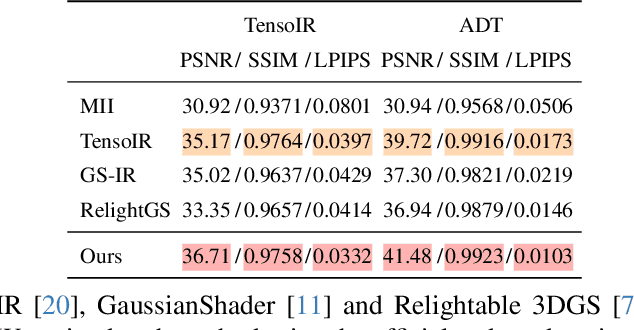

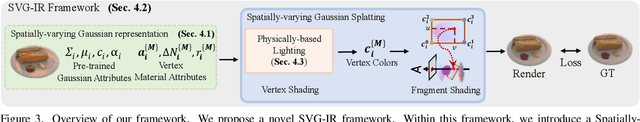

Abstract:Reconstructing 3D assets from images, known as inverse rendering (IR), remains a challenging task due to its ill-posed nature. 3D Gaussian Splatting (3DGS) has demonstrated impressive capabilities for novel view synthesis (NVS) tasks. Existing methods apply it to relighting by separating radiance into BRDF parameters and lighting, yet produce inferior relighting quality with artifacts and unnatural indirect illumination. This is due to the limited capability of each Gaussian, which has constant material parameters and normal, alongside the absence of physical constraints for indirect lighting. In this paper, we present a novel framework called Spatially-varying Gaussian Inverse Rendering (SVG-IR), aimed at enhancing both NVS and relighting quality. To this end, we propose a new representation, Spatially-varying Gaussian (SVG), that allows per-Gaussian spatially varying parameters. This enhanced representation is complemented by an SVG splatting scheme akin to vertex/fragment shading in traditional graphics pipelines. Furthermore, we integrate a physically-based indirect lighting model, enabling more realistic relighting. The proposed SVG-IR framework significantly improves rendering quality, outperforming state-of-the-art NeRF-based methods by 2.5 dB in peak signal-to-noise ratio (PSNR) and surpassing existing Gaussian-based techniques by 3.5 dB in relighting tasks, all while maintaining a real-time rendering speed.
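To put the reported dB gains in perspective, the sketch below computes PSNR from its standard definition and converts a dB improvement into the equivalent reduction in mean squared error. It is a generic illustration with hypothetical function names; it does not reproduce the paper's evaluation protocol, and the pixel values are made up.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((r - x) ** 2 for r, x in zip(reference, rendered)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain in dB."""
    return 10.0 ** (gain_db / 10.0)


# Illustrative values only: a uniform error of 0.1 on a [0, 1] image.
print(round(psnr([0.0] * 4, [0.1] * 4), 3))
print(round(mse_ratio_for_gain(2.5), 3), round(mse_ratio_for_gain(3.5), 3))
```

Since PSNR is logarithmic in the error, a 2.5 dB gain corresponds to roughly 1.78 times lower MSE and a 3.5 dB gain to roughly 2.24 times lower MSE, assuming the same peak value in both measurements.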
