Chuheng Wei

PDB: Not All Drivers Are the Same -- A Personalized Dataset for Understanding Driving Behavior

Mar 09, 2025

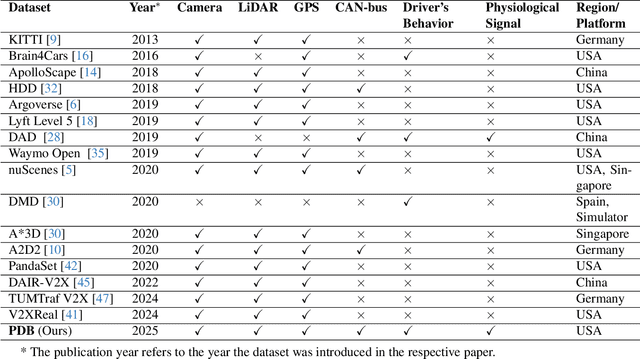

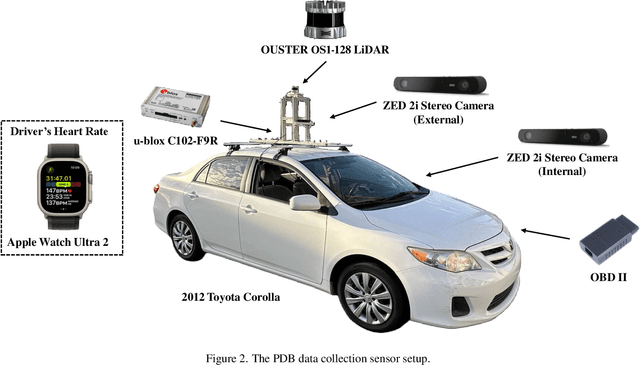

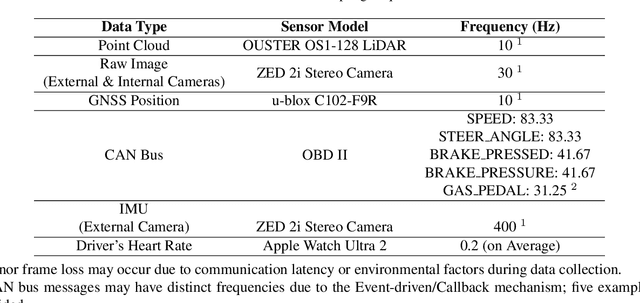

Abstract: Driving behavior is inherently personal, influenced by individual habits, decision-making styles, and physiological states. However, most existing datasets treat all drivers as homogeneous, overlooking driver-specific variability. To address this gap, we introduce the Personalized Driving Behavior (PDB) dataset, a multi-modal dataset designed to capture personalization in driving behavior under naturalistic driving conditions. Unlike conventional datasets, PDB minimizes external influences by maintaining consistent routes, vehicles, and lighting conditions across sessions. It includes data from a 128-line LiDAR, front-facing camera video, GNSS, a 9-axis IMU, the CAN bus (throttle, brake, steering angle), and driver-specific signals such as facial video and heart rate. The dataset features 12 participants, approximately 270,000 LiDAR frames, 1.6 million images, and 6.6 TB of raw sensor data. The processed trajectory dataset consists of 1,669 segments, each spanning 10 seconds with a 0.2-second interval. By explicitly capturing drivers' behavior, PDB serves as a unique resource for human factor analysis, driver identification, and personalized mobility applications, contributing to the development of human-centric intelligent transportation systems.
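As a rough illustration of the segment format described above, the following sketch cuts a stream of trajectory samples into 10-second segments at a 0.2-second interval. The function name `split_into_segments`, the non-overlapping windows, and the choice of 50 samples per segment (rather than 51 with both endpoints included) are assumptions for illustration; the abstract does not say how the PDB segments were actually extracted.

```python
def split_into_segments(samples, segment_s=10.0, interval_s=0.2):
    """Split a list of samples into fixed-length, non-overlapping segments.

    Assumes samples are evenly spaced at `interval_s` seconds.
    Trailing samples that do not fill a whole segment are dropped.
    """
    steps = round(segment_s / interval_s)  # 50 samples for 10 s at 0.2 s
    last_start = len(samples) - steps
    return [samples[i:i + steps] for i in range(0, last_start + 1, steps)]


# Hypothetical input: 120 evenly spaced samples (24 seconds of driving).
samples = list(range(120))
segments = split_into_segments(samples)
print(len(segments), len(segments[0]))
```

With 120 samples, this yields two full segments and discards the remaining 20 samples.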

KI-GAN: Knowledge-Informed Generative Adversarial Networks for Enhanced Multi-Vehicle Trajectory Forecasting at Signalized Intersections

Apr 19, 2024

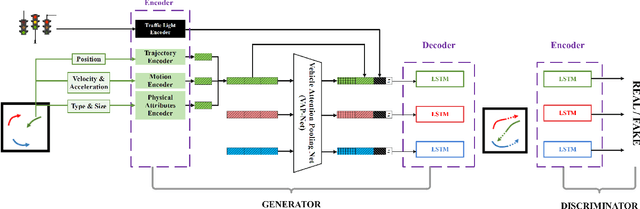

Abstract: Reliable prediction of vehicle trajectories at signalized intersections is crucial to urban traffic management and autonomous driving systems. However, it presents unique challenges, due to the complex roadway layout at intersections, involvement of traffic signal controls, and interactions among different types of road users. To address these issues, we present in this paper a novel model called Knowledge-Informed Generative Adversarial Network (KI-GAN), which integrates both traffic signal information and multi-vehicle interactions to predict vehicle trajectories accurately. Additionally, we propose a specialized attention pooling method that accounts for vehicle orientation and proximity at intersections. Based on the SinD dataset, our KI-GAN model is able to achieve an Average Displacement Error (ADE) of 0.05 and a Final Displacement Error (FDE) of 0.12 for a 6-second observation and 6-second prediction cycle. When the prediction window is extended to 9 seconds, the ADE and FDE values are 0.11 and 0.26, respectively. These results demonstrate the effectiveness of the proposed KI-GAN model in vehicle trajectory prediction under complex scenarios at signalized intersections, which represents a significant advancement in the target field.
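For readers unfamiliar with the two metrics, here is a minimal sketch of how ADE and FDE are commonly computed for a single predicted trajectory: ADE averages the Euclidean distance between predicted and ground-truth positions over all time steps, and FDE takes the distance at the final step only. The function name `displacement_errors` and the sample points are illustrative assumptions, not taken from the KI-GAN code or the SinD dataset.

```python
import math


def displacement_errors(predicted, actual):
    """Return (ADE, FDE) for one trajectory given as lists of (x, y) points."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("trajectories must be non-empty and equally long")
    # Euclidean distance between prediction and ground truth at each step.
    dists = [math.dist(p, a) for p, a in zip(predicted, actual)]
    ade = sum(dists) / len(dists)  # mean error over all steps
    fde = dists[-1]                # error at the final step
    return ade, fde


# Made-up points: the prediction drifts away from the ground truth.
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
actual = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
ade, fde = displacement_errors(predicted, actual)
print(ade, fde)
```

In multi-vehicle evaluation, these per-trajectory values are typically averaged over all vehicles and scenes.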

Feature Corrective Transfer Learning: End-to-End Solutions to Object Detection in Non-Ideal Visual Conditions

Apr 19, 2024

Abstract: A significant challenge in the field of object detection lies in the system's performance under non-ideal imaging conditions, such as rain, fog, low illumination, or raw Bayer images that lack ISP processing. Our study introduces "Feature Corrective Transfer Learning", a novel approach that leverages transfer learning and a bespoke loss function to facilitate the end-to-end detection of objects in these challenging scenarios without the need to convert non-ideal images into their RGB counterparts. In our methodology, we initially train a comprehensive model on a pristine RGB image dataset. Subsequently, non-ideal images are processed by comparing their feature maps against those from the initial ideal RGB model. This comparison employs the Extended Area Novel Structural Discrepancy Loss (EANSDL), a novel loss function designed to quantify similarities and integrate them into the detection loss. This approach refines the model's ability to perform object detection across varying conditions through direct feature map correction, encapsulating the essence of Feature Corrective Transfer Learning. Experimental validation on variants of the KITTI dataset demonstrates a significant improvement in mean Average Precision (mAP), with a 3.8-8.1% relative improvement in detection under non-ideal conditions compared to the baseline model, and performance within 1.3% of the mAP@[0.5:0.95] achieved under ideal conditions by the standard Faster RCNN algorithm.
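The general idea of adding a feature-map comparison term to a detection loss can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not define EANSDL, so a plain mean squared difference stands in for it here, and the names `feature_discrepancy`, `corrective_loss`, and the `weight` parameter are hypothetical.

```python
def feature_discrepancy(ideal_map, nonideal_map):
    """Mean squared difference between two equally shaped 2-D feature maps.

    Stand-in for EANSDL, whose definition is not given in the abstract.
    """
    total, count = 0.0, 0
    for ideal_row, nonideal_row in zip(ideal_map, nonideal_map):
        for ideal_value, nonideal_value in zip(ideal_row, nonideal_row):
            total += (ideal_value - nonideal_value) ** 2
            count += 1
    return total / count


def corrective_loss(detection_loss, ideal_map, nonideal_map, weight=1.0):
    """Detection loss plus a weighted feature-map discrepancy term."""
    return detection_loss + weight * feature_discrepancy(ideal_map, nonideal_map)


# Made-up 2x2 feature maps: one from the ideal-RGB model, one from the
# model being trained on non-ideal images.
ideal = [[1.0, 2.0], [3.0, 4.0]]
nonideal = [[1.0, 2.0], [3.0, 6.0]]
total_loss = corrective_loss(0.5, ideal, nonideal, weight=0.1)
print(total_loss)
```

In a real training loop the maps would be tensors from matching layers of the two networks, and the combined loss would be backpropagated through the non-ideal model only.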
