Zheng Zhou

Local-Global Feature Fusion for Subject-Independent EEG Emotion Recognition

Jan 13, 2026

Abstract:Subject-independent EEG emotion recognition is challenged by pronounced inter-subject variability and the difficulty of learning robust representations from short, noisy recordings. To address this, we propose a fusion framework that integrates (i) local, channel-wise descriptors and (ii) global, trial-level descriptors, improving cross-subject generalization on the SEED-VII dataset. Local representations are formed per channel by concatenating differential entropy with graph-theoretic features, while global representations summarize time-domain, spectral, and complexity characteristics at the trial level. These representations are fused in a dual-branch transformer with attention-based fusion and domain-adversarial regularization, with samples filtered by an intensity threshold. Experiments under a leave-one-subject-out protocol demonstrate that the proposed method consistently outperforms single-view and classical baselines, achieving approximately 40% mean accuracy in 7-class subject-independent emotion recognition. The code has been released at https://github.com/Danielz-z/LGF-EEG-Emotion.
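
The local descriptor described above can be illustrated with a minimal sketch. It assumes the closed form of differential entropy for a Gaussian signal, 0.5 * ln(2 * pi * e * variance), which is common in EEG work; the abstract does not state which estimator the authors use. The function names `differential_entropy` and `local_descriptor` are hypothetical, and the graph-theoretic features are taken as precomputed inputs.

```python
import math


def differential_entropy(samples):
    """Differential entropy of a 1-D signal, ASSUMING it is Gaussian.

    Uses the closed form 0.5 * ln(2 * pi * e * variance). This estimator is
    an assumption for illustration, not confirmed by the abstract.
    """
    n = len(samples)
    mean = sum(samples) / n
    # Population variance of the (band-filtered) channel signal.
    variance = sum((x - mean) ** 2 for x in samples) / n
    return 0.5 * math.log(2 * math.pi * math.e * variance)


def local_descriptor(de_per_band, graph_features):
    """Concatenate per-band entropy values with graph features for one channel.

    Both arguments are hypothetical, precomputed lists of floats.
    """
    return list(de_per_band) + list(graph_features)


# Example: a unit-variance toy signal and a made-up graph feature.
de = differential_entropy([1.0, -1.0, 1.0, -1.0])
descriptor = local_descriptor([de], [3.0])
```

In practice the entropy would be computed once per frequency band and channel, so each channel's descriptor has one entropy value per band followed by its graph features.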

MindWatcher: Toward Smarter Multimodal Tool-Integrated Reasoning

Dec 29, 2025

Abstract:Traditional workflow-based agents exhibit limited intelligence when addressing real-world problems requiring tool invocation. Tool-integrated reasoning (TIR) agents capable of autonomous reasoning and tool invocation are rapidly emerging as a powerful approach for complex decision-making tasks involving multi-step interactions with external environments. In this work, we introduce MindWatcher, a TIR agent integrating interleaved thinking and multimodal chain-of-thought (CoT) reasoning. MindWatcher can autonomously decide whether and how to invoke diverse tools and coordinate their use, without relying on human prompts or workflows. The interleaved thinking paradigm enables the model to switch between thinking and tool calling at any intermediate stage, while its multimodal CoT capability allows manipulation of images during reasoning to yield more precise search results. We implement automated data auditing and evaluation pipelines, complemented by manually curated high-quality datasets for training, and we construct a benchmark, called MindWatcher-Evaluate Bench (MWE-Bench), to evaluate its performance. MindWatcher is equipped with a comprehensive suite of auxiliary reasoning tools, enabling it to address broad-domain multimodal problems. A large-scale, high-quality local image retrieval database, covering eight categories including cars, animals, and plants, endows the model with robust object recognition despite its small size. Finally, we design a more efficient training infrastructure for MindWatcher, enhancing training speed and hardware utilization. Experiments not only demonstrate that MindWatcher matches or exceeds the performance of larger or more recent models through superior tool invocation, but also uncover critical insights for agent training, such as the genetic inheritance phenomenon in agentic RL.

Real, Fake, or Manipulated? Detecting Machine-Influenced Text

Sep 18, 2025

Abstract:Large Language Models (LLMs) can be used to write or modify documents, presenting a challenge for understanding the intent behind their use. For example, benign uses may involve applying an LLM to a human-written document to improve its grammar or to translate it into another language. However, a document produced entirely by an LLM may be more likely to spread misinformation than a simple translation (e.g., through use by malicious actors or simply through hallucination). Prior works in Machine Generated Text (MGT) detection mostly focus on simply identifying whether a document was human or machine written, ignoring these fine-grained uses. In this paper, we introduce a HiErarchical, length-RObust machine-influenced text detector (HERO), which learns to separate text samples of varying lengths from four primary types: human-written, machine-generated, machine-polished, and machine-translated. HERO accomplishes this by combining predictions from length-specialist models that have been trained with Subcategory Guidance. Specifically, for categories that are easily confused (e.g., different source languages), our Subcategory Guidance module encourages separation of the fine-grained categories, boosting performance. Extensive experiments across five LLMs and six domains demonstrate the benefits of HERO, which outperforms the state-of-the-art by 2.5-3 mAP on average.

DiffHash: Text-Guided Targeted Attack via Diffusion Models against Deep Hashing Image Retrieval

Sep 16, 2025

Abstract:Deep hashing models have been widely adopted to tackle the challenges of large-scale image retrieval. However, these approaches face serious security risks due to their vulnerability to adversarial examples. Despite the increasing exploration of targeted attacks on deep hashing models, existing approaches still suffer from a lack of multimodal guidance, reliance on labeling information, and dependence on pixel-level operations for attacks. To address these limitations, we propose DiffHash, a novel diffusion-based targeted attack for deep hashing. Unlike traditional pixel-based attacks that directly modify specific pixels and lack multimodal guidance, our approach focuses on optimizing the latent representations of images, guided by text information generated by a Large Language Model (LLM) for the target image. Furthermore, we design a multi-space hash alignment network to align the high-dimensional image space and text space to the low-dimensional binary hash space. During reconstruction, we also incorporate text-guided attention mechanisms to refine adversarial examples, ensuring that they align with the target semantics while maintaining visual plausibility. Extensive experiments demonstrate that our method outperforms state-of-the-art (SOTA) targeted attack methods, achieving better black-box transferability and greater stability across datasets.

Wavelet Mixture of Experts for Time Series Forecasting

Aug 12, 2025

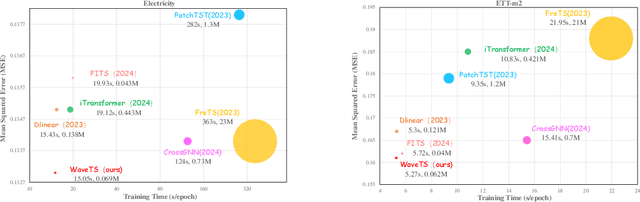

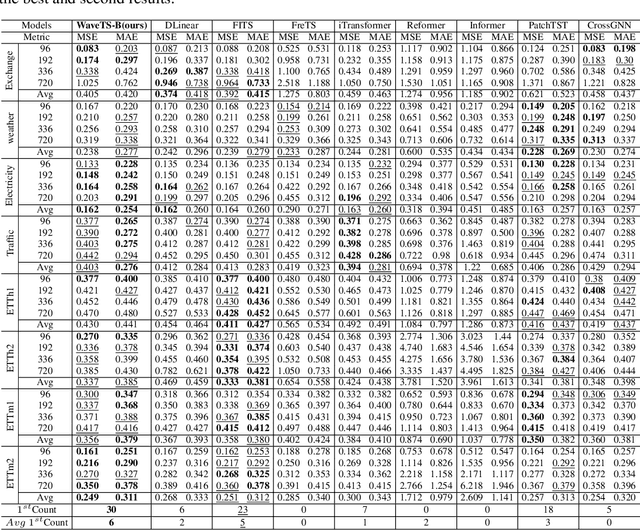

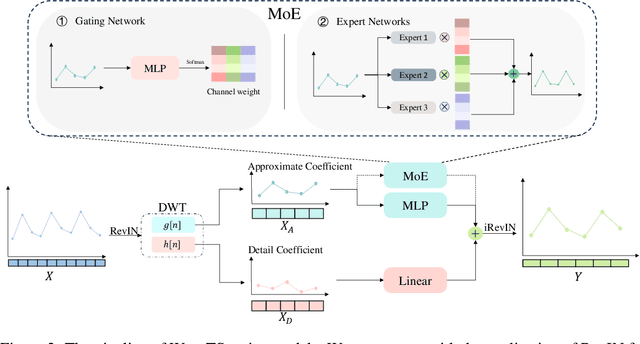

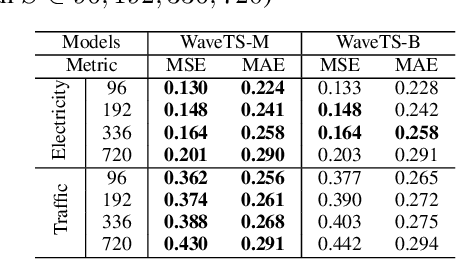

Abstract:The field of time series forecasting is rapidly advancing, with recent large-scale Transformers and lightweight Multilayer Perceptron (MLP) models showing strong predictive performance. However, conventional Transformer models are often hindered by their large number of parameters and their limited ability to capture non-stationary features in data through smoothing. Similarly, MLP models struggle to manage multi-channel dependencies effectively. To address these limitations, we propose a novel, lightweight time series prediction model, WaveTS-B. This model combines wavelet transforms with MLP to capture both periodic and non-stationary characteristics of data in the wavelet domain. Building on this foundation, we propose a channel clustering strategy that incorporates a Mixture of Experts (MoE) framework, utilizing a gating mechanism and expert network to handle multi-channel dependencies efficiently. We propose WaveTS-M, an advanced model tailored for multi-channel time series prediction. Empirical evaluation across eight real-world time series datasets demonstrates that our WaveTS series models achieve state-of-the-art (SOTA) performance with significantly fewer parameters. Notably, WaveTS-M shows substantial improvements on multi-channel datasets, highlighting its effectiveness.
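
To make the idea of working in the wavelet domain concrete, here is a minimal sketch of a single-level discrete wavelet transform. It assumes the Haar wavelet purely for illustration; the abstract does not say which wavelet family or how many decomposition levels WaveTS-B uses. The function names `haar_dwt` and `haar_idwt` are hypothetical.

```python
import math


def haar_dwt(signal):
    """One level of the orthonormal Haar wavelet transform (illustrative choice).

    Returns (approx, detail): the approximation coefficients capture the
    smooth trend, the detail coefficients capture local changes.
    """
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Invert haar_dwt, recovering the original samples."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / s)
        out.append((a - d) / s)
    return out


# Example: decompose a short series, then reconstruct it.
approx, detail = haar_dwt([4.0, 2.0, 5.0, 5.0])
restored = haar_idwt(approx, detail)
```

A forecasting model of the kind described would apply its MLP layers to coefficients like `approx` and `detail` and map the predictions back with the inverse transform; that wiring is not shown here because the abstract does not specify it.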

CausalDiffTab: Mixed-Type Causal-Aware Diffusion for Tabular Data Generation

Jun 17, 2025

Abstract:Training data has been proven to be one of the most critical components in training generative AI. However, obtaining high-quality data remains challenging, with data privacy issues presenting a significant hurdle. To address the need for high-quality data, synthetic data has emerged as a mainstream solution, demonstrating impressive performance in areas such as images, audio, and video. Generating mixed-type data, especially high-quality tabular data, still faces significant challenges. These primarily include its inherently heterogeneous data types, complex inter-variable relationships, and intricate column-wise distributions. In this paper, we introduce CausalDiffTab, a diffusion-based generative model specifically designed to handle mixed tabular data containing both numerical and categorical features, while being more flexible in capturing complex interactions among variables. We further propose a hybrid adaptive causal regularization method based on the principle of Hierarchical Prior Fusion. This approach adaptively controls the weight of causal regularization, enhancing the model's performance without compromising its generative capabilities. Comprehensive experiments conducted on seven datasets demonstrate that CausalDiffTab outperforms baseline methods across all metrics. Our code is publicly available at: https://github.com/Godz-z/CausalDiffTab.

GaussianCAD: Robust Self-Supervised CAD Reconstruction from Three Orthographic Views Using 3D Gaussian Splatting

Mar 07, 2025

Abstract:The automatic reconstruction of 3D computer-aided design (CAD) models from CAD sketches has recently gained significant attention in the computer vision community. Most existing methods, however, rely on vector CAD sketches and 3D ground truth for supervision, which are often difficult to obtain in industrial applications, and are sensitive to noisy inputs. We propose viewing CAD reconstruction as a specific instance of sparse-view 3D reconstruction to overcome these limitations. While this reformulation offers a promising perspective, existing 3D reconstruction methods typically require natural images and corresponding camera poses as inputs, which introduces two major challenges: (1) modality discrepancy between CAD sketches and natural images, and (2) difficulty of accurate camera pose estimation for CAD sketches. To solve these issues, we first transform the CAD sketches into representations resembling natural images and extract corresponding masks. Next, we manually calculate the camera poses for the orthographic views to ensure accurate alignment within the 3D coordinate system. Finally, we employ a customized sparse-view 3D reconstruction method to achieve high-quality reconstructions from aligned orthographic views. By leveraging raster CAD sketches for self-supervision, our approach eliminates the reliance on vector CAD sketches and 3D ground truth. Experiments on the Sub-Fusion360 dataset demonstrate that our proposed method significantly outperforms previous approaches in CAD reconstruction performance and exhibits strong robustness to noisy inputs.

Iterative Tree Analysis for Medical Critics

Jan 18, 2025

Abstract:Large Language Models (LLMs) have been widely adopted across various domains, yet their application in the medical field poses unique challenges, particularly concerning the generation of hallucinations. Hallucinations in open-ended long medical text manifest as misleading critical claims, which are difficult to verify for two reasons. First, critical claims are often deeply entangled within the text and cannot be extracted based solely on surface-level presentation. Second, verifying these claims is challenging because surface-level token-based retrieval often lacks precise or specific evidence, leaving the claims unverifiable without deeper mechanism-based analysis. In this paper, we introduce a novel method termed Iterative Tree Analysis (ITA) for medical critics. ITA is designed to extract implicit claims from long medical texts and verify each claim through an iterative and adaptive tree-like reasoning process. This process involves a combination of top-down task decomposition and bottom-up evidence consolidation, enabling precise verification of complex medical claims through detailed mechanism-level reasoning. Our extensive experiments demonstrate that ITA significantly outperforms previous methods in detecting factual inaccuracies in complex medical text verification tasks by 10%. Additionally, we will release a comprehensive test set to the public, aiming to foster further advancements in research within this domain.

Joint-Optimized Unsupervised Adversarial Domain Adaptation in Remote Sensing Segmentation with Prompted Foundation Model

Nov 18, 2024

Abstract:Unsupervised Domain Adaptation for Remote Sensing Semantic Segmentation (UDA-RSSeg) addresses the challenge of adapting a model trained on source domain data to target domain samples, thereby minimizing the need for annotated data across diverse remote sensing scenes. This task presents two principal challenges: (1) severe inconsistencies in feature representation across different remote sensing domains, and (2) a domain gap that emerges due to the representation bias of source domain patterns when translating features to predictive logits. To tackle these issues, we propose a joint-optimized adversarial network incorporating the Segment Anything Model (SAM), termed SAM-JOANet, for UDA-RSSeg. Our approach integrates SAM to leverage its robust generalized representation capabilities, thereby alleviating feature inconsistencies. We introduce a finetuning decoder designed to convert SAM-Encoder features into predictive logits. Additionally, a feature-level adversarial-based prompted segmentor is employed to generate class-agnostic maps, which guide the finetuning decoder's feature representations. The network is optimized end-to-end, combining the prompted segmentor and the finetuning decoder. Extensive evaluations on benchmark datasets, including ISPRS (Potsdam/Vaihingen) and CITY-OSM (Paris/Chicago), demonstrate the effectiveness of our method. The results, supported by visualization and analysis, confirm the method's interpretability and robustness. The code of this paper is available at https://github.com/CV-ShuchangLyu/SAM-JOANet.

BEARD: Benchmarking the Adversarial Robustness for Dataset Distillation

Nov 14, 2024

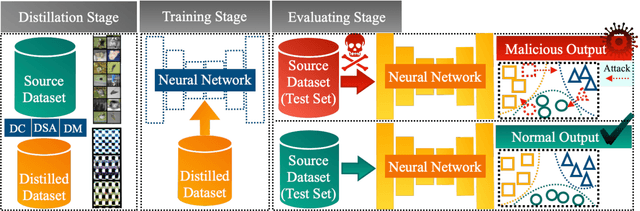

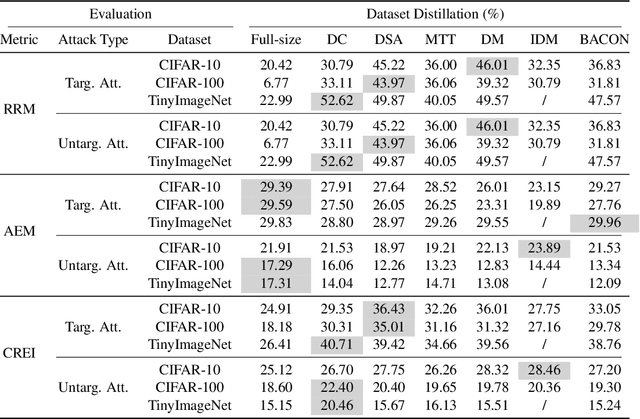

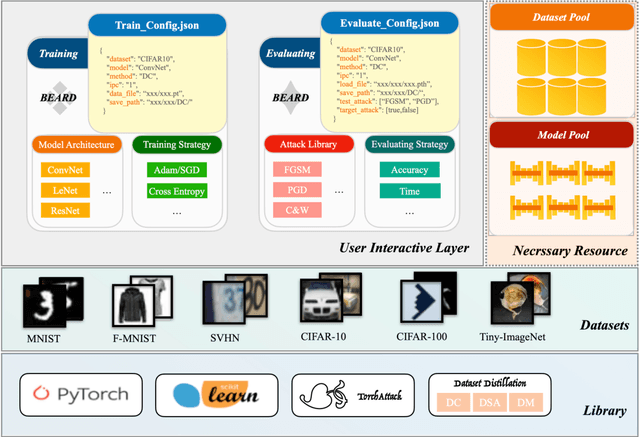

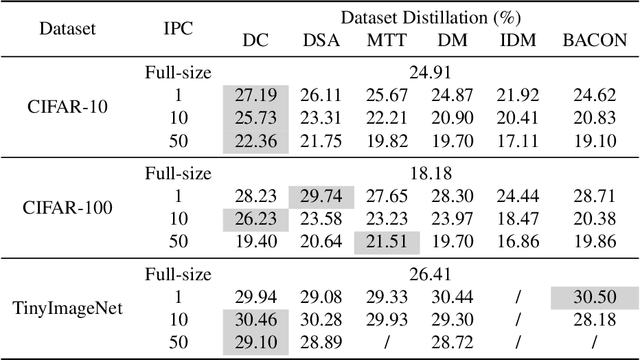

Abstract:Dataset Distillation (DD) is an emerging technique that compresses large-scale datasets into significantly smaller synthesized datasets while preserving high test performance and enabling the efficient training of large models. However, current research primarily focuses on enhancing evaluation accuracy under limited compression ratios, often overlooking critical security concerns such as adversarial robustness. A key challenge in evaluating this robustness lies in the complex interactions between distillation methods, model architectures, and adversarial attack strategies, which complicate standardized assessments. To address this, we introduce BEARD, an open and unified benchmark designed to systematically assess the adversarial robustness of DD methods, including DM, IDM, and BACON. BEARD encompasses a variety of adversarial attacks (e.g., FGSM, PGD, C&W) on distilled datasets like CIFAR-10/100 and TinyImageNet. Utilizing an adversarial game framework, it introduces three key metrics: Robustness Ratio (RR), Attack Efficiency Ratio (AE), and Comprehensive Robustness-Efficiency Index (CREI). Our analysis includes unified benchmarks, various Images Per Class (IPC) settings, and the effects of adversarial training. Results are available on the BEARD Leaderboard, along with a library providing model and dataset pools to support reproducible research. Access the code at BEARD.
